Abstract

We explore whether, and to what extent, the presence of high-stakes admission exams to selective schools affects student achievement, presumably through more intensive study effort. Our identification strategy exploits a quasi-experimental feature of a reform in Slovakia that shifted the school grade in which the high-stakes exams are taken by 1 year. This reform enables us to compare students at the moment when they take these exams with students in the same grade 1 year before the exams. Using data from the low-stakes international TIMSS skills survey and a difference-in-differences methodology, we find that the occurrence of high-stakes admission exams increased 10-year-old students' math test scores by 0.2 standard deviations, on average. The effect is around 0.05 standard deviations larger among students with the highest probability of being admitted to selective schools. Although we find similar effects for both genders, there are indications that high-stakes exams in more competitive environments affect girls more than boys.

Notes

Jürges et al. (2012) demonstrate that central exit exams have a positive effect only on exam-specific knowledge and no effect on knowledge not included in the tested fields. Jacob (2005) reports a similar result. When test results are relevant for teachers and school administrators, it seems that teachers predominantly teach the curriculum that is tested.

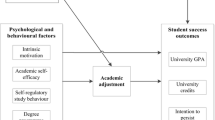

Based on empirical evidence from an ad hoc survey in the Czech Republic (Federičová and Münich 2014), supported by rich anecdotal evidence, we assume that students are not very forward-looking and prepare mainly for exams they perceive as imminent. In particular, we assume that the preparation period for high-stakes admission exams does not exceed 1 year.

Until 1993, the Czech Republic and Slovakia had the same educational system.

Currently, the education ministries in both countries are considering reducing enrolment in academic lower secondary schools in order to limit the adverse impact of this transition (brain drain effect) on mainstream schools.

The Czech Longitudinal Study in Education (CLoSE) follows the cohort of students in the Czech Republic who were tested in their fourth grade by TIMSS and PIRLS in 2011. The data include detailed information about the students' intentions of applying to academic lower secondary schools, their preparation for the admission exams, and their results in 2012. For a further description of the CLoSE survey, see Greger and Straková (2013).

Putwain et al. (2016) obtain similar results by examining teachers' fear appeals before high-stakes testing: appeals that students appraise as challenging have a positive effect on student engagement, whereas appeals appraised as threatening have a negative effect.

In particular, more than 90% of students in the CLoSE survey perceive admission to selective schools to be a gateway to university education.

On the impact of family background on children's academic outcomes, see Cunha and Heckman (2007), Feinstein (2003), and Anger and Heineck (2010). On the impact of parents' attitudes on the development of children's attitudes and on the formation of their goals and motives, see Grolnick and Ryan (1989) and Friedel et al. (2007).

In Slovak/Czech, Basic School is called základná škola/základní škola, and academic school is called osemročné gymnázium/osmileté gymnázium.

Using a natural experiment in Northern Ireland, Guyon et al. (2012) demonstrate the effect of Academic Schools on success in national examinations at ages 16 and 18.

These Academic Schools had a long tradition before the Second World War, but were closed by the communist regime in the early 1950s and re-established only after the 1989 Velvet Revolution.

Act No. 245/2008 Coll. on Upbringing and Education (School Act) is available in Slovak at www.minedu.sk/data/att/10246.pdf.

For further information about the school reforms in Slovakia, consult National Institute for Certified Educational Measurements (2012), available at http://www.oecd.org/edu/school/CBR_SVK_EN_final.pdf.

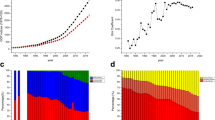

The assessment data from the first cycle of TIMSS in 1995 were scaled by setting the mean test score across all participating countries to 500 and the standard deviation to 100 (Olson et al. 2008). In each successive TIMSS cycle, the assessment data were placed on the scale from the previous cycle to provide accurate measures of trends across all cycles.

We use the number of books at the student’s home as a proxy for their socio-economic family background. See, e.g., Brunello and Checchi (2007), who show that the number of books at home and parents’ level of education have similar relationships with student achievement.

As excess demand reflects application behavior, and hence may be endogenous to student achievement, we use excess demand data from 2008 and 2011. Since schools in Slovakia are not required to report the number of applications they receive, we filled in the missing data by contacting the academic schools directly. Because some of these schools have since closed or did not record these data, excess demand data remain missing for 6% of observations.

Three different groupings of districts by excess demand are considered. First, the lower and upper excess demand thresholds are set 0.5 standard deviations below and above the mean excess demand. Second, fixed excess demand levels of 0.25 and 0.75 are used. Third, the thresholds are set at the lower and upper quartiles of excess demand.
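The three groupings can be sketched in Python as follows. This is a minimal illustration, assuming that district-level excess demand values are held in a plain list, that the standard deviation and quartiles are computed unweighted across districts, and that a value lying exactly on a threshold counts as medium; the function names are hypothetical.

```python
from statistics import mean, pstdev, quantiles

def excess_demand_thresholds(excess_demand):
    """Return (lower, upper) cut-offs for each of the three groupings.

    excess_demand: list of district-level excess demand values (assumed unweighted).
    """
    mu = mean(excess_demand)
    sd = pstdev(excess_demand)  # assumption: population SD across districts
    q1, _, q3 = quantiles(excess_demand, n=4)  # lower and upper quartiles
    return {
        "half_sd": (mu - 0.5 * sd, mu + 0.5 * sd),  # 0.5 SD on either side of the mean
        "fixed": (0.25, 0.75),                       # fixed excess demand levels
        "quartiles": (q1, q3),                       # lower and upper quartiles
    }

def classify(value, lower, upper):
    """Label a district as low, medium, or high excess demand."""
    if value < lower:
        return "low"
    if value > upper:
        return "high"
    return "medium"  # values on a threshold fall here (an assumption)
```

For example, `excess_demand_thresholds([0.1, 0.3, 0.5, 0.8, 1.2, 2.0])["quartiles"]` gives the quartile-based cut-offs for six hypothetical districts, which can then be passed to `classify`.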

The mean of the math test score distribution is normalized to 0 and the standard deviation to 1.

The lower and upper excess demand thresholds are set 0.5 standard deviations below and above the mean excess demand.

See the descriptive statistics in Table 2.

Because there is no other TIMSS wave in which both Slovakia and the Czech Republic participated, we cannot use TIMSS data to demonstrate common trends, as would have been preferable.

The majority of students affected by the 2009 school reform in Slovakia reached 15 years of age in 2014. Using PISA survey data from before and after the reform, i.e., PISA 2012 and the forthcoming PISA 2015, we will be able to examine the effect of later selection, estimating whether 1 more year alongside the top students improved or worsened the achievement of those students who remained in basic school.

Excess demand is measured as the ratio of applications to admissions minus 1. Thus, 0 excess demand indicates districts in which the same number of students apply for places as are admitted.
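The definition above amounts to a one-line formula. The Python sketch below implements it and adds a hypothetical district-level helper; pooling applications and admissions over a district's schools before taking the ratio (rather than averaging school-level ratios) is an assumption made here for illustration.

```python
from typing import Iterable, Tuple

def excess_demand(applications: float, admissions: float) -> float:
    """Excess demand = applications / admissions - 1.

    0 means as many students applied as were admitted; 1 means two applicants per place.
    """
    if admissions <= 0:
        raise ValueError("admissions must be positive")
    return applications / admissions - 1.0

def district_excess_demand(schools: Iterable[Tuple[float, float]]) -> float:
    """Hypothetical district aggregate: pool (applications, admissions) over schools first."""
    schools = list(schools)
    total_applications = sum(apps for apps, _ in schools)
    total_admissions = sum(adm for _, adm in schools)
    return excess_demand(total_applications, total_admissions)
```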

For a detailed description of quantile regression, see Koenker (2005).
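For reference, the standard linear quantile regression estimator can be written as follows. Here $y_i$ would be a student's test score and $x_i$ the vector of regressors, and setting $\tau = 0.1$ targets the tenth percentile of the score distribution; the notation is illustrative and not necessarily that of the paper.

$$
\hat{\beta}(\tau) = \arg\min_{\beta} \sum_{i=1}^{n} \rho_\tau\!\left(y_i - x_i^{\top}\beta\right),
\qquad
\rho_\tau(u) = u\left(\tau - \mathbf{1}\{u < 0\}\right)
$$

With only an intercept in $x_i$, the minimizer is the sample $\tau$-quantile of $y_i$, which is why the estimator is read as an effect at a given point of the distribution rather than at the mean.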

It is a stylized fact supported by rich evidence from various studies that students enrolled in academic schools have notably stronger socio-economic family backgrounds.

The home possession variable is derived from questions about whether the student has a computer and an internet connection at home, and whether they have their own mobile phone. The variable is computed as the sum of all items divided by the maximum attainable score over the valid responses.
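A minimal Python sketch of this index, assuming each item is coded 1 (yes) or 0 (no) with `None` for a missing answer, and that the maximum attainable score is the number of valid items times the per-item maximum; the coding and the function name are assumptions for illustration.

```python
from typing import Optional, Sequence

def home_possession_index(items: Sequence[Optional[int]], max_per_item: int = 1) -> Optional[float]:
    """Share of possessions reported, computed over valid (non-missing) items only.

    items: responses for computer, internet connection, own mobile phone;
           1 = yes, 0 = no, None = missing (assumed coding).
    """
    valid = [x for x in items if x is not None]
    if not valid:
        return None  # no valid responses: the index is undefined
    return sum(valid) / (max_per_item * len(valid))
```

Under this reading, a student reporting a computer and internet but no phone scores 2/3, while a student with one missing answer is scored only on the two items answered.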

Following the PISA 2009 Technical Report (OECD 2012), a household's socio-economic status is computed as the unweighted mean of these four discrete but ordinal variables, each standardized to mean 0 and standard deviation 1. If more than one value is missing for a student, their socio-economic status is treated as missing.
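The construction described above can be sketched in Python as follows. This assumes that each component is standardized on the sample's non-missing values without survey weights, and that a student with exactly one missing component receives the mean of the remaining standardized components; both are assumptions, and the function names are hypothetical.

```python
from statistics import mean, pstdev
from typing import List, Optional

def standardize(column: List[Optional[float]]) -> List[Optional[float]]:
    """Standardize one component to mean 0, SD 1 over its non-missing values (unweighted)."""
    observed = [v for v in column if v is not None]
    mu, sd = mean(observed), pstdev(observed)
    return [None if v is None else (v - mu) / sd for v in column]

def ses_index(components: List[List[Optional[float]]]) -> List[Optional[float]]:
    """Unweighted mean of standardized components per student.

    components: one list per component (four in the text), aligned by student.
    The index is missing if more than one component is missing for that student.
    """
    standardized = [standardize(col) for col in components]
    result: List[Optional[float]] = []
    for row in zip(*standardized):
        present = [v for v in row if v is not None]
        if len(row) - len(present) > 1:
            result.append(None)
        else:
            result.append(mean(present))
    return result
```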

The longitudinal Czech project CLoSE ran a follow-up survey for the TIMSS 2011 student cohort 1 year later, and asked detailed questions concerning their preparation for academic school admission exams and their results. The CLoSE data enable us to estimate a Probit model of the probability of being admitted to academic school for the Czech sample of TIMSS 2011 students.
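In a probit model, the predicted probability of admission for a student with covariates $x$ is $\Phi(x^{\top}\beta)$, where $\Phi$ is the standard normal CDF. The Python sketch below covers only this prediction step, assuming the coefficients have already been estimated elsewhere (normally with a statistics package); the covariates and coefficient values used in any example are purely hypothetical.

```python
import math
from typing import Sequence

def normal_cdf(z: float) -> float:
    """Standard normal CDF expressed through the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))

def probit_probability(x: Sequence[float], beta: Sequence[float]) -> float:
    """Predicted P(admitted = 1 | x) = Phi(x'beta) in a probit model.

    x should include a leading 1.0 for the intercept.
    """
    if len(x) != len(beta):
        raise ValueError("x and beta must have the same length")
    index = sum(xi * bi for xi, bi in zip(x, beta))  # linear index x'beta
    return normal_cdf(index)
```

For instance, with hypothetical coefficients `[-1.0, 0.8, 0.3]` on an intercept, a standardized math score, and an SES index, a higher math score raises the predicted admission probability, since $\Phi$ is increasing.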

These results are available upon request.

This threshold is specified for each district as the proportion of students in the respective cohort who are enrolled in the first year of academic school.

The standardized score $S$ is computed from the original TIMSS score $T$ as $S = (T - \bar{T}_{2007}) / \sigma_{2007}$, where $\bar{T}_{2007}$ is the mean score and $\sigma_{2007}$ is the standard deviation in 2007, both computed over the pooled sample of the two countries.
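A minimal Python sketch of this standardization, assuming the 2007 reference moments are the unweighted mean and population standard deviation of the pooled 2007 scores (consistent with normalizing the standard deviation to 1); it ignores TIMSS sampling weights and plausible values, and the function name is hypothetical.

```python
from statistics import mean, pstdev
from typing import Iterable, List

def standardize_to_2007(scores: Iterable[float], scores_2007: Iterable[float]) -> List[float]:
    """S = (T - mean_2007) / sd_2007, with both 2007 moments taken from the pooled reference scores."""
    base = list(scores_2007)
    mu, sd = mean(base), pstdev(base)  # unweighted pooled 2007 moments (assumption)
    return [(t - mu) / sd for t in scores]
```

Because the 2007 moments are fixed, scores from later waves are expressed in 2007 standard deviation units, so a shift in their mean can be read directly as a change in standard deviations.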

The magnitude of the time change in test score in districts with high excess demand is the sum of the estimates for the year dummy and the interaction term between the year and high excess demand dummies.
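The arithmetic behind this sum can be made explicit with a simple specification, assuming a year dummy $\mathrm{Year}_t$, a high-excess-demand dummy $\mathrm{High}_d$, and their interaction, with controls omitted; the symbols are illustrative rather than the paper's own notation.

$$
y_{idt} = \alpha + \beta_1 \mathrm{Year}_t + \beta_2 \mathrm{High}_d + \beta_3 (\mathrm{Year}_t \times \mathrm{High}_d) + \varepsilon_{idt}
$$

$$
E[y \mid \mathrm{Year}=1, \mathrm{High}=1] - E[y \mid \mathrm{Year}=0, \mathrm{High}=1] = (\alpha + \beta_1 + \beta_2 + \beta_3) - (\alpha + \beta_2) = \beta_1 + \beta_3
$$

$$
\operatorname{Var}(\hat{\beta}_1 + \hat{\beta}_3) = \operatorname{Var}(\hat{\beta}_1) + \operatorname{Var}(\hat{\beta}_3) + 2\operatorname{Cov}(\hat{\beta}_1, \hat{\beta}_3)
$$

The last line is why inference on the time change in high-excess-demand districts requires the covariance of the two estimates, not just their individual standard errors.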

The ordinal nature of TIMSS test scores means that a one-point difference in test scores at the lower tail of the skill distribution does not necessarily correspond to the same difference in skills at the upper tail. This problem was highlighted in a recent study by Bond and Lang (2013), which shows the sensitivity of estimated test score gaps to the choice of scale. Applying various order-preserving scale transformations to the TIMSS test scores, similar to those used by Bond and Lang (2013), we find that the parameters estimated from the transformed scores are not significantly different from those of the baseline model.
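A robustness check of this kind can be sketched in Python as follows. The family $f_c(x) = (e^{cx} - 1)/c$ is used here only because it is strictly increasing for every $c \neq 0$ and tends to the identity as $c \to 0$; it is an illustrative assumption, not necessarily the set of transformations used in the paper, and a simple two-group mean gap stands in for the paper's regression estimates. The scores below are hypothetical.

```python
import math
from statistics import mean, pstdev
from typing import Callable, Sequence

def order_preserving(c: float) -> Callable[[float], float]:
    """Strictly increasing map x -> (exp(c*x) - 1) / c; the identity at c = 0 (its limit)."""
    if c == 0:
        return lambda x: x
    return lambda x: (math.exp(c * x) - 1.0) / c

def standardized_gap(treated: Sequence[float], control: Sequence[float],
                     transform: Callable[[float], float]) -> float:
    """Group mean difference after transforming scores and re-standardizing on the pooled sample.

    Re-standardizing subtracts the pooled mean from both groups, which cancels in the
    difference, so only the pooled standard deviation matters.
    """
    t = [transform(x) for x in treated]
    u = [transform(x) for x in control]
    return (mean(t) - mean(u)) / pstdev(t + u)

# Hypothetical standardized scores, for illustration only.
treated = [0.1, 0.4, 0.9, 1.3]
control = [-0.6, -0.1, 0.3, 0.7]
for c in (-1.0, -0.5, 0.0, 0.5, 1.0):
    print(c, round(standardized_gap(treated, control, order_preserving(c)), 3))
```

If the estimated gap keeps its sign and roughly its size across the transformations, the result is not an artifact of the particular cardinal scale of the scores.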

Table 6 also reveals some effect at the tenth percentile of the test score distribution. This may be due to spillover effects of the higher study effort of high-skilled students onto their low-skilled peers. Another possible explanation is that some low-skilled students do not know their real probability of admission to the Academic Schools. This misperception may stem from the unequal allocation of talent across primary schools and hence the lack of a benchmark for many pupils.

As discussed in Sect. 2, boys seem to be less mature and less responsive to parental authority than girls at the time of school selection (Wilder and Powell 1989). This difference in attitudes between girls and boys may partly explain why boys are not affected by the SEE.

References

Anger S, Heineck G (2010) Do smart parents raise smart children? The intergenerational transmission of cognitive abilities. J Popul Econ 23(3):1105–1132

Angrist J, Oreopoulos P, Williams T (2014) When opportunity knocks, who answers? J Hum Resour 49(3):572–610

Bishop JH (1997) The effect of national standards and curriculum-based exams on achievement. Am Econ Rev 87(2):260–264

Bishop JH (1999) Are national exit examinations important for educational efficiency? Swedish Economic Policy Review 6(2):349–401

Bond TN, Lang K (2013) The evolution of the black-white test score gap in grades K-3: the fragility of results. Rev Econ Stat 95(5):1468–1479

Brunello G, Checchi D (2007) Does school tracking affect equality of opportunity? New international evidence. Econ Policy 22(52):781–861

Clark D, Del Bono E (2016) The long-run effects of attending an elite school: evidence from the United Kingdom. Am Econ J Appl Econ 8(1):150–176

Crumpton HE, Gregory A (2011) “I’m not learning”: the role of academic relevancy for low-achieving students. J Educ Res 104(1):42–53

Cunha F, Heckman JJ (2007) The technology of skill formation. Am Econ Rev 97(2):31–47

Dearden L, Ferri J, Meghir C (2002) The effect of school quality on educational attainment and wages. Rev Econ Stat 84(1):1–20

Dunifon R, Duncan GJ (1998) Long-run effects of motivation on labor-market success. Soc Psychol Q 61(1):33–48

Elliot AJ, McGregor HA, Gable S (1999) Achievement goals, study strategies, and exam performance: a mediational analysis. J Educ Psychol 91(3):549–563

Federičová M, Münich D (2014) Preparing for the eight-year gymnasium: the great pupil steeplechase. In: IDEA study 2/2014. CERGE-EI, Prague

Feinstein L (2003) Inequality in the early cognitive development of British children in the 1970 cohort. Economica 70(277):73–97

Foy P, Galia J, Li I (2008) Scaling the data from the TIMSS 2007 mathematics and science assessments. In: Olson JF, Martin MO, Mullis IVS (eds) TIMSS 2007 Technical Report. Boston College, Chestnut Hill, MA

Friedel JM, Cortina KS, Turner JC, Midgley C (2007) Achievement goals, efficacy beliefs and coping strategies in mathematics: the roles of perceived parent and teacher goal emphases. Contemp Educ Psychol 32:434–458

Fryer RG (2011) Financial incentives and student achievement: evidence from randomized trials. Q J Econ 126(4):1755–1798

Fryer RG (2013) Information and student achievement: evidence from a cellular phone experiment. NBER Working Paper No. 19113

Fuchs T, Woessmann L (2007) What accounts for international differences in student performance? A re-examination using PISA data. In: Dustmann C, Fitzenberger B, Machin S (eds) The economics and training of education. Physica-Verlag HD, Heidelberg, Germany, pp 209–240

Gneezy U, Rustichini A (2004) Gender and competition at a young age. Am Econ Rev 94(2):377–381

Greger D, Straková J (2013) Faktory ovlivňující přechod žáků 5. ročníku na osmileté gymnázium [Transition of 5-graders to multi-year grammar school]. Orbis Scholae 7(3):73–85

Grolnick WS, Ryan RM (1989) Parent styles associated with children’s self-regulation and competence in school. J Educ Psychol 81(2):143–154

Guyon N, Maurin E, McNally S (2012) The effect of tracking students by ability into different schools: a natural experiment. J Hum Resour 47(3):684–721

Hao L, Naiman DQ (eds) (2007) Quantile regression. Sage Publications, Thousand Oaks, CA

Hidi S, Harackiewicz JM (2000) Motivating the academically unmotivated: a critical issue for the 21st century. Rev Educ Res 70(2):151–179

Jacob BA (2005) Accountability, incentives and behaviour: the impact of high-stakes testing in the Chicago Public Schools. J Public Econ 89:761–796

Jurajda Š, Münich D (2011) Gender gap in admission performance under competitive pressure. Am Econ Rev 101(3):514–518

Jürges H, Schneider K (2010) Central exit examinations increase performance … but take the fun out of mathematics. J Popul Econ 23(2):497–517

Jürges H, Schneider K, Büchel F (2005) The effect of central exit examinations on student achievement: quasi-experimental evidence from TIMSS Germany. J Eur Econ Assoc 3(5):1134–1155

Jürges H, Schneider K, Senkbeil M, Carstensen CH (2012) Assessment drives learning: the effect of central exit exams on curricular knowledge and mathematical literacy. Econ Educ Rev 31(1):56–65

Koenker R (2005) Quantile regression. Cambridge University Press, New York

Lenroot RK, Gogtay N, Greenstein DK, Molloy Wells E, Wallace GL, Clasen LS et al (2007) Sexual dimorphism of brain developmental trajectories during childhood and adolescence. NeuroImage 36:1065–1073

Montgomery H (2005) Gendered childhoods: a cross disciplinary overview. Gend Educ 17(5):471–482

National Institute for Certified Educational Measurements (2012) Country background report for the Slovak Republic. NUCEM, Bratislava

OECD (2012) PISA 2009 Technical Report. PISA, OECD Publishing, Paris

OECD (2013) Education at a glance 2013: OECD indicators. OECD Publishing. doi:10.1787/eag-2013-en

Olson JF, Martin MO, Mullis IVS (eds) (2008) TIMSS 2007 Technical Report. Boston College, Chestnut Hill, MA

Piopiunik M (2014) The effects of early tracking on student performance: evidence from a school reform in Bavaria. Econ Educ Rev 42:12–33

Putwain DW, Nicholson LJ, Nakhla G, Reece M, Porter B, Liversidge A (2016) Fear appeals prior to a high-stakes examination can have a positive or negative impact on engagement depending on how the message is appraised. Contemp Educ Psychol 44–45:21–31

Rubin DB (1987) Multiple imputations for nonresponse in surveys. John Wiley & Sons, Inc., New York

Ryan RM, Deci EL (2000) Intrinsic and extrinsic motivations: classic definitions and new directions. Contemp Educ Psychol 25:54–67

Vansteenkiste M, Simons J, Lens W, Sheldon KM, Deci EL (2004) Motivating learning, performance, and persistence: the synergistic effects of intrinsic goal contents and autonomy-supportive contexts. J Pers Soc Psychol 87(2):246–260

Wilder GZ, Powell K (1989) Sex differences in test performance: a survey of the literature. College Board Report No. 89(3). College Entrance Examination Board, New York

Woessmann L (2002) Central exams improve educational performance: international evidence. Kiel Discussion Paper No. 397

Woessmann L (2003) Central exit exams and student achievement: international evidence. In: Peterson P, West M (eds) No child left behind? The politics and practice of school accountability. Brookings Institution Press, Washington, DC, pp 292–324

Woessmann L (2005) The effect of heterogeneity of central exams: evidence from TIMSS, TIMSS-Repeat and PISA. Educ Econ 13(2):143–169

Woessmann L (2007) International evidence on school competition, autonomy, and accountability: a review. Peabody J Educ 82(2–3):473–497

Wooldridge JM (2002) Econometric analysis of cross section and panel data. The MIT Press, Cambridge

Acknowledgements

This research was supported by grant P402/12/G130 awarded by the Grant Agency of the Czech Republic. We would especially like to thank Štěpán Jurajda and Steven Rivkin, as well as participants at the Educational Governance and Finance Workshop at the AEDE Meeting in Valencia and at the Research Seminar at the University of Illinois at Chicago, for their helpful comments on previous drafts of this paper. We are indebted to Jana Palečková and Vladislav Tomášek for their assistance with data provision, as well as to Andrea Galádová from the National Institute for Certified Educational Measurements in Slovakia. Last but not least, we are grateful to two anonymous referees who provided very helpful advice for improving the clarity and persuasiveness of the final manuscript.

Additional information

Responsible editor: Erdal Tekin

About this article

Cite this article

Federičová, M., Münich, D. The impact of high-stakes school admission exams on study achievements: quasi-experimental evidence from Slovakia. J Popul Econ 30, 1069–1092 (2017). https://doi.org/10.1007/s00148-017-0643-2

DOI: https://doi.org/10.1007/s00148-017-0643-2