Abstract

Having volunteers collect data can be a cost-effective strategy to complement or replace data collected by scientists. Data quality is essential where field-collected data are used to monitor progress against predetermined standards, because it gives decision makers confidence that the choices they make will not cause more harm than good. The integrity of volunteer-collected data is often doubted. In this study, we made estimates of seven vegetation attributes and a composite measure of six of those seven, to simulate benchmark values. These attributes are routinely recorded as part of rehabilitation projects in Australia and elsewhere in the world. The degree of agreement with these benchmarks of data collected by volunteers was compared with that of data recorded by professional scientists. Combined results showed that scientists collected data that were in closer agreement with benchmarks than those of volunteers, but when data collected by individuals were analyzed, some volunteers collected data that were in similar or closer agreement than those of scientists. Both groups’ estimates were in closer agreement for particular attributes than others, suggesting that some attributes are more difficult to estimate than others, or that some are more subjective than others. There are a number of ways in which higher degrees of agreement could be achieved, and introducing these should result in more effective programs to monitor rehabilitation activities. Alternatively, less subjective measures should be sought when developing monitoring protocols. Quality assurance should be part of developing monitoring methods and explicitly budgeted for in project planning to prevent misleading declarations of rehabilitation success.

Introduction

Quantitative estimates of environmental attributes, especially those based on vegetation, are frequently required to inform land management decisions for biodiversity conservation (Gorrod and Keith 2009). Decisions include the allocation of incentive funding to manage private land for biodiversity (USDA 2003), the determination of offset actions to compensate for unavoidable biodiversity losses (ten Kate and others 2004), and measuring the progress of ecosystem rehabilitation (Ruiz-Jaen and Mitchell Aide 2005). Unfortunately, natural resource managers frequently find they have diminishing resources for data collection (Middleton 2001; EPA 2002; Whitelaw and others 2003). Indeed, a major barrier to monitoring is the cost of employing highly trained environmental professionals or consultants (Kershner 1997). Monitoring data collected by community volunteer groups can be a cost-effective strategy to complement or replace the information collected by professionals (e.g., EPA 2002; Whitelaw and others 2003).

While monitoring schemes using self-funded volunteer labor have been valued for more than just the potential for cost-savings, for example, the educational benefits to participants (Newman and others 2003; Boudreau and Yan 2004), many managers and scientists have questioned the quality, reliability and utility of data collected by a ‘non-professional workforce’ (Stokes and others 1990; Stadel and Nelson 1995; Wells 1995; Fore and others 2001; Underwood and Chapman 2002). Concerns about data credibility have been nominated by scientists and volunteers as the most important impediment to volunteer monitoring efforts (Engel and Voshell 2002). Newman and others (2003) noted that issues surrounding the use of volunteers were brought to international attention when, in 1993, an amendment was made to prohibit the US National Biological Survey from accepting the services of volunteers. The amendment was supported by arguments in the House of Representatives that volunteers are incompetent and biased (Newman and others 2003). The accuracy of volunteer-collected data continues to be questioned in monitoring and assessment programs (Nerbonne and Vondracek 2003; Boudreau and Yan 2004; Delaney and others 2008; Crall and others 2010).

Given the challenge facing volunteer monitoring programs, audits of the accuracy of volunteer-collected data are required to demonstrate the validity of a program’s data (Boudreau and Yan 2004), and thus provide decision makers with the confidence that their choices will not cause more harm than good. Quality data are particularly important in ecosystem rehabilitation, where field-collected data are used to evaluate environmental outcomes of management interventions (such as native revegetation) against predetermined benchmarks or expected norms. With massive investments being made in ecosystem rehabilitation, the importance of rigorous monitoring has attracted great attention in recent years. As a management practice, ecosystem rehabilitation in certain ecosystems has been described as ‘a booming world-wide phenomenon’ (e.g., Giller 2005) and as a multimillion dollar industry (Brooks and Lake 2007). Importantly, inaccurate data could result in further funds being spent unnecessarily or an erroneous declaration of rehabilitation success.

In this study, we made estimates of vegetation attributes to simulate benchmark values in order to investigate the degree of agreement of data collected by two different sets of observers, professional scientists and volunteers. Comparisons of data collected by community volunteers were made against the same data recorded by professional scientists. Measures based on vegetation attributes, which were recorded by landholders on a voluntary basis as part of rehabilitation efforts in the Hunter Valley, NSW, Australia (32°0′0″S 151°0′0″E), were used in this case study. Our study has wider relevance because similar vegetation attributes are used as a basis for development applications, assessing ecological condition, surrogates for biodiversity, distributing incentive funds, and monitoring forest health in managed forests around the world (Noss 1990; Gibbons and Freudenberger 2006; McElhinny and others 2005; USDA 2003; Wilson and others 2006; Gollan and others 2009). In common with ecosystem rehabilitation, these purposes also use benchmark values for comparison. Inaccuracy in field estimates of environmental attributes is an important cause of uncertainty for decision makers, yet choices are usually made as though values of attributes are known without error (Gorrod and Keith 2009). Thus, we designed an investigation to determine if measures of vegetation attributes made by scientists are in closer agreement with benchmark values than those made by volunteers.

Study Area

Since European settlement, much of the Hunter Valley has been developed for agriculture, grazing, viticulture and coal mining (King and Woolmington 1960). Clearing of deep-rooted vegetation for agriculture, expanding urban and rural settlement and large-scale industry have been identified as sources of environmental pressure in the Hunter region (Anonymous 1997). Much of the original floodplain vegetation has been removed and only small remnants of vegetation remain (Story 1963).

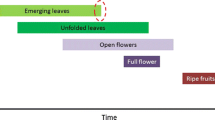

The launch of nation-wide tree planting initiatives in the late 1980s saw the beginning of many small, and more recently, a number of large, revegetation projects in the Hunter region. Replacing non-native plants (e.g., willows Salix spp.) with native vegetation is designed to protect, conserve and enhance native biodiversity (Anonymous 2003). Along with in-kind contributions by way of volunteer involvement in planting trees, there has also been financial investment to provide resources and training of volunteers for implementation of works and monitoring (termed ‘community capacity building and support projects’; Anonymous 2003, Anonymous 2006) in the region. For example, the Project Journal was designed to assist landholders and volunteer groups to record information on the implementation and progress of land management projects so that lessons can be learnt for the future (Anonymous 2007). Herbivore leaf-damage and percentage foliage cover estimates are among the variables recommended for monitoring the progress of rehabilitation activities and for indicating improvements to ecosystem health as a result of the intervention.

Methods

Participant Selection and Preliminary Training Procedures

The degree to which data collected by volunteers and scientists agreed with benchmark values was assessed in two experiments. In the first experiment, visual estimates of habitat attributes made by volunteers and scientists were compared with an author’s (JG) estimates. In the second, we compared visual estimates of leaf damage made by participants (both volunteers and scientists) with a more quantitative, but labor-intensive method carried out by one author (JG).

Scientists (10 in total) chosen for the two experiments were technical officers and research scientists employed at the Australian Museum. All held a minimum of an undergraduate university science degree, and had previous experience collecting similar field data to those tested in this experiment. For comparisons of habitat attributes, volunteers (10 in total) were recruited by Conservation Volunteers Australia (CVA). All CVA participants were local landholders with experience in ecosystem rehabilitation on their property or who were regularly involved in other rehabilitation projects in the study region. Volunteers (8 in total) for leaf damage experiments were from a Green Corps team (working on the Upper Hunter River Rehabilitation Initiative at the time of our study). Green Corps is a program where young people (between 17 and 20 years of age) volunteer their time and effort in rehabilitation programs.

Prior to experiments, all participants were given the same induction in terms of type and duration of training and familiarization with sampling techniques. For the habitat attributes (see below) the training involved taking a participant to a field site and guiding them through what was required. Full assessments were performed at two sites by the participant and the author who established the benchmark scores (JG; see below). Participants were permitted to ask questions if any aspect was unclear and answers were provided. This dialogue was not documented but was used in subsequent training sessions with trainees in order to ensure all received the same information. Data that were recorded by the participants and the trainer were discarded. The same training approach was taken for the leaf damage experiment, where participants were guided through a set of 10 leaves. Examples of different types of leaf damage (see below) were highlighted. Leaf damage scores from training sessions were discarded.

Habitat Attributes

Twenty-two sites (20 × 20 m) ranging from almost open grassland with little overstorey cover (<10 % projected foliage cover) and leaf litter (<5 % ground cover), to mature woodland with dense overstorey (>70 % projected foliage cover) and large amounts of leaf litter and coarse woody debris (>70 % ground cover) were used for the comparisons. At all sites, one author (JG) made percent estimates of each of six habitat attributes [foliage cover in the upper storey (>2.5 m), foliage cover in the mid storey (between 1 and 2.5 m), foliage cover in the lower storey (between 0.5 and 1.0 m), foliage cover in the ground storey (between 0 and 0.5 m), ground cover of leaf litter and coarse woody debris]. Composites of habitat attributes have been used as measures of environmental health or integrity and act as triggers for rehabilitation action (e.g., Jansen and others 2004; Wilson and others 2006). Therefore, a seventh, composite variable, habitat complexity, was computed by adding the six single habitat scores at each site. Estimates of habitat attributes made by the author are hereafter referred to as ‘benchmark scores’. These scores were intended to simulate a set of targets or performance measures which could be used in a monitoring program aiming to report on the progress of rehabilitation activities. Each participant was asked to make visual estimates of all six habitat attributes at four sites, deliberately selected to span the range of site conditions.
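The composite described above can be illustrated with a minimal sketch. It assumes that each of the six attributes is held as a single numeric score per site and that the attribute codes are the abbreviations used in the figure captions; the example values are hypothetical and are not data from this study.

```python
# Attribute codes follow the abbreviations used in the figure captions:
# US, MS, LS, GS = foliage cover in upper, mid, lower and ground storeys;
# LL = leaf litter cover; CWD = coarse woody debris.
ATTRIBUTES = ("US", "MS", "LS", "GS", "LL", "CWD")


def habitat_complexity(scores):
    """Return the sum of the six single-attribute scores for one site."""
    missing = [name for name in ATTRIBUTES if name not in scores]
    if missing:
        raise ValueError(f"missing attribute scores: {missing}")
    return sum(scores[name] for name in ATTRIBUTES)


# Hypothetical scores for one site (illustration only).
example_site = {"US": 40, "MS": 15, "LS": 10, "GS": 60, "LL": 30, "CWD": 5}
example_complexity = habitat_complexity(example_site)
print(example_complexity)
```

Because the composite is a simple sum, an over-estimate of one attribute can offset an under-estimate of another, which is worth keeping in mind when the individual components are of interest.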

Leaf Damage

A collection of 48 high-resolution digital images of leaves was made. This leaf database was created by taking a digital photograph of each leaf and measuring leaf total area and the amount of damage using the Scion Image for Windows (Scion Corporation 2000) software. The proportion of damaged leaf was calculated by dividing the total damaged area by the total area of the leaf. All areas of the leaf surface that were affected by disease, senescence or missing due to herbivore browse were considered to be leaf damage. Leaf damage percentages from image analysis served as the benchmark values for comparison with visual assessments made by scientists and volunteers.

For visual assessments, all 48 leaf images from the leaf database were presented one after the other in random order to 16 participants (eight scientists and eight volunteers). Each participant was asked to estimate the proportions that were damaged using a leaf damage key as an aid (Supplementary Material). Participants recorded their damage estimate of each leaf in one of the following eight categories: 1, ≤10 %; 2, 11–20 %; … 7, 61–70 %, and 8, >70 %. Damage keys have been used in assessments of damage caused by pathogens or insect pests to increase accuracy and reduce inter and intra-observer variability (e.g., Forbes and Korva 1994; Gilbert and Gregoire 2003).
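As an illustration of how an image-derived damage proportion could be mapped onto the eight-category scale, the sketch below assumes that categories 3 to 6 continue in the same 10-point steps as the categories listed above, and that non-integer percentages are assigned to the next category up (for example, 10.5 % falls in category 2). Both are assumptions made for the example; the function names are hypothetical.

```python
import math


def damage_proportion(damaged_area, total_area):
    """Proportion of leaf area damaged: damaged area divided by total area."""
    if total_area <= 0:
        raise ValueError("total_area must be positive")
    if not 0 <= damaged_area <= total_area:
        raise ValueError("damaged_area must lie between 0 and total_area")
    return damaged_area / total_area


def damage_category(percent_damaged):
    """Map percent damage (0-100) to a category from 1 (<=10 %) to 8 (>70 %).

    Assumes 10-point steps for the intermediate categories.
    """
    if not 0 <= percent_damaged <= 100:
        raise ValueError("percent_damaged must lie between 0 and 100")
    # ceil(p / 10) gives 1 for (0, 10], 2 for (10, 20], and so on;
    # clamp so that 0 % maps to category 1 and anything above 70 % to 8.
    return min(8, max(1, math.ceil(percent_damaged / 10)))


# Hypothetical leaf: 12 area units damaged out of 48.
example_percent = 100 * damage_proportion(12.0, 48.0)
print(example_percent, damage_category(example_percent))
```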

Statistical Analyses

The degree of agreement between participants’ habitat complexity scores and benchmark scores was assessed using one-tailed Spearman rank correlation tests. The Spearman rank test was used because no assumption was made about the distribution of values, and one-tailed tests were used because the sign of the correlation coefficient (i.e., positive) was anticipated a priori (Motulsky 2003). The degree of agreement between each habitat attribute estimated by participants and benchmark scores was assessed using the Kappa (κ) statistic (Agresti 1984). Kappa measures the degree of agreement from −1 (complete disagreement) to +1 (perfect agreement), with 0 meaning no level of agreement or disagreement over that expected due to chance alone. ‘Strengths of agreement’ (to aid in interpretation) for the range of κ (<0, poor; 0.00–0.20, slight; 0.21–0.40, fair; 0.41–0.60, moderate; 0.61–0.80, substantial; 0.81–1.00, almost perfect) were based on Landis and Koch (1977).
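For readers unfamiliar with Kappa, the following is a minimal sketch of the unweighted (Cohen's) form for two sets of categorical scores, together with the Landis and Koch (1977) labels. It assumes that estimates and benchmarks have already been placed into categories; whether the analysis here used an unweighted or weighted form is not stated, so the unweighted version is an assumption. The example scores are hypothetical.

```python
from collections import Counter


def cohen_kappa(observer, benchmark):
    """Unweighted Cohen's kappa between two equal-length category sequences."""
    if len(observer) != len(benchmark) or not observer:
        raise ValueError("sequences must be non-empty and of equal length")
    n = len(observer)
    # Observed proportion of exact agreement.
    observed = sum(o == b for o, b in zip(observer, benchmark)) / n
    # Agreement expected by chance, from the marginal category frequencies.
    obs_counts, bench_counts = Counter(observer), Counter(benchmark)
    expected = sum(obs_counts[c] * bench_counts[c] for c in obs_counts) / n ** 2
    if expected == 1:
        raise ValueError("kappa is undefined when chance agreement equals 1")
    return (observed - expected) / (1 - expected)


def strength_of_agreement(kappa):
    """Landis and Koch (1977) descriptive label for a kappa value."""
    if kappa < 0:
        return "poor"
    for upper, label in ((0.20, "slight"), (0.40, "fair"),
                         (0.60, "moderate"), (0.80, "substantial")):
        if kappa <= upper:
            return label
    return "almost perfect"


# Hypothetical category scores for eight assessments (illustration only).
observer_scores = [1, 2, 3, 3, 2, 1, 4, 4]
benchmark_scores = [1, 2, 3, 2, 2, 1, 4, 3]
example_kappa = cohen_kappa(observer_scores, benchmark_scores)
print(round(example_kappa, 2), strength_of_agreement(example_kappa))
```

In this example six of eight scores match (observed agreement 0.75) and chance agreement is 0.25, so Kappa discounts a third of the apparent agreement.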

To determine if visual estimates by participants of leaf damage differed between the two groups, scientists and volunteers, a linear regression was fitted to both data sets and a comparison was made between the slopes of each line (i.e., a test of the null hypothesis that the slopes are identical or parallel). The method of comparing slopes is discussed in detail in Zar (1999). Agreement between benchmark scores and an individual’s estimates was assessed using Spearman’s r value (one-tailed correlation tests) and the Kappa statistic.
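The slope comparison can be sketched as follows. This is an illustrative implementation of the standard test for equality of two regression slopes, using a pooled residual mean square with n1 + n2 − 4 error degrees of freedom; it assumes that participant estimates (y) are regressed on benchmark values (x), which is not specified above. The data are hypothetical, and the p-value (from the F distribution with 1 and n1 + n2 − 4 degrees of freedom) is left to statistical software.

```python
def _fit_line(x, y):
    """Return slope, sum of squares of x, and residual sum of squares."""
    n = len(x)
    mean_x, mean_y = sum(x) / n, sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    ss_res = sum((yi - (intercept + slope * xi)) ** 2 for xi, yi in zip(x, y))
    return slope, sxx, ss_res


def compare_slopes(x1, y1, x2, y2):
    """F statistic (1, n1 + n2 - 4 df) for the null of equal slopes."""
    b1, sxx1, ss1 = _fit_line(x1, y1)
    b2, sxx2, ss2 = _fit_line(x2, y2)
    df_error = len(x1) + len(x2) - 4
    pooled_ms = (ss1 + ss2) / df_error          # pooled residual mean square
    var_diff = pooled_ms * (1 / sxx1 + 1 / sxx2)  # variance of (b1 - b2)
    f_stat = (b1 - b2) ** 2 / var_diff          # equals t squared
    return b1, b2, f_stat, df_error


# Hypothetical benchmark scores (x) and group estimates (y), illustration only.
benchmark = [1, 2, 3, 4, 5, 6]
group_a = [1.1, 1.9, 3.2, 3.8, 5.1, 5.9]
group_b = [1.0, 1.4, 2.1, 2.4, 3.1, 3.4]
slope_a, slope_b, f_stat, df_error = compare_slopes(
    benchmark, group_a, benchmark, group_b)
print(round(slope_a, 3), round(slope_b, 3), round(f_stat, 1), df_error)
```

With 768 observations in total, this formulation gives 764 error degrees of freedom, matching the degrees of freedom of the F statistic reported in the Results.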

Results

Habitat Attributes

The correlation between benchmark habitat complexity scores and those of participants (scientists and volunteers combined) was positive, and the strength of the correlation was moderate (r = 0.43, p < 0.0001, n = 80). When scientist and volunteer data were analyzed separately, the correlation between benchmark and scientists’ habitat complexity scores was strong and significant (r = 0.59, p < 0.0001, n = 40; Fig. 1a). Correlation between benchmark and volunteers’ habitat complexity scores was also positive, but the strength of the correlation was weaker and not significant (r = 0.15, p = 0.17, n = 40; Fig. 1b).

Of 240 observations of six habitat attributes made by the 10 scientists, 161 (67 %) estimates were in agreement with benchmark values and the strength of agreement was moderate (κ = 0.52 ± 0.04). Of 240 estimates made by the 10 volunteers, 126 (53 %) were in agreement with benchmark values and the strength of agreement was fair (κ = 0.35 ± 0.04). When each habitat attribute assessed by scientists was analyzed separately, the strength of agreement with benchmark scores differed among habitat attributes and ranged from slight (κ = 0.16 ± 0.14) to moderate (κ = 0.54 ± 0.11). For volunteers, the strength of agreement also differed among habitat variables, ranging from slight (κ = 0.02 ± 0.10) to moderate (κ = 0.53 ± 0.11; Fig. 2). When estimates were analyzed for each participant, strengths of agreement between scientists and benchmark scores ranged from fair (κ = 0.39 ± 0.14) to substantial (κ = 0.68 ± 0.13), while for volunteers, strengths of agreement varied from slight (κ = 0.09 ± 0.11) to substantial (κ = 0.61 ± 0.13; Fig. 3).

Fig. 2 Mean Kappa statistics reflecting the level of agreement between benchmark scores and those of 10 scientists (white bars) and 10 volunteers (grey bars) for each of six habitat attributes at four sites. All observations of each habitat attribute were pooled across ten observers (n = 40). US, % foliage cover in upper storey; MS, % foliage cover in mid storey; LS, % foliage cover in lower storey; GS, % foliage cover in ground storey; CWD, % amount of coarse woody debris; LL, % leaf litter cover.

Fig. 3 Mean Kappa statistics reflecting the level of agreement between the benchmark scores and the assessment of six habitat attributes by each of ten scientists (white bars) and ten volunteers (grey bars). The numbers 1 to 10 were assigned arbitrarily to distinguish individual scientists and volunteers; no relationship is implied between a scientist and volunteer sharing the same numeric identifier. Each participant’s scores were pooled across all six habitat attributes (n = 24).

Leaf Damage

The correlation between benchmark leaf damage scores and those made by participants (scientists and volunteers combined) was significant and strong (r = 0.74, p < 0.0001, n = 768). When scientist and volunteer data were analyzed separately, the correlation between benchmark and scientists’ leaf damage scores was strong and significant (r = 0.83, p < 0.0001, n = 384; Fig. 4a). The correlation between benchmark and volunteers’ leaf damage scores was lower, but still strong and significant (r = 0.69, p < 0.0001, n = 384; Fig. 4b). Overall, estimates of leaf damage made by scientists were in stronger agreement with benchmark scores (κ = 0.36 ± 0.03) than those made by volunteers (κ = 0.25 ± 0.03). Comparison of the slopes of least-squares regression lines fitted to each data set (scientists versus volunteers) indicated a significant difference, that is, they were not parallel (F(1, 764) = 29.08, p < 0.0001).

Fig. 4 Relationships between estimates of leaf damage made by image processing and by visual assessment by (a) scientists (Spearman r = 0.83, p < 0.0001, n = 384) and (b) volunteers (Spearman r = 0.69, p < 0.0001, n = 384). The area of each circle is proportional to the frequency (adjacent number) of the given pair of scores. Solid lines are lines of best fit.

The strength of agreement with benchmark leaf damage scores varied among individual observers, ranging from slight (κ = 0.05 ± 0.06) to moderate (κ = 0.50 ± 0.08; Fig. 5). The three highest Kappa statistics were achieved by scientists, while the three lowest were scored by volunteers. The four highest Kappa statistics achieved by volunteers were comparable only with the lowest attained by scientists.

Fig. 5 Mean Kappa statistics reflecting the level of agreement between estimates of leaf damage and image-processed benchmarks (n = 48) achieved by eight scientists (white bars) and eight volunteers (grey bars). The numbers 1–8 were assigned arbitrarily to distinguish individual scientists and volunteers; no relationship is implied between a scientist and volunteer sharing the same numeric identifier.

Discussion

We found that data collected by scientists, as a group, were in closer agreement with benchmark values (r = 0.59 and 0.83 for habitat complexity and leaf damage, respectively) than those collected by volunteers (r = 0.15 and 0.69, respectively). However, strength of agreement varied widely among individual participants (regardless of scientist or volunteer) for single habitat attributes and leaf damage scores. Of course, assessment of accuracy (sensu stricto), for the habitat variables at least, assumes that the benchmark values reflected the ‘truth’. Indeed, the benchmark values have the potential for bias introduced by the author who made them. It is possible that the benchmark and the scientists’ estimates were less accurate than the volunteers’, but this is unlikely. The result that the strength of agreement varied widely among individual participants for single habitat attributes demonstrates that inaccuracies occur, regardless of the accuracy of the benchmark estimates. It also reveals that composite indices such as habitat complexity can mask these differences. ‘Smoothing’ out the errors may not be a problem where a composite measure is of interest, but the consequences should be considered where individual components are of interest.

Our study was prompted by other investigations of community volunteers and professional scientific involvement in data acquisition that have revealed data-integrity issues. For example, Underwood and Chapman (2002) outlined the problems encountered in continuing education programs organized by the Institute of Marine Ecology at the University of Sydney (from 1984 to 1996). In order to maintain integrity and reliability of data collected throughout a particular project, it was necessary to carefully examine the data for errors (Underwood and Chapman 2002). Inconsistencies, such as people recording that they observed ‘zero people taking bait’ while at the same time listing the bait species that were taken, made it impossible for the researchers to trust the information without daily checking and validation (Underwood and Chapman 2002). However, whether these same problems would have been encountered if scientists were used was not determined. Underwood and Chapman (2002) assumed that data collected by scientists would have been less error-prone. Underwood and Chapman (2002) did not explore whether data from all volunteers were invalid or if mistakes were only being made with certain types of measures. Of course, it is plausible that scientists may have been just as unreliable. In our data at least, data collected by some volunteers were in similar agreement with benchmark values as those collected by some scientists.

In contrast to the reports of problems with the use of data collected by volunteers, other accounts show no perceptible difference between the data collected by scientists and volunteers (McLaren and Cadman 1999; Fore and others 2001; Engel and Voshell 2002; Brandon and others 2003). For example, Fore and others (2001) detected no differences between field samples of aquatic invertebrates collected and identified by volunteers and scientists. In a similar study, Engel and Voshell (2002) showed that data collected by volunteers and scientists agreed very closely (96 %). Both studies concluded that volunteers could collect reliable data and make assessments comparable to those made by highly trained professionals (Fore and others 2001; Engel and Voshell 2002). Another study examining forest data (structural changes in dominant tree species) from one of the largest volunteer monitoring programs in the mid-western United States found no differences in accuracy of data collected by volunteers and botanists (Brandon and others 2003). Volunteer accuracy for 12 (out of 15) genera was 80 % or higher, but the study detected that species in two genera posed greater difficulties for volunteers and there were large discrepancies in some variables (Brandon and others 2003). Wilson and others (2006), in comparisons of vegetation scores made by scientists and volunteers, found that both types of participants were consistent for the measures of canopy continuity, proportion of native species in the canopy, and amounts of leaf litter, but were inconsistent for variables such as canopy width and proportion of natives in the ground cover. These results are analogous to ours that showed lower agreement for some habitat attributes in comparison to others. Generally, we found greater agreement with benchmark scores in percent foliage cover scores in the mid and lower storeys than other habitat variables, and this was consistent between scientists and volunteers. 
These findings suggest that some attributes are more difficult to estimate than others, or that some measures are more subjective than others.

While the quality of data collected by some volunteers can be just as high as those collected by some scientists, the results of our study have implications for monitoring and evaluation of the outcomes of rehabilitation programs using the methods investigated here. One important consequence is that without validation of the accuracy of measures (scientist or otherwise), one would not know whether apparent changes over time indicated real changes in the response being monitored or human error due to observer bias. Where expected norms (e.g., threshold or benchmark values) for monitoring rehabilitation success have been established a priori, this could result in a scenario where the rehabilitation project is deemed ‘successful’ by some but ‘unsuccessful’ by others. Given the variability among observers in our study, this scenario is highly likely for the types of data used in this study. If it were to happen in a ‘real’ progress-monitoring scheme, two situations are possible, each with two possible outcomes. (1) If the rehabilitation really had been successful, some people would agree without adverse consequences. On the other hand, some would disagree that success had been achieved, and resources would continue to be used to confirm the outcome by monitoring. Resources might also be expended unnecessarily on further on-ground works to supplement the original effort. (2) If the rehabilitation really had not been successful, some people would agree, lessons could be learnt, and work on rehabilitating the habitats could continue. However, some would declare wrongly that success had been achieved. In this situation, there is an environmental cost because the habitat would remain degraded. To avoid perverse outcomes, quality data and agreement between collectors are necessary.

Calibration of scores is one option that could improve data quality. One approach that would be particularly well suited to the types of data collected in our investigation is the ‘Adelaide technique’ (Andrew and others 1979). Originally developed for estimating the biomass of shrubs in grazed paddocks, the technique involves clipping a branch (a hand-held unit), and scoring each shrub of interest in terms of the number of ‘equivalent-units’ that it is estimated to contain. The number of equivalent-units is then converted to a value (g dry weight) via a calibration curve. The calibration curve is established by estimating the number of equivalent-units contained in various ‘calibration shrubs’. Calibration shrubs are harvested and the foliage stripped, dried and weighed. A calibration curve is established by regressing the weight of the calibration shrubs against the number of equivalent-units. In like manner, the leaf image database could be used to produce a calibration curve before participants estimated leaf damage in the field to obtain more valid leaf damage scores. This procedure would involve each participant estimating damage to the leaves used to compile the leaf image database, and establishing a line of best fit to the data. Subsequent leaf damage estimates recorded by participants in the field (i.e., ‘real’ monitoring data) could be adjusted using each observer’s personal calibration line. Similarly, individualized calibration curves could be employed for habitat attributes like those used in our investigation. Whether additional resources should be spent on calibration techniques or observer training in the field depends on an individual observer’s accuracy and consistency in field estimation. For observers who are inaccurate but consistently so, training may improve their accuracy in an absolute sense but is unnecessary if a calibration technique is applied to their consistent over or under-estimates. 
For observers who are, on average, accurate but quite inconsistent, calibration techniques are futile and only training can improve their consistency.
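The personal calibration line described above can be sketched as follows. The sketch assumes an ordinary least-squares line that predicts the benchmark value from the observer's estimate, fitted on the leaf image database and then applied to later field estimates; the function names and example values are hypothetical, and clamping adjusted values to 0–100 % is an added assumption.

```python
def fit_calibration_line(observer_estimates, benchmark_values):
    """Least-squares intercept and slope predicting benchmark from estimate."""
    n = len(observer_estimates)
    if n != len(benchmark_values) or n < 2:
        raise ValueError("need two equal-length sequences of at least 2 values")
    mean_x = sum(observer_estimates) / n
    mean_y = sum(benchmark_values) / n
    sxx = sum((x - mean_x) ** 2 for x in observer_estimates)
    if sxx == 0:
        raise ValueError("observer estimates must not all be identical")
    sxy = sum((x - mean_x) * (y - mean_y)
              for x, y in zip(observer_estimates, benchmark_values))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return intercept, slope


def calibrate(field_estimate, intercept, slope, lower=0.0, upper=100.0):
    """Adjust a field estimate with the observer's line, clamped to [lower, upper]."""
    adjusted = intercept + slope * field_estimate
    return min(upper, max(lower, adjusted))


# Hypothetical observer who consistently over-estimates damage by 10 points.
calibration_estimates = [20, 30, 50, 70]   # observer's scores on database leaves
calibration_benchmarks = [10, 20, 40, 60]  # image-analysis values for the same leaves
intercept, slope = fit_calibration_line(calibration_estimates,
                                        calibration_benchmarks)
adjusted_field_value = calibrate(45, intercept, slope)
print(intercept, slope, adjusted_field_value)
```

This correction helps only the first kind of observer described above: a consistent bias is removed by the line, whereas scatter around the line (inconsistency) is left unchanged.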

Another technique that could be utilized to train or calibrate assessors conducting habitat assessments is modern, high-resolution 3D laser scanning (terrestrial LiDAR). These devices (e.g., Z + F Imager 5006 and Leica C5 ScanStation) have been employed to calculate metrics such as canopy openness and leaf area index in forest stands (e.g., Danson and others 2007; Seidel and others 2012). Compared to the Adelaide technique (discussed above), an advantage of terrestrial LiDAR is that it is non-destructive, which makes it a logical choice for monitoring forest health, plant growth and ecosystem restoration. But a major advantage of calculating the desired structural parameters from the scanner data is the non-subjective character of the data processing. As Seidel and others (2012) note, this is a major step forward in the direction of objective methods for canopy analysis. Removing the subjectivity could be enough of an advantage to see land managers abandon visual methods entirely. However, the time taken to perform multiple scans, the need for trained personnel and the specialized hardware and software to model the structure of different layers may put this out of reach of many land management agencies. Visual estimates like those used in our study can be completed in a matter of minutes and for minimal cost, while scanning and post-scanning processing can take several hours, which comes at a relatively high pecuniary cost (JG unpublished data). Nonetheless, we see the comparison of visual estimates with those of ground-based LiDAR scanning as a worthwhile avenue for future research.

A second modern technology offering potential to train data collectors is the internet. Online training tools for measuring percent plant cover have reduced variability between trained volunteers and professionals (e.g., Newman and others 2010). Ashcroft and others (2012) also used a training video posted to the video sharing website YouTube to train volunteers in invertebrate collecting methods. These types of training have additional benefits in that they can reach remote participants, the training is flexible and can be ‘revisited’ if required, and targeted support can be provided where necessary or to those who need it. However, there are also risks involved with this type of training because some variables or tasks may be too difficult. In our study, an online leaf image database would have worked well as a training tool, but capturing the ‘realness’ of habitat variables would be difficult to simulate. A compromise would be to have online training tools complemented with rigorous, repeated and hands-on, in-person training (Newman and others 2010).

Differences in agreement between scientists and volunteers could be explained by the greater experience of scientists in the use of such techniques as part of their everyday employment. This aside, the data we collected illustrate that assessors, both volunteers and professionals, are on a continuum of both aptitude and experience, and the challenge for any type of training is the need to consider this range. Providing participants with the opportunity to collect ‘practice data’ before ‘real data’ are collected might be one useful method for creating a more even spread of ability and familiarity. While we did provide some practice during the training sessions, this may not have been enough, so it is pertinent to ask: how much practice is enough? Unfortunately, our study was not designed to answer this question, but it is worth addressing for these types of data in the future. Newman and others (2003) found that volunteer proficiency increased with experience when counting deer droppings and entrances to the underground chambers of badgers. Thompson and Mapstone (1997) also found that careful training and retraining of observers in collecting data on reef fishes reduced observer-related biases. We chose not to consider differing levels of experience as a potential confounding factor at the outset of the study because the volunteer monitoring programs in our study region do not screen people who collect data based on previous experience, and so our experiments were designed to sample these potential participants. Regardless of the reason for differences in accuracy among participants, managers of monitoring programs should conduct preliminary screening to identify individuals who require further training. This screening process would reduce costs by providing targeted training. Screening could also exclude people from particular monitoring activities from the very beginning.

The disagreement between estimates and benchmarks among participants could be explained by ambiguity in our instructions, or by differences in the way individuals interpreted them. Clarity of instructions has been hypothesized to underlie the disparity in accuracy between scientists and volunteers in surveys of similar variables to assess riparian condition (Wilson and others 2006). From our study, we cannot tell whether accuracy could be improved by making instructions clearer or by modifying their delivery in some way. Prior to the experiments, all participants were given the same induction in terms of the type and duration of training and familiarization with the sampling techniques used. In the assessments of leaf damage, we had participants use a leaf damage key because these types of aids have been shown to increase accuracy and reduce inter- and intra-observer variability (Forbes and Korva 1994; Gilbert and Gregoire 2003). Our results suggest that the use of a leaf damage key may not have made any difference to accuracy or inter-observer variability. Interpretation of the instructions we gave ultimately rested upon the individual, and how instructions are interpreted is probably linked with individual experience. Trials of variants on instructions would be beneficial in exploring style of instruction delivery as a method of improving data accuracy. This would require an iterative process using different variants of instructions coupled with purposefully designed surveys to determine what worked best. We chose not to pursue this because the purpose of the study was to evaluate a sample of participants currently engaged in monitoring methods developed by the local management authority. There may not be a single ‘best way’ to provide instruction. Learning theory suggests that people learn new skills in different ways, and those who give instruction should appreciate the existence of different learning styles and offer variety in their training approaches (Gardner 1993).

To maximize data quality, less subjective measures could also be sought. For example, coarse woody debris could be weighed (Oliver and others 2006), percent foliage cover could be recorded using a densiometer (Lemmon 1956), and leaf damage could be objectively sampled using computer imaging (Gilbert and Gregoire 2003). Vos and others (2000) argued that field methods should involve as many objective elements as possible, avoid subjective interpretation in the field, and require counts rather than estimates. A potential obstacle with these approaches, especially for volunteer groups, is that quantitative methods often require specialist equipment and training in the use of instruments. Additional training would doubtless add to the costs of monitoring programs, but it would also deliver benefits. For example, decision makers and those who are accountable for investing in rehabilitation initiatives would have greater faith in the findings and more trust in the rehabilitation process. Moreover, training volunteers to operate specialist equipment may create greater commitment to the project. Volunteers would also add to their skill base and increase their employability. In a study examining the benefits of volunteer involvement in a mammal monitoring program, Newman and others (2003) found that 4.5 % of 155 participants changed career, retraining in biology (often after years in a successful alternative career).

Lastly, while we note that measuring the environmental outcomes of ecosystem rehabilitation is important for accounting purposes, especially where public money is used (Curtis and others 1998), it is perhaps too simple to reason that the success or failure of a restoration project will be determined by the degree to which data collected by volunteers agree with those of scientists. Volunteers in environmental projects using self-funded volunteer labor have been valued for more than just the potential for cost savings. Volunteers report high levels of satisfaction of many different kinds (Miles and others 1998). For example, participants gain educational benefits (Newman and others 2003; Boudreau and Yan 2004), there is potential to ‘reunite science and society’ (Carr 2004), the public can be inspired to appreciate nature through hands-on experiences (Cohn 2008) and participants can build a sense of ownership in both the program and place (Boylen and others 2004). These unmeasured benefits are a success in themselves. It may be far better to treat these benefits as a measure of success, since they will engender a society that protects the environment in the first place.

Conclusions

Considering the results presented in our study, there is no blanket justification for the ‘condemnation’ of volunteer-collected data, as occurred in 1993 when the use of volunteers in the US National Biological Survey was prohibited (Newman and others 2003). Data collected by volunteers can agree with benchmark values to a similar degree as data collected by scientists. The degree of agreement depends not only on the particular habitat feature being measured, but also on the individual’s ability to estimate the attribute. Regardless of who collects data for monitoring, quality control measures need to be in place to ensure that the data are acceptable.

References

Agresti A (1984) Analysis of ordinal categorical data. Wiley, New York

Andrew MH, Noble IR, Lange RT (1979) A non-destructive method for estimating the weight of forage on shrubs. Australian Rangeland Journal 1:225–231

Anonymous (1997) New South Wales State of the Environment 1997. Environment Protection Authority, Sydney, NSW

Anonymous (2003) Integrated Catchment Management Plan for the Hunter Catchment 2002. NSW Department of Land and Water Conservation

Anonymous (2006) Hunter-Central Rivers Catchment Management Authority annual report 2005–2006. http://www.hcr.cma.nsw.gov.au/pubs/annualreport_0506.pdf. Accessed 17 Nov 2009

Anonymous (2007) Keeping a project journal—guidelines for planning, monitoring and evaluating a land management project. Hunter-Central Rivers Catchment Management Authority. http://www.hcr.cma.nsw.gov.au/ep_resources.php3. Accessed 17 Nov 2009

Ashcroft M, Gollan JR, Batley M (2012) Combining citizen science, bioclimatic envelope models and observed habitat preferences to determine the distribution of an inconspicuous, recently detected introduced bee (Halictus smaragdulus Vachal Hymenoptera: Halictidae) in Australia. Biological Invasions 14:515–527

Boudreau SA, Yan ND (2004) Auditing the accuracy of a volunteer-based surveillance program for an aquatic invader Bythotrephes. Environmental Monitoring and Assessment 91:17–26

Boylen CW, Howe EA, Bartkowski JS, Eichler LW (2004) Augmentation of a long-term monitoring program for Lake George, NY by citizen volunteers. Lake and Reservoir Management 20(2):121–129

Brandon A, Spyreas G, Molano-Flores B, Carroll C, Ellis J (2003) Can volunteers provide reliable data for forest vegetation surveys? Natural Areas Journal 23:254–262

Brooks SS, Lake PS (2007) River restoration in Victoria, Australia: Change is in the wind, and none too soon. Restoration Ecology 15(3):584–591

Carr AJL (2004) Why do we all need community science? Society and Natural Resources 17:841–849

Cohn JP (2008) Citizen science: can volunteers do real research? BioScience 58(3):192–197

Crall AW, Newman GJ, Jarnevich CS, Stohlgren TJ, Waller DM, Graham J (2010) Improving and integrating data on invasive species collected by citizen scientists. Biological Invasions 12(10):3419–3428

Curtis A, Robertson A, Race D (1998) Lessons from recent evaluations of natural resource management programs in Australia. Australian Journal of Environmental Management 5:109–119

Danson FM, Hetherington D, Morsdorf F, Koetz B, Allgöwer B (2007) Forest canopy gap fraction from terrestrial laser scanning. IEEE Geoscience and Remote Sensing Letters 4:157–160

Delaney DG, Sperling CD, Adams CS, Leung B (2008) Marine invasive species: validation of citizen science and implications for national monitoring networks. Biological Invasions 10(1):117–128

Engel SR, Voshell JR (2002) Volunteer biological monitoring: can it accurately assess the ecological condition of streams? American Entomologist 48:164–177

Environmental Protection Agency, EPA (2002) Methods for evaluating wetland condition: volunteers and wetland biomonitoring. EPA, Washington, D.C

Forbes GA, Korva KT (1994) The effect of using a Horsfall–Barratt scale on precision and accuracy of visual estimation of potato late blight severity in the field. Plant Pathology 43:675–682

Fore LS, Paulsen K, O’Laughlin K (2001) Assessing the performance of volunteers in monitoring streams. Freshwater Biology 46:109–123

Gardner H (1993) Multiple intelligences: the theory in practice. Basic Books, New York

Gibbons P, Freudenberger D (2006) An overview of methods used to assess vegetation condition at the scale of the site. Ecological Management and Restoration 7(S1):S10–S17

Gilbert M, Gregoire JC (2003) Visual, semi-quantitative assessments allow accurate estimates of leafminer population densities: an example comparing image processing and visual evaluation of damage by the horse chestnut leafminer Cameraria ohridella (Lep., Gracillariidae). Journal of Applied Entomology 127:354–359

Giller PS (2005) River restoration: seeking ecological standards, Editor’s introduction. Journal of Applied Ecology 42:201–207

Gollan JR, Ashcroft MB, Cassis G, Donnelly AP, Lassau SL (2009) Testing common habitat based surrogates in a semi arid rangeland. Biodiversity and Conservation 18:1147–1159

Gorrod EJ, Keith DA (2009) Observer variation in field assessments of vegetation condition: implications for biodiversity conservation. Ecological Management and Restoration 10(1):31–40

Jansen A, Robertson A, Thompson L, Wilson A (2004) Development and application of a method for the rapid appraisal of riparian condition. River management technical guideline no. 4, Land and Water Australia, Canberra, ACT

Kershner JL (1997) Monitoring and adaptive management. In: Williams JE, Dombeck MP, Wood CA (eds) Watershed restoration: principles and practices. American Fisheries Society Special Publication, Bethesda, pp 116–134

King WH, Woolmington ER (1960) The role of the river in the development of settlement in the lower Hunter Valley. Australian Geographer 8:3–16

Landis JR, Koch GG (1977) The measurement of observer agreement for categorical data. Biometrics 33:159–174

Lemmon PE (1956) A spherical densiometer for estimating forest overstorey density. Forest Science 2:314–332

McElhinny C, Gibbons P, Brack C, Bauhus J (2005) Forest and woodland stand structural complexity: its definition and measurement. Forest Ecology and Management 218:1–24

McLaren MA, Cadman MD (1999) Can novice volunteers provide credible data for bird surveys requiring song identification? Journal of Field Ornithology 70(4):481–490

Middleton JV (2001) The stream doctor project: community-driven stream restoration. BioScience 51:293–296

Miles I, Sullivan WC, Kuo FE (1998) Ecological restoration volunteers: the benefits of participation. Urban Ecosystems 2(1):27–41

Motulsky HJ (2003) Prism 4 statistics guide—statistical analyses for laboratory and clinical researchers. GraphPad Software Inc., San Diego

Nerbonne JF, Vondracek B (2003) Volunteer macroinvertebrate monitoring: assessing training needs through examining error and bias in untrained volunteers. Journal of the North American Benthological Society 22(1):152–163

Newman C, Buesching CD, Macdonald W (2003) Validating mammal monitoring methods and assessing the performance of volunteers in wildlife conservation–“Sed quis custodiet ipsos custodies?”. Biological Conservation 113:189–197

Newman G, Crall A, Laituri M, Graham J, Stohlgren T, Moore JC, Kodrich K, Holfelder KA (2010) Teaching citizen science skills online: implications for invasive species training programs. Applied Environmental Education and Communication 9(4):276–286

Noss RF (1990) Indicators for monitoring biodiversity: a hierarchical approach. Conservation Biology 4:355–364

Oliver I, Pearce S, Greenslade PM, Britton DR (2006) Contribution of paddock trees to the conservation of terrestrial invertebrate biodiversity within grazed native pastures. Austral Ecology 31:1–12

Ruiz-Jaen MC, Mitchell Aide T (2005) Restoration success: how is it being measured? Restoration Ecology 13(3):569–577

Scion Corporation (2000) Scion Image for Windows. http://www.scioncorp.com/pages/scion_image_windows.htm. Accessed 17 Nov 2009

Seidel D, Fleck S, Leuschner C (2012) Analyzing forest canopies with ground-based laser scanning: A comparison with hemispherical photography. Agricultural and Forest Meteorology 154–155:1–8

Stadel AV, Nelson JG (1995) The role of citizen participation in ecosystem monitoring. In: Herman T, Bondrup-Nelson S, Martin Willison JH, Munro NWP (eds). Second International conference on science and the management of protected areas. Dalhousie University, Halifax, Nova Scotia, Canada, pp 447–453

Stokes P, Havas M, Bridges T (1990) Public participation and volunteer help in monitoring programs: An assessment. Environmental Monitoring and Assessment 15:225–229

Story R (1963) Vegetation of the Hunter Valley. Commonwealth Scientific and Industrial Research Organization, Land Research Series Rep. No. 8

Ten Kate K, Bishop J, Bayon R (2004) Biodiversity offsets: views, experience, and the business case—executive summary. IUCN, Gland, Switzerland and Cambridge, UK and Insight Investment, London, UK. http://www.forest-trends.org/publication_details.php?publicationID=661. Accessed 17 Nov 2009

Thompson AA, Mapstone BD (1997) Observer effects and training in underwater visual surveys of reef fishes. Marine Ecology Progress Series 154:53–63

Underwood AJ, Chapman MG (2002) Conservation of coastal organisms depends on scientific realism, not community ‘monitoring’. In: Lunney D, Dickman C, Burgin S (eds) A clash of paradigms: community and research-based conservation. Royal Zoological Society of New South Wales, Mosman, pp 20–37

USDA (2003) Conservation reserve program. US Department of Agriculture, Farm Service Agency, Washington, DC. http://www.fsa.usda.gov/FSA/webapp?area=homeandsubject=coprandtopic=cr. Accessed 17 Nov 2009

Vos P, Meelis E, Keurs WJT (2000) A framework for the design of ecological monitoring programs as a tool for environmental and nature management. Environmental Monitoring and Assessment 61:317–344

Wells SM (1995) Reef assessment and monitoring using volunteers and non-professionals. Publication of Rosenstiel School of Marine and Atmospheric Science, University of Miami, Miami, p 57

Whitelaw G, Vaughan H, Craig B, Atkinson D (2003) Establishing the Canadian community monitoring network. Environmental Monitoring and Assessment 88:409–418

Wilson A, Jansen A, Curtis A, Robertson A (2006) Measuring riparian condition: a comparison of assessments by landholders and scientists. Ecological Management and Restoration 7:123–129

Zar J (1999) Biostatistical analysis, 4th ed. Prentice Hall, Upper Saddle River

Acknowledgments

This project was supported by the NSW Environmental Trust (Grant 2003/RD/001) to L.W. The Coal and Allied Community Trust also provided financial support as part of the BugWise project. We thank Conservation Volunteers Australia, Green Corp volunteers and Australian Museum scientists for their time in collecting data. The Upper Hunter River Rehabilitation Initiative provided in-kind support. M Bulbert and H Smith assisted in the field. We also thank three anonymous reviewers for providing comments that led to improvements to the manuscript.

Conflict of interest

All work presented complied with current Australian law and the authors declare that they have no conflict of interest.

Cite this article

Gollan, J., de Bruyn, L.L., Reid, N. et al. Can Volunteers Collect Data that are Comparable to Professional Scientists? A Study of Variables Used in Monitoring the Outcomes of Ecosystem Rehabilitation. Environmental Management 50, 969–978 (2012). https://doi.org/10.1007/s00267-012-9924-4

DOI: https://doi.org/10.1007/s00267-012-9924-4