Abstract

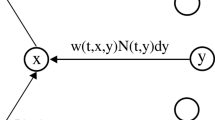

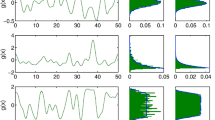

We introduce an extension of the classical neural field equation in which the dynamics of the synaptic kernel follow a standard Hebbian learning rule (synaptic plasticity). We consider a continuous network in which changes in the weight kernel occur within a specified time window. A novelty of this model is that it admits synaptic weight decrease as well as the usual weight increase resulting from correlated activity. The resulting equation leads to a delay-type rate model, for which we investigate the existence and stability of solutions such as the rest state, bumps, and traveling fronts. We derive some relations between the length of the time window and the bump width. In addition, we show the effect of the delay parameter on the stability of solutions. Numerical simulations of the solutions and their stability are also presented.

Acknowledgments

It is a pleasure for the authors to thank A. Abbasian for his useful comments on the biological aspects of the problem. This research was supported in part by a grant from IPM (No. 91920410). The third author was also partially supported by the National Elites Foundation.

Appendix: Proof of Theorem 1

Proof

We first claim that every root \(\lambda \) of (9) with \({\mathrm {Re}}\lambda \ge 0\) is real, i.e., \({\mathrm {Im}}\lambda =0\). Suppose, to the contrary, that \(\lambda =r+{\mathrm {i}}m\) with \(m\ne 0\) and \(r\ge 0\). Let \(\theta =\alpha \gamma \tau ^{-1} f(\overline{u})^2f'(\overline{u})(W+\widehat{w}_m(\xi ))\) and take the imaginary part of (9):

Since \(m\ne 0\),

Therefore we obtain

which is a contradiction (note that \(\theta \ge 0\)).

By the claim above, any root of (9) with \({\mathrm {Re}}\lambda \ge 0\) is real. Since the left-hand side of (9) is an increasing function of \(\lambda \in [0,\infty )\), stability requires its value at \(\lambda =0\) to be positive, i.e.,

which yields the stated stability conditions in each case. (Note that \(\widehat{w}_m(\xi )\le W\) when the synaptic weight kernel is positive everywhere, since then \(|\widehat{w}_m(\xi )|\le \int |w_m(x)|\,{\mathrm d}x=W\); in the other case, the Mexican-hat kernel \(w_m(x)=\frac{1}{4}(1-|x|){\mathrm {e}}^{-|x|}\), we have \(W=0\) and \(\widehat{w}_m(\xi )\le \frac{1}{4}\).) Moreover, in the first case \(\overline{u}\) is a root of \(g(u)=\big (1-\kappa {\mathrm {e}}^{-\gamma \delta f(u)^2}\big )Wf(u)-u\), and the stability condition requires \(g'(\overline{u})<0\). This holds at the smallest and the largest roots (both of which are positive), since \(g(0)>0\) and \(g(u)<0\) for all sufficiently large \(u\). More generally, the constant steady states are alternately stable and unstable. \(\square \)
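The kernel bounds quoted in the note can be checked numerically. The sketch below approximates \(\widehat{w}_m(\xi )\) for the Mexican-hat kernel by Simpson quadrature and compares it with the closed form \(\widehat{w}_m(\xi )=\xi ^2/(1+\xi ^2)^2\), which follows by direct integration and peaks at \(\xi =1\) with value \(\frac{1}{4}\). It assumes the Fourier convention \(\widehat{w}(\xi )=\int w(x){\mathrm {e}}^{-{\mathrm {i}}\xi x}\,{\mathrm d}x\) (so that \(W=\widehat{w}(0)\)); the Gaussian kernel \({\mathrm {e}}^{-x^2}\) is a hypothetical stand-in for the positive-kernel case, and the grid and truncation cutoffs are illustrative choices.

```python
import math


def w_m(x):
    """Mexican-hat kernel from the appendix: w_m(x) = (1/4)(1 - |x|) e^{-|x|}."""
    ax = abs(x)
    return 0.25 * (1.0 - ax) * math.exp(-ax)


def w_gauss(x):
    """Hypothetical positive kernel (Gaussian), used only for comparison."""
    return math.exp(-x * x)


def simpson(func, a, b, n=4000):
    """Composite Simpson rule for func on [a, b] with an even number n of subintervals."""
    if n % 2:
        n += 1
    h = (b - a) / n
    total = func(a) + func(b)
    for k in range(1, n):
        total += (4 if k % 2 else 2) * func(a + k * h)
    return total * h / 3.0


def kernel_hat(kernel, xi, cutoff):
    """Fourier transform of an even kernel: 2 * int_0^cutoff kernel(x) cos(xi x) dx.

    The cutoff truncates the infinite integral; both kernels decay fast enough
    that the neglected tail is far below the quadrature error.
    """
    return 2.0 * simpson(lambda x: kernel(x) * math.cos(xi * x), 0.0, cutoff)


xis = [0.05 * k for k in range(101)]  # sample xi in [0, 5]

# Mexican-hat case: W = w_hat(0) should vanish and w_hat should peak at 1/4.
W_m = kernel_hat(w_m, 0.0, 40.0)
hat_m = [kernel_hat(w_m, xi, 40.0) for xi in xis]
closed_form = [xi**2 / (1.0 + xi**2) ** 2 for xi in xis]

# Positive-kernel case: w_hat(xi) <= W, with equality only at xi = 0.
W_g = kernel_hat(w_gauss, 0.0, 10.0)
hat_g = [kernel_hat(w_gauss, xi, 10.0) for xi in xis]

peak = max(hat_m)
print(f"Mexican hat: W = {W_m:.1e}, max w_hat = {peak:.6f} at xi = {xis[hat_m.index(peak)]:.2f}")
print(f"max |numeric - closed form| = {max(abs(a - b) for a, b in zip(hat_m, closed_form)):.1e}")
print(f"Gaussian: W = {W_g:.6f}, max w_hat / W = {max(hat_g) / W_g:.6f}")
```

Running it should report \(W\) at roundoff level for the Mexican hat and a maximum of \(\widehat{w}_m\) close to 0.25 at \(\xi =1\), while the Gaussian transform never exceeds its total weight.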
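The alternation of stable and unstable constant steady states in the positive-kernel case can be illustrated with a small root-finding sketch. It assumes a sigmoidal firing rate \(f(u)=1/(1+{\mathrm {e}}^{-\beta (u-h)})\) and hypothetical parameter values (\(\beta =20\), \(h=0.4\), \(\kappa =0.5\), \(\gamma \delta =1\), \(W=1\)); none of these come from the paper. It scans \(g(u)=\big (1-\kappa {\mathrm {e}}^{-\gamma \delta f(u)^2}\big )Wf(u)-u\) for sign changes, refines each root by bisection, and classifies it by the sign of a finite-difference estimate of \(g'(\overline{u})\), following the criterion stated in the proof.

```python
import math

# Hypothetical parameters chosen only for illustration (not from the paper).
BETA, H = 20.0, 0.4            # steepness and threshold of an assumed sigmoid f
KAPPA, GAMMA_DELTA = 0.5, 1.0  # kappa and the product gamma * delta
W = 1.0                        # total synaptic weight (positive-kernel case)


def f(u):
    """Assumed sigmoidal firing-rate function."""
    return 1.0 / (1.0 + math.exp(-BETA * (u - H)))


def g(u):
    """g(u) = (1 - kappa e^{-gamma delta f(u)^2}) W f(u) - u; steady states are its roots."""
    fu = f(u)
    return (1.0 - KAPPA * math.exp(-GAMMA_DELTA * fu * fu)) * W * fu - u


def bisect(a, b, tol=1e-12):
    """Refine a sign change of g on [a, b] by bisection."""
    ga = g(a)
    while b - a > tol:
        m = 0.5 * (a + b)
        gm = g(m)
        if (gm > 0) == (ga > 0):
            a, ga = m, gm
        else:
            b = m
    return 0.5 * (a + b)


def steady_states(u_max=2.0, n=20000):
    """Locate roots of g on [0, u_max] by scanning a uniform grid for sign changes."""
    step = u_max / n
    roots = []
    for k in range(n):
        a, b = k * step, (k + 1) * step
        if g(a) * g(b) < 0:
            roots.append(bisect(a, b))
    return roots


def dg(u, eps=1e-7):
    """Central-difference approximation of g'(u)."""
    return (g(u + eps) - g(u - eps)) / (2.0 * eps)


roots = steady_states()
for r in roots:
    slope = dg(r)
    label = "stable" if slope < 0 else "unstable"
    print(f"u_bar = {r:.6f}, g'(u_bar) = {slope:+.4f}, {label}")
```

For these parameters the scan finds three steady states whose slopes are negative, positive, and negative, i.e., stable, unstable, stable; the smallest and largest roots are the stable ones, as the argument above predicts.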

Cite this article

Fotouhi, M., Heidari, M. & Sharifitabar, M. Continuous neural network with windowed Hebbian learning. Biol Cybern 109, 321–332 (2015). https://doi.org/10.1007/s00422-015-0645-7

DOI: https://doi.org/10.1007/s00422-015-0645-7