Abstract

We develop a new multi-scale framework flexible enough to solve a number of problems involving embedding random sequences into random sequences. Grimmett et al. (Random Str Algorithm 37(1):85–99, 2010) asked whether there exists an increasing \(M\)-Lipschitz embedding from one i.i.d. Bernoulli sequence into an independent copy with positive probability. We give a positive answer for large enough \(M\). A closely related problem is to show that two independent Poisson processes on \(\mathbb R \) are roughly isometric (or quasi-isometric). Our approach also applies in this case answering a conjecture of Szegedy and of Peled (Ann Appl Probab 20:462–494, 2010). Our theorem also gives a new proof to Winkler’s compatible sequences problem. Our approach does not explicitly depend on the particular geometry of the problems and we believe it will be applicable to a range of multi-scale and random embedding problems.

1 Introduction

With his compatible sequences and clairvoyant demon scheduling problems Winkler introduced a fascinating class of dependent or “co-ordinate” percolation type problems (see e.g. [2, 4, 10, 24]). These, and other problems in this class, can be interpreted either as embedding one sequence into another according to certain rules or as oriented percolation problems in \(\mathbb Z ^2\) where the sites are open or closed according to random variables on the co-ordinate axes. A natural question of this class posed by Grimmett, Liggett and Richthammer [14] asks whether there exists a Lipschitz embedding of one Bernoulli sequence into another. The following theorem answers the main question of [14].

Theorem 1

Let \(\{X_i\}_{i\in \mathbb Z }\) and \(\{Y_i\}_{i\in \mathbb Z }\) be independent sequences of independent identically distributed \(\hbox {Ber}(\frac{1}{2})\) random variables. For sufficiently large \(M\) almost surely there exists a strictly increasing function \(\phi :\mathbb Z \rightarrow \mathbb Z \) such that \(X_i=Y_{\phi (i)}\) and \(1\le \phi (i)-\phi (i-1)\le M\) for all \(i\).
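As a finite-window sanity check of what Theorem 1 asks for, the following sketch decides by dynamic programming whether a finite word `x` admits a strictly increasing map into a finite word `y` with matching symbols and consecutive gaps between 1 and `M`. The function name `lipschitz_embeds` and the convention that the first image may be any position of `y` are assumptions made for illustration; this is not the multi-scale argument of the paper.

```python
def lipschitz_embeds(x, y, M):
    """Return True if there is a strictly increasing phi with
    x[i] == y[phi(i)] and 1 <= phi(i) - phi(i-1) <= M for all i >= 1.

    Finite analogue only: phi(0) may be any index of y (an assumption).
    """
    if not x:
        return True
    # reachable[j]: some valid partial map sends the current symbol of x to j
    reachable = [x[0] == yj for yj in y]
    for xi in x[1:]:
        nxt = [False] * len(y)
        for j, yj in enumerate(y):
            if yj != xi:
                continue
            lo = max(0, j - M)
            # the previous image must lie in [j - M, j - 1]
            nxt[j] = any(reachable[lo:j])
        reachable = nxt
    return any(reachable)
```

The running time is of order `len(x) * len(y) * M`, which is adequate for experimenting with short random words.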

The original question of [14] was slightly different asking for a positive probability on the natural numbers with the condition \(\phi (0)=0\), which is implied by our theorem (and is equivalent by ergodic theory considerations). Among other results they showed that Theorem 1 fails in the case of \(M=2\). In a series of subsequent works Grimmett, Holroyd and their collaborators [6, 10–13, 16] investigated a range of related problems including when one can embed \(\mathbb Z ^d\) into site percolation in \(\mathbb Z ^D\) and showed that this was possible almost surely for \(M=2\) when \(D>d\) and the site percolation parameter was sufficiently large but almost surely impossible for any \(M\) when \(D \le d\). Recently Holroyd and Martin showed that a comb can be embedded in \(\mathbb Z ^2\). Another important series of work in this area involves embedding words into higher dimensional percolation clusters [3, 5, 20, 21]. Despite this impressive progress the question of embedding one random sequence into another remained open. The difficulty lies in the presence of long strings of ones and zeros on all scales in both sequences which must be paired together.

In a similar vein is the question of a rough (or quasi-) isometry of two independent Poisson processes. Informally, two metric spaces are roughly isometric if their metrics are equivalent up to multiplicative and additive constants. The formal definition, introduced by Gromov [15] in the case of groups and more generally by Kanai [17], is as follows.

Definition 1.1

We say two metric spaces \(X\) and \(Y\) are roughly isometric with parameters \((M,D,C)\) if there exists a mapping \(T:X\rightarrow Y\) such that for any \(x_1, x_2 \in X\),

$$\begin{aligned} \frac{1}{M}d_X(x_1,x_2)-D\le d_Y(T(x_1),T(x_2))\le M d_X(x_1,x_2)+D, \end{aligned}$$

and for all \(y\in Y\) there exists \(x\in X\) such that \(d_Y(T(x),y)\le C\).
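For finite point sets on the line, the two requirements of Definition 1.1 can be checked directly. The sketch below assumes the two-sided bound in the form \(\frac{1}{M}|t-s|-D\le |T(t)-T(s)|\le M|t-s|+D\) (the form used in Lemma 2.4) together with the \(C\)-density condition; the function name and the representation of \(T\) as a dictionary are illustrative choices.

```python
def is_rough_isometry(T, X, Y, M, D, C):
    """Check the (M, D, C) rough-isometry conditions for finite subsets of R.

    T is a dict sending each point of X to a point of Y (hypothetical
    representation chosen for this sketch).
    """
    for x1 in X:
        for x2 in X:
            dx = abs(x1 - x2)
            dy = abs(T[x1] - T[x2])
            # distances are distorted by at most a factor M and an additive D
            if not (dx / M - D <= dy <= M * dx + D):
                return False
    # every point of Y lies within distance C of the image of T
    return all(any(abs(T[x] - y) <= C for x in X) for y in Y)
```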

Originally Abért [1] asked whether two independent infinite components of bond percolation on a Cayley graph are roughly isometric. Szegedy asked the same question when these sets are independent Poisson processes in \(\mathbb R \) (see [22] for a fuller description of the history of the problem). The most important progress on this question is by Peled [22] who showed that Poisson processes on \([0,n]\) are roughly isometric with parameter \(M=\sqrt{\log n}\). The question of whether two independent Poisson processes on \(\mathbb R \) are roughly isometric for fixed \((M,D,C)\) was the main open question of [22]. We prove that this is indeed the case.

Theorem 2

Let \(X\) and \(Y\) be independent Poisson processes on \(\mathbb R \) viewed as metric spaces. There exists \((M,D,C)\) such that almost surely \(X\) and \(Y\) are \((M,D,C)\)-roughly isometric.

Again the challenge is to find a good matching on all scales, in this case to the long gaps in each of the point processes with ones of proportional length in the other. The isometries we find are also weakly increasing answering a further question of Peled [22]. Results of [22] show that Theorem 2 applies to one dimensional site percolation as well.

Our final result is the compatible sequence problem of Winkler. Given two independent sequences \(\{X_i\}_{i\in \mathbb N }\) and \(\{Y_i\}_{i\in \mathbb N }\) of independent identically distributed \(\hbox {Ber}(q)\) random variables we say they are compatible if after removing some zeros from both sequences, there is no index with a 1 in both sequences. Equivalently there exist increasing subsequences \(k_1,k_2,\ldots ,\) (respectively \(k_1^{\prime }\ldots \)) such that if \(X_j=1\) then \(j=k_i\) for some \(i\) (resp. if \(Y_j=1\) then \(j=k_i^{\prime }\)) so that for all \(i\), we have \(X_{k_i}Y_{k_i^{\prime }}=0\). We give a new proof of the following result of Gács [7].

Theorem 3

For sufficiently small \(q>0\) two independent \(\hbox {Ber}(q)\) sequences \(\{X_i\}_{i\in \mathbb N }\) and \(\{Y_i\}_{i\in \mathbb N }\) are compatible with positive probability.

Our proof is different and we believe more transparent enabling us to state a concise induction step (see Theorem 4.1). Other recent progress was made on this problem by Kesten et al. [18] constructing sequences which are compatible with a random sequence with positive probability.

Each of these results follows from an abstract theorem in the next section which applies to a range of different models. The novelty of our multi-scale approach is that, as far as possible, we ignore the anatomy of what makes different configurations difficult to embed and instead consider simply the probability that they can be embedded into a random block proving recursive power-law estimates for these quantities. It is thus well suited to addressing even more challenging embedding problems such as random embeddings or rough isometries of higher dimensional percolation where to give a description of bad configurations becomes increasingly complex. We expect that our framework will find uses in a range of other multi-scale problems.

Independent results Two other researchers have also solved some of these problems independently. Vladas Sidoravicius [23] solved the same set of problems and described his approach to us. His work is based on a different multi-scale approach, proving that for certain choices of parameters \(p_1\) and \(p_2\) one can see a random binary sequence sampled with parameter \(p_1\) in the scenery determined by another binary sequence sampled with parameter \(p_2\), with positive probability. This generalizes the main theorem of [19] and a slight modification of it then implies Theorems 1, 2 and 3.

Shortly before completing this paper Peter Gács sent us a draft of his paper [9] solving Theorem 1. His approach extends his work on the scheduling problem [8]. The proof is geometric taking a percolation type view and involves a complex multi-scale system of structures. Our work was done completely independently of both.

1.1 General theorem

To apply to a range of problems we need to consider larger alphabets of symbols. Let \(\mathcal{C }^\mathbb{X }=\{C_1,C_2,\ldots \}\) and \(\mathcal{C }^\mathbb{Y }=\{C^{\prime }_1,C^{\prime }_2,\ldots \}\) be a pair of countable alphabets and let \(\mu ^\mathbb{X }\) and \(\mu ^\mathbb{Y }\) be probability measures on \(\mathcal{C }^\mathbb{X }\) and \(\mathcal{C }^\mathbb{Y }\) respectively.

We will suppose also that we have a relation \(\mathcal R \subseteq \mathcal{C }^\mathbb{X }\times \mathcal{C }^\mathbb{Y }\). If \((C_i,C^{\prime }_k)\in \mathcal R \), we denote this by \(C_i \hookrightarrow C^{\prime }_k\). Let \(G_0^\mathbb{X }\subseteq \mathcal{C }^\mathbb{X }\) and \(G_0^\mathbb{Y }\subseteq \mathcal{C }^\mathbb{Y }\) be two given subsets such that \(C_i\in G_0^\mathbb{X }\) and \(C^{\prime }_k\in G_0^\mathbb{Y }\) implies \(C_i\hookrightarrow C^{\prime }_k\). Symbols in \(G_0^\mathbb{X }\) and \(G_0^\mathbb{Y }\) will be referred to as “good”.

1.1.1 Definitions

Now let \(\mathbb X =(X_1,X_2,\ldots )\) and \(\mathbb Y =(Y_1,Y_2,\ldots )\) be two sequences of symbols coming from the alphabets \(\mathcal{C }^\mathbb{X }\) and \(\mathcal{C }^\mathbb{Y }\) respectively. We will refer to such sequences as an \(\mathbb X \)-sequence and a \(\mathbb Y \)-sequence respectively. For \(1\le i_1 < i_2\), we call the subsequence \((X_{i_1},X_{i_1+1},\ldots , X_{i_2})\) the “\([i_1,i_2]\)-segment” of \(\mathbb X \) and denote it by \(\mathbb X ^{[i_1,i_2]}\). We call \(\mathbb X ^{[i_1,i_2]}\) a “good” segment if \(X_i\in G_0^\mathbb{X }\) for \(i_1\le i\le i_2\) and similarly for \(\mathbb Y \).

Let \(R\) be a fixed constant. Let \(R_0=2R\), \(R_0^{-}=1\), \(R_0^{+}=3R^2\).

Definition 1.2

Let \(\mathbb X \) and \(\mathbb Y \) be sequences as above. Let \(X=(X_{a+1},\ldots X_{a+n})\) and \(Y=(Y_{a^{\prime }+1},\ldots Y_{a^{\prime }+n^{\prime }})\) be segments of \(\mathbb X \) and \(\mathbb Y \) respectively. We say \(X\) \(R\)-embeds or \(R\)-maps into \(Y\), denoted \(X \hookrightarrow _R Y\), if there exists \(a=i_0<i_1<i_2<\cdots <i_k=a+n\) and \(a^{\prime }=i^{\prime }_0<i^{\prime }_1<i^{\prime }_2< \cdots <i^{\prime }_k=a^{\prime }+n^{\prime }\) satisfying the following conditions.

1. For each \(r\), either \(i_{r+1}-i_{r}=i^{\prime }_{r+1}-i^{\prime }_{r}=1\) or \(i_{r+1}-i_{r}=R_0\) or \(i^{\prime }_{r+1}-i^{\prime }_{r}=R_0\).

2. If \(i_{r+1}-i_{r}=i^{\prime }_{r+1}-i^{\prime }_{r}=1\), then \(X_{i_{r}+1}\hookrightarrow Y_{i^{\prime }_{r}+1}\).

3. If \(i_{r+1}-i_{r}=R_0\), then \(R_0^{-}\le i^{\prime }_{r+1}-i^{\prime }_{r}\le R_0^{+}\), and both \(\mathbb X ^{[i_r+1,i_{r+1}]}\) and \(\mathbb Y ^{[i^{\prime }_{r}+1,i^{\prime }_{r+1}]}\) are good segments.

4. If \(i^{\prime }_{r+1}-i^{\prime }_{r}=R_0\), then \(R_0^{-}\le i_{r+1}-i_{r}\le R_0^{+}\), and both \(\mathbb X ^{[i_r+1,i_{r+1}]}\) and \(\mathbb Y ^{[i^{\prime }_{r}+1,i^{\prime }_{r+1}]}\) are good segments.

We say that \(\mathbb X \) \(R\)-embeds or \(R\)-maps into \(\mathbb Y \), denoted \(\mathbb X \hookrightarrow _R \mathbb Y \) if there exists \(0=i_0<i_1<i_2<\ldots \) and \(0=i^{\prime }_0<i^{\prime }_1<i^{\prime }_2< \ldots \) satisfying the above conditions.
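The four conditions of Definition 1.2 are mechanical to verify once the cut points are given. The following sketch checks them for finite segments, taking \(a=a^{\prime }=0\) and using \(R_0=2R\), \(R_0^{-}=1\), \(R_0^{+}=3R^2\). The predicates `related`, `good_x` and `good_y` are hypothetical stand-ins for the relation \(\mathcal R \) and the good sets, supplied by the caller; the sketch assumes \(R\ge 1\), so that \(R_0\ne 1\).

```python
def is_r_embedding(X, Y, cuts_x, cuts_y, related, good_x, good_y, R):
    """Check the conditions of Definition 1.2 for finite segments.

    X, Y are 0-based lists, so X[k] plays the role of X_{k+1}.
    cuts_x = [i_0, ..., i_k] and cuts_y = [i'_0, ..., i'_k] start at 0
    and end at len(X) and len(Y) respectively.
    """
    r0, r0_minus, r0_plus = 2 * R, 1, 3 * R * R
    if len(cuts_x) != len(cuts_y) or len(cuts_x) < 2:
        return False
    if (cuts_x[0], cuts_y[0]) != (0, 0):
        return False
    if (cuts_x[-1], cuts_y[-1]) != (len(X), len(Y)):
        return False
    for r in range(len(cuts_x) - 1):
        gx = cuts_x[r + 1] - cuts_x[r]
        gy = cuts_y[r + 1] - cuts_y[r]
        if gx < 1 or gy < 1:
            return False  # cut points must be strictly increasing
        if gx == 1 and gy == 1:
            # Condition 2: single symbols must be related
            if not related(X[cuts_x[r]], Y[cuts_y[r]]):
                return False
            continue
        if gx != r0 and gy != r0:
            return False  # Condition 1 fails
        x_seg = X[cuts_x[r]:cuts_x[r + 1]]
        y_seg = Y[cuts_y[r]:cuts_y[r + 1]]
        # Conditions 3 and 4: both pieces good, partner length in range
        if not (all(map(good_x, x_seg)) and all(map(good_y, y_seg))):
            return False
        if gx == r0 and not r0_minus <= gy <= r0_plus:
            return False
        if gy == r0 and not r0_minus <= gx <= r0_plus:
            return False
    return True
```

The checker only validates a proposed pair of cut sequences; it does not search for one.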

Throughout we will use a fixed \(R\) defined in Theorem 4 and will simply refer to mappings and write that \(\mathbb X \hookrightarrow \mathbb Y \) (or \(X\hookrightarrow Y\)) except where it is ambiguous. The following elementary observation is useful. Suppose we have \(n_0<n_1<\ldots < n_k\) and \(n^{\prime }_0< n^{\prime }_1<\ldots < n^{\prime }_k\) such that \(\mathbb X ^{[n_r+1,n_{r+1}]}\hookrightarrow \mathbb Y ^{[n^{\prime }_r+1,n^{\prime }_{r+1}]}\) for \(0\le r<k\), then \(\mathbb X ^{[n_0+1,n_k]}\hookrightarrow \mathbb Y ^{[n^{\prime }_0+1,n^{\prime }_k]}\).

A key element in our proof is tail estimates on the probability that we can map a block \(X\) into a random block \(Y\) and so we make the following definition.

Definition 1.3

For \(X \in \mathcal{C }^\mathbb{X }\), we define the embedding probability of \(X\) as \(S_0^\mathbb{X }(X)=\mathbb P (X\hookrightarrow Y|X)\) where \(Y\sim \mu ^\mathbb{Y }\). We define \(S_0^\mathbb{Y }(Y)\) similarly and suppress the notation \(\mathbb X ,\mathbb Y \) when the context is clear.

1.1.2 General theorem

We can now state our general theorem which will imply the main results of the paper as shown in § 2.

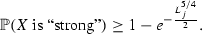

Theorem 4

(General Theorem) There exist positive constants \(\beta \), \(\delta \), \(m\), \(R\) such that for all large enough \(L_0\) the following holds. Let \(X\sim \mu ^\mathbb{X }\) and \(Y\sim \mu ^\mathbb{Y }\) where \(\mu ^\mathbb{X }\) and \(\mu ^\mathbb{Y }\) are probability distributions on alphabets such that for all \(k\ge L_0\),

Suppose the following conditions are satisfied

1. For all \(0< p \le 1-L_0^{-1}\),

$$\begin{aligned} \mathbb P (S_0^\mathbb{X }(X)\le p)\le p^{m+1}L_0^{-\beta },\quad \mathbb P (S_0^\mathbb{Y }(Y)\le p)\le p^{m+1}L_0^{-\beta }. \end{aligned}\tag{2}$$

2. Most symbols are “good”,

$$\begin{aligned} \mathbb P (X\in G_0^\mathbb{X })\ge 1-L_0^{-\delta }, \quad \mathbb P (Y\in G_0^\mathbb{Y })\ge 1-L_0^{-\delta }. \end{aligned}\tag{3}$$

Then for \(\mathbb X =(X_1,X_2,\ldots )\) and \(\mathbb Y =(Y_1,Y_2,\ldots )\), two sequences of i.i.d. symbols with laws \(\mu ^\mathbb{X }\) and \(\mu ^\mathbb{Y }\) respectively, we have

$$\begin{aligned} \mathbb P (\mathbb X \hookrightarrow _R \mathbb Y )>0. \end{aligned}$$

1.2 Proof outline

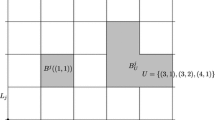

The proof makes use of a number of parameters, \(\alpha ,\beta ,\delta , m, k_0, R\) and \(L_0\) which must satisfy a number of relations described in the next subsection. Our proof is multi-scale and divides the sequences into blocks on a series of doubly exponentially growing length scales \(L_j=L_0^{\alpha ^j}\) for \(j\ge 0\) and at each of these levels we define a notion of a “good” block. Single characters in the base sequences \(\mathbb X \) and \(\mathbb Y \) constitute the level 0 blocks.

Suppose that we have constructed the blocks up to level \(j\) denoting the sequence as \((X_1^{(j)},X_2^{(j)}\ldots )\). In § 3, we give a construction of \((j+1)\)-level blocks out of \(j\)-level sub-blocks in such a way that the blocks are independent and, apart from the first block, identically distributed and that the first and last \(L_j^3\) sub-blocks of each block are good. For more details, see § 3.

At each level we distinguish a set of blocks to be good. In particular this will be done in such a way that at each level any good block maps into any other good block. Moreover, any segment of \(R_j=4^j (2R)\) good \(\mathbb X \)-blocks will map into any segment of \(\mathbb Y \)-blocks of length between \(R_j^-=4^j(2-2^{-j})\) and \(R_j^+=4^jR^2(2+2^{-j})\) and vice-versa. This property of certain mappings will allow us to avoid complicated conditioning issues. Moreover, being able to map good segments into shorter or longer segments will give us the flexibility to find suitable partners for difficult to embed blocks and to achieve improving estimates of the probability of mapping random \(j\)-level blocks \(X \hookrightarrow Y\). We describe how to define good blocks in § 3.
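To get a feel for how the scales grow, the quantities \(L_j=L_0^{\alpha ^j}\), \(R_j=4^j(2R)\), \(R_j^-=4^j(2-2^{-j})\) and \(R_j^+=4^jR^2(2+2^{-j})\) can be tabulated with exact integer arithmetic. In the sketch below the function names are illustrative, and `alpha` is treated as an integer placeholder supplied by the caller, which is an assumption made only for this example.

```python
def block_length(L0, alpha, j):
    """L_j = L0 ** (alpha ** j); alpha is an integer placeholder here."""
    return L0 ** (alpha ** j)


def segment_lengths(j, R):
    """Return (R_j, R_j^-, R_j^+) as exact integers.

    Uses 4**j * 2**(-j) == 2**j, so
      R_j^- = 2 * 4**j - 2**j  and  R_j^+ = R**2 * (2 * 4**j + 2**j).
    """
    four_j, two_j = 4 ** j, 2 ** j
    r_j = four_j * 2 * R
    r_minus = 2 * four_j - two_j
    r_plus = R * R * (2 * four_j + two_j)
    return r_j, r_minus, r_plus
```

At \(j=0\) this returns \((2R,1,3R^2)\), matching the values \(R_0\), \(R_0^{-}\), \(R_0^{+}\) fixed before Definition 1.2.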

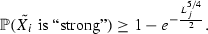

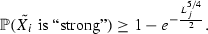

The proof then involves a series of recursive estimates at each level given in § 4. We ask that at level \(j\) the probability that a block is good is at least \(1-L_j^{-\delta }\) so that the vast majority of blocks are good. Furthermore, we show tail bounds on the embedding probabilities showing that for \(0<p\le 1-L_j^{-1}\),

where \(S_j^\mathbb{X }(X)\) denotes the \(j\)-level embedding probability \(\mathbb P [X \hookrightarrow Y|X]\) for \(X,Y\) random independent \(j\)-level blocks. We show the analogous bound for \(\mathbb Y \)-blocks as well. This is essentially the best we can hope for—we cannot expect a better than power-law bound here because of the probability of occurrences of sequences of repeating symbols in the base 0-level sequence of length \(C \log (L_j^{\alpha })\) for large \(C\). We also ask that good blocks have the properties described above and that the length of blocks satisfy an exponential tail estimate. The full inductive step is given in § 4.1. Proving this constitutes the main work of the paper.

The key quantitative estimate in the paper is Lemma 7.3 which follows directly from the recursive estimates and bounds the chance of a block having an excessive length, many bad sub-blocks or a particularly difficult collection of sub-blocks measured by the product of their embedding probabilities. In order to achieve the improving embedding probabilities at each level we need to take advantage of the flexibility in mapping a small collection of very bad blocks to a large number of possible partners by mapping the good blocks around them into longer or shorter segments using the inductive assumptions. To this effect we define families of mappings between partitions to describe such potential mappings. Because \(m\) is large and we take many independent trials the estimate at the next level improves significantly. Our analysis is split into 5 different cases.

To show that good blocks have the required properties we construct them so that they have at most \(k_0\) bad sub-blocks all of which are “semi-bad” (defined in § 3) in particular with embedding probability at least \((1-\frac{1}{20k_0R_{j+1}^{+}})\). We also require that each subsequence of \(L_j^{3/2}\) sub-blocks is “strong” in that every semi-bad block maps into a large proportion of the sub-blocks. Under these conditions we show that for any good blocks \(X\) and \(Y\) at least one of our families of mappings gives an embedding. This holds similarly for embeddings of segments of good blocks.

To complete the proof we note that with positive probability \(X_1^{(j)}\) and \(Y_1^{(j)}\) are good for all \(j\). This gives a sequence of embeddings of increasing segments of \(\mathbb X \) and \(\mathbb Y \) and by taking a converging subsequential limit we can construct an \(R\)-embedding of the infinite sequences completing the proof.

We can also give deterministic constructions using our results. In Sect. 10 we construct a deterministic sequence which has an \(M\)-Lipschitz embedding into a random binary sequence in the sense of Theorem 1 with positive probability. Similarly, this approach gives a binary sequence with a positive density of ones which is compatible with a random \(\hbox {Ber}(q)\) sequence in the sense of Theorem 3 for small enough \(q>0\) with positive probability.

1.2.1 Parameters

Our proof involves a collection of parameters \(\alpha ,\beta ,\delta ,k_0,m\) and \(R\) which must satisfy a system of constraints. The required constraints are

To fix on a choice we will set

Given these choices we then take \(L_0\) to be a sufficiently large integer. We did not make a serious attempt to optimize the parameters or constraints and indeed at times did not in order to simplify the exposition.

1.3 Organization of the paper

In Sect. 2 we show how to derive Theorems 1, 2 and 3 from our general Theorem 4. In Sect. 3 we describe our block constructions and formally define good blocks. In Sect. 4 we state the main recursive theorem and show that it implies Theorem 4. In Sects. 5 and 6 we construct a collection of generalized mappings of partitions which we will use to describe our mappings between blocks. In Sect. 7 we prove the main recursive tail estimates on the embedding probabilities. In Sect. 8 we prove the recursive length estimates on the blocks. In Sect. 9 we show that good blocks have the required inductive properties. Finally in Sect. 10 we describe how these results yield deterministic sequences with positive probabilities of \(M\)-Lipschitz embedding or being a compatible sequence.

2 Applications to Lipschitz embeddings, rough isometries and compatible sequences

In this section we show how Theorem 4 can be used to derive our three main results. Notice that Theorem 4 does not require \(\mathbb X \) and \(\mathbb Y \) to be independent. Hence all three results remain valid even if we drop the assumption of independence between the two sequences.

2.1 Lipschitz embeddings

2.1.1 Defining the sequences \(\mathbb X \) and \(\mathbb Y \) and the alphabets \(\mathcal{C }^\mathbb{X }\) and \(\mathcal{C }^\mathbb{Y }\)

Let \(X^{*}=\{X_i^{*}\}_{i\ge 1}\) and \(Y^{*}=\{Y_i^{*}\}_{i\ge 1}\) be two independent sequences of i.i.d. \(\hbox {Ber}(\frac{1}{2})\) variables. Let \(M_0\) be a large constant which will be chosen later. Let \(\tilde{Y^{*}}=\{\tilde{Y_i^{*}}\}\) be the sequence given by \(\tilde{Y_i^{*}}={Y^*}^{[(i-1)M_0+1,iM_0]}\). Now let us divide the \(\{0,1\}\) sequences of length \(M_0\) in the following 3 classes.

1. Class \(\mathbf \star \). Let \(Z=(Z_1,Z_2,\ldots ,Z_{M_0})\) be a sequence of \(0\)’s and \(1\)’s. A length 2-subsequence \((Z_i,Z_{i+1})\) is called a “flip” if \(Z_i\ne Z_{i+1}\). We say \(Z\in \mathbf \star \) if the number of flips in \(Z\) is at least \(2R_0^{+}\).

2. Class \(\mathbf{0}\). If \(Z=(Z_1,Z_2,\ldots ,Z_{M_0})\notin \mathbf \star \) and \(Z\) contains more \(0\)’s than \(1\)’s, then \(Z\in \mathbf{0}\).

3. Class \(\mathbf{1}\). If \(Z=(Z_1,Z_2,\ldots ,Z_{M_0})\notin \mathbf \star \) and \(Z\) contains more \(1\)’s than \(0\)’s, then \(Z\in \mathbf{1}\). For definiteness, let us also say \(Z\in \mathbf{1}\), if \(Z\) contains an equal number of \(0\)’s and \(1\)’s and \(Z\notin \mathbf \star \).

Now set \(\mathbb X =(X_1,X_2,\ldots )=X^{*}\) and construct \(\mathbb Y =(Y_1,Y_2,\ldots )\) from \(\tilde{Y^{*}}\) as follows. Set \(Y_i=\mathbf{0}, \mathbf{1}\) or \(\mathbf \star \) according as whether \(\tilde{Y_i^*}\in \mathbf{0, 1}\) or \(\mathbf \star \).
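The coarse-graining of \(Y^{*}\) into symbols of \(\{\mathbf{0},\mathbf{1},\mathbf \star \}\) can be sketched as follows. The function names and the string labels `"0"`, `"1"`, `"*"` are illustrative choices, and a trailing incomplete block of a finite input is simply dropped, which is an assumption needed only because the example works with finite lists.

```python
def classify_block(block, R):
    """Classify a 0/1 block as "*", "0" or "1" using R_0^+ = 3 * R**2."""
    r0_plus = 3 * R * R
    # a flip is a pair of adjacent positions holding different symbols
    flips = sum(1 for a, b in zip(block, block[1:]) if a != b)
    if flips >= 2 * r0_plus:
        return "*"
    zeros = block.count(0)
    ones = len(block) - zeros
    # ties are assigned to class 1, as in the text
    return "0" if zeros > ones else "1"


def coarse_grain(y_star, M0, R):
    """Split y_star into consecutive blocks of length M0 and classify each."""
    n_blocks = len(y_star) // M0
    return [classify_block(y_star[k * M0:(k + 1) * M0], R)
            for k in range(n_blocks)]
```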

It is clear from this definition that \(\mathbb X =(X_1,X_2,\ldots )\) and \(\mathbb Y =(Y_1,Y_2,\ldots )\) are two independent sequences of i.i.d. symbols coming from the alphabets \(\mathcal{C }^\mathbb{X }\) and \(\mathcal{C }^\mathbb{Y }\) having distributions \(\mu ^\mathbb{X }\) and \(\mu ^\mathbb{Y }\) respectively where

$$\begin{aligned} \mathcal{C }^\mathbb{X }=\{0,1\},\quad \mathcal{C }^\mathbb{Y }=\{\mathbf{0},\mathbf{1},\mathbf \star \} \end{aligned}$$

and \(\mu ^\mathbb{X }\) is the uniform measure on \(\{0,1\}\) and \(\mu ^\mathbb{Y }\) is the natural measure on \(\{\mathbf{0},\mathbf{1},\mathbf \star \}\) induced by the independent \(\hbox {Ber}(\frac{1}{2})\) variables.

We take the relation \(\mathcal R \subseteq \mathcal{C }^\mathbb{X }\times \mathcal{C }^\mathbb{Y }\) to be: \(\{0\hookrightarrow \mathbf{0}, 0\hookrightarrow \mathbf \star \), \(1\hookrightarrow \mathbf{1}, 1\hookrightarrow \mathbf \star \}\) and the good sets \(G_0^\mathbb{X }=\{0,1\}\) and \(G_0^\mathbb{Y }=\{\star \}\).

It is now very easy to verify that \(\mathcal{C }^\mathbb{X },\mathcal{C }^\mathbb{Y },\mu ^\mathbb{X },\mu ^\mathbb{Y }, \mathcal R ,G_0^\mathbb{X }, G_0^\mathbb{Y }\), as defined above satisfy all the conditions described in our abstract framework.

2.1.2 Constructing the Lipschitz embedding

Now we verify that the sequences \(\mathbb X \) and \(\mathbb Y \) constructed from the binary sequences \(X^{*}\) and \(Y^{*}\) can be used to construct an embedding with positive probability. Note that though we constructed the sequences \(\mathbb X \) and \(\mathbb Y \) from i.i.d. \(\hbox {Ber}(\frac{1}{2})\) sequences \(X^{*}\) and \(Y^{*}\) in the previous subsection, the construction is deterministic and hence can be carried out for any binary sequence. We have the following lemma.

Lemma 2.1

Let \(X^{*}=\{X_i^{*}\}_{i\ge 1}\) and \(Y^{*}=\{Y_i^{*}\}_{i\ge 1}\) be two binary sequences. Let \(\mathbb X \) and \(\mathbb Y \) be the sequences constructed from \(X^{*}\) and \(Y^{*}\) as above. There is a constant \(M\) not depending on \(X^{*}\) and \(Y^{*}\), such that whenever \(\mathbb X \hookrightarrow \mathbb Y \), there exists a strictly increasing map \(\phi : \mathbb N \rightarrow \mathbb N \) such that for all \(i,j\in \mathbb N \), \(X^{*}_{i}=Y^{*}_{\phi (i)}\) and \(|\phi (i)-\phi (j)|\le M|i-j|\), \(\phi (1)< M/2\).

Before proceeding with the proof, let us introduce the following notation. We say \(X^{*}\hookrightarrow _{*M} Y^{*}\) if a map \(\phi \) satisfying the conditions of the lemma exists. Let us also make the following definition for finite subsequences.

Definition 2.2

Let \({X^{*}}^{[i_1,i_2]}\) and \({Y^{*}}^{[i^{\prime }_1,i^{\prime }_2]}\) be two segments of \(X^{*}\) and \(Y^{*}\) respectively. We say that \({X^{*}}^{[i_1,i_2]} \hookrightarrow _{* M} {Y^{*}}^{[i^{\prime }_1,i^{\prime }_2]}\) if there exists a strictly increasing \(\tilde{\phi }: \{i_1,i_1+1,\ldots ,i_2\}\rightarrow \{i^{\prime }_1,i^{\prime }_1+1,\ldots ,i^{\prime }_2\}\) such that

(i) \(X^{*}_k=Y^{*}_{\tilde{\phi }(k)}\) for all \(k\in \{i_1,i_1+1,\ldots ,i_2\}\), and \(k,l \in \{i_1,i_1+1,\ldots ,i_2\}\) implies \(|\tilde{\phi }(k)-\tilde{\phi }(l)|\le M|k-l|\).

(ii) \(\tilde{\phi }(i_1)-i^{\prime }_1\le M/3\) and \(i^{\prime }_2-\tilde{\phi }(i_2) \le M/3\).

In what follows, we shall always be taking \(M\ge 6\). The following observation is trivial.

Observation 2.3

Let \(0=i_0<i_1<i_2<\ldots \) and \(0=i^{\prime }_0<i^{\prime }_1<i^{\prime }_2<\ldots \) be two increasing sequences of integers. If \({X^{*}}^{[i_k+1,i_{k+1}]}\hookrightarrow _{*M} {Y^{*}}^{[i^{\prime }_k+1,i^{\prime }_{k+1}]}\) for each \(k\ge 0\), then \(X^{*}\hookrightarrow _{* M} Y^{*}\).

Proof of Lemma 2.1

Let \(X^{*},Y^{*},\mathbb X ,\mathbb Y \) be as in the statement of the Lemma. Let \(\mathbb X \hookrightarrow \mathbb Y \). Let \(0=i_0<i_1<i_2<\ldots \) and \(0=i^{\prime }_0<i^{\prime }_1<i^{\prime }_2< \ldots \) be the two sequences obtained from Definition 1.2. The previous observation then implies that it suffices to prove that there exists \(M\) (not depending on \(X^{*}\) and \(Y^{*}\)) such that for all \(h\ge 0\),

$$\begin{aligned} {X^*}^{[i_h+1,i_{h+1}]}\hookrightarrow _{*M} {Y^*}^{[i^{\prime }_hM_0+1,i^{\prime }_{h+1}M_0]}. \end{aligned}$$

Notice that since \(\{i_{h+1}-i_h\}\) and \(\{i^{\prime }_{h+1}-i^{\prime }_h\}\) are bounded sequences, if we can find maps \(\phi _h: \{i_{h}+1,\ldots ,i_{h+1}\}\rightarrow \{i^{\prime }_{h}M_0+1,\ldots ,i^{\prime }_{h+1}M_0\}\) such that \(X^*_i =Y^*_{\phi _h(i)}\), then for sufficiently large \(M\) and for all \(h\) we shall have \({X^*}^{[i_h+1,i_{h+1}]}\hookrightarrow _{*M} {Y^*}^{[i^{\prime }_hM_0+1,i^{\prime }_{h+1}M_0]}\). We shall call such a \(\phi _h\) an embedding.

There are three cases to consider.

Case 1 \(i_{h+1}-i_h=i^{\prime }_{h+1}-i^{\prime }_h=1\). By hypothesis, this implies \(X_{i_h+1}\hookrightarrow Y_{i^{\prime }_h+1}\). If \(X^*_{i_{h}+1}=0\) and \({Y^*}^{[i^{\prime }_hM_0+1,i^{\prime }_{h}M_0+M_0]}\in \{\mathbf{0}, \mathbf \star \}\), then \({Y^*}^{[i^{\prime }_hM_0+1,i^{\prime }_{h}M_0+M_0]}\) must contain at least one \(0\) and hence an embedding exists. Similarly if \(X^*_{i_{h}+1}=1\) and \({Y^*}^{[i^{\prime }_hM_0+1,i^{\prime }_{h}M_0+M_0]}\in \{\mathbf{1}, \mathbf \star \}\) then also an embedding exists.

Case 2 \(i^{\prime }_{h+1}-i^{\prime }_h=R_0, R_0^{-}\le i_{h+1}-i_h \le R_0^{+}\). In this case, \(\mathbb Y ^{[i^{\prime }_h+1,i^{\prime }_{h+1}]}\) is a “good” segment, i.e., \({Y^*}^{[(i^{\prime }_h+k)M_0+1, (i^{\prime }_h+k+1)M_0]}\in \mathbf \star \), for \(0\le k \le {i^{\prime }_{h+1}-i^{\prime }_h-1}\). By what we have already observed it now suffices to only consider the case \(i_{h+1}-i_h= R_0^{+}\). Now by definition of \(\mathbf \star \), there exists an alternating sub-sequence of \(2R_0^{+}\) \(0\)’s and \(2R_0^{+}\) \(1\)’s in \({Y^*}^{[i^{\prime }_hM_0+1,(i^{\prime }_{h}+1)M_0]}\). It follows that any binary sequence of length \(R_0^{+}\) can be embedded into \({Y^*}^{[i^{\prime }_hM_0+1,(i^{\prime }_{h}+1)M_0]}\) and hence there is an embedding in this case also.

Case 3 \(i_{h+1}-i_h=R_0, R_0^{-}\le i^{\prime }_{h+1}-i^{\prime }_h \le R_0^{+}\). As in Case 2, there exists an embedding in this case as well; we omit the details. \(\square \)
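The combinatorial fact behind Case 2, that a block with many flips contains every short binary word as a subsequence, can be illustrated with a greedy left-to-right matching. The sketch below is an illustration rather than the argument of the paper: a block with at least \(2n\) flips has at least \(2n+1\) alternating runs, and the greedy scan advances by at most two runs per symbol, so every word of length \(n\) is matched. The helper `all_words_embed` (a hypothetical name) confirms this exhaustively for small parameters.

```python
from itertools import product


def count_flips(block):
    """Number of adjacent positions holding different symbols."""
    return sum(1 for a, b in zip(block, block[1:]) if a != b)


def greedy_embed(word, block):
    """Return strictly increasing positions p with block[p[i]] == word[i],
    or None if the greedy scan runs off the end of the block."""
    positions = []
    pos = 0
    for symbol in word:
        while pos < len(block) and block[pos] != symbol:
            pos += 1
        if pos == len(block):
            return None
        positions.append(pos)
        pos += 1  # the next image must lie strictly to the right
    return positions


def all_words_embed(n, block_len):
    """Exhaustively check that every word of length n embeds into every
    0/1 block of length block_len having at least 2 * n flips."""
    for block in product((0, 1), repeat=block_len):
        if count_flips(block) < 2 * n:
            continue
        for word in product((0, 1), repeat=n):
            if greedy_embed(word, block) is None:
                return False
    return True
```

Since the matched positions all lie inside a single block of length \(M_0\), consecutive images are at most \(M_0\) apart, which is the kind of bounded gap the Lipschitz condition requires.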

2.1.3 Proof of Theorem 1

We now complete the proof of Theorem 1 by using Theorem 4.

Proof of Theorem 1

Let \(\mathcal{C }^\mathbb{X }, \mu ^\mathbb{X },\mathcal{C }^\mathbb{Y }, \mu ^\mathbb{Y }\) be as described above. Let \(X\sim \mu ^\mathbb{X }\), \(Y\sim \mu ^\mathbb{Y }\). (Notice that \(\mu ^\mathbb{Y }\) implicitly depends on the choice of \(M_0\)). Notice that (1) holds trivially if \(L_0\ge 3\). Let \(\beta , \delta , m, R, L_0\) be given by Theorem 4. First we show that there exists \(M_0\) such that (2) and (3) hold.

Let \(Z=(Z_1,Z_2,\ldots , Z_{M_0})\) be a sequence of i.i.d. \(\hbox {Ber}(\frac{1}{2})\) variables. Observe that

Hence we can choose \(M_0\) large enough such that

Since all \(\mathbb X \) blocks are good and \(\star \) is a good \(\mathbb Y \) block, \(\mathbb P (X\in G_0^\mathbb{X })=1\) and \(\mathbb P (Y\in G_0^\mathbb{Y }) = \mu ^\mathbb{Y }(\star )\ge 1-L_0^{-\delta }\) and hence (3) holds. For (2), notice that \(S_0^\mathbb{X }(X)>1-L_0^{-1}\) for all \(X\) and \(S_0^\mathbb{Y }(Y)\ge \frac{1}{2}\) for all \(Y\). Hence \(\mathbb P (S_0^\mathbb Y (Y)\le p)=0\) if \(p < \frac{1}{2}\). For \(1-L_0^{-1}\ge p \ge \frac{1}{2}\),

and hence (2) holds.

Now let \(X^{*}=\{X_i^{*}\}_{i\ge 1}\) and \(Y^{*}=\{Y_i^{*}\}_{i\ge 1}\) be two independent sequences of i.i.d. \(\hbox {Ber}(\frac{1}{2})\) variables. Choosing \(M_0\) as above, construct \(\mathbb X \), \(\mathbb Y \) as described in the previous subsection. Then by Theorem 4, we have that \(\mathbb P (\mathbb X \hookrightarrow _R \mathbb Y )>0\). Using Lemma 2.1 it now follows that for \(M\) sufficiently large, we have \(\mathbb P (X^{*}\hookrightarrow _{*M} Y^{*})>0\). This gives an embedding for sequences indexed by the natural numbers, which can easily be extended to an embedding of sequences indexed by the full integers with positive probability. To see that this has probability 1 we note that the event that there exists an embedding is shift invariant and i.i.d. sequences are ergodic with respect to shifts, and hence it has probability 0 or 1, completing the proof. \(\square \)

2.2 Rough isometry

Propositions 2.1 and 2.2 of [22] show that, to prove that there exists \((M,D,C)\) such that two Poisson processes on \(\mathbb R \) are \((M,D,C)\)-roughly isometric almost surely, it is sufficient to show that two independent copies of Bernoulli percolation on \(\mathbb Z \) with parameter \(\frac{1}{2}\), viewed as subsets of \(\mathbb R \), are \((M^{\prime },D^{\prime },C^{\prime })\)-roughly isometric with positive probability for some \((M^{\prime },D^{\prime },C^{\prime })\). We will solve the percolation problem and thus infer Theorem 2.

2.2.1 Defining the sequences \(\mathbb X \) and \(\mathbb Y \) and the alphabets \(\mathcal{C }^\mathbb{X }\) and \(\mathcal{C }^\mathbb{Y }\)

Let \(X^{*}=\{X_i^{*}\}_{i\ge 0}\) and \(Y^{*}=\{Y_i^{*}\}_{i\ge 0}\) be two independent sequences of i.i.d. \(\hbox {Ber}(\frac{1}{2})\) variables conditioned so that \(X_0^*=Y_0^*=1\). Now let us define two sequences \(k_0<k_1<k_2<\ldots \) and \(k^{\prime }_0<k^{\prime }_1<k^{\prime }_2<\ldots \) as follows. Let \(k_0=0\) and \(k_{i+1}=\min \{r>k_i: X^{*}_r=1\}\). Similarly let \(k^{\prime }_0=0\) and \(k^{\prime }_{i+1}=\min \{r>k^{\prime }_i: Y^{*}_r=1\}\). Let \(\tilde{X_i^{*}}=X^{*[k_{i-1},k_{i}-1]}\) and \(\tilde{Y_i^{*}}=Y^{*[k^{\prime }_{i-1},k^{\prime }_{i}-1]}\). The elements of the sequences \(\{\tilde{X_i^{*}}\}_{i\ge 1}\) and \(\{\tilde{Y_i^{*}}\}_{i\ge 1}\) are sequences consisting of a single \(1\) followed by a number (possibly none) of \(0\)’s. We now divide such sequences into the following classes.

Let \(Z=(Z_0,Z_1,\ldots Z_L), (L\ge 0)\) be a sequence of \(0\)’s and \(1\)’s with \(Z_0=1\) and \(Z_i=0\) for \(0<i\le L\). We say that \(Z\in C_0\) if \(L=0\) and for \(j\ge 1\), we say \(Z\in C_j\) if \(2^{j-1}\le L< 2^j\).

Now construct \(\mathbb X =(X_1,X_2,\ldots )\) from \(\tilde{X^{*}}\) and \(\mathbb Y =(Y_1,Y_2,\ldots )\) from \(\tilde{Y^{*}}\) as follows. Set \(X_i=C_j\) if \(\tilde{X_i^{*}}\in C_j\). Similarly set \(Y_i=C_j\) if \(\tilde{Y_i^{*}}\in C_j\).
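The passage from a binary sequence to its sequence of gap classes can be sketched as follows. A block consisting of a \(1\) followed by \(L\) zeros belongs to \(C_0\) if \(L=0\) and to \(C_j\) if \(2^{j-1}\le L<2^j\), and this index \(j\) coincides with the number of binary digits of \(L\). The function names are illustrative, and the final block of a finite input, which is not terminated by a further \(1\), is dropped; that convention is an assumption needed only for finite lists.

```python
def gap_class(L):
    """Index j with L == 0 -> 0, and 2**(j-1) <= L < 2**j otherwise."""
    return L.bit_length()


def to_gap_symbols(x_star):
    """Map a 0/1 list starting with 1 to the class indices of its blocks.

    Each block runs from one 1 up to just before the next 1; the last,
    unterminated block of a finite input is dropped.
    """
    if not x_star or x_star[0] != 1:
        raise ValueError("sequence must start with 1")
    ones = [i for i, bit in enumerate(x_star) if bit == 1]
    # the number of zeros between consecutive ones is nxt - cur - 1
    return [gap_class(nxt - cur - 1) for cur, nxt in zip(ones, ones[1:])]
```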

It is clear from this definition that \(\mathbb X =(X_1,X_2,\ldots )\) and \(\mathbb Y =(Y_1,Y_2,\ldots )\) are two sequences of i.i.d. symbols coming from the alphabets \(\mathcal{C }^\mathbb{X }\) and \(\mathcal{C }^\mathbb{Y }\) having distributions \(\mu ^\mathbb{X }\) and \(\mu ^\mathbb{Y }\) respectively where

$$\begin{aligned} \mathcal{C }^\mathbb{X }=\mathcal{C }^\mathbb{Y }=\{C_0,C_1,C_2,\ldots \} \end{aligned}$$

and \(\mu ^\mathbb{X }=\mu ^\mathbb{Y }\) is given by

$$\begin{aligned} \mu ^\mathbb{X }(\{C_j\})=\mu ^\mathbb{Y }(\{C_j\})=\mathbb P (Z\in C_j) \end{aligned}$$

where \(Z={Z^{*}}^{[0,i-1]}\), \(Z_0^{*}=1\), \(Z_t^*\) are i.i.d. \(\hbox {Ber}(\frac{1}{2})\) variables for \(t\ge 1\) and \(i=\min \{k>0: Z^*_{k}=1\}\).

We take the relation \(\mathcal R \subseteq \mathcal{C }^\mathbb{X }\times \mathcal{C }^\mathbb{Y }\) to be: \(C_k\hookrightarrow C_{k^{\prime }}\) if \(|k-k^{\prime }|\le M_0\). The “good” sets are defined to be \(G_0^\mathbb{X }=G_0^\mathbb{Y }=\{C_j:j\le M_0\}\). It is now very easy to verify that \(\mathcal{C }^\mathbb{X },\mathcal{C }^\mathbb{Y },\mu ^\mathbb{X },\mu ^\mathbb{Y }, \mathcal R ,G_0^\mathbb{X }, G_0^\mathbb{Y }\), as defined above satisfy all the conditions described in our abstract framework.

2.2.2 Existence of the rough isometry

Lemma 2.4

Let \(X^*=\{X_i^{*}\}_{i\ge 0}\) and \(Y^*=\{Y_i^{*}\}_{i\ge 0}\) be two binary sequences with \(X_0^{*}=Y_0^{*}=1\). Let \(N_{X^{*}}=\{i:X_i^{*}=1\}\) and \(N_{Y^{*}}=\{i:Y_i^{*}=1\}\). Let \(\mathbb X \) and \(\mathbb Y \) be the sequences constructed from \(X^{*}\) and \(Y^{*}\) as above. Then there exist constants \((M^{\prime },D^{\prime },C^{\prime })\), such that whenever \(\mathbb X \hookrightarrow _R \mathbb Y \), there exists \(\phi : N_{X^*}\rightarrow N_{Y^{*}}\) such that \(\phi (0)=0\) and

-

(i)

For all \(t,s\in N_{X^{*}}\),

$$\begin{aligned} \frac{1}{M^{\prime }}|t-s|-D^{\prime }\le |\phi (t)-\phi (s)|\le M^{\prime }|t-s|+D^{\prime }. \end{aligned}$$

-

(ii)

For all \(t\in N_{Y^*}\), \(\exists s\in N_{X^*}\) such that \(|t-\phi (s)|\le C^{\prime }\).

That is, \(\mathbb X \hookrightarrow _R \mathbb Y \) implies \(N_{X^{*}}\) and \(N_{Y^{*}}\) (viewed as subsets of \(\mathbb R \)) are \((M^{\prime },D^{\prime },C^{\prime })\)-roughly isometric.
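Conditions (i) and (ii) are easy to test mechanically on finite point sets. The following Python sketch does this by brute force; the function name `is_rough_isometry` and the representation of \(\phi \) as a dictionary are assumptions made for illustration, and a finite check of course says nothing about the infinite sets in the lemma.

```python
from itertools import combinations
from typing import Dict, Iterable


def is_rough_isometry(phi: Dict[int, int], n_x: Iterable[int], n_y: Iterable[int],
                      M: float, D: float, C: float) -> bool:
    """Check conditions (i) and (ii) for a map phi between finite point sets."""
    n_x, n_y = list(n_x), list(n_y)
    # (i): distances are distorted by at most a factor M and an additive D.
    for s, t in combinations(n_x, 2):
        d, e = abs(t - s), abs(phi[t] - phi[s])
        if not (d / M - D <= e <= M * d + D):
            return False
    # (ii): every point of n_y lies within C of the image of phi.
    images = [phi[s] for s in n_x]
    return all(any(abs(t - u) <= C for u in images) for t in n_y)
```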

Proof

Suppose that \(\mathbb X \hookrightarrow \mathbb Y \) and let \(0=i_0<i_1<i_2<\ldots \) and \(0=i^{\prime }_0<i^{\prime }_1<i^{\prime }_2< \ldots \) be the two sequences satisfying the conditions of Definition 1.2. Let \(0=k_0<k_1<k_2<\ldots \) and \(0=k^{\prime }_0<k^{\prime }_1<k^{\prime }_2<\ldots \) be the sequences described in the previous subsection while defining \(\mathbb X \) and \(\mathbb Y \). For \(r\ge 1\), define \(X_r^{**}={X^*}^{\left[ k_{i_{r-1}}, k_{i_r}-1\right] }\) and \(Y_r^{**}={Y^*}^{\left[ k^{\prime }_{i^{\prime }_{r-1}}, k^{\prime }_{i^{\prime }_r}-1\right] }\), i.e., \(X_r^{**}\) is the segment of \(X^{*}\) corresponding to \(\mathbb X ^{[i_{r-1}+1,i_r]}\) and \(Y_r^{**}\) is the segment of \(Y^{*}\) corresponding to \(\mathbb Y ^{[i^{\prime }_{r-1}+1,i^{\prime }_r]}\). Define \(N_{X,r}=N_{X^{*}}\cap [k_{i_{r-1}}, k_{i_r}-1]\) and \(N_{Y,r}=N_{Y^{*}}\cap [k^{\prime }_{i^{\prime }_{r-1}}, k^{\prime }_{i^{\prime }_r}-1]\). Notice that by construction, for each \(r\), \(X^{*}_{k_{i_{r-1}}}=1\) and \(Y^{*}_{k^{\prime }_{i^{\prime }_{r-1}}}=1\), i.e., \(k_{i_{r-1}}\in N_{X,r}\subseteq N_{X^{*}}\) and \(k^{\prime }_{i^{\prime }_{r-1}}\in N_{Y,r}\subseteq N_{Y^{*}}\).

Now let us define \(\phi : N_{X^{*}} \rightarrow N_{Y^{*}}\) as follows. If \(s\in N_{X,r}\), define \(\phi (s)=k^{\prime }_{i^{\prime }_{r-1}}\). Clearly \(\phi (0)=0\). We show now that for \(M^{\prime }=2^{M_0+2}R_0^{+}, D^{\prime }=2^{2M_0+3}(R_0^{+})^2\) and \(C^{\prime }=2^{M_0+1}R_0^{+}\), the map defined as above satisfies the conditions in the statement of the lemma.
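The definition of \(\phi \) can be made concrete on a finite prefix. In the Python sketch below, the lists `k`, `kp`, `i` and `ip` stand for \((k_a)\), \((k^{\prime }_a)\), \((i_r)\) and \((i^{\prime }_r)\); the function name `build_phi` and this list representation are hypothetical, and the code does not verify that the partitions satisfy Definition 1.2.

```python
from bisect import bisect_right
from typing import Dict, List


def build_phi(k: List[int], kp: List[int], i: List[int], ip: List[int]) -> Dict[int, int]:
    """Map each s in N_{X,r} to k'_{i'_{r-1}}, for r = 1, ..., len(i) - 1."""
    if len(i) != len(ip):
        raise ValueError("the two partitions must have the same length")
    starts = [k[a] for a in i[:-1]]  # left end points k_{i_{r-1}} of the segments
    end = k[i[-1]]                   # points at or beyond this are not covered
    phi = {}
    for s in k:                      # k lists the elements of N_{X^*} in order
        if s >= end:
            break
        r = bisect_right(starts, s)  # s lies in N_{X,r}
        phi[s] = kp[ip[r - 1]]
    return phi
```

With `k = [0, 1, 3, 8, 9]`, `kp = [0, 2, 3, 4, 10]`, `i = [0, 1, 3, 4]` and `ip = [0, 2, 3, 4]`, the points \(1\) and \(3\) lie in the same segment and are both sent to \(k^{\prime }_2=3\).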

Proof of (i) First consider the case where \(s,t\in N_{X,r}\) for some \(r\). If \(s\ne t\) then clearly \(\mathbb X ^{[i_{r-1}+1,i_r]}\) is a good segment and hence \(|s-t|\le 2^{M_0}R_0^{+}\). Clearly \(|\phi (s)-\phi (t)|=0\). It follows that for the specified choice of \(M^{\prime }\) and \(D^{\prime }\),

$$\begin{aligned} \frac{1}{M^{\prime }}|t-s|-D^{\prime }\le |\phi (t)-\phi (s)|\le M^{\prime }|t-s|+D^{\prime }. \end{aligned}$$

Let us now consider the case \(s\in N_{X,r_1}\), \(t\in N_{X,r_2}\) where \(r_1<r_2\). Clearly then \(k_{i_{r_1-1}}\le s < k_{i_{r_1}}\) and \(k_{i_{r_2-1}}\le t < k_{i_{r_2}}\). Also notice that by choice of \(D^{\prime }\), for any good segment \(\mathbb X ^{[i_h+1,i_{h+1}]}\) we must have \(|k_{i_{h+1}}-k_{i_h}|\le 2^{M_0}R_0^{+} \le \frac{D^{\prime }}{2M^{\prime }}\). Further if for some \(h\), \(i_{h+1}=i_h+1\), we must have that \(|N_{X,h+1}|=1\). It follows that \(s\le k_{i_{r_1-1}}+\frac{D^{\prime }}{M^{\prime }}\) and \(t\le k_{i_{r_2-1}}+\frac{D^{\prime }}{M^{\prime }}\). It is clear from the definitions that \(\phi (s)=k^{\prime }_{i^{\prime }_{r_1-1}}\) and \(\phi (t)=k^{\prime }_{i^{\prime }_{r_2-1}}.\)

Then we have,

and

It now follows from the definitions that for each \(h\),

Adding this over \(h=r_1,\ldots ,r_2-1\), we get that

in this case as well, which completes the proof of \((i)\).

Proof of (ii) Let \(t\in N_{Y^{*}}\) and let \(r\) be such that \(k^{\prime }_{i^{\prime }_r}\le t < k^{\prime }_{i^{\prime }_{r+1}}\). Now if \(i^{\prime }_{r+1}-i^{\prime }_r=1\) we must have \(t=k^{\prime }_{i^{\prime }_r}\) and hence \(t=\phi (s)\) where \(s=k_{i_r}\in N_{X^{*}}\) and hence \((ii)\) holds for \(t\). If \(i^{\prime }_{r+1}-i^{\prime }_r\ne 1\) we must have that \(\mathbb Y ^{[i^{\prime }_{r}+1,i^{\prime }_{r+1}]}\) is a good segment and hence \(k^{\prime }_{i^{\prime }_{r+1}}-k^{\prime }_{i^{\prime }_r}\le 2^{M_0}R_0^{+}\). Setting \(s=k_{i_r}\in N_{X^{*}}\) we see that \(\phi (s)=k^{\prime }_{i^{\prime }_r}\) and hence \(|t-\phi (s)|\le 2^{M_0}R_0^{+}\le C^{\prime }\), completing the proof of \((ii)\). \(\square \)

2.2.3 Proof of Theorem 2

Now we prove Theorem 2 using Theorem 4.

Proof of Theorem 2

Let \(\mathcal{C }^\mathbb{X }, \mu ^\mathbb{X },\mathcal{C }^\mathbb{Y }, \mu ^\mathbb{Y }\) be as described above. Let \(X\sim \mu ^\mathbb{X }\), \(Y\sim \mu ^\mathbb{Y }\).

Notice first that

for \(j\ge 1\), hence (1) is satisfied for all \(k\). We first show that there exists \(M_0\) such that (2) and (3) hold if \(\beta , \delta , m, R\) and \(L_0\) are constants such that the conclusion of Theorem 4 holds.

First observe that everything is symmetric in \(\mathbb X \) and \(\mathbb Y \). Clearly, we can take \(M_0\) sufficiently large so that \(S_0^\mathbb{X }(X)\ge 1-L_0^{-1}\) for \(X=C_k\) for all \(k\le M_0\).

Now suppose \(X=C_{k}\), where \(k>M_0\).

Let us fix \(p\le 1-L_0^{-1}\). Then we have

for \(M_0\) sufficiently large. Also, since \(\sum _{k} \mu ^\mathbb{X }(C_k)=1\), by choosing \(M_0\) sufficiently large we can make \(\sum _{k\le M_0} \mu ^\mathbb{X }(C_k)\ge 1-L_0^{-\delta }\).
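To see how quickly the mass of the good set approaches \(1\), one can compute \(\mu ^\mathbb{X }(C_j)\) exactly. The closed form used below is derived here from the description of \(Z\) (the number \(L\) of zeros satisfies \(\mathbb P (L=l)=2^{-(l+1)}\)) rather than quoted from the source, and the function names `mu` and `smallest_m0` are hypothetical.

```python
from fractions import Fraction


def mu(j: int) -> Fraction:
    """P(Z in C_j) when the number L of zeros satisfies P(L = l) = 2^-(l+1)."""
    if j == 0:
        return Fraction(1, 2)
    # Sum of 2^-(l+1) over 2^(j-1) <= l < 2^j.
    return Fraction(1, 2 ** (2 ** (j - 1))) - Fraction(1, 2 ** (2 ** j))


def smallest_m0(target: Fraction) -> int:
    """Smallest M_0 with sum_{k <= M_0} mu(C_k) >= target, for target < 1."""
    if not target < 1:
        raise ValueError("target must be strictly less than 1")
    m0, total = 0, mu(0)
    while total < target:
        m0 += 1
        total += mu(m0)
    return m0
```

The partial sums telescope to \(1-2^{-2^{M_0}}\), so the required \(M_0\) grows only like \(\log _2\log _2\) of \(L_0^{\delta }\).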

Hence there exists some constant \(M_0\) for which both (2) and (3) hold. This together with Lemma 2.4 and Theorem 4 implies a rough isometry with positive probability for two independent copies of site percolation on \(\mathbb N \cup \{0\}\) and hence on \(\mathbb Z \). The comments at the beginning of this subsection then show that the conditional results of [22] extend this result to Poisson processes on \(\mathbb R \) proving Theorem 2. \(\square \)

2.3 Compatible sequences

2.3.1 Defining the alphabets \(\mathcal{C }^\mathbb{X }\) and \(\mathcal{C }^\mathbb{Y }\)

Let \(\mathbb X =\{X_i\}_{i\ge 1}\) and \(\mathbb Y =\{Y_i\}_{i\ge 1}\) be two independent sequences of i.i.d. \(\hbox {Ber}(q)\) variables. Let us take \(\mathcal{C }^\mathbb{X }=\mathcal{C }^\mathbb{Y }=\{0,1\}\). The measures \(\mu ^\mathbb{X }\) and \(\mu ^\mathbb{Y }\) are induced by the distribution of \(X_i\)’s and \(Y_i\)’s, i.e., \(\mu ^\mathbb{X }(\{1\})=\mu ^\mathbb{Y }(\{1\})=q\) and \(\mu ^\mathbb{X }(\{0\})=\mu ^\mathbb{Y }(\{0\})=1-q\). It is then clear that \(\mathbb X \) and \(\mathbb Y \) are two independent sequences of i.i.d. symbols coming from the alphabets \(\mathcal{C }^\mathbb{X }\) and \(\mathcal{C }^\mathbb{Y }\) having distributions \(\mu ^\mathbb{X }\) and \(\mu ^\mathbb{Y }\) respectively.

We define the relation \(\mathcal R \subseteq \mathcal{C }^\mathbb{X }\times \mathcal{C }^\mathbb{Y }\) by

$$\begin{aligned} \mathcal R =\{(0,0),(0,1),(1,0)\}. \end{aligned}$$

Finally the “good” symbols are defined by \(G_0^\mathbb{X }=G_0^\mathbb{Y }=\{0\}\). It is clear that all the conditions in the definition of our set-up are satisfied by this structure.

2.3.2 Existence of the compatible map

Lemma 2.5

Let \(\mathbb X =\{X_i\}_{i\ge 1}\) and \(\mathbb Y =\{Y_i\}_{i\ge 1}\) be two binary sequences. Suppose \(\mathbb X \hookrightarrow \mathbb Y \). Then there exist \(D,D^{\prime }\subseteq \mathbb N \) such that,

-

(i)

For all \(i\in D\), \(X_i=0\), for all \(i^{\prime }\in D^{\prime }\), \(Y_{i^{\prime }}=0\).

-

(ii)

Let \(\mathbb N -D=\{k_1<k_2<\ldots \}\) and \(\mathbb N -D^{\prime }=\{k^{\prime }_1<k^{\prime }_2<\ldots \}\). Then for each \(i\), \(X_{k_i}\ne Y_{k^{\prime }_i}\) and hence \(X_{k_i}Y_{k^{\prime }_i}=0\).
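The two conditions of the lemma can be checked directly on finite prefixes. The Python sketch below is only an illustration: the function name `check_deletion` is hypothetical, indices are 1-based as in the lemma, and the comparison stops at the end of the shorter of the two remaining sequences.

```python
from typing import List, Set


def check_deletion(x: List[int], y: List[int], D: Set[int], Dp: Set[int]) -> bool:
    """Check conditions (i) and (ii) for deletion sets D, Dp (1-based indices)."""
    # (i): only zeros may be deleted.
    if any(x[i - 1] != 0 for i in D) or any(y[i - 1] != 0 for i in Dp):
        return False
    kept_x = [x[i - 1] for i in range(1, len(x) + 1) if i not in D]
    kept_y = [y[i - 1] for i in range(1, len(y) + 1) if i not in Dp]
    # (ii): the surviving symbols differ position by position,
    # so in particular no two ones are aligned.
    return all(a != b for a, b in zip(kept_x, kept_y))
```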

Proof

The sets \(D\) and \(D^{\prime }\) denote the set of sites we will delete. Let \(0=i_0<i_1<i_2<\ldots \) and \(0=i^{\prime }_0<i^{\prime }_1<i^{\prime }_2< \ldots \) be the sequences satisfying the properties listed in Definition 1.2. Let \(H_1^{*}=\{h:i_{h+1}-i_h=R_0\}\), \(H_2^{*}=\{h:i^{\prime }_{h+1}-i^{\prime }_{h}=R_0\}\), \(H_3^{*}=\{h: i_{h+1}-i_h=i^{\prime }_{h+1}-i^{\prime }_h=1, X_{i_h+1}=Y_{i^{\prime }_h+1}=0\}\). Let \(H^{*}=\cup _{i=1}^{3}H_i^{*}\). Now define

It is clear from Definition 1.2 that \(D,D^{\prime }\) defined as above satisfies the conditions in the statement of the lemma. \(\square \)

2.3.3 Proof of Theorem 3

Now we complete the proof of Theorem 3 using Theorem 4.

Proof of Theorem 3

Let \(\mathcal{C }^\mathbb{X }, \mu ^\mathbb{X },\mathcal{C }^\mathbb{Y }, \mu ^\mathbb{Y }\) be as described above. Let \(X\sim \mu ^\mathbb{X }\), \(Y\sim \mu ^\mathbb{Y }\). (Notice that \(\mu ^\mathbb{X }\), \(\mu ^\mathbb{Y }\) implicitly depend on \(q\).) Let \(\beta , \delta , m, R, L_0\) be constants such that the conclusion of Theorem 4 holds. Take \(q_0=L_0^{-\delta }\). Let \(q\le q_0\). Clearly, then, for any \(X\in \mathcal{C }^\mathbb{X }\) (resp. for any \(Y\in \mathcal{C }^\mathbb{Y }\)) we have \(S_0^\mathbb{X }(X)\ge 1-q\ge 1-L_0^{-1}\) (resp. \(S_0^\mathbb{Y }(Y)\ge 1-L_0^{-1}\)). Hence, (2) is vacuously satisfied. That (3) holds follows directly from the definitions. Notice that since the alphabet sets are finite, (1) trivially holds for \(L_0\ge 3\). Theorem 3 now follows from Lemma 2.5 and Theorem 4. \(\square \)

3 The multi-scale structure

Let \(\mathbb X \), \(\mathbb Y \), \(\mathcal{C }^\mathbb{X }, \mathcal{C }^\mathbb{Y }, G_0^\mathbb{X }, G_0^\mathbb{Y }\) be as described in § 1.1. As described in § 1.2, our strategy for proving Theorem 4 is to partition the sequences \(\mathbb X \) and \(\mathbb Y \) into blocks at each level \(j\ge 1\). Because of the symmetry between \(\mathbb X \) and \(\mathbb Y \) we only describe the procedure to form the blocks for the sequence \(\mathbb X \). For each \(j\ge 1\), we write \(\mathbb X =(X_1^{(j)},X_2^{(j)},\ldots )\) where we call each \(X_i^{(j)}\) a level \(j\) \(\mathbb X \)-block. Most of the time we will state explicitly that something is a level \(j\) block and drop the superscript \(j\). Each \(\mathbb X \)-block at level \(j\) is a concatenation of a number of level \((j-1)\) \(\mathbb X \)-blocks, where level \(0\) blocks are just the characters of the sequence \(\mathbb X \). At each level, we also have a recursive definition of “good” blocks. Let \(G_j^\mathbb{X }\) and \(G_j^\mathbb{Y }\) denote the sets of good \(\mathbb X \)-blocks and good \(\mathbb Y \)-blocks at the \(j\)-th level respectively. Now we are ready to describe the recursive construction of the blocks \(X_i^{(j)}\) for \(j\ge 1\).

3.1 Recursive construction of blocks

We only describe the construction for \(\mathbb X \). Let us suppose we have already constructed the blocks of the partition up to level \(j\) for some \(j\ge 0\) and we have \(\mathbb X =(X_1^{(j)},X_2^{(j)},\ldots )\). Also assume we have defined the “good” blocks at level \(j\), i.e., we know \(G_j^\mathbb{X }\). We can start off the recursion since both these assumptions hold for \(j=0\). We describe how to partition \(\mathbb X \) into level \((j+1)\) blocks: \(\mathbb X =(X_1^{(j+1)},X_2^{(j+1)},\ldots )\).

Recall that \(L_{j+1}=L_j^{\alpha }=L_0^{\alpha ^{j+1}}\). Suppose the first \(k\) (\(k\ge 0\)) blocks \(X_1^{(j+1)},\ldots , X_k^{(j+1)}\) at level \((j+1)\) have already been constructed and suppose that the rightmost level \(j\) sub-block of \(X_k^{(j+1)}\) is \(X_m^{(j)}\). Then \(X_{k+1}^{(j+1)}\) consists of the sub-blocks \(X_{m+1}^{(j)},X_{m+2}^{(j)},\ldots ,X_{m+l+L_j^3}^{(j)}\) where \(l>L_j^3+L_j^{\alpha -1}\) is selected in the following manner. Let \(W_{k+1,j+1}\) be a geometric random variable having \(\hbox {Geom}(L_j^{-4})\) distribution and independent of everything else. Then

$$\begin{aligned} l=\min \{s\ge L_j^3+L_j^{\alpha -1}+W_{k+1,j+1}: X_{m+s+i}^{(j)}\in G_j^\mathbb{X }~\text {for}~1\le i\le 2L_j^3\}. \end{aligned}$$

That such an \(l\) is finite with probability 1 will follow from our recursive estimates.

Put simply, our block construction mechanism at level \((j+1)\) is as follows:

Starting from the right boundary of the previous block, we include \(L_j^3\) many sub-blocks, then further \(L_j^{\alpha -1}\) many sub-blocks, then a \(Geom(L_j^{-4})\) many sub-blocks. Then we wait for the first occurrence of a run of \(2L_j^3\) many consecutive good sub-blocks, and end our block at the midpoint of this run.
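The mechanism just described can be sketched in Python on a finite list recording which sub-blocks are good. This is a toy illustration under stated assumptions: the names `geometric` and `next_block_length` are hypothetical, the parameters `a` and `b` play the roles of \(L_j^3\) and \(L_j^{\alpha -1}\), and the geometric variable is taken to be supported on \(\{1,2,\ldots \}\), a convention the text does not specify.

```python
import random
from typing import List, Optional


def geometric(p: float, rng: random.Random) -> int:
    """Number of Bernoulli(p) trials up to and including the first success."""
    n = 1
    while rng.random() >= p:
        n += 1
    return n


def next_block_length(good: List[bool], start: int, a: int, b: int, w: int) -> Optional[int]:
    """Number of sub-blocks in the block that begins at index `start`.

    After skipping a + b + w sub-blocks, wait for the first run of 2a
    consecutive good sub-blocks and cut the block at the midpoint of that run.
    Returns None if the finite list ends before such a run is found.
    """
    s = a + b + w
    while start + s + 2 * a <= len(good):
        if all(good[start + s:start + s + 2 * a]):
            return s + a  # the first half of the run belongs to this block
        s += 1
    return None
```

With `a = b = w = 1` and `good = [T, T, F, T, F, T, T, T]` the first block has 6 sub-blocks: it ends with one good sub-block and the next block starts with one, as in the description above.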

This somewhat complex choice of block structure is made for several reasons. It guarantees stretches of good sub-blocks at both ends of the block, thus ensuring these are not problematic when trying to embed one block into another. The fact that good blocks can be mapped into shorter or longer stretches of good blocks then allows us to line up sub-blocks in a potential embedding in many possible ways, which is crucial for the induction. Our blocks are not of fixed length. It would be problematic for our approach if, conditional on a block being long, it were likely to contain many bad sub-blocks. This is why we add the geometric term to the length: given that the block is long, it is most likely because the geometric random variable is large, not because of the presence of many bad sub-blocks. Finally, the construction implies that blocks will be independent.

We now record two simple but useful properties of the blocks thus constructed in the following observation. Once again a similar statement holds for \(\mathbb Y \)-blocks.

Observation 3.1

Let \(\mathbb X =(X_1^{(j+1)},X_2^{(j+1)},\ldots )=(X_1^{(j)}, X_2^{(j)}, \ldots )\) denote the partition of \(\mathbb X \) into blocks at levels \((j+1)\) and \(j\) respectively. Then the following hold.

-

1.

Let \(X_i^{(j+1)}=(X_{i_1}^{(j)},X_{i_1+1}^{(j)},\ldots X_{i_1+l}^{(j)})\). For \(i\ge 1\), \(X_{i_1+l+1-k}^{(j)}\in G_j^\mathbb{X }\) for each \(k\), \(1\le k \le L_j^3\). Further, if \(i>1\), then \(X_{i_1+k-1}^{(j)}\in G_j^\mathbb{X }\) for each \(k\), \(1\le k \le L_j^3\). That is, all blocks at level \((j+1)\), except possibly the leftmost one (\(X_1^{(j+1)}\)), are guaranteed to have at least \(L_j^3\) “good” level \(j\) sub-blocks at either end. Even \(X_1^{(j+1)}\) ends in \(L_j^3\) many good sub-blocks.

-

2.

The blocks \(X_1^{(j+1)},X_2^{(j+1)}, \ldots \) are independently distributed. In fact, \(X_2^{(j+1)}, X_3^{(j+1)}, \ldots \) are independently and identically distributed according to some law, say \(\mu _{j+1}^\mathbb{X }\). Furthermore, conditional on the event \(\{X_i^{(k)}\in G_k^\mathbb{X } ~\text {for}~ i=1,2,\ldots , L_k^3,~\text {for all}~k\le j\}\), the \((j+1)\)-th level blocks \(X_1^{(j+1)}, X_2^{(j+1)},\ldots \) are independently and identically distributed according to the law \(\mu _{j+1}^\mathbb{X }\).

From now on whenever we say “a (random) \(\mathbb X \)-block at level \(j\)”, we mean that it has law \(\mu _{j}^\mathbb{X }\), unless explicitly stated otherwise. Similarly let us denote the corresponding law of “a (random) \(\mathbb Y \)-block at level \(j\)” by \(\mu _{j}^\mathbb{Y }\). Also for convenience, we assume \(\mu _{0}^\mathbb{X }=\mu ^\mathbb{X }\) and \(\mu _0^\mathbb{Y }=\mu ^\mathbb{Y }\).

Also, for \(j\ge 0\), let \(\mu _{j,G}^\mathbb{X }\) denote the conditional law of an \(\mathbb X \) block at level \(j\), given that it is in \(G_j^\mathbb{X }\). We define \(\mu _{j,G}^\mathbb{Y }\) similarly.

We observe that we can construct a block with law \(\mu _{j+1}^\mathbb{X }\) (resp. \(\mu _{j+1}^\mathbb{Y }\)) in the following alternative manner without referring to the sequence \(\mathbb X \) (resp. \(\mathbb Y \)).

Observation 3.2

Let \(X_1,X_2,X_3,\ldots \) be a sequence of independent level \(j\) \(\mathbb X \)-blocks such that \(X_i\sim \mu _{j,G}^\mathbb{X }\) for \(1\le i \le L_j^3\) and \(X_i\sim \mu _{j}^\mathbb{X }\) for \(i> L_j^3\). Now let \(W\) be a \(\hbox {Geom}(L_j^{-4})\) variable independent of everything else. Define as before

$$\begin{aligned} l=\min \{s\ge L_j^3+L_j^{\alpha -1}+W: X_{s+i}\in G_j^\mathbb{X }~\text {for}~1\le i\le 2L_j^3\}. \end{aligned}$$

Then \(X=(X_1,X_2,\ldots ,X_{l+L_j^3})\) has law \(\mu _{j+1}^\mathbb{X }\).

Whenever we have a sequence \(X_1,X_2,\ldots \) satisfying the condition in the observation above, we shall call \(X\) the (random) level \((j+1)\) block constructed from \(X_1,X_2,\ldots \), and we shall denote the corresponding geometric variable by \(W_X\) and set \(T_X=l-L_j^3-L_j^{\alpha -1}\).

3.2 Embedding probabilities and semi-bad blocks

Now we make some definitions that we are going to use throughout our proof.

Definition 3.3

For \(j\ge 0\), let \(X\) be a block of \(\mathbb X \) at level \(j\) and let \(Y\) be a block of \(\mathbb Y \) at level \(j\). We define the embedding probability of \(X\) to be \(S_j^\mathbb{X }(X)=\mathbb P (X\hookrightarrow Y|X)\). Similarly we define \(S_j^\mathbb{Y }(Y)=\mathbb P (X\hookrightarrow Y|Y)\). As noted above the law of \(Y\) is \(\mu _j^\mathbb{Y }\) in the definition of \(S_j^\mathbb{X }\) and the law of \(X\) is \(\mu _j^\mathbb{X }\) in the definition of \(S_j^\mathbb{Y }\).

Notice that the case \(j=0\) in the above definitions corresponds to the definitions we had in § 1.1.

Definition 3.4

Let \(X\) be an \(\mathbb X \)-block at level \(j\). It is called “semi-bad” if \(X\notin G_j^\mathbb{X }\), \(S_j^\mathbb{X }(X)\ge 1-\frac{1}{20k_0R_{j+1}^{+}}\), \(|X|\le 10L_j\) and \(C_k\notin X\) for any \(k>L_j^m\). Here \(|X|\) denotes the number of \(\mathcal{C }^\mathbb{X }\) characters in \(X\). A “semi-bad” \(\mathbb Y \)-block at level \(j\) is defined similarly.

We denote the set of all semi-bad \(\mathbb X \)-blocks (resp. \(\mathbb Y \)-blocks) at level \(j\) by \(SB_j^\mathbb{X }\) (resp. \(SB_j^\mathbb{Y }\)).

Definition 3.5

Let \(\tilde{Y}=(Y_1,\ldots ,Y_{n})\) be a sequence of consecutive \(\mathbb Y \) blocks at level \(j\). \(\tilde{Y}\) is said to be a “strong sequence” if for every \(X\in SB_j^\mathbb{X }\)

Similarly a “strong” \(\mathbb X \)-sequence can also be defined.

3.3 Good blocks

To complete the description, we now need to give the definition of “good” blocks at level \((j+1)\), which we have alluded to above. With the definitions from the preceding section in hand, we can give this definition recursively. Suppose we already have definitions of “good” blocks up to level \(j\) (i.e., we have characterized \(G_k^\mathbb{X }\) for \(k\le j\)). Good blocks at level \((j+1)\) are then defined in the following manner. As usual we only give the definition for \(\mathbb X \)-blocks; the definition for \(\mathbb Y \)-blocks is analogous.

Let \(X^{(j+1)}=(X_{a+1}^{(j)},X_{a+2}^{(j)},\ldots , X_{a+n}^{(j)})\) be an \(\mathbb X \)-block at level \((j+1)\). Notice that we can form blocks at level \((j+1)\) since we have assumed that we already know \(G_j^\mathbb{X }\). Then we say \(X^{(j+1)}\in G_{j+1}^\mathbb{X }\) if the following conditions hold.

-

(i)

It starts with \(L_j^3\) good sub-blocks, i.e., \(X_{a+i}^{(j)}\in G_j^\mathbb{X }\) for \(1\le i \le L_j^3\).

-

(ii)

It contains at most \(k_0\) bad sub-blocks, i.e., \(\#\{1\le i \le n: X_{a+i}\notin G_{j}^\mathbb{X }\}\le k_0\).

-

(iii)

For each \(1\le i \le n\) such that \(X_{a+i}\notin G_{j}^\mathbb{X }\), \(X_{a+i}\in SB_j^\mathbb{X }\), i.e., the bad sub-blocks are only semi-bad.

-

(iv)

Every sequence of \(\lfloor L_j^{3/2} \rfloor \) consecutive level \(j\) sub-blocks is “strong”.

-

(v)

The length of the block satisfies \(n\le L_{j}^{\alpha -1}+L_j^5\).
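Conditions (i) to (v) translate into a simple predicate once the status of each sub-block is known. The Python sketch below is hypothetical throughout: `sub_good` and `sub_semi_bad` are Boolean lists describing the sub-blocks, `is_strong(lo, hi)` is an assumed callable deciding whether the sub-blocks with indices `lo, ..., hi - 1` form a strong sequence, and `a`, `window` and `max_len` stand for \(L_j^3\), \(\lfloor L_j^{3/2}\rfloor \) and \(L_j^{\alpha -1}+L_j^5\).

```python
from typing import Callable, Sequence


def is_good_block(sub_good: Sequence[bool], sub_semi_bad: Sequence[bool],
                  is_strong: Callable[[int, int], bool],
                  a: int, k0: int, window: int, max_len: int) -> bool:
    """Check conditions (i)-(v) for a level (j+1) block given its sub-blocks."""
    n = len(sub_good)
    # (i): the block starts with a good sub-blocks.
    if n < a or not all(sub_good[:a]):
        return False
    bad = [i for i in range(n) if not sub_good[i]]
    # (ii): at most k0 bad sub-blocks.
    if len(bad) > k0:
        return False
    # (iii): every bad sub-block is semi-bad.
    if not all(sub_semi_bad[i] for i in bad):
        return False
    # (iv): every window of consecutive sub-blocks is strong.
    if not all(is_strong(t, t + window) for t in range(n - window + 1)):
        return False
    # (v): the block is not too long.
    return n <= max_len
```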

Finally we define “segments” of a sequence of consecutive \(\mathbb X \) or \(\mathbb Y \) blocks at level \(j\). Notice that for \(j=0\) the following definition reduces to the definition given in § 1.1.

Definition 3.6

Let \(\tilde{X}=(X_1,X_2,\ldots )\) be a sequence of consecutive \(\mathbb X \)-blocks. For \(i_2 > i_1 \ge 1\), we call the subsequence \((X_{i_1},X_{i_1+1},\ldots , X_{i_2})\) the “\([i_1,i_2]\)-segment” of \(\tilde{X}\) denoted by \(\tilde{X}^{[i_1,i_2]}\). The “\([i_1,i_2]\)-segment” of a sequence of \(\mathbb Y \) blocks is also defined similarly. Also a segment is called a “good” segment if it consists of all good blocks.

4 Recursive estimates

Our proof of the general theorem depends on a collection of recursive estimates, all of which are proved together by induction. In this section we list these estimates for easy reference. The proofs of these estimates are provided in the next sections. We recall that for all \(j>0\), \(L_j=L_{j-1}^{\alpha }=L_0^{\alpha ^j}\) and for all \(j\ge 0\), \(R_j=4^j(2R)\), \(R_j^{-}=4^j(2-2^{-j})\) and \(R_j^{+}=4^jR^2(2+2^{-j})\). For \(j=0\), this definition of \(R_j\), \(R_j^{+}\) and \(R_j^{-}\) agrees with the definition given in § 1.1.
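For orientation, the scale parameters can be tabulated with a few lines of Python. The function name `scale_parameters` is hypothetical, and integer values of \(L_0\), \(\alpha \) and \(R\) are assumed purely so that the arithmetic is exact.

```python
from fractions import Fraction


def scale_parameters(j: int, L0: int, alpha: int, R: int):
    """Return (L_j, R_j, R_j^-, R_j^+) from the formulas recalled above."""
    L_j = L0 ** (alpha ** j)                            # L_j = L_0^(alpha^j)
    R_j = 4 ** j * 2 * R                                # R_j = 4^j (2R)
    R_minus = 4 ** j * (2 - Fraction(1, 2 ** j))        # R_j^- = 4^j (2 - 2^-j)
    R_plus = 4 ** j * R ** 2 * (2 + Fraction(1, 2 ** j))  # R_j^+ = 4^j R^2 (2 + 2^-j)
    return L_j, R_j, R_minus, R_plus
```

At level \(0\) this gives \(R_0=2R\), \(R_0^{-}=1\) and \(R_0^{+}=3R^2\).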

4.1 Tail estimate

-

I.

Let \(j\ge 0\). Let \(X\) be an \(\mathbb X \)-block at level \(j\) and let \(m_j=m+2^{-j}\). Then

$$\begin{aligned} \mathbb P (S_j^\mathbb{X }(X)\le p)\le p^{m_j}L_j^{-\beta }\quad \text {for}~~p\le 1-L_{j}^{-1}. \end{aligned}\tag{7}$$

Let \(Y\) be a \(\mathbb Y \)-block at level \(j\). Then

$$\begin{aligned} \mathbb P (S_j^\mathbb{Y }(Y)\le p)\le p^{m_j}L_j^{-\beta }\quad \text {for}~~p\le 1-L_{j}^{-1}. \end{aligned}\tag{8}$$

4.2 Length estimate

-

II.

For \(X\) an \(\mathbb X \)-block at level \(j\ge 0\),

$$\begin{aligned} \mathbb E [\exp (L_{j-1}^{-6}(|X|-(2-2^{-j})L_j))] \le 1. \end{aligned}\tag{9}$$

Similarly for \(Y\), a \(\mathbb Y \)-block at level \(j\), we have

$$\begin{aligned} \mathbb E [\exp (L_{j-1}^{-6}(|Y|-(2-2^{-j})L_j))] \le 1. \end{aligned}\tag{10}$$

For the case \(j=0\) we interpret Eqs. (9) and (10) by setting \(L_{-1}=L_0^{\alpha ^{-1}}\).
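As a quick illustration of how the length estimate is used (a routine consequence of Markov's inequality, not a display taken from the source), write \(c_j=2-2^{-j}\), a shorthand introduced only here. Then for every \(t\ge 0\), (9) gives

$$\begin{aligned} \mathbb P \left( |X|\ge c_jL_j+tL_{j-1}^{6}\right) =\mathbb P \left( \exp (L_{j-1}^{-6}(|X|-c_jL_j))\ge e^{t}\right) \le e^{-t}\,\mathbb E [\exp (L_{j-1}^{-6}(|X|-c_jL_j))]\le e^{-t}, \end{aligned}$$

so the length of a level \(j\) block exceeds \((2-2^{-j})L_j\) by more than \(tL_{j-1}^6\) only with probability at most \(e^{-t}\). The same bound holds for \(\mathbb Y \)-blocks by (10).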

4.3 Properties of good blocks

-

III.

Good blocks map to good blocks, i.e.,

$$\begin{aligned} X\in G_j^\mathbb{X }, Y\in G_j^\mathbb{Y } \Rightarrow X\hookrightarrow Y. \end{aligned}\tag{11}$$

-

IV.

Most blocks are good.

$$\begin{aligned} \mathbb P (X\in G_j^\mathbb{X })\ge 1-L_j^{-\delta }. \end{aligned}\tag{12}$$

$$\begin{aligned} \mathbb P (Y\in G_j^\mathbb{Y })\ge 1-L_j^{-\delta }. \end{aligned}\tag{13}$$

-

V.

Good blocks can be compressed or expanded. Let \(\tilde{X}=(X_1,X_2,\ldots )\) be a sequence of \(\mathbb X \)-blocks at level \(j\) and \(\tilde{Y}=(Y_1,Y_2,\ldots )\) be a sequence of \(\mathbb Y \)-blocks at level \(j\). Further we suppose that \(\tilde{X}^{[1,R_j^{+}]}\) and \(\tilde{Y}^{[1,R_j^{+}]}\) are “good segments”. Then for every \(t\) with \(R_j^{-}\le t \le R_j^{+}\),

$$\begin{aligned} \tilde{X}^{[1,R_j]}\hookrightarrow \tilde{Y}^{[1,t]} \quad \text {and}\quad \tilde{X}^{[1,t]}\hookrightarrow \tilde{Y}^{[1,R_j]}. \end{aligned}\tag{14}$$

Theorem 4.1

(Recursive Theorem) For \(\alpha \), \(\beta \), \(\delta \), \(m\), \(k_0\) and \(R\) as in Eq. (4), the following holds for all large enough \(L_0\). If the recursive estimates (7), (8), (9), (10), (11), (12), (13) and (14) hold at level \(j\) for some \(j\ge 0\) then all the estimates hold at level \((j+1)\) as well.

Before giving a proof of Theorem 4.1 we show how using this theorem we can prove the general theorem.

Proof of Theorem 4

Let \(\mathbb X =(X_1,X_2,\ldots )\), \(\mathbb Y =(Y_1,Y_2,\ldots )\) be as in the statement of the theorem. Let \(\alpha , \beta , \delta , m, k_0, R\) be as in Theorem 4.1 and let \(L_0\) be a sufficiently large constant such that the conclusion of Theorem 4.1 holds. For \(j\ge 0\), let \(\mathbb X =(X_1^{(j)}, X_2^{(j)},\ldots )\) denote the partition of \(\mathbb X \) into level \(j\) blocks as described above. Similarly let \(\mathbb Y =(Y_1^{(j)}, Y_2^{(j)},\ldots )\) denote the partition of \(\mathbb Y \) into level \(j\) blocks. Notice that this partition depends on the parameters \(\alpha , k_0, R\) and \(L_0\). Recall that the characters are the blocks at level \(0\), i.e., \(X_i^{(0)}=X_i\) and \(Y_i^{(0)}=Y_i\) for all \(i\ge 1\). Hence the hypotheses of Theorem 4 imply that (7), (8), (12), (13) hold for \(j=0\). It follows from the definition of \(R\)-embedding that (11) and (14) also hold at level \(0\). That (9) and (10) hold for \(j=0\) is trivial. Hence the estimates \(I-V\) hold at level \(j=0\). Using Theorem 4.1, it now follows that (7), (8), (9), (10), (11), (12), (13) and (14) hold for each \(j\ge 0\).

Let \(\mathcal T _j^\mathbb{X }=\{X_k^{(j)}\in G_{j}^\mathbb{X }, 1\le k \le L_j^3\}\) be the event that the first \(L_j^3\) blocks at level \(j\) are good. Notice that on the event \(\cap _{k=0}^{j-1} \mathcal T _k^\mathbb{X }=\mathcal T _{j-1}^\mathbb{X }\), \(X_1^{(j)}\) has distribution \(\mu _j^\mathbb{X }\) by Observation 3.1 and so \(\{X_i^{(j)}\}_{i\ge 1}\) is i.i.d. with distribution \(\mu _j^\mathbb{X }\). Hence it follows from Eq. (12) that \(\mathbb P (\mathcal T _j^\mathbb{X }|\cap _{k=0}^{j-1} \mathcal T _k^\mathbb{X })\ge (1-L_j^{-\delta })^{L_j^3}\). Similarly defining \(\mathcal T _j^\mathbb{Y }=\{Y_k^{(j)}\in G_{j}^\mathbb{Y }, 1\le k \le L_j^3\}\) we get using (13) that \(\mathbb P (\mathcal T _j^\mathbb{Y }|\cap _{k=0}^{j-1} \mathcal T _k^\mathbb{Y })\ge (1-L_j^{-\delta })^{L_j^3}\).

Let \(\mathcal A =\cap _{j\ge 0}(\mathcal T _j^\mathbb{X }\cap \mathcal T _j^\mathbb{Y })\). It follows from above that \(\mathbb P (\mathcal A )>0\) since \(\delta >3\) and \(L_0\) sufficiently large. Also, notice that, on \(\mathcal A \), \(X_1^{(j)}\hookrightarrow Y_1^{(j)}\) for each \(j\ge 0\). Since \(|X_1^{(j)}|, |Y_1^{(j)}|\rightarrow \infty \) as \(j\rightarrow \infty \), it follows that there exists a subsequence \(j_n \rightarrow \infty \) such that there exist \(R\)-embeddings of \(X_1^{(j_n)}\) into \(Y_1^{(j_n)}\) with associated partitions \((i_0^n,i_1^n,\ldots ,i_{\ell _n}^n)\) and \(({i^{\prime }}_0^n,{i^{\prime }}_1^n,\ldots ,{i^{\prime }}_{\ell _n}^n)\) with \(\ell _n\rightarrow \infty \) satisfying the conditions of Definition 1.2 and such that for all \(r\ge 0\) we have that \(i_r^n \rightarrow i_r^\star \) and \({i^{\prime }}_r^n\rightarrow {i^{\prime }}_r^\star \) as \(n\rightarrow \infty \). These limiting partitions of \(\mathbb N \), \((i_0^\star ,i_1^\star ,\ldots )\) and \(({i^{\prime }}_0^\star ,{i^{\prime }}_1^\star ,\ldots )\), satisfy the conditions of Definition 1.2 implying that \(\mathbb X \hookrightarrow _R \mathbb Y \). It follows that \(\mathbb P (\mathbb X \hookrightarrow \mathbb Y )>0\). \(\square \)
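The positivity of \(\mathbb P (\mathcal A )\) claimed in the proof above can be spelled out as follows. This is a sketch under the assumptions that \(\alpha >1\) (so that \(L_j=L_0^{\alpha ^j}\) grows doubly exponentially) and that \(L_0\) is large enough that \(L_j^{-\delta }\le \frac{1}{2}\) for all \(j\); it uses the elementary inequality \(1-x\ge e^{-2x}\) for \(0\le x\le \frac{1}{2}\) and the independence of \(\mathbb X \) and \(\mathbb Y \):

$$\begin{aligned} \mathbb P (\mathcal A )=\prod _{j\ge 0}\mathbb P (\mathcal T _j^\mathbb{X }|\cap _{k=0}^{j-1} \mathcal T _k^\mathbb{X })\,\mathbb P (\mathcal T _j^\mathbb{Y }|\cap _{k=0}^{j-1} \mathcal T _k^\mathbb{Y })\ge \prod _{j\ge 0}(1-L_j^{-\delta })^{2L_j^3}\ge \exp \Big (-4\sum _{j\ge 0}L_j^{3-\delta }\Big )>0, \end{aligned}$$

where the sum converges because \(\delta >3\) and \(L_j\) grows at least geometrically in \(j\).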

The remainder of the paper is devoted to the proof of the estimates in the induction. Throughout these sections we assume that the estimates \(I-V\) hold for some level \(j\ge 0\) and then prove the estimates at level \(j+1\). Combined they complete the proof of Theorem 4.1.

From now on, in every Theorem, Proposition and Lemma we state, we implicitly assume that all the recursive estimates hold up to level \(j\), that the parameters satisfy the constraints described in § 1.2.1 and that \(L_0\) is sufficiently large.

5 Notation for maps: generalised mappings

Since in our estimates we will need to map segments of sub-blocks to segments of sub-blocks we need a notation for constructing such mappings. Let \(A,A^{\prime } \subseteq \mathbb N \), be two sets of consecutive integers. Let \(A=\{n_1+1,\ldots ,n_1+n\}\), \(A^{\prime }=\{n_1^{\prime }+1,\ldots , n_1^{\prime }+n^{\prime }\}\). Let

denote the set of partitions of \(A\). For \(P= \{n_1=i_0<i_1<\cdots <i_z=n_1+n\}\in \) \(\mathcal P _{A}\), let us denote the “length” of \(P\) by \(l(P)=z\). Also let the set of all blocks of \(P\) be denoted by \(\mathcal B (P)=\{[i_r+1,i_{r+1}]\cap \mathbb Z : 0\le r\le z-1\}\).

5.1 Generalised mappings

Now let \(\Upsilon \) denote a “generalised mapping” which assigns to the tuple \((A,A^{\prime })\) a triplet \((P,P^{\prime },\tau )\), where \(P\in \mathcal P _{A}\), \(P^{\prime }\in \mathcal P _{A^{\prime }}\), with \(l(P)=l(P^{\prime })\), and \(\tau : \mathcal B (P) \mapsto \mathcal B (P^{\prime })\) is the unique increasing bijection from the blocks of \(P\) to the blocks of \(P^{\prime }\). Let \(P=\{n_1=i_0<i_1<\cdots <i_{l(P)}=n_1+n\}\) and \(P^{\prime }=\{n_1^{\prime }=i^{\prime }_0<i^{\prime }_1<\cdots <i^{\prime }_{l(P^{\prime })}=n_1^{\prime }+n^{\prime }\}\). Then by “\(\tau \) is an increasing bijection” we mean that \(l(P)=l(P^{\prime })=z\) (say), and \(\tau ([i_r+1,i_{r+1}]\cap \mathbb Z )=[i^{\prime }_r+1,i^{\prime }_{r+1}]\cap \mathbb Z \). A generalised mapping \(\Upsilon \) of \((A,A^{\prime })\) (say, \(\Upsilon (A,A^{\prime })=(P,P^{\prime },\tau )\)) is called “admissible” if the following holds.

If \(\{x\}\in \mathcal B (P)\) is a singleton, then \(\tau (\{x\})=\{y\}\) (say) is also a singleton. Similarly, if \(\{y\}\in \mathcal B (P^{\prime })\) is a singleton, then \(\tau ^{-1}(\{y\})\) is also a singleton. Note that since we already require \(\tau \) to be a bijection, it makes sense to talk about \(\tau ^{-1}\) as a function here.

If \(\tau (\{x\})=\{y\}\) or \(\tau ^{-1}(\{y\})=\{x\}\), we simply denote this by \(\tau (x)=y\) and \(\tau ^{-1}(y)=x\) respectively.

Let \(B\subseteq A\) and \(B^{\prime }\subseteq A^{\prime }\) be two subsets of \(A,A^{\prime }\) respectively. An admissible generalised mapping \(\Upsilon \) of \((A,A^{\prime })\) is said to be of \(Class~G^j\) with respect to \((B,B^{\prime })\) (we denote this by saying \(\Upsilon (A,A^{\prime },B,B^{\prime })\) is admissible of \(Class~G^j\)) if it satisfies the following conditions:

-

(i)

If \(x\in B\), then the singleton \(\{x\}\in \mathcal B (P)\). Similarly if \(y\in B^{\prime }\), then \(\{y\}\in \mathcal B (P^{\prime })\).

-

(ii)

If \(i_{r+1}>i_r+1\) (equivalently, \(i^{\prime }_{r+1}>i^{\prime }_r+1\)), then \((i_{r+1}-i_{r})\wedge (i^{\prime }_{r+1}-i^{\prime }_{r})> L_j\) and \(\frac{1-2^{-(j+5/4)}}{R}\le \frac{i^{\prime }_{r+1}-i^{\prime }_{r}}{i_{r+1}-i_{r}} \le R(1+2^{-(j+5/4)})\).

-

(iii)

For all \(x\in B\), \(\tau (x)\notin B^{\prime }\).
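Admissibility and the three conditions of \(Class~G^j\) can be checked mechanically for a given pair of partitions. In the Python sketch below a partition is represented by its list of cut points \(i_0<i_1<\cdots <i_z\); this representation and the names `blocks` and `is_class_G` are assumptions made for illustration.

```python
from typing import List, Set, Tuple


def blocks(P: List[int]) -> List[Tuple[int, int]]:
    """Blocks [i_r + 1, i_{r+1}] of the partition with cut points P."""
    return [(P[r] + 1, P[r + 1]) for r in range(len(P) - 1)]


def is_class_G(P: List[int], Pp: List[int], B: Set[int], Bp: Set[int],
               L_j: float, R: float, j: int) -> bool:
    """Check admissibility and conditions (i)-(iii) of Class G^j."""
    if len(P) != len(Pp):
        return False
    low = (1 - 2 ** (-(j + 1.25))) / R
    high = R * (1 + 2 ** (-(j + 1.25)))
    singles_x, singles_y = set(), set()
    for (lo, hi), (lop, hip) in zip(blocks(P), blocks(Pp)):
        n, n_prime = hi - lo + 1, hip - lop + 1
        # Admissibility: singletons correspond to singletons.
        if (n == 1) != (n_prime == 1):
            return False
        if n == 1:
            # (iii): a point of B is never mapped to a point of B'.
            if lo in B and lop in Bp:
                return False
            singles_x.add(lo)
            singles_y.add(lop)
        else:
            # (ii): long blocks on both sides with a controlled length ratio.
            if min(n, n_prime) <= L_j or not (low <= n_prime / n <= high):
                return False
    # (i): every point of B and of B' forms a singleton block.
    return B <= singles_x and Bp <= singles_y
```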

Similarly, an admissible generalised mapping \(\Upsilon (A,A^{\prime })=(P,P^{\prime },\tau )\) is called of \(Class~H_1^j\) with respect to \(B\) if it satisfies the following conditions:

-

(i)

If \(x\in B\), then \(\{x\}\in \mathcal B (P)\).

-

(ii)

If \(i_{r+1}>i_r+1\) (equivalently, \(i^{\prime }_{r+1}>i^{\prime }_r+1\)), then \((i_{r+1}-i_{r})\wedge (i^{\prime }_{r+1}-i^{\prime }_{r})> L_j\) and \(\frac{1-2^{-(j+5/4)}}{R} \le \frac{i^{\prime }_{r+1}-i^{\prime }_{r}}{i_{r+1}-i_{r}}\le R(1+2^{-(j+5/4)})\).

-

(iii)

For all \(x\in B\), \(L_j^3< \tau (x)-n_1 \le n^{\prime }-L_j^3\).

Finally, an admissible generalised mapping \(\Upsilon ^j(A,A^{\prime })=(P,P^{\prime },\tau )\) is called of \(Class~H_2^j\) with respect to \(B\) if it satisfies the following conditions:

-

(i)

If \(x\in B\), then \(\{x\}\in \mathcal B (P)\).

-

(ii)

\(L_j^3< \tau (x)-n_1\le n^{\prime }-L_j^3\) for all \(x\in B\).

-

(iii)

If \([i_{h}+1, i_{h+1}]\cap \mathbb Z \in \mathcal B (P)\) and \(i_{h}+1\ne i_{h+1}\) then \(i_{h+1}-i_{h}=R_j\) and \(R_j^{-}\le i^{\prime }_{h+1}-i^{\prime }_{h}\le R_{j}^{+}\).

5.2 Generalised mapping induced by a pair of partitions

Let \(A,A^{\prime },B,B^{\prime }\) be as above. By a “marked partition pair” of \((A,A^{\prime })\) we mean a triplet \((P_{*},P^{\prime }_{*},Z)\) where \(P_{*}=\{n_1=i_0<i_1<\cdots <i_{l(P_{*})}=n_1+n\}\in \mathcal P _{A}\) and \(P^{\prime }_{*}=\{n_1^{\prime }=i^{\prime }_0<i^{\prime }_1<\cdots <i^{\prime }_{l(P^{\prime }_{*})}=n_1^{\prime }+n^{\prime }\}\in \mathcal P _{A^{\prime }}\), \(l(P_{*})=l(P^{\prime }_{*})\) and \(Z\subseteq [l(P_{*})-1]\) is such that \(r\in Z\Rightarrow i_{r+1}-i_r=i^{\prime }_{r+1}-i^{\prime }_r\).

It is easy to see that a “marked partition pair” induces a generalised mapping \(\Upsilon \) of \((A,A^{\prime },B,B^{\prime })\) in the following natural way.

Let \(P\) be the partition of \(A\) whose blocks are given by

Similarly let \(P^{\prime }\) be the partition of \(A^{\prime }\) whose blocks are given by

Clearly, \(l(P_{*})=l(P^{\prime }_{*})\) and the condition in the definition of \(Z\) implies that \(l(P)=l(P^{\prime })\). Let \(\tau \) denote the increasing bijection from \(\mathcal B (P)\) to \(\mathcal B (P^{\prime })\). Clearly in this case \(\Upsilon (A,A^{\prime })=(P,P^{\prime },\tau )\) is a generalised mapping and is called the generalised mapping induced by the marked partition pair (\(P_{*},P^{\prime }_{*},Z\)).
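The induced mapping can be illustrated with a short Python sketch. It rests on an assumption that is our reading of the construction, not a formula stated in the text here: blocks of \(P_{*}\) and \(P^{\prime }_{*}\) whose index lies in \(Z\) are split into singletons, and all other blocks are kept whole. This reading is consistent with the remark that the condition on \(Z\) forces \(l(P)=l(P^{\prime })\). The function name `induced_mapping` and the representation of a block as a pair `(first, last)` are hypothetical.

```python
def induced_mapping(P_star, P_star_prime, Z):
    """Blocks of P, P' and the increasing bijection tau between them.

    P_star, P_star_prime: increasing lists of partition points i_0 < ... < i_l.
    Z: set of 0-based block indices r with equal block lengths in both partitions.
    ASSUMPTION (not taken from the text): blocks indexed by Z are split into
    singletons, every other block [i_r + 1, i_{r+1}] is kept as one block.
    """
    if len(P_star) != len(P_star_prime):
        raise ValueError("partitions must have equal length")
    for r in Z:
        if P_star[r + 1] - P_star[r] != P_star_prime[r + 1] - P_star_prime[r]:
            raise ValueError("marked blocks must have equal lengths")

    def blocks(points):
        out = []
        for r in range(len(points) - 1):
            first, last = points[r] + 1, points[r + 1]
            if r in Z:
                # marked block: one singleton block per element
                out.extend((i, i) for i in range(first, last + 1))
            else:
                out.append((first, last))
        return out

    P, P_prime = blocks(P_star), blocks(P_star_prime)
    # Equal marked lengths guarantee len(P) == len(P_prime),
    # so pairing blocks in order gives the increasing bijection.
    tau = dict(zip(P, P_prime))
    return P, P_prime, tau
```

For instance, with partition points `[0, 3, 5, 9]` and `[0, 4, 6, 11]` and `Z = {1}`, the middle blocks of length 2 are split into two singletons on each side, and both induced partitions have four blocks.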

The following lemma gives conditions under which an induced generalised mapping is admissible. The proof is straightforward and hence omitted.

Lemma 5.1

Let \(A,A^{\prime },B,B^{\prime }\) be as above. Let \(P_{*}=\{n_1=i_0<i_1<\cdots <i_{l(P_{*})}=n_1+n\}\in \mathcal P _{A}\) and \(P^{\prime }_{*}=\{n_1^{\prime }=i^{\prime }_0<i^{\prime }_1<\cdots <i^{\prime }_{l(P^{\prime }_{*})}=n_1^{\prime }+n^{\prime }\}\in \mathcal P _{A^{\prime }}\) be partitions of \(A\) and \(A^{\prime }\) respectively of equal length. Let \(B_{P_*}=\{r: B\cap [i_r+1,i_{r+1}]\ne \emptyset \}\) and \(B_{P^{\prime }_{*}}=\{r: B^{\prime } \cap [i^{\prime }_r+1,i^{\prime }_{r+1}]\ne \emptyset \}\). Suppose the following conditions hold.

-

(i)

\((P_{*}, P^{\prime }_{*}, B_{P_*}\cup B_{P^{\prime }_{*}})\) is a marked partition pair.

-

(ii)

For \(r\notin (B_{P_*}\cup B_{P^{\prime }_{*}})\), \((i_{r+1}-i_{r})\wedge (i^{\prime }_{r+1}-i^{\prime }_r)>L_j\) and \(\frac{1-2^{-(j+5/4)}}{R}\le \frac{i^{\prime }_{r+1}-i^{\prime }_{r}}{i_{r+1}-i_{r}} \le R(1+2^{-(j+5/4)})\).

-

(iii)

\(B_{P_*} \cap B_{P^{\prime }_{*}}=\emptyset \).

Then the induced generalised mapping \(\Upsilon (A,A^{\prime },B,B^{\prime })\) is admissible of \(Class~G^j\).
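The three hypotheses of Lemma 5.1 are directly checkable for concrete data. The following Python sketch is only an illustration: the function names and the convention of 0-based block indices \(r\) are hypothetical, the parameters `L_j`, `R` and `j` are passed in as plain numbers, and the index-range requirement \(Z\subseteq [l(P_{*})-1]\) from the definition of a marked partition pair is not checked.

```python
def marked_indices(points, bad):
    """Indices r such that the block [i_r + 1, i_{r+1}] contains a bad point."""
    return {r for r in range(len(points) - 1)
            if any(points[r] < b <= points[r + 1] for b in bad)}


def satisfies_lemma_5_1(P_star, P_star_prime, B, B_prime, L_j, R, j):
    """Check conditions (i)-(iii) of Lemma 5.1 (illustrative sketch only)."""
    if len(P_star) != len(P_star_prime):
        return False
    BP = marked_indices(P_star, B)
    BPp = marked_indices(P_star_prime, B_prime)
    eps = 2.0 ** (-(j + 1.25))  # the quantity 2^{-(j+5/4)}
    for r in range(len(P_star) - 1):
        d = P_star[r + 1] - P_star[r]
        dp = P_star_prime[r + 1] - P_star_prime[r]
        if r in BP | BPp:
            # (i): marked blocks must have equal lengths
            if d != dp:
                return False
        else:
            # (ii): unmarked blocks are long and have comparable lengths
            if min(d, dp) <= L_j:
                return False
            if not ((1 - eps) / R <= dp / d <= R * (1 + eps)):
                return False
    # (iii): no block index is marked on both sides
    return not (BP & BPp)
```

With `P_star = [0, 10, 11, 25]`, `P_star_prime = [0, 12, 13, 30]`, `B = {11}`, `B_prime = set()`, `L_j = 5`, `R = 2` and `j = 0`, all three conditions hold; adding the point 13 to `B_prime` violates condition (iii).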

The usefulness of making these abstract definitions follows from the following lemma and the next couple of propositions.

Lemma 5.2

Let \(X=(X_1,X_2,\ldots )\) be a sequence of \(\mathbb X \) blocks at level \(j\) and \(Y=(Y_1,Y_2,\ldots )\) be a sequence of \(\mathbb Y \) blocks at level \(j\). Further suppose that \(n,n^{\prime }\) are such that \(X^{[1,n]}\) and \(Y^{[1,n^{\prime }]}\) are both “good” segments, \(n>L_j\) and \(\frac{1-2^{-(j+5/4)}}{R}\le \frac{n^{\prime }}{n}\le R(1+2^{-(j+5/4)})\). Then \(X^{[1,n]}\hookrightarrow Y^{[1,n^{\prime }]}\).

Proof

Let us write \(n=kR_j+r\) where \(0\le r<R_j\) and \(k\in \mathbb N \). Now let \(s=[\frac{n^{\prime }-r}{k}]\). Define \(0=t_0<t_1<t_2<\cdots <t_k=n^{\prime }-r\le t_{k+1}=n^{\prime }\) such that for all \(i\le k\), \(t_{i}-t_{i-1}=s\) or \(s+1\).

Claim

\(R_j^- \le s \le R_j^{+}-1\).

Proof of Claim

From \(\frac{n^{\prime }}{n}\le R(1+2^{-(j+5/4)})\) it follows that,

Since \(n>L_j\) and \(L_0\) is sufficiently large, we have \(\frac{1}{k}\le \frac{2R_j}{L_j}\le 2^{-(j+13/4)}(2^{1/4}-1)\). It follows from the above that

the last inequality follows as \(2^jR^2(2^{1/4}-1)\ge 2^{9/4}\) for all \(j\) since \(R>10\). Hence \(s\le R_j^{+}-1\).

To prove the other inequality in the claim, we note that it follows from \(\frac{1-2^{-(j+5/4)}}{R}\le \frac{n^{\prime }}{n}\) that

This in turn implies that

where the last inequality follows from the fact that for \(L_0\) sufficiently large we have for all \(j\ge 0\), \(k\ge \frac{L_j}{2R_j}\ge \frac{(2R+1)2^{j+5/4}}{2^{1/4}-1}\). This completes the proof of the claim.

Now, from (11) and (14) it follows that, \(X^{[iR_j+1,(i+1)R_j]}\hookrightarrow Y^{[t_i+1,t_{i+1}]}\) for \(0\le i <k\) and \(X^{[kR_j+1,n]}\hookrightarrow Y^{[t_k+1,t_{k+1}]}\). The lemma follows. \(\square \)
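The splitting used in this proof is easy to carry out explicitly. The sketch below computes \(k\), \(r\), \(s\) and the points \(t_0,\ldots ,t_{k+1}\) for given \(n\), \(n^{\prime }\) and \(R_j\). The function name `split_points` is hypothetical, and placing the increments of size \(s+1\) first is one arbitrary choice among many; the proof only needs each increment to be \(s\) or \(s+1\).

```python
def split_points(n, n_prime, R_j):
    """Return (k, r, s, t) with n = k*R_j + r and t = [t_0, ..., t_{k+1}].

    Each difference t_i - t_{i-1} for i <= k is s or s + 1,
    t_k = n_prime - r and t_{k+1} = n_prime.
    """
    k, r = divmod(n, R_j)
    if k == 0 or n_prime < r:
        raise ValueError("need n >= R_j and n_prime >= r")
    # s = floor((n' - r) / k); 'extra' increments must be s + 1
    s, extra = divmod(n_prime - r, k)
    t = [0]
    for i in range(k):
        t.append(t[-1] + s + (1 if i < extra else 0))
    t.append(n_prime)  # final (possibly empty) piece of length r
    return k, r, s, t
```

For example, `split_points(23, 40, 5)` gives \(k=4\), \(r=3\), \(s=9\) and the points `[0, 10, 19, 28, 37, 40]`: four segments of \(X\) of length \(R_j=5\) are matched to segments of \(Y\) of length 9 or 10, and the remaining 3 blocks of \(X\) are matched to the last 3 blocks of \(Y\).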

Let \(X=(X_1,X_2,X_3,\ldots ,X_n)\) be an \(\mathbb X \)-block (or a segment of \(\mathbb X \)-blocks) at level \((j+1)\) where the \(X_i\)’s denote the \(j\)-level sub-blocks constituting it. Similarly, let \(Y=(Y_1,Y_2,Y_3,\ldots , Y_{n^{\prime }})\) be a \(\mathbb Y \)-block (or a segment of \(\mathbb Y \)-blocks) at level \((j+1)\). Let \(B_X=\{i: X_i\notin G_j^\mathbb{X }\}=\{l_1<l_2<\cdots <l_{K_X}\}\) denote the positions of “bad” level \(j\) \(\mathbb X \)-subblocks. Similarly let \(B_Y=\{i: Y_i\notin G_j^\mathbb{Y }\}=\{l^{\prime }_1<l^{\prime }_2<\cdots <l^{\prime }_{K_Y}\}\) be the positions of “bad” \(Y\)-subblocks.

We next state an easy proposition.

Proposition 5.3

Let \(X,Y,B_X,B_Y\) be as above. Suppose there exists a generalised mapping \(\Upsilon \) given by \(\Upsilon ([n],[n^{\prime }],B_X,B_Y)=(P,P^{\prime },\tau )\) which is admissible and is of \(Class~G^j\). Further, suppose, for \(1\le i\le K_X\), \(X_{l_i}\hookrightarrow Y_{\tau (l_i)}\) and for each \(1\le i\le K_Y\), \(X_{\tau ^{-1}(l^{\prime }_i)}\hookrightarrow Y_{l^{\prime }_i}\). Then \(X\hookrightarrow Y\).

Proof

Let \(P,P^{\prime }\) be as in the statement of the proposition with \(l(P)=l(P^{\prime })=z\). Let us fix \(r\), \(0\le r\le z-1\). From the definition of an admissible mapping, it follows that there are three cases to consider.

-

\(i_{r+1}-i_r=i^{\prime }_{r+1}-i^{\prime }_{r}=1\) and either \(i_{r}+1\in B_X\) or \(i^{\prime }_{r}+1\in B_Y\). In either case it follows from the hypothesis that \(X_{i_{r}+1}\hookrightarrow Y_{i^{\prime }_{r}+1}\).

-

\(i_{r+1}-i_r=i^{\prime }_{r+1}-i^{\prime }_{r}=1\), \(i_{r}+1\notin B_X\), \(i^{\prime }_{r}+1\notin B_Y\). In this case \(X_{i_{r}+1}\hookrightarrow Y_{i^{\prime }_{r}+1}\) follows from the inductive hypothesis (11).

-

\(i_{r+1}-i_r\ne 1\). In this case both \(X^{[i_r+1,i_{r+1}]}\) and \(Y^{[i^{\prime }_r+1,i^{\prime }_{r+1}]}\) are good segments, and it follows from Lemma 5.2 that \(X^{[i_r+1,i_{r+1}]}\hookrightarrow Y^{[i^{\prime }_r+1,i^{\prime }_{r+1}]}\).

Hence for all \(r\), \(0\le r\le z-1\), \(X^{[i_{r}+1,i_{r+1}]}\hookrightarrow Y^{[i^{\prime }_r+1,i^{\prime }_{r+1}]}\). It follows that \(X\hookrightarrow Y\), as claimed. \(\square \)

In the same vein, we state the following proposition, whose proof is essentially similar and hence omitted.

Proposition 5.4

Let \(X\), \(Y\), \(B_X\) be as before. Suppose there exists a generalised mapping \(\Upsilon \) given by \(\Upsilon ([n],[n^{\prime }])=(P,P^{\prime },\tau )\) which is admissible and is of \(Class~H_1^j\) or \(H_2^j\) with respect to \(B_X\). Further, suppose, for \(1\le i\le K_X\), \(X_{l_i}\hookrightarrow Y_{\tau (l_i)}\) and for each \(i^{\prime }\in [n^{\prime }] {\setminus } \{\tau (l_i): 1\le i \le K_X\}\), \(Y_{i^{\prime }}\in G_j^\mathbb{Y }\). Then \(X\hookrightarrow Y\).

6 Constructions

In this section we provide the constructions of generalised mappings which we will use in later sections to estimate the probabilities that certain \(\mathbb X \)-blocks can be mapped to certain \(\mathbb Y \)-blocks.

Proposition 6.1

Let \(j\ge 0\) and \(n,n^{\prime }>L_j^{\alpha -1}\) such that

Let \(B=\{l_1<l_2<\cdots <l_{k_x}\}\subseteq [n]\) and \(B^{\prime }=\{l^{\prime }_1<l^{\prime }_2<\cdots <l^{\prime }_{k_y}\} \subseteq [n^{\prime }]\) be such that \(l_1,l^{\prime }_1>L_j^3; (n-l_{k_x}),(n^{\prime }-l^{\prime }_{k_y})\ge L_j^3\), \(k_x, k_y \le k_0R_{j+1}^{+}\). Then there exists a family of admissible generalised mappings \(\Upsilon _h\) for \(1\le h \le L_j^2\), such that \(\Upsilon _h([n],[n^{\prime }],B,B^{\prime })=(P_h,P^{\prime }_h,\tau _h)\) is of class \(G^j\) and such that for \(1\le h\le L_j^2\), \(1\le i\le k_x\), \(1\le r \le k_y\), \(\tau _h(l_i)=\tau _{1}(l_i)+h-1\) and \(\tau _h^{-1}(l^{\prime }_r)=\tau _1^{-1}(l^{\prime }_r)-h+1\).

To prove Proposition 6.1 we need the following two lemmas.

Lemma 6.2

Assume the hypotheses of Proposition 6.1. Then there exists a piecewise linear increasing bijection \(\psi : [0,n]\mapsto [0,n^{\prime }]\) and two partitions \(Q\) and \(Q^{\prime }\) of \([0,n]\) and \([0,n^{\prime }]\) respectively of equal length (\(=q\), say), given by \(Q=\{0=t_0<t_1<\cdots <t_{q-1}<t_q=n\}\) and \(Q^{\prime }=\{0=\psi (t_0)<\psi (t_1)<\cdots <\psi (t_{q-1})<\psi (t_q)=n^{\prime }\}\) satisfying the following properties:

-

1.

None of the intervals \([t_{r-1}, t_r]\) intersect both \(B\) and \(\psi ^{-1}(B^{\prime })\) but each intersects \(B\cup \psi ^{-1}(B^{\prime })\). Hence, none of the intervals \([\psi (t_{r-1}),\psi (t_r)]\) intersect both \(B^{\prime }\) and \(\psi (B)\) but each intersects \(B^{\prime } \cup \psi (B)\).

-

2.

For all \(a,b\); \(0\le a < b \le n\), \(\frac{1-2^{-(j+3/2)}}{R}\le \frac{\psi (b)-\psi (a)}{b-a} \le R(1+2^{-(j+3/2)})\).

-

3.

Suppose \(i\in (B \cup \psi ^{-1}(B^{\prime }))\cap [t_{r-1},t_r]\). Then \(|i-t_{r-1}|\wedge |t_{r}-i| \ge L_j^{9/4}\). Similarly if \(i^{\prime }\in (B^{\prime } \cup \psi (B))\cap [\psi (t_{r-1}),\psi (t_r)]\), then \(|i^{\prime }-\psi (t_{r-1})|\wedge |\psi (t_{r})-i^{\prime }| \ge L_j^{9/4}\).

Note that in the statement of the above lemma, \(t_i\) are arbitrary real numbers and not necessarily integers.

Proof

Let us define a family of maps \(\psi _s: [0,n]\rightarrow [0,n^{\prime }]\), \(0\le s \le L_j^{5/2}\) as follows:

It is easy to see that \(\psi _s\) is a piecewise linear bijection for each \(s\) with the piecewise linear inverse being given by

Notice that since \(\alpha >4\), for \(L_0\) sufficiently large, we get from (15) that \(\frac{1-2^{-(j+13/8)}}{R}\le \frac{n^{\prime }-L_j^3}{n-L_j^3} \le R(1+2^{-(j+13/8)})\). Since each \(\psi _s\) is piecewise linear, it follows that each \(\psi _s\) satisfies condition (2) in the statement of the lemma.

Let \(S\) be distributed uniformly on \([0,L_j^{5/2}]\), and consider the random map \(\psi _S\). Let

It follows that for \(i\in B, i^{\prime }\in B^{\prime }\), \(\mathbb P (|\psi _S(i)-i^{\prime }|< 2L_j^{9/4})\le \frac{8RL_j^{9/4}}{L_j^{5/2}}=\frac{8R}{L_j^{1/4}}\). Similarly \(\mathbb P (|i-\psi _S^{-1}(i^{\prime })|< 2L_j^{9/4})\le \frac{8R}{L_j^{1/4}}\). Using \(k_x,k_y\le k_0R_{j+1}^{+}\), a union bound now yields

for \(L_0\) large enough. It follows that there exists \(s_0\in [0, L_j^{5/2}]\) such that \(|\psi _{s_0}(i)-i^{\prime }|\ge 2L_j^{9/4}, |i-\psi _{s_0}^{-1}(i^{\prime })|\ge 2L_j^{9/4} \forall i\in B, i^{\prime }\in B^{\prime }\).

Setting \(\psi =\psi _{s_0}\) it is now easy to see that for sufficiently large \(L_0\) there exist points \(0=t_0<t_1<\cdots <t_q=n\) in \([0,n]\) satisfying the properties in the statement of the lemma. One way to do this is to choose the \(t_k\)’s at the points \(\frac{l_i+\psi ^{-1}(l^{\prime }_{i^{\prime }})}{2}\) where \(i,i^{\prime }\) are such that there does not exist any point of the set \(B\cup \psi ^{-1}(B^{\prime })\) between \(l_i\) and \(\psi ^{-1}(l^{\prime }_{i^{\prime }})\). That such a choice satisfies properties (1) to (3) listed in the lemma is easy to verify. \(\square \)

Lemma 6.3

Assume the hypotheses of Proposition 6.1. Then there exist partitions \(P_*\) and \(P^{\prime }_*\) of \([n]\) and \([n^{\prime }]\) of equal length (\(=\!z\), say) given by \(P_*=\{0=i_0<i_1<\cdots <i_{z}=n\}\) and \(P^{\prime }_*=\{0=i^{\prime }_0<i^{\prime }_1<\cdots <i^{\prime }_{z}=n^{\prime }\}\) such that if we denote \(B_{P_*}=\{r: B\cap [i_r+1,i_{r+1}]\ne \emptyset \}\) and \(B^{\prime }_{P^{\prime }_*}=\{r: B^{\prime } \cap [i^{\prime }_r+1,i^{\prime }_{r+1}]\ne \emptyset \}\) then all the following properties hold.

-

1.

\((P_*,P^{\prime }_*,B_{P_*}\cup B^{\prime }_{P^{\prime }_*})\) is a marked partition pair.

-

2.

For \(r\notin B_{P_*}\cup B^{\prime }_{P^{\prime }_{*}}\), \((i_{r+1}-i_{r})\wedge (i^{\prime }_{r+1}-i^{\prime }_r)\ge \frac{L_j^{17/8}}{4R}\) and \(\frac{1-2^{-(j+7/5)}}{R} \le \frac{i^{\prime }_{r+1}-i^{\prime }_{r}}{i_{r+1}-i_{r}} \le R(1+2^{-(j+7/5)})\).

-

3.

\(B_{P_*}\cap B^{\prime }_{P^{\prime }_*}=\emptyset \), \(0, z-1\notin B_{P_{*}} \cup B^{\prime }_{P^{\prime }_{*}}\), and \(B_{P_{*}} \cup B^{\prime }_{P^{\prime }_{*}}\) does not contain consecutive integers.

-

4.

If \(l_i\in [i_{r}+1,i_{r+1}]\), then \(|l_i-i_{r}|\wedge |l_i-i_{r+1}|>\frac{1}{2}L_j^{17/8}\). Similarly if \(l^{\prime }_i\in [i^{\prime }_{r}+1,i^{\prime }_{r+1}]\), then \(|l^{\prime }_i-i^{\prime }_{r}|\wedge |l^{\prime }_i-i^{\prime }_{r+1}|>\frac{1}{2}L_j^{17/8}\).

Proof

Choose a map \(\psi \) and partitions \(Q,Q^{\prime }\) as given by Lemma 6.2. Let us fix an interval \([t_{r-1},t_{r}]\), \(1\le r \le q\). We need to consider two cases.

Case 1 \(B_r:=B\cap [t_{r-1},t_{r}]=\{b_1<b_2<\cdots < b_{k_r}\}\ne \emptyset \).

Clearly \(k_r\le k_0R_{j+1}^{+}\). We now define a partition \(P^r=\{\lfloor t_{r-1} \rfloor =i_0^r<i_1^r<\cdots <i_{z_r}^r=\lfloor t_{r} \rfloor \}\) of \([\lfloor t_{r-1} \rfloor +1,\lfloor t_{r}\rfloor ]\) as follows.

-

\(i_1^r=b_1-\lfloor L_j^{17/8}\rfloor \).

-

For \(h\ge 1\), if \([i_{h-1}^r,i_{h}^r]\cap B_r=\emptyset \), then define, \(i_{h+1}^r=\min \{i\ge i_h^r+\lfloor L_j^{17/8}\rfloor : B_r\cap [i-\lfloor L_j^{17/8}\rfloor , i+3\lfloor L_j^{17/8}\rfloor ]=\emptyset \}\).

-

For \(h\ge 1\), if \([i_{h-1}^r,i_{h}^r]\cap B_r \ne \emptyset \), define \(i_{h+1}^r=\min \{i\ge i_h^r+2\lfloor L_j^{17/8}\rfloor : i+\lfloor L_j^{17/8}\rfloor +1\in B_r\}\) or \(\lfloor t_r\rfloor \) if no such \(i\) exists.

Notice that the construction implies that \(i^r_{z_r-1}=b_{k_r}+ \lfloor L_j^{17/8} \rfloor +1\). Also \(i^r_{h+1}-i^r_{h}\ge 2 \lfloor L_j^{17/8} \rfloor \) for all \(h\). Also notice that this implies that alternate blocks of this partition intersect \(B_r\) and hence \(z_r\le 2k_0R_{j+1}^{+}+2\). It also follows that the total length of the blocks intersecting \(B_{r}\) is at most \(8k_0R_{j+1}^{+}L_j^{17/8}\).
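The three rules defining \(P^r\) can be run mechanically. The Python sketch below is a direct transcription of those rules under simplifying assumptions: `lo` and `hi` stand for \(\lfloor t_{r-1}\rfloor \) and \(\lfloor t_r\rfloor \), `w` stands for \(\lfloor L_j^{17/8}\rfloor \), and the points of \(B_r\) are assumed to lie far enough from `lo` and `hi` that every minimum exists inside the interval (the sketch raises an error otherwise). The function name `case1_partition` is hypothetical, and the minimum in the second rule is found by a naive linear scan.

```python
def case1_partition(lo, hi, B_r, w):
    """Partition points i_0 < i_1 < ... < i_z of [lo + 1, hi] built around B_r."""
    B_r = sorted(B_r)
    pts = [lo, B_r[0] - w]  # i_0 and i_1 = b_1 - w
    if pts[1] <= lo:
        raise ValueError("first bad point is too close to the left end")
    while pts[-1] != hi:
        prev, cur = pts[-2], pts[-1]
        if not any(prev <= b <= cur for b in B_r):
            # last block avoids B_r: extend until a window free of B_r is found
            i = cur + w
            while any(i - w <= b <= i + 3 * w for b in B_r):
                i += 1
        else:
            # last block meets B_r: jump to just before the next bad point
            cands = [b - w - 1 for b in B_r if b - w - 1 >= cur + 2 * w]
            i = min(cands) if cands else hi
        if i <= cur or i > hi:
            raise ValueError("bad points violate the spacing assumptions")
        pts.append(i)
    return pts
```

With `lo = 0`, `hi = 200`, `B_r = [50, 54, 120]` and `w = 5`, the sketch returns `[0, 45, 60, 114, 126, 200]`. The two nearby points 50 and 54 fall in the same block, alternate blocks meet \(B_r\), and the second-to-last partition point is \(120+5+1=126\), in line with the observations above.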

Now we construct a corresponding partition \(P^{\prime r}=\{\lfloor \psi (t_{r-1}) \rfloor =i_0^{^{\prime }r}<i_1^{^{\prime }r}<\cdots < i_{z_r}^{^{\prime }r}=\lfloor \psi (t_{r}) \rfloor \}\) of \([\lfloor \psi (t_{r-1}) \rfloor +1,\lfloor \psi (t_{r})\rfloor ]\) as follows.

-

\(i_1^{^{\prime }r}=\lfloor \psi (i_1^{r})\rfloor \).

-