Abstract

We analyze human behavior in crowdsourcing contests using an all-pay auction model, in which all participants exert effort but only the highest bidder receives the reward. We had workers recruited from Amazon Mechanical Turk participate in an all-pay auction, and we contrast the game-theoretic equilibrium with the choices of the human participants. We examine how people competing in the contest learn and adapt their bids, comparing their behavior to well-established online learning algorithms in a novel approach to quantifying the performance of humans as learners. For the crowdsourcing contest designer, our results show that a bimodal distribution of effort should be expected, with some very high effort and some very low effort, and that humans tend to overbid. Our results suggest that humans are weak learners in this setting, so it may be important to educate participants about the strategic implications of crowdsourcing contests.
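For readers unfamiliar with the online learning benchmarks mentioned above, the following is a minimal sketch of one well-known algorithm from the reference list, the multiplicative weights (Hedge) method (Freund & Schapire; Arora, Hazan & Kale), applied to a two-player all-pay auction. It is not necessarily the algorithm or parameterization used in the study: the prize value, the bid grid, the learning rate `eta`, the tie rule (ties split the prize), and the full-information feedback (the learner sees the opponent's bid and can score every possible bid) are all illustrative assumptions, and `HedgeBidder` and `all_pay_utility` are hypothetical names.

```python
import math
import random


def all_pay_utility(my_bid, opp_bid, prize):
    """Two-player all-pay payoff: both pay their bids, the higher bid wins.

    Ties split the prize evenly; this tie rule is an assumption.
    """
    if my_bid > opp_bid:
        return prize - my_bid
    if my_bid < opp_bid:
        return -my_bid
    return prize / 2 - my_bid


class HedgeBidder:
    """Multiplicative-weights (Hedge) learner over a fixed grid of bids."""

    def __init__(self, bids, prize, eta=0.1):
        self.bids = list(bids)
        self.prize = prize
        self.eta = eta                      # learning rate (hypothetical default)
        self.weights = [1.0] * len(self.bids)

    def distribution(self):
        total = sum(self.weights)
        return [w / total for w in self.weights]

    def choose(self, rng):
        # Sample a bid with probability proportional to its weight.
        return rng.choices(self.bids, weights=self.weights, k=1)[0]

    def update(self, opp_bid):
        # Full-information feedback: score every grid bid against the observed
        # opponent bid. Dividing by the prize keeps gains in [-1, 1] when no
        # grid bid exceeds the prize.
        for i, b in enumerate(self.bids):
            gain = all_pay_utility(b, opp_bid, self.prize) / self.prize
            self.weights[i] *= math.exp(self.eta * gain)
        # Renormalize so the weights stay in a safe floating-point range.
        total = sum(self.weights)
        self.weights = [w / total for w in self.weights]


if __name__ == "__main__":
    rng = random.Random(0)
    bidder = HedgeBidder(range(0, 101, 10), prize=100)
    for _ in range(500):
        _my_bid = bidder.choose(rng)   # what the learner would play this round
        bidder.update(opp_bid=30)      # hypothetical opponent who always bids 30
    dist = bidder.distribution()
    print("modal bid:", bidder.bids[dist.index(max(dist))])
```

Against the fixed opponent in the demo, the weight concentrates on 40, the smallest grid bid that beats 30. Against an opponent who bids uniformly over the whole range, every bid has roughly zero expected utility, which is why a learner of this kind has little gradient to follow near the symmetric equilibrium.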

Notes

Empirical results do not always exhibit overbidding. Some studies examining all-pay auctions between two players find no overbidding [16, 32], while studies examining auctions between more than two players find significant overbidding [11, 22] (recent work on this contains a more complete discussion [20]). Further, the degree of overbidding depends on the specifics of the contest and domain [7, 12, 19, 25,26,27].

We also allowed players to play against a specific friend, but such games were not used for the analysis in this paper.

We note that players who follow the Nash equilibrium mixed strategy are extremely unlikely to be labeled as spammers. The symmetric Nash equilibrium mixed strategy chooses a bid uniformly at random over the range, so each individual bid has a very small probability. In particular, for a player who selects bids uniformly at random, the probability of choosing one of the specific flagged bids in over 25% of the games is very low. Our spammer-detection rule is therefore very unlikely to produce a "false positive", that is, to mistakenly label a player who is using the Nash mixed strategy as a spammer.
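The "very low" probability above can be made concrete with a binomial tail. The sketch below assumes integer bids drawn uniformly from 10,000 values (a hypothetical reading of the range, suggested by 9999 appearing among the flagged bids but not restated here), the four flagged bids listed in the appendix, and the appendix's "at least 25%" threshold; the number of games per player varies, so it is left as a parameter, and `prob_flagged_by_chance` is a hypothetical name.

```python
from math import ceil, comb


def prob_flagged_by_chance(n_games, n_flagged=4, n_values=10_000, threshold=0.25):
    """Probability that a bidder drawing uniformly from n_values integer bids
    uses one of n_flagged specific bids in at least `threshold` of n_games.

    The count of flagged bids is Binomial(n_games, n_flagged / n_values),
    so this is the upper tail of that binomial distribution.
    """
    p = n_flagged / n_values            # chance a single uniform bid is flagged
    k_min = ceil(threshold * n_games)   # fewest flagged bids that trigger the rule
    return sum(
        comb(n_games, k) * p**k * (1 - p) ** (n_games - k)
        for k in range(k_min, n_games + 1)
    )


for n in (4, 10, 20, 40):
    print(n, prob_flagged_by_chance(n))
```

Under these assumptions, a uniform bidder who plays 20 games is flagged with probability well below one in a billion; only players with very few games (a handful) have a non-negligible chance of crossing the threshold.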

Notably, such collusive strategies are predicted by a different game-theoretic analysis that treats this as a repeated game, showing another way in which human behavior in crowdsourcing contests differs from idealized mathematical models.

Note that under the uniform distribution over bids, the expected number of distinct bids is 70. We shortly provide two more precise comparisons with the uniform distribution.
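The expected number of distinct bids under uniform play follows from linearity of expectation: each of the R possible values is missed by all n independent draws with probability (1 - 1/R)^n, so the expectation is R(1 - (1 - 1/R)^n). The sketch below computes this and checks it by simulation. It does not attempt to reproduce the figure of 70, because the bid range R and the number of bids n behind that figure are not restated in this note; the function names are hypothetical.

```python
import random


def expected_distinct(n_draws, n_values):
    """Expected number of distinct values among n_draws i.i.d. uniform draws
    from n_values equally likely values."""
    # Sum over values of P(value appears at least once).
    return n_values * (1 - (1 - 1 / n_values) ** n_draws)


def simulated_distinct(n_draws, n_values, trials=2000, seed=0):
    """Monte Carlo estimate of the same quantity, as a sanity check."""
    rng = random.Random(seed)
    total = 0
    for _ in range(trials):
        total += len({rng.randrange(n_values) for _ in range(n_draws)})
    return total / trials


print(expected_distinct(10, 20), simulated_distinct(10, 20))
```

When R is much larger than n, the expectation is close to n itself, so a player with far fewer distinct bids than games is reusing bids much more often than uniform play would.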

A player who lost the auction can also lower their bid in an attempt to reduce the loss (negative utility) they incur, but achieving positive utility requires raising the bid enough to win the auction.
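The asymmetry described above follows directly from the all-pay payoff. In this minimal sketch, the prize of 100, integer bids, and ties splitting the prize are illustrative assumptions, and the function names are hypothetical.

```python
def all_pay_utility(my_bid: int, opp_bid: int, prize: int) -> float:
    """Two-player all-pay payoff: everyone pays their bid and the highest
    bidder takes the prize. Ties split the prize (an assumption)."""
    if my_bid > opp_bid:
        return prize - my_bid
    if my_bid < opp_bid:
        return -my_bid       # a loser's utility is never positive
    return prize / 2 - my_bid


def min_winning_bid(opp_bid: int, step: int = 1) -> int:
    """Smallest bid on an integer grid that strictly beats opp_bid."""
    return opp_bid + step


prize, opp = 100, 60
print(all_pay_utility(40, opp, prize))                    # lost with 40
print(all_pay_utility(10, opp, prize))                    # lowered bid: smaller loss
print(all_pay_utility(min_winning_bid(opp), opp, prize))  # raised bid: positive utility
```

Against an opponent bidding 60, lowering a losing bid from 40 to 10 shrinks the loss from 40 to 10, but no losing bid yields positive utility; only a winning bid below the prize, such as 61, does.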

We also tried using only the bid difference \(d_{t-1} = x^{bid}_{t-1} - y^{bid}_{t-1}\) as a feature (i.e., using the two features \(W_{t-1}\) and \(d_{t-1}\)), which achieves \(R^2 = 0.34\), still lower than the baseline model.
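For concreteness, the following sketch shows how a two-feature linear model of this kind and its \(R^2\) might be computed. Several details are assumptions not stated in this note: the target is taken to be the player's next bid \(x^{bid}_t\), \(W_{t-1}\) is encoded as 1 if the player won round \(t-1\) (strictly higher bid) and 0 otherwise, and the model is ordinary least squares with an intercept. The helper names are hypothetical, and the toy data in the test are synthetic, not the study's data.

```python
def lagged_features(my_bids, opp_bids):
    """Build rows (W_{t-1}, d_{t-1}) and targets x_t for t = 1..T-1.

    W_{t-1} is 1.0 if the player strictly outbid the opponent in round t-1
    and 0.0 otherwise; this encoding of W is an assumption.
    """
    rows, targets = [], []
    for t in range(1, len(my_bids)):
        d_prev = my_bids[t - 1] - opp_bids[t - 1]
        w_prev = 1.0 if d_prev > 0 else 0.0
        rows.append((w_prev, float(d_prev)))
        targets.append(float(my_bids[t]))
    return rows, targets


def fit_ols(rows, y):
    """Ordinary least squares with an intercept, via the normal equations."""
    X = [[1.0, *r] for r in rows]
    k = len(X[0])
    # Augmented matrix [X^T X | X^T y].
    A = [
        [sum(x[i] * x[j] for x in X) for j in range(k)]
        + [sum(x[i] * yi for x, yi in zip(X, y))]
        for i in range(k)
    ]
    # Gauss-Jordan elimination with partial pivoting.
    for col in range(k):
        pivot = max(range(col, k), key=lambda r: abs(A[r][col]))
        A[col], A[pivot] = A[pivot], A[col]
        for r in range(k):
            if r != col:
                f = A[r][col] / A[col][col]
                A[r] = [a - f * b for a, b in zip(A[r], A[col])]
    return [A[i][k] / A[i][i] for i in range(k)]


def r_squared(rows, y, beta):
    """Coefficient of determination: 1 - SS_res / SS_tot."""
    preds = [beta[0] + sum(b * v for b, v in zip(beta[1:], r)) for r in rows]
    mean_y = sum(y) / len(y)
    ss_res = sum((yi - p) ** 2 for yi, p in zip(y, preds))
    ss_tot = sum((yi - mean_y) ** 2 for yi in y)
    return 1 - ss_res / ss_tot
```

Pooling `lagged_features` output across players and passing it to `fit_ols` and `r_squared` gives an in-sample \(R^2\) comparable in spirit to the figure quoted above, though the study's exact specification and baseline are not reproduced here.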

References

Amann, E., & Leininger, W. (1996). Asymmetric all-pay auctions with incomplete information: The two-player case. Games and Economic Behavior, 14(1), 1–18.

Arad, A., & Rubinstein, A. (2010). Colonel Blotto's top secret files. Levine's Working Paper Archive 814577000000000432, David K. Levine.

Arad, A., & Rubinstein, A. (2012). The 11–20 money request game: A level-k reasoning study. American Economic Review, 102(7), 3561–73.

Arora, S., Hazan, E., & Kale, S. (2012). The multiplicative weights update method: A meta-algorithm and applications. Theory of Computing, 8(1), 121–164.

Baye, M., Kovenock, D., & de Vries, C. G. (1996). The all-pay auction with complete information. Economic Theory, 8, 291–305.

Bennett, J., & Lanning, S. (2007). The Netflix prize. In KDD Cup and Workshop in conjunction with KDD (Vol. 2007, p. 35).

Burkart, M. (1995). Initial shareholdings and overbidding in takeover contests. The Journal of Finance, 50(5), 1491–1515.

Byers, J. W., Mitzenmacher, M., & Zervas, G. (2010). Information asymmetries in pay-per-bid auctions: How Swoopo makes bank. CoRR, abs/1001.0592.

Camerer, C. F. (2011). Behavioral game theory: Experiments in strategic interaction. Princeton: Princeton University Press.

Chowdhury, S. M., Kovenock, D., & Sheremeta, R. M. (2013). An experimental investigation of Colonel Blotto games. Economic Theory, 52(3), 833–861.

Davis, D. D., & Reilly, R. J. (1998). Do too many cooks always spoil the stew? An experimental analysis of rent-seeking and the role of a strategic buyer. Public Choice, 95(1–2), 89–115.

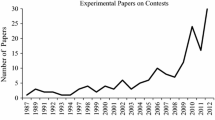

Dechenaux, E., Kovenock, D., & Sheremeta, R. M. (2015). A survey of experimental research on contests, all-pay auctions and tournaments. Experimental Economics, 18(4), 609–669.

DiPalantino, D., & Vojnovic, M. (2009). Crowdsourcing and all-pay auctions. In Proceedings of the 10th ACM conference on Electronic commerce, EC ’09 (pp. 119–128). New York: ACM.

Douceur, J. R. (2002). The Sybil attack. In Revised Papers from the First International Workshop on Peer-to-Peer Systems, IPTPS ’01 (pp. 251–260). London: Springer-Verlag.

Erev, I., & Roth, A. E. (1998). Predicting how people play games: Reinforcement learning. American Economic Review, 88, 848–881.

Ernst, C., & Thöni, C. (2013). Bimodal bidding in experimental all-pay auctions. Technical report.

Fallucchi, F., Renner, E., & Sefton, M. (2013). Information feedback and contest structure in rent-seeking games. European Economic Review, 64, 223–240.

Freund, Y., & Schapire, R. E. (1997). A decision-theoretic generalization of on-line learning and an application to boosting. Journal of Computer and System Sciences, 55(1), 119–139.

Gao, X. A., Bachrach, Y., Key, P., & Graepel, T. (2012). Quality expectation-variance tradeoffs in crowdsourcing contests. In AAAI (pp. 38–44).

Gelder, A., Kovenock, D., & Sheremeta, R. M. (2015). Behavior in all-pay auctions with ties.

Ghosh, A., & McAfee, P. (2012). Crowdsourcing with endogenous entry. In Proceedings of the 21st international conference on World Wide Web WWW ’12 (pp. 999–1008). New York: ACM.

Gneezy, U., & Smorodinsky, R. (2006). All-pay auctions: An experimental study. Journal of Economic Behavior & Organization, 61(2), 255–275.

Kohli, P., Kearns, M., Bachrach, Y., Herbrich, R., Stillwell, D., & Graepel, T. (2012). Colonel Blotto on Facebook: The effect of social relations on strategic interaction. In WebSci (pp. 141–150).

Krishna, V., & Morgan, J. (1997). An analysis of the war of attrition and the all-pay auction. Journal of Economic Theory, 72(2), 343–362.

Lev, O., Polukarov, M., Bachrach, Y., & Rosenschein, J. S. (2013). Mergers and collusion in all-pay auctions and crowdsourcing contests. In The twelfth international joint conference on autonomous agents and multiagent systems (AAMAS 2013). Saint Paul, Minnesota.

Lewenberg, Y., Lev, O., Bachrach, Y., & Rosenschein, J. S. (2013). Agent failures in all-pay auctions. In Proceedings of the twenty-third international joint conference on artificial intelligence (pp. 241–247). AAAI Press.

Lewenberg, Y., Lev, O., Bachrach, Y., & Rosenschein, J. S. (2017). Agent failures in all-pay auctions. IEEE Intelligent Systems, 32(1), 8–16.

Liu, T. X., Yang, J., Adamic, L. A., & Chen, Y. (2011). Crowdsourcing with all-pay auctions: A field experiment on Taskcn. Proceedings of the American Society for Information Science and Technology, 48(1), 1–4.

Lugovskyy, V., Puzzello, D., & Tucker, S. (2010). An experimental investigation of overdissipation in the all pay auction. European Economic Review, 54(8), 974–997.

Mago, S. D., Samak, A. C., & Sheremeta, R. M. (2016). Facing your opponents: Social identification and information feedback in contests. Journal of Conflict Resolution, 60(3), 459–481.

Mash, M., Bachrach, Y., & Zick, Y. (2017). How to form winning coalitions in mixed human-computer settings. In Proceedings of the 26th international joint conference on artificial intelligence (IJCAI) (pp. 465–471).

Potters, J., de Vries, C. G., & van Winden, F. (1998). An experimental examination of rational rent-seeking. European Journal of Political Economy, 14(4), 783–800.

Price, C. R., & Sheremeta, R. M. (2011). Endowment effects in contests. Economics Letters, 111(3), 217–219.

Price, C. R., & Sheremeta, R. M. (2015). Endowment origin, demographic effects, and individual preferences in contests. Journal of Economics & Management Strategy, 24(3), 597–619.

Sheremeta, R. M., & Zhang, J. (2010). Can groups solve the problem of over-bidding in contests? Social Choice and Welfare, 35(2), 175–197.

Stahl, D. O., & Wilson, P. W. (1995). On players' models of other players: Theory and experimental evidence. Games and Economic Behavior, 10(1), 218–254.

Tang, J. C., Cebrian, M., Giacobe, N. A., Kim, H.-W., Kim, T., & Wickert, D. B. (2011). Reflecting on the DARPA Red Balloon Challenge. Communications of the ACM, 54(4), 78–85.

Vojnovic, M. (2015). Contest theory. Cambridge: Cambridge University Press.

Wang, Z., & Xu, M. (2012). Learning and strategic sophistication in games: The case of penny auctions on the internet. Working paper.

Yang, J., Adamic, L. A., & Ackerman, M. S. (2008). Crowdsourcing and knowledge sharing: Strategic user behavior on Taskcn. In Proceedings of the 9th ACM conference on electronic commerce, EC ’08 (pp. 246–255). New York: ACM.

Young, H. P. (2004). Strategic learning and its limits. Oxford: Oxford University Press.

Additional information

Joel Oren: The project was completed while all authors were employed by Microsoft Research, Cambridge, UK.

Appendix: Including spammers: revised results

In this appendix section, we revisit our findings without excluding the so-called spammers (the 85 players for whom at least 25% of bids were taken from the set \(\{0, 1, 1000, 9999\}\)). Recall that in total, 340 players played a total of 11,327 games. The average revenue over all games was 11,832 (previously 13,730).
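The focal-bid rule above is simple enough to state as code. This sketch uses the flagged set and the 25% threshold from the text; it takes per-game revenue to be the sum of both players' bids (every bid is paid in an all-pay auction), which is an assumption about how revenue is defined here. The separate filtering of fake profiles is not modeled, and all function names are hypothetical.

```python
FOCAL_BIDS = frozenset({0, 1, 1000, 9999})


def is_spammer(bids, focal=FOCAL_BIDS, threshold=0.25):
    """True if at least `threshold` of a player's bids come from the focal set."""
    if not bids:
        return False
    share = sum(b in focal for b in bids) / len(bids)
    return share >= threshold


def average_revenue(games):
    """Mean revenue per game, taking revenue as the sum of both players' bids;
    this definition of revenue is an assumption."""
    games = list(games)
    return sum(a + b for a, b in games) / len(games)


def split_players(bids_by_player):
    """Partition player ids into (kept, flagged) under the focal-bid rule."""
    kept, flagged = [], []
    for player, bids in bids_by_player.items():
        (flagged if is_spammer(bids) else kept).append(player)
    return kept, flagged
```

Comparing `average_revenue` over all games with the same quantity over games between kept players is one way to produce a with/without-spammers comparison like the one reported above.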

Figure 7 shows the average player bid distribution, which is quite close to that in the original analysis (though, of course, it includes more "spam" bids).

Figure 8 shows the average bid per time period. Again, the results are similar to those obtained without spammers, although, unsurprisingly, more players do not adjust their bid when spammers are included, since spammers are by definition players who use the same bids frequently.

Figures 9 and 10 show key clusters when including spammers in the clustering analysis. Again, the results are qualitatively similar to the original analysis.

Figure 11 shows the average utility of each bid against the empirical bid distribution. The figure is very similar to that in the original analysis. In other words, although best responses to the "spam" bids do well against bids taken only from spammers, the overall best responses (against the general population of all players, spammers and non-spammers alike) are very similar to those found in the original analysis. Figure 12 shows the utility distribution of players, which is again very similar to the distribution found in our original analysis.
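The quantity plotted in Figure 11, the average utility of a bid against the empirical bid distribution, can be sketched as follows. The prize value, the candidate bid grid, and the tie rule (ties split the prize) are illustrative assumptions not restated in this appendix, and the helper names are hypothetical.

```python
def expected_utility(bid, opp_bids, prize):
    """Average two-player all-pay utility of `bid` against an empirical sample
    of opponent bids. Ties split the prize (an assumption)."""
    total = 0.0
    for o in opp_bids:
        if bid > o:
            total += prize - bid
        elif bid < o:
            total -= bid
        else:
            total += prize / 2 - bid
    return total / len(opp_bids)


def best_responses(candidates, opp_bids, prize):
    """Return (sorted list of utility-maximizing bids, their utility)."""
    scores = {b: expected_utility(b, opp_bids, prize) for b in candidates}
    best = max(scores.values())
    return sorted(b for b, s in scores.items() if s == best), best


# Toy sample with prize 100: 40% of opponents bid 0, a stand-in for focal "spam" bids.
print(best_responses(range(0, 101), [0, 0, 50, 50, 90], prize=100))
```

In the toy sample the best response is to bid 1, just above the focal bid of 0, which illustrates why responses tailored to spam bids do well against spammers alone; with the full population of bids, the utility curve reflects spammers and non-spammers together.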

To conclude, repeating the analysis of the main paper without filtering out players who frequently use the same "focal bids" (while still filtering out users with fake profiles) does not yield significantly different results. This indicates that our results are relatively robust to our choice of mechanism for eliminating spammers.

About this article

Cite this article

Bachrach, Y., Kash, I.A., Key, P. et al. Strategic behavior and learning in all-pay auctions: an empirical study using crowdsourced data. Auton Agent Multi-Agent Syst 33, 192–215 (2019). https://doi.org/10.1007/s10458-019-09402-4

DOI: https://doi.org/10.1007/s10458-019-09402-4