Abstract

Despite intensive debates regarding action imitation and sentence imitation, few studies have examined their relationship. In this paper, we argue that the mechanism of action imitation is necessary and in some cases sufficient to describe sentence imitation. We first develop a framework for action imitation in which key ideas of Hurley’s shared circuits model are integrated with Wolpert et al.’s motor selection mechanism and its extensions. We then explain how this action-based framework clarifies sentence imitation without a language-specific faculty. Finally, we discuss the empirical support for and philosophical significance of this perspective.

Notes

Human intention is not flat but multi-layered. According to Hurley (2001), it can be roughly divided into nonbasic intention (the goal of an actor) and basic intention (desired means to achieve that goal).

Low-level controllers generate the commands needed to perform an action similar to the observed one. If the paired predictions match the actual subsequent states, these commands represent appropriately segmented movements of the observed action (Wolpert et al. 2003).

To identify the actor’s desired states \(X_{t}^{*}\), the observer’s highest level needs to issue predictive states \(\hat{X}_{t}\) that have the least mismatch with the actual states \(X_{t}\). This final prediction’s paired command \(U_{t}\) can be described by Eq. (5) in Appendix “The Hierarchy of Models”.

In the debate regarding what mirror neurons mirror, there are three main hypotheses (Oztop et al. 2005): mirror neurons encode (i) the detailed low-level motor parameters of the observed action; (ii) the higher-level motor plan; or (iii) the actor’s intention. In this paper, we presuppose view (iii).

Hickok and Poeppel (2004) show that speech perception in the brain is realized in two processing streams: the dorsal stream maps sound onto articulation-based representations, and the ventral stream maps sound onto meaning; the two streams interface in the posterior region of the middle temporal gyrus.

For simplicity, we consider only auditory input, although a hearer’s visual perception of a speaker’s lip movements can affect how the speaker’s sounds are perceived (e.g., the McGurk effect). This restriction does not imply that the framework cannot be applied to the visual processing of written sentences.

Because the hearer’s perception of the number of syllables in a word is also determined by the sequential arrangement of phonemes (Mannell et al. 2014), the low level needs to check its output against sequence processing at a higher level.

To describe this process computationally, the framework activates low-level controllers 1, 2, 3,…, n and generates commands \(u_{t}^{1},u_{t}^{2},u_{t}^{3}, \ldots,u_{t}^{n}\). Each efference copy of a motor command is sent to its paired predictor to generate a prediction \(\hat{x}_{t + 1}^{i} = \varPhi \left({w_{t}^{i}, x_{t},u_{t}} \right)\). Each prediction is then compared with the sensory input to generate the responsibility signal \(\lambda_{t}^{i} = \frac{e^{-\left| x_{t} - \hat{x}_{t}^{i} \right|^{2} / \sigma^{2}}}{\sum_{j = 1}^{n} e^{-\left| x_{t} - \hat{x}_{t}^{j} \right|^{2} / \sigma^{2}}}\). Each signal helps a controller revise its motor command, and the final command of the entire framework is generated through \(u_{t} = \sum\nolimits_{i = 1}^{n} {\lambda_{t}^{i} u_{t}^{i}}\). This final motor command is a properly segmented constituent (usually a word or free morpheme) of the utterance.
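The responsibility computation and the responsibility-weighted command in this note can be sketched in Python. This is a minimal illustration under simplifying assumptions: states and commands are plain lists of floats, the predictions and controller commands are given rather than produced by trained approximators \(\varPhi\) and \(\psi\), and the function names `responsibilities` and `blended_command` are hypothetical.

```python
import math


def responsibilities(x_actual, predictions, sigma):
    """Softmax over negative squared prediction errors, one value per predictor."""
    sq_errors = [sum((a - p) ** 2 for a, p in zip(x_actual, pred)) for pred in predictions]
    # Shifting by the smallest error cancels in the normalization but avoids underflow.
    e_min = min(sq_errors)
    weights = [math.exp(-(e - e_min) / sigma ** 2) for e in sq_errors]
    total = sum(weights)
    return [w / total for w in weights]


def blended_command(lambdas, commands):
    """Responsibility-weighted sum u_t = sum_i lambda_t^i * u_t^i, element-wise."""
    dim = len(commands[0])
    return [sum(lam * cmd[k] for lam, cmd in zip(lambdas, commands)) for k in range(dim)]


# Hypothetical example: three predictors, a 2-D state, and a 1-D command.
x_t = [1.0, 0.0]
predictions = [[1.0, 0.0], [0.0, 1.0], [2.0, 0.0]]
commands = [[1.0], [0.0], [0.5]]
lam = responsibilities(x_t, predictions, sigma=1.0)
u_t = blended_command(lam, commands)
```

Subtracting the smallest squared error before exponentiating is a standard numerical safeguard; it cancels in the normalization and leaves every \(\lambda_{t}^{i}\) unchanged. The predictor whose output matches \(x_{t}\) exactly receives the largest share of the final command.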

Recursive processing is required for constituency and is presupposed by the framework.

Based on Eq. (2) in Appendix section “A Combined Forward and Inverse Model”, we may define a gradient learning rule for each controller, in which the command error \(\left({u_{t}^{*} - u_{t}^{i}} \right)\) between the desired command \(u_{t}^{*}\) and the controller’s own command can be approximated by the feedback command \(u_{fb}\):

\[\Delta \alpha_{t}^{i} = \epsilon \lambda_{t}^{i} \frac{d\psi_{i}}{d\alpha_{t}^{i}} \left(u_{t}^{*} - u_{t}^{i}\right) = \epsilon \frac{du_{t}^{i}}{d\alpha_{t}^{i}} \lambda_{t}^{i} \left(u_{t}^{*} - u_{t}^{i}\right) \cong \epsilon \frac{du_{t}^{i}}{d\alpha_{t}^{i}} \lambda_{t}^{i} u_{fb}\]
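The learning rule can be illustrated with a hypothetical linear controller \(u_{t}^{i} = \alpha_{t}^{i} \cdot x_{t + 1}^{*}\), for which \(du_{t}^{i}/d\alpha_{t}^{i} = x_{t + 1}^{*}\). The sketch also assumes a unit-gain feedback controller whose output \(u_{fb}\) equals the remaining command error; the names and numbers are illustrative only, not the paper’s implementation.

```python
def controller_command(alpha, x_desired):
    """Hypothetical linear controller: u^i = psi(alpha^i, x*) = alpha^i . x*."""
    return sum(a * xd for a, xd in zip(alpha, x_desired))


def update_controller(alpha, x_desired, lam, u_fb, eps):
    """One step of Delta alpha = eps * (du/dalpha) * lambda * u_fb.

    For the linear controller above, du/dalpha = x_desired.
    """
    return [a + eps * lam * u_fb * xd for a, xd in zip(alpha, x_desired)]


def learn(u_star, x_desired, lam=1.0, eps=0.5, steps=50):
    """Repeat the update, assuming the feedback controller outputs the full
    command error u_fb = u_star - u (a unit-gain assumption for illustration)."""
    alpha = [0.0] * len(x_desired)
    for _ in range(steps):
        u_fb = u_star - controller_command(alpha, x_desired)
        alpha = update_controller(alpha, x_desired, lam, u_fb, eps)
    return alpha


alpha = learn(u_star=2.0, x_desired=[1.0, 0.5])
```

With these values each step shrinks the command error by a factor of \(1 - \epsilon \lambda_{t}^{i} \lVert x_{t + 1}^{*} \rVert^{2} = 0.375\); a learning rate large enough to push that factor’s magnitude above 1 would make the updates diverge.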

However, when a fluent speaker intentionally utters a sentence, not every word is necessarily consciously selected or explicitly intended. This does not prevent the framework from using the mechanism to understand the speaker’s words, because the words are linked to what the speaker would likely intend if he or she were aware of the word selection.

References

Austin, J. L. (1962). How to do things with words. Cambridge, MA: Harvard University Press.

Barrett, H. C., & Kurzban, R. (2006). Modularity in cognition: Framing the debate. Psychological Review, 113, 628–647.

Boza, A. S., Guerra, R. H., & Gajate, A. (2011). Artificial cognitive control system based on the shared circuits model of sociocognitive capacities. A first approach. Engineering Applications of Artificial Intelligence, 24(2), 209–219.

Brass, M., Schmitt, R. M., Spengler, S., & Gergely, G. (2007). Investigating action understanding: Inferential processes versus action simulation. Current Biology, 17, 2117–2121.

Brooks, R. A. (1999). Cambrian intelligence: The early history of the New AI. Cambridge, MA: The MIT Press.

Byrne, R. W. (2006). Parsing behaviour. A mundane origin for an extraordinary ability? In N. Enfield & S. Levinson (Eds.), The roots of human sociality (pp. 478–505). New York, NY: Berg.

Cappelen, H., & Lepore, E. (2005). Insensitive semantics: A defense of semantic minimalism and speech act pluralism. Oxford: Blackwell.

Carruthers, P. (2006). The architecture of the mind. Oxford: Oxford University Press.

Cass, H., Reilly, S., Owen, L., Wisbeach, A., Weekes, L., Slonims, V., & Charman, T. (2003). Findings from a multidisciplinary clinical case series of females with Rett syndrome. Developmental Medicine and Child Neurology, 45(5), 325–337.

Clark, A., & Lappin, S. (2010). Linguistic nativism and the poverty of the stimulus. Oxford: Wiley Blackwell.

Cosmides, L., & Tooby, J. (1992). Cognitive adaptations for social exchange. In J. Barkow, L. Cosmides, & J. Tooby (Eds.), The adapted mind: Evolutionary psychology and the generation of culture. New York, NY: Oxford University Press.

Dever, J. (2012). Compositionality. In G. Russell & D. Graff Fara (Eds.), The Routledge handbook to the philosophy of language (pp. 91–102).

Evans, N., & Levinson, S. C. (2009). The myth of language universals: Language diversity and its importance for cognitive science. Behavioral and Brain Sciences, 32(5), 429–492.

Fodor, J. (2008). LOT 2: The language of thought revisited. Oxford: Oxford University Press.

Garrod, S., Gambi, C., & Pickering, M. J. (2014). Prediction at all levels: forward model predictions can enhance comprehension. Language, Cognition and Neuroscience, 29(1), 46–48.

Garrod, S., & Pickering, M. J. (2008). Shared circuits in language and communication. Behavioral and Brain Sciences, 31(1), 26–27.

Glenberg, A. M., & Gallese, V. (2012). Action-based language: A theory of language acquisition, comprehension, and production. Cortex, 48, 905–922.

Graf Estes, K., Evans, J. L., Alibali, M. W., & Saffran, J. R. (2007). Can infants map meaning to newly segmented words? Statistical segmentation and word learning. Psychological Science, 18, 254–260.

Greenberg, A., Bellana, B., & Bialystok, E. (2013). Perspective-taking ability in bilingual children: Extending advantages in executive control to spatial reasoning. Cognitive Development, 28(1), 41–50.

Grice, H. P. (1975). Logic and conversation. In P. Cole & J. L. Morgan (Eds.), Syntax and semantics 3: Speech acts (pp. 41–58). New York, NY: Academic Press.

Guenther, F. H., & Vladusich, T. (2012). A neural theory of speech acquisition and production. Journal of Neurolinguistics, 25(5), 408–422.

Hamilton, A. F. (2013). The mirror neuron system contributes to social responding. Cortex, 49(10), 2957–2959.

Haruno, M., Wolpert, D. M., & Kawato, M. (2003). Hierarchical MOSAIC for movement generation. International Congress Series, 1250, 575–590.

Hickok, G. (2014). The architecture of speech production and the role of the phoneme in speech processing. Language, Cognition and Neuroscience, 29(1), 2–20.

Hickok, G., & Poeppel, D. (2004). Dorsal and ventral streams: A framework for understanding aspects of the functional anatomy of language. Cognition, 92(1–2), 67–99.

Hung, T.-W. (Ed.). (2014). What action comprehension tells us about meaning interpretation. Communicative action: selected papers of the 2013 IEAS conference on language and action. Singapore: Springer.

Hurley, S. (2001). Perception and action: Alternative views. Synthese, 129, 3–40.

Hurley, S. (2008). The shared circuits model (SCM): How control, mirroring, and simulation can enable imitation, deliberation, and mind reading. Behavioral and Brain Sciences, 31(1), 1–22.

Kiverstein, J., & Clark, A. (2008). Bootstrapping the mind. Behavioral and Brain Sciences, 31(1), 41–52.

Kovács, Á. M. (2009). Early bilingualism enhances mechanisms of false-belief reasoning. Developmental Science, 12(1), 48–54.

Kovács, Á. M., & Mehler, J. (2009). Cognitive gains in 7-month-old bilingual infants. Proceedings of the National Academy of Sciences, 106, 6556–6560.

Mannell, R., Cox, F., & Harrington, J. (2014). An introduction to phonetics and phonology. Macquarie University. Retrieved 26 Sep 2015 from http://clas.mq.edu.au/speech/phonetics/index.html.

Marr, D. (1982). Vision. San Francisco, CA: W.H. Freeman.

Miller, J. F. (1973). Sentence imitation in pre-school children. Language and Speech, 16(1), 1–14.

Nelson, K. E., Carskaddon, G., & Bonvillian, J. D. (1973). Syntax acquisition: Impact of experimental variation in adult verbal interaction with the child. Child Development, 44(3), 497–504.

Over, H., & Gattis, M. (2010). Verbal imitation is based on intention understanding. Cognitive Development, 25(1), 46–55.

Oztop, E., Wolpert, D., & Kawato, M. (2005). Mental state inference using visual control parameters. Cognitive Brain Research, 22(2), 129–151.

Pickering, M. J., & Garrod, S. (2013). An integrated theory of language production and comprehension. Behavioral and Brain Sciences, 36(4), 329–347.

Pinker, S. (1994). The language instinct: How the mind creates language. New York, NY: Harper Collins.

Prior, M., & Ozonoff, S. (2007). Psychological factors in autism. In F. R. Volkmar (Ed.), Autism and pervasive developmental disorders (pp. 69–128). New York, NY: Cambridge University Press.

Pulvermüller, F., & Fadiga, L. (2010). Active perception: Sensorimotor circuits as a cortical basis for language. Nature Reviews Neuroscience, 11, 351–360.

Ratner, N. B., & Sih, C. C. (1987). Effects of gradual increases in sentence length and complexity on children’s dysfluency. Journal of Speech and Hearing Disorders, 52(3), 278–287.

Seeff-Gabriel, B., Chiat, S., & Dodd, B. (2010). Sentence imitation as a tool in identifying expressive morphosyntactic difficulties in children with severe speech difficulties. International Journal of Language and Communication Disorders, 45(6), 691–702.

Silverman, S. W., & Ratner, N. B. (1997). Syntactic complexity, fluency, and accuracy of sentence imitation in adolescents. Journal of Speech, Language, and Hearing Research, 40(1), 95–106.

Sperber, D. (2002). In defense of massive modularity. In E. Dupoux (Ed.), Language, brain and cognitive development: Essays in honor of Jacques Mehler. Cambridge, MA: MIT Press.

Thompson, S. P., & Newport, E. L. (2007). Statistical learning of syntax: The role of transitional probability. Language Learning and Development, 3(1), 1–42.

Tincoff, R., & Jusczyk, P. W. (1999). Some beginnings of word comprehension in 6-month-olds. Psychological Science, 10(2), 172–175.

Tourville, J. A., & Guenther, F. H. (2011). The DIVA model: A neural theory of speech acquisition and production. Language and Cognitive Processes, 26(7), 952–981.

Verhoeven, L., Steenge, J., van Weerdenburg, M., & van Balkom, H. (2011). Assessment of second language proficiency in bilingual children with specific language impairment: A clinical perspective. Research in Developmental Disabilities, 32(5), 1798–1807.

Willatts, P. (1999). Development of means–end behavior in young infants: Pulling a support to retrieve a distant object. Developmental Psychology, 35(3), 651–667.

Wolpert, D., Doya, K., & Kawato, M. (2003). A unifying computational framework for motor control and social interaction. Philosophical Transactions of the Royal Society of London B, 358(1431), 593–602.

Woodyatt, G., & Ozanne, A. (1992). Communication abilities and Rett syndrome. Journal of Autism and Developmental Disorders, 22(2), 155–173.

Acknowledgments

This research was sponsored in part by the Ministry of Science and Technology, Taiwan under Grant No. 101-2410-H-001-100-MY2.

Appendix

The Convertor

The convertor receives the actor’s intention \(d_{t}\) and the actual state \(x_{t}\) at time t, and it outputs the desired state \(x_{t + 1}^{*}\) for time t + 1. We use \(p_{t} = f\left(x_{t}, d_{t}\right)\) and \(x_{t + 1}^{*} = g\left(d_{t}, p_{t}\right)\) to describe, respectively, the parameter generated by motor control processing and the desired motor state generated by motor planning, where f and g stand in an inverse relationship, so that \(x_{t + 1}^{*} = g\left({d_{t},f\left({x_{t},d_{t}} \right)} \right).\)
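To make the composition \(g\left(d_{t}, f\left(x_{t}, d_{t}\right)\right)\) concrete, here is a toy sketch in which states and intentions are vectors, f returns the displacement from the current state to the intended goal, and g plans a step a fraction k of the way toward the goal. These particular choices of f, g, and k are hypothetical; the paper does not specify them.

```python
def f(x_t, d_t):
    """Hypothetical control parameter p_t: displacement from the current state to the goal."""
    return [d - x for x, d in zip(x_t, d_t)]


def g(d_t, p_t, k=0.5):
    """Hypothetical planner: step a fraction k of the remaining displacement.

    Since p_t = d_t - x_t, the result d_t - (1 - k) * p_t equals x_t + k * (d_t - x_t).
    With k = 0 it recovers x_t exactly, i.e. g undoes f.
    """
    return [d - (1 - k) * p for d, p in zip(d_t, p_t)]


def convertor(x_t, d_t, k=0.5):
    """Desired next state x*_{t+1} = g(d_t, f(x_t, d_t))."""
    return g(d_t, f(x_t, d_t), k)


x_next_desired = convertor([0.0, 0.0], [1.0, 2.0])  # halfway to the goal
```

Setting k = 0 exposes the inverse relationship (the composition returns \(x_{t}\)), while k = 1 jumps straight to the intended goal \(d_{t}\).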

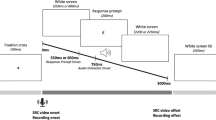

A Combined Forward and Inverse Model

Suppose that each model activates multiple predictors 1, 2, 3,…, n at time t and selects among their next-state predictions \(\hat{x}_{t + 1}^{1},\hat{x}_{t + 1}^{2},\hat{x}_{t + 1}^{3}, \ldots,\hat{x}_{t + 1}^{n}\) through testing (see Fig. 3). Each predictor receives the actual feedback \(x_{t}\) and the efference copy of the motor command \(u_{t}\) to generate a prediction. The prediction of the i-th predictor is \(\hat{x}_{t + 1}^{i} = \varPhi \left({w_{t}^{i}, x_{t},u_{t}} \right)\), where \(w_{t}^{i}\) represents the parameters of the function approximator \(\varPhi\). This predicted next state is compared with the actual next state, and the prediction error is sent to a responsibility estimator, which converts it into the responsibility signal \(\lambda_{t}^{i}\) via the softmax activation function:

\[\lambda_{t}^{i} = \frac{e^{-\left| x_{t} - \hat{x}_{t}^{i} \right|^{2} / \sigma^{2}}}{\sum_{j = 1}^{n} e^{-\left| x_{t} - \hat{x}_{t}^{j} \right|^{2} / \sigma^{2}}} \quad (1)\]

In Eq. (1), \(x_{t}\) is the framework’s actual voice output, and \(\sigma\) is a scaling constant. The softmax function converts the prediction errors into responsibilities between 0 and 1 that sum to 1, so predictors with smaller errors receive higher responsibilities. Responsibility signals can therefore regulate predictor learning in a competitive manner. Moreover, each predictor has a paired controller, which receives the desired next state \(x_{t + 1}^{*}\) and outputs motor commands. Suppose that the framework activates controllers 1, 2, 3,…, n and generates \(u_{t}^{1},u_{t}^{2},u_{t}^{3}, \ldots, u_{t}^{n}\). The motor command of the i-th controller is \(u_{t}^{i} = \psi \left({\alpha_{t}^{i}, x_{t + 1}^{*}} \right)\), where \(\alpha_{t}^{i}\) is the parameter of a function approximator \(\psi\). The responsibility-weighted summation of the motor commands generated by controllers 1, 2, 3,…, n is represented by Eq. (2):

\[u_{t} = \sum_{i = 1}^{n} \lambda_{t}^{i} u_{t}^{i} \quad (2)\]
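The claim that responsibility signals regulate predictor learning competitively can be illustrated by scaling each predictor’s gradient step by its responsibility. The sketch assumes a scalar state, a hypothetical linear predictor \(\varPhi(w, x, u) = w_{0} x + w_{1} u\), and squared-error gradient descent; it illustrates the idea and is not the paper’s learning rule.

```python
import math


def predict(w, x, u):
    """Hypothetical linear predictor Phi(w, x, u) = w[0] * x + w[1] * u (scalar state and command)."""
    return w[0] * x + w[1] * u


def learn_step(weights, x, u, x_next, sigma=1.0, eps=0.1):
    """Score each predictor's prediction of x_next with a softmax responsibility,
    then take a gradient step on squared error scaled by that responsibility."""
    preds = [predict(w, x, u) for w in weights]
    errs = [(x_next - p) ** 2 for p in preds]
    e_min = min(errs)
    raw = [math.exp(-(e - e_min) / sigma ** 2) for e in errs]
    total = sum(raw)
    lams = [r / total for r in raw]
    new_weights = []
    for w, p, lam in zip(weights, preds, lams):
        err = x_next - p
        # For the linear predictor, dPhi/dw = (x, u).
        new_weights.append([w[0] + eps * lam * err * x, w[1] + eps * lam * err * u])
    return new_weights, lams


# Predictor 0 already models x_next = x + u; predictor 1 is untrained.
new_w, lams = learn_step([[1.0, 1.0], [0.0, 0.0]], x=1.0, u=1.0, x_next=2.0)
```

Here the accurate predictor takes almost all of the responsibility (and, having zero error, is left unchanged), while the poor predictor’s update is damped by its small responsibility. Over many samples this gating lets different predictors specialize in different contexts.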

The Hierarchy of Models

For simplicity, we describe only a two-level hierarchy, although the scheme extends to an arbitrary number of levels. Suppose that the predictor of the i-th higher-level model receives the actual state \(X_{t}\) and the efference copy of \(U_{t}\) at time t, and that it outputs the approximate prediction \(\hat{X}_{t + 1}^{i}\) without activating subordinate controllers:

In Eq. (3), \(\varPhi\) refers to a vector-valued, nonlinear function approximator; \(W_{t}^{i}\) is the synaptic weight of the i-th higher-level pair; \(X_{t}\) is the current state (a vector of posterior probabilities); \(U_{t}\) is the higher-level command; and \(P\left({j|W_{t}^{i},X_{t},U_{t}} \right)\) refers to the posterior probability that the j-th pair is selected given \(W_{t}^{i},X_{t},{\text{and}}\;U_{t}\). Likewise, the i-th higher-level prediction \(\hat{X}_{t + 1}^{i}\) is compared with the actual state \(X_{t}\) from the subordinate level to generate the higher-level responsibility (i.e., prior probability) \(\lambda_{i}^{H} \left(t \right)\) via the estimator

\(\lambda_{i}^{H} \left(t \right)\) can regulate the subordinate level in a competitive manner. Moreover, each higher-level predictor has a paired controller that receives as input the desired next state \(X_{t + 1}^{*}\) and the current state \(X_{t}\) from the subordinate level. \(X_{t + 1}^{*}\) is an abstract representation that determines the selection and activation order of subordinate controllers. Each higher-level controller sends commands to the subordinate level; the command of the i-th higher-level controller is \(U_{t}^{i} = \varPsi \left({\varLambda_{t}^{i}, X_{t + 1}^{*},X_{t}} \right)\), where \(\varLambda_{t}^{i}\) is the parameter of a function approximator \(\varPsi\). The overall command \(U_{t}\), which specifies prior probabilities for the lowest-level pairs, is then the sum of these commands weighted by \(\lambda_{i}^{H} \left(t \right)\):

\[U_{t} = \sum_{i = 1}^{n} \lambda_{i}^{H} \left(t \right) U_{t}^{i} \quad (5)\]
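The weighting of higher-level commands can be sketched as follows. Each hypothetical higher-level command \(U_{t}^{i}\) is represented as a probability vector over the lower-level pairs, and \(U_{t}\) is their responsibility-weighted sum. The second function, which combines this prior multiplicatively with lower-level prediction errors, is an added assumption about how the prior regulates the subordinate level; the text does not give that step.

```python
import math


def mix_priors(lambda_high, high_commands):
    """U_t = sum_i lambda_i^H(t) * U_t^i; each U_t^i is a prior over the lower-level pairs."""
    n_low = len(high_commands[0])
    return [sum(lam * U[j] for lam, U in zip(lambda_high, high_commands)) for j in range(n_low)]


def lower_responsibilities(prior, sq_errors, sigma=1.0):
    """Assumed combination: lower-level responsibility proportional to prior * exp(-error / sigma^2)."""
    scores = [p * math.exp(-e / sigma ** 2) for p, e in zip(prior, sq_errors)]
    total = sum(scores)
    return [s / total for s in scores]


# Two higher-level models issuing priors over three lower-level pairs (hypothetical values).
U_t = mix_priors([0.75, 0.25], [[1.0, 0.0, 0.0], [0.0, 0.5, 0.5]])
lam_low = lower_responsibilities(U_t, [0.5, 0.0, 0.0])
```

Because the higher-level responsibilities sum to 1 and each \(U_{t}^{i}\) is a probability vector, \(U_{t}\) is itself a probability vector, so a strongly favoured lower-level pair can keep most of the responsibility even when its prediction error is somewhat larger.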

Abstraction of the Sequence of Constituents

Suppose that the k-th higher-level model receives a sequence of actual inputs [\(x_{0},x_{1}, \ldots,x_{t}\)] (represented by \(X_{t}\)) and generates a prediction regarding a sequence of outputs [\(\hat{x}_{1}^{k}, \ldots,\hat{x}_{t}^{k}\)] (represented by \(\hat{X}_{t + 1}^{k}\)). The task of this higher level is to determine the prediction that has the least mismatch with the next actual input sequence. The comparison result can be represented as Eq. (4). The responsibility signal can be used to revise higher-level commands (see Eq. (5)), which determine the behavior of the subordinate level. The efference copy of these commands can also be used for further prediction (and revision). We also use a recurrent network to describe the higher-level sequence prediction \(\hat{X}_{t + 1}^{k} = f\left({W_{t}^{k}, X_{t}} \right)\), in which f is a nonlinear function that uses weights \(W_{t}^{k}\) to predict a vector of posterior probabilities. The network dynamics can be described as:

In the above differential equation, g(X) is the sigmoid function, whose derivative is g(X)(1 − g(X)); \(a_{i}\) is the activation and \(b_{i}\) the output of the i-th node. In Haruno et al.’s (2003) simulation, the models successfully learned two sequences and determined which one to reproduce in a given context, even when 5% noise was added.
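The selection step described above, finding the higher-level prediction with the least mismatch, can be sketched without the recurrent network by treating each model’s predicted sequence as given. The stored sequences and noisy observations below are invented for illustration and do not reproduce Haruno et al.’s (2003) simulation.

```python
def sequence_mismatch(predicted, observed):
    """Sum of squared differences over the part of the sequence observed so far."""
    return sum((p - o) ** 2 for p, o in zip(predicted, observed))


def select_sequence(predicted_sequences, observed):
    """Index k of the higher-level model whose predicted sequence best matches the input."""
    mismatches = [sequence_mismatch(seq, observed) for seq in predicted_sequences]
    return min(range(len(predicted_sequences)), key=lambda k: mismatches[k])


# Two stored sequences (hypothetical) and a noisy observation of the first three elements.
stored = [[0.0, 1.0, 0.0, 1.0], [1.0, 1.0, 0.0, 0.0]]
observed = [0.05, 0.95, 0.02]
k_best = select_sequence(stored, observed)
```

Because `zip` stops at the shorter argument, the comparison uses only the portion of the sequence heard so far, so the choice can be revised as more input arrives.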

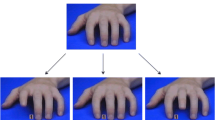

Control Variable Processing for Estimated Intention

Suppose that the highest-level models generate predictive control parameters \(\hat{p}_{t}^{1},\hat{p}_{t}^{2},\hat{p}_{t}^{3}, \ldots, \hat{p}_{t}^{n}\) at time t. Each predictive parameter is compared with the actually observed parameter \(p_{t}\) from the control variable encoding (Fig. 4). The comparison result is represented by the responsibility signal:

The mechanism of intention/parameter matching in control variable processing generates simulated intentions \(\hat{d}_{t}^{1},\hat{d}_{t}^{2},\hat{d}_{t}^{3}, \ldots, \hat{d}_{t}^{n}\). The final estimated intention is represented by:
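A sketch of this stage, under two assumptions the text does not spell out: the responsibility over predicted parameters takes the same softmax form used at the lower levels, and the final estimated intention \(\hat{d}_{t}\) is the responsibility-weighted sum of the simulated intentions. The one-hot encoding of intentions is likewise hypothetical.

```python
import math


def estimate_intention(p_observed, p_predicted, d_simulated, sigma=1.0):
    """Weight each simulated intention by how well its predicted control parameter
    matches the observed one (softmax form assumed by analogy with the lower levels)."""
    errs = [sum((a - b) ** 2 for a, b in zip(p_observed, p)) for p in p_predicted]
    e_min = min(errs)
    raw = [math.exp(-(e - e_min) / sigma ** 2) for e in errs]
    total = sum(raw)
    lams = [r / total for r in raw]
    dim = len(d_simulated[0])
    d_hat = [sum(lam * d[k] for lam, d in zip(lams, d_simulated)) for k in range(dim)]
    return d_hat, lams


# Two candidate intentions encoded as one-hot vectors (hypothetical encoding).
d_hat, lams = estimate_intention([1.0], [[1.0], [3.0]], [[1.0, 0.0], [0.0, 1.0]])
```

With one-hot intentions, \(\hat{d}_{t}\) doubles as a probability distribution over the candidates, and its largest component names the most likely intention.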

Searching for Meaning

Here, we use Oztop et al.’s (2005) mental state search algorithm to infer the meaning that the speaker intends to convey. To initialize the algorithm, we set \(T_{k}\) and \(S_{k}\) to empty sequences (\(T_{k} = S_{k} = []\)), where \(T_{k}\) and \(S_{k}\) represent the sequences of observed and mentally simulated control-variable vectors extracted under mental state k. Then repeat steps (1)–(5) from speech onset to speech end:

1. Pick the next possible mental state j (which can be thought of as an index of the possible referent to which the speaker is referring).

2. Observe: Extract the relevant control variables \(x_{j}^{i}\) based on the hypothesized mental state j, and append them to \(T_{j}\) (\(T_{j} = [T_{j}, x_{j}^{i}]\)). Here, i indicates that the collected data were placed in the i-th position of the visual control variable sequence.

3. Simulate: Mentally simulate speech with mental state j while storing the simulated control variables \(x_{j}\) in \(S_{j}\) (\(S_{j} = [x_{j}^{0}, x_{j}^{1}, \ldots, x_{j}^{N}]\), where N is the number of control variables collected during observation).

4. Compare: Compute the discounted difference between \(T_{j}\) and \(S_{j}\), where N is the length of \(T_{j}\) and \(S_{j}\):

\[D_{N} = \frac{1 - \gamma}{1 - \gamma^{N + 1}} \sum_{i = 0}^{N} \left(x_{\text{sim}}^{i} - x^{i}\right)^{T} \mathbf{W} \left(x_{\text{sim}}^{i} - x^{i}\right) \gamma^{N - i}\]

where \(x_{\text{sim}}^{i} \in S_{j}\), \(x^{i} \in T_{j}\), \(\mathbf{W}\) is a diagonal matrix normalizing the components of \(x^{i}\), and γ is the discount factor.

5. If \(D_{N}\) is the smallest so far, set \(j_{\min} = j\).

Return: \(j_{\min}\) (the observer infers that \(j_{\min}\) is the actor’s intended meaning).
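The search loop can be sketched in Python. For brevity the sketch processes complete observed and simulated sequences per candidate instead of extending \(T_{j}\) and \(S_{j}\) incrementally from speech onset to speech end, and `observe` and `simulate` are hypothetical callables standing in for control-variable extraction and mental simulation. The discount factor must satisfy 0 < γ < 1 for the normalization to be defined.

```python
def discounted_difference(sim, obs, w_diag, gamma):
    """D_N = (1 - g) / (1 - g^(N+1)) * sum_i (x_sim^i - x^i)^T W (x_sim^i - x^i) g^(N-i),
    with W diagonal (entries w_diag) and N the last index of the sequences."""
    n = len(obs) - 1
    total = 0.0
    for i, (xs, xo) in enumerate(zip(sim, obs)):
        quad = sum(w * (a - b) ** 2 for w, a, b in zip(w_diag, xs, xo))
        total += quad * gamma ** (n - i)  # recent samples weigh more
    return (1 - gamma) / (1 - gamma ** (n + 1)) * total


def infer_mental_state(candidates, observe, simulate, w_diag, gamma=0.9):
    """Return the candidate j whose simulated sequence S_j best matches T_j."""
    j_min, d_min = None, float("inf")
    for j in candidates:
        d = discounted_difference(simulate(j), observe(j), w_diag, gamma)
        if d < d_min:
            j_min, d_min = j, d
    return j_min


# Hypothetical referents with 1-D control variables.
sims = {"cup": [[1.0], [2.0]], "ball": [[0.0], [0.0]]}
best = infer_mental_state(["ball", "cup"], lambda j: [[1.0], [2.0]], sims.get, [1.0])
```

The normalizing factor \((1 - \gamma)/(1 - \gamma^{N + 1})\) makes the discount weights sum to 1, so \(D_{N}\) stays comparable as the sequences grow during the utterance.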

Cite this article

Hung, TW. How Sensorimotor Interactions Enable Sentence Imitation. Minds & Machines 25, 321–338 (2015). https://doi.org/10.1007/s11023-015-9384-8

DOI: https://doi.org/10.1007/s11023-015-9384-8