Abstract

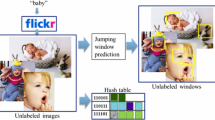

Automatic image annotation enables efficient indexing and retrieval of images in large-scale image collections, where manual labeling is expensive and labor-intensive. This paper proposes a novel approach that automatically annotates images with coherent semantic concepts learned from image content. It exploits sub-visual distributions within each visually complex semantic class, disambiguates visual descriptors in a visual context space, and assigns image annotations by modeling image semantic context. The sub-visual distributions are discovered by a clustering algorithm and probabilistically associated with semantic classes using mixture models. The clustering algorithm handles the intra-category visual diversity of the semantic concepts even under the curse of dimensionality that affects the image descriptors. The mixture models that formulate the sub-visual distributions then assign relevant semantic classes to local descriptors. To capture unambiguous and visually consistent local descriptors, the visual context is learned by a probabilistic Latent Semantic Analysis (pLSA) model that ties images to their visual contents. To maximize annotation consistency for each image, a second context model characterizes the contextual relationships between semantic concepts using a concept graph. Image labels are thus specialized for each image in a scene-centric view, in which images are treated as unified entities. In this way, highly consistent annotations, closely correlated with the visual contents and true semantics of the images, are probabilistically assigned. Experimental validation on several datasets shows that this method outperforms state-of-the-art annotation algorithms while effectively capturing consistent labels for each image.
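The abstract names pLSA as the model that learns the visual context but does not give its formulation. As a rough illustration only, the sketch below fits textbook pLSA by EM on a toy image-by-visual-word count matrix. The function name `plsa`, its parameters, and the toy data are assumptions made for this example; they are not taken from the paper, and the paper's actual model may differ in inputs and details.

```python
import numpy as np

def plsa(counts, n_topics, n_iter=50, seed=0):
    """Fit standard pLSA by EM (illustrative sketch, not the paper's code).

    counts: (n_docs, n_words) array of visual-word counts per image.
    Returns P(z|d) of shape (n_docs, n_topics) and
            P(w|z) of shape (n_topics, n_words).
    """
    rng = np.random.default_rng(seed)
    n_docs, n_words = counts.shape
    eps = 1e-12  # guards against division by zero

    # Random initialisation, each row normalised to a distribution.
    p_z_d = rng.random((n_docs, n_topics))
    p_z_d /= p_z_d.sum(axis=1, keepdims=True)
    p_w_z = rng.random((n_topics, n_words))
    p_w_z /= p_w_z.sum(axis=1, keepdims=True)

    for _ in range(n_iter):
        # E-step: P(z|d,w) proportional to P(z|d) * P(w|z);
        # array shape is (n_docs, n_topics, n_words).
        joint = p_z_d[:, :, None] * p_w_z[None, :, :]
        post = joint / (joint.sum(axis=1, keepdims=True) + eps)

        # M-step: re-estimate both distributions from expected counts.
        weighted = counts[:, None, :] * post
        p_w_z = weighted.sum(axis=0)
        p_w_z /= p_w_z.sum(axis=1, keepdims=True) + eps
        p_z_d = weighted.sum(axis=2)
        p_z_d /= p_z_d.sum(axis=1, keepdims=True) + eps

    return p_z_d, p_w_z

# Toy data (hypothetical): 4 images described by 4 visual words.
counts = np.array([[5, 4, 0, 0],
                   [4, 6, 0, 0],
                   [0, 0, 5, 5],
                   [0, 1, 6, 4]], dtype=float)
p_z_d, p_w_z = plsa(counts, n_topics=2)
```

Each row of `p_z_d` is a topic mixture for one image, which is the kind of low-dimensional context representation a pLSA model provides. The dense three-dimensional posterior array is only practical for small vocabularies; a larger codebook would call for a sparse formulation.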

Cite this article

Zand, M., Doraisamy, S., Abdul Halin, A. et al. Visual and semantic context modeling for scene-centric image annotation. Multimed Tools Appl 76, 8547–8571 (2017). https://doi.org/10.1007/s11042-016-3500-5

DOI: https://doi.org/10.1007/s11042-016-3500-5