Abstract

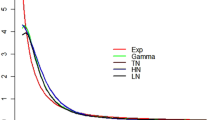

We introduce a stochastic frontier model in which inefficiency follows the Rayleigh distribution, a one-parameter distribution that has a non-zero mode yet is easy to estimate and use. We show how this model can be estimated using several estimation methods. A Rayleigh model with environmental variables and time-varying features is also considered. Finally, the model is tested against exponential and half-normal models using two real data sets.

References

Aigner D, Lovell C, Schmidt P (1977) Formulation and estimation of stochastic frontier production function models. J Econom 6:21–37

Alvarez A, Amsler C, Orea L, Schmidt P (2006) Interpreting and testing the scaling property in models where inefficiency depends on firm characteristics. J Prod Anal 25:201–212

Battese G, Coelli T (1992) Frontier production functions, technical efficiency and panel data: with application to paddy farmers in India. J Prod Anal 3:153–169

Bishop CM (2006) Pattern recognition and machine learning. Springer, New York

Coelli T, Rao P, O’Donnell C, Battese G (2005) An introduction to efficiency and productivity analysis, 2nd edn. Kluwer Academic Publishers, Boston

Frühwirth-Schnatter S (2006) Finite mixture and Markov switching models. Springer, New York

Gelfand A, Dey D (1994) Bayesian model choice: asymptotics and exact calculations. J Royal Stat Soc B 56:501–514

Geweke J (2005) Contemporary Bayesian econometrics and statistics. Wiley, New York

Greene W (1990) A gamma-distributed stochastic frontier model. J Econom 46:141–163

Greene W (2003) Simulated likelihood estimation of the normal-gamma stochastic frontier function. J Prod Anal 19:179–190

Greene W (2008) The econometric approach to efficiency analysis. In: Fried HO, Lovell CAK, Schmidt SS (eds) The measurement of productive efficiency and productivity growth, ch 2. Oxford University Press, Oxford, pp 92–250

Greene W, Alvarez A, Arias C (2004) Accounting for unobservables in production models: management and inefficiency. Econometric Society 2004 Australasian Meetings, Econometric Society

Griffin J, Steel M (2007) Bayesian stochastic frontier analysis using WinBUGS. J Prod Anal 27:163–176

Griffin J, Steel M (2008) Flexible mixture modelling of stochastic frontiers. J Prod Anal 29:33–50

Hajargasht G, Griffiths W (2013) Estimation and testing of stochastic frontier models using variational Bayes. Paper presented at EWEPA 13

Hajargasht G, Woźniak T (2013) Accurate computation of marginal data density using variational Bayes. Unpublished manuscript

Johnson NL, Kotz S, Balakrishnan N (1994) Continuous univariate distributions, vol 2. Wiley, Hoboken, NJ

Koop G, Steel M (2001) Bayesian analysis of stochastic frontier models. In: Baltagi B (ed) A companion to theoretical econometrics. Blackwell Publishers, Oxford, pp 520–573

Kumbhakar S (1990) Production frontiers, panel data, and time-varying technical inefficiency. J Econom 46:201–211

Kumbhakar S, Lovell K (2000) Stochastic frontier analysis. Cambridge University Press, Cambridge

Lee Y, Schmidt P (1993) A production frontier model with flexible temporal variation in technical efficiency. In: Fried H, Lovell K, Schmidt S (eds) The measurement of productive efficiency: techniques and applications. Oxford University Press, New York, pp 237–255

Meeusen W, van den Broeck J (1977) Efficiency estimation from Cobb-Douglas production functions with composed error. Int Econom Rev 18:435–444

Ormerod J, Wand M (2010) Explaining variational approximations. Am Stat 64:140–153

Ritter C, Simar L (1997) Pitfalls of normal-gamma stochastic frontier models. J Prod Anal 8:167–182

Sickles R (2005) Panel estimators and the identification of firm specific efficiency levels in parametric, semiparametric and nonparametric settings. J Econom 126:305–334

Stevenson R (1980) Likelihood functions for generalized stochastic frontier functions. J Econom 13:57–66

Tsionas G (2007) Efficiency measurement with the Weibull stochastic frontier. Oxford Bull Econ Stat 69:693–706

Van den Broeck J, Koop G, Osiewalski J, Steel M (1994) Stochastic frontier models: a Bayesian perspective. J Econom 61:273–303

Acknowledgments

Gholamreza Hajargasht is grateful to Bill Griffiths and Prasada Rao for their helpful comments and suggestions. The author would also like to thank three anonymous referees for their valuable comments.

Appendix: Bayesian estimation and model selection

The Bayesian approach to the estimation of stochastic frontier models is popular and has been described in Koop and Steel (2001) among others. Griffin and Steel (2007) have also shown how a wide range of stochastic frontier models can be estimated using the WinBUGS software. Here we consider the model

For Bayesian analysis, we need priors for all parameters. We assume the following standard priors:

where \(G(\cdot,\cdot)\) denotes a gamma distribution. Using Bayes' theorem, we obtain the log of the posterior as follows

where \(C_1\) is an unknown constant. It is straightforward to obtain the conditional densities and set up the following Gibbs sampler

where \(\mathbf{i}\) is a vector of ones of length \(T\), \(\otimes\) denotes the Kronecker product, \(\sigma^{-2} = \sigma_u^{-2} + T\sigma_v^{-2}\) (with \(\sigma = (\sigma^{-2})^{-1/2}\)),
\[ \mu_i = \pm \frac{\sigma_v^{-2}\sum_{t=1}^{T}(\mathbf{x}_{it}\boldsymbol{\beta} - y_{it})}{\sigma_u^{-2} + T\sigma_v^{-2}}, \]
and \(C_i\) is the constant that turns the last distribution into a proper density. By direct integration one can show that
\[ C_i = \sqrt{2\pi}\,\sigma\left\{\sigma\,\phi(\mu_i/\sigma) + \mu_i\,\Phi(\mu_i/\sigma)\right\}, \]
where \(\phi(\cdot)\) and \(\Phi(\cdot)\) are the standard normal pdf and cdf.
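The closed form for \(C_i\) can be checked with a short derivation. The sketch assumes, as the expression for \(C_i\) implies, that the conditional for \(u_i\) is \(p(u_i \mid \cdot) = C_i^{-1} u_i \exp\{-(u_i - \mu_i)^2/(2\sigma^2)\}\) for \(u_i \ge 0\). This is the form obtained by combining a Rayleigh density \(\sigma_u^{-2} u_i \exp(-\sigma_u^{-2} u_i^2/2)\) with normal noise in an assumed production frontier \(y_{it} = \mathbf{x}_{it}\boldsymbol{\beta} + v_{it} - u_i\), which yields the upper sign in \(\mu_i\); a cost frontier with \(+u_i\) yields the lower sign. Substituting \(z = (u - \mu_i)/\sigma\):

```latex
\begin{aligned}
C_i &= \int_0^{\infty} u\, e^{-(u-\mu_i)^2/(2\sigma^2)}\, du
     = \sigma \int_{-\mu_i/\sigma}^{\infty} (\mu_i + \sigma z)\, e^{-z^2/2}\, dz \\
    &= \sigma \left[ \mu_i \sqrt{2\pi}\, \Phi(\mu_i/\sigma) + \sigma\, e^{-\mu_i^2/(2\sigma^2)} \right]
     = \sqrt{2\pi}\, \sigma \left\{ \sigma\, \phi(\mu_i/\sigma) + \mu_i\, \Phi(\mu_i/\sigma) \right\}.
\end{aligned}
```

The two integrals used are \(\int_{-a}^{\infty} e^{-z^2/2}\,dz = \sqrt{2\pi}\,\Phi(a)\) and \(\int_{-a}^{\infty} z\, e^{-z^2/2}\,dz = e^{-a^2/2}\) with \(a = \mu_i/\sigma\). Setting \(\mu_i = 0\) gives \(C_i = \sigma^2\), the normalizing constant of a Rayleigh density with scale \(\sigma\), which is a quick consistency check.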

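The conditional for \(\boldsymbol{\beta}\) is normal and those for \(\sigma_v^{-2}\) and \(\sigma_u^{-2}\) are gamma, so drawing from them is straightforward. The conditional for \(u_i\), however, is not of a well-known form. Methods such as Metropolis–Hastings could be used to draw from it, but by direct integration we can obtain its cdf as
\[ G(u_i) = \frac{\sigma\left\{\phi(\mu_i/\sigma) - \phi\big((u_i - \mu_i)/\sigma\big)\right\} + \mu_i\left\{\Phi\big((u_i - \mu_i)/\sigma\big) - \Phi(-\mu_i/\sigma)\right\}}{\sigma\,\phi(\mu_i/\sigma) + \mu_i\,\Phi(\mu_i/\sigma)}, \qquad u_i \ge 0. \]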

Therefore, an inverse-cdf method (see, e.g., Geweke 2005) can be used to draw from it: solving the nonlinear equation \(G(u_i^{(r)}) = F_i^{(r)}\) for \(u_i^{(r)}\), where \(F_i^{(r)}\) is the \(r\)th random draw from a uniform(0, 1) distribution, provides a draw from this distribution.
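A minimal sketch of the inverse-cdf step in Python, using only the standard library. It assumes the conditional density is proportional to \(u_i \exp\{-(u_i - \mu_i)^2/(2\sigma^2)\}\) on \(u_i \ge 0\), as implied by \(C_i\), and codes the closed-form cdf of that density. The function names are hypothetical, and bisection is our choice of root finder; any bracketing solver would do, since \(G\) is increasing.

```python
import math
import random


def norm_pdf(z: float) -> float:
    """Standard normal density phi(z)."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def norm_cdf(z: float) -> float:
    """Standard normal cdf Phi(z)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def cond_cdf(u: float, mu: float, sigma: float) -> float:
    """G(u) for the density proportional to u * exp(-(u - mu)^2 / (2 sigma^2)), u >= 0.

    The denominator equals C_i / (sqrt(2 pi) sigma). It loses accuracy when
    mu / sigma is very negative, so this sketch is not tuned for extreme values.
    """
    if u <= 0.0:
        return 0.0
    a, b = mu / sigma, (u - mu) / sigma
    denom = sigma * norm_pdf(a) + mu * norm_cdf(a)
    num = sigma * (norm_pdf(a) - norm_pdf(b)) + mu * (norm_cdf(b) - norm_cdf(-a))
    return num / denom


def inverse_cdf(f: float, mu: float, sigma: float, iters: int = 100) -> float:
    """Solve G(u) = f for u by bisection."""
    lo, hi = 0.0, max(mu, 0.0) + 10.0 * sigma
    for _ in range(60):  # widen the bracket until G(hi) >= f
        if cond_cdf(hi, mu, sigma) >= f:
            break
        hi *= 2.0
    for _ in range(iters):
        mid = 0.5 * (lo + hi)
        if cond_cdf(mid, mu, sigma) < f:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


def draw_u(mu: float, sigma: float, rng: random.Random) -> float:
    """One draw u_i^(r): solve G(u) = F with F ~ uniform(0, 1)."""
    return inverse_cdf(rng.random(), mu, sigma)


rng = random.Random(0)
sample = [draw_u(0.8, 0.5, rng) for _ in range(2000)]
print(sum(sample) / len(sample))
```

Within a Gibbs sweep, `mu` and `sigma` would be recomputed from the current \(\boldsymbol{\beta}\), \(\sigma_v^{-2}\) and \(\sigma_u^{-2}\) before each draw. With `mu = 0` the cdf reduces to the Rayleigh cdf \(1 - \exp\{-u^2/(2\sigma^2)\}\), which gives an easy test.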

1.1 Model selection

Bayesian model selection relies on the Bayes factor
\[ BF_{12} = \frac{p(M_1 \mid \mathbf{y})}{p(M_2 \mid \mathbf{y})} = \frac{ML_1}{ML_2}, \]

where the prior probabilities of models \(M_1\) and \(M_2\) are taken to be equal and \(ML_j = p(\mathbf{y} \mid M_j)\) denotes the marginal likelihood of model \(M_j\). Unfortunately, calculating marginal likelihoods can be challenging, even with Markov chain Monte Carlo (MCMC). A range of methods for calculating marginal likelihoods has been proposed (see, e.g., Frühwirth-Schnatter (2006) for a review). In most cases, these methods require a candidate distribution. Here we follow Hajargasht and Woźniak (2013) and use a variational Bayes posterior (see below for further information) as the candidate for computing the marginal likelihood. Specifically, we use the framework proposed by Gelfand and Dey (1994) to write
\[ \frac{1}{ML} \approx \frac{1}{M}\sum_{m=1}^{M} \frac{q(\boldsymbol{\theta}_m \mid \mathbf{y})}{p(\mathbf{y} \mid \boldsymbol{\theta}_m)\, p(\boldsymbol{\theta}_m)}, \]

where the \(\boldsymbol{\theta}_m\), \(m = 1, \ldots, M\), are MCMC draws from the posterior \(p(\boldsymbol{\theta} \mid \mathbf{y})\) and \(q(\boldsymbol{\theta}_m \mid \mathbf{y})\) is the variational Bayes posterior evaluated at draw \(\boldsymbol{\theta}_m\). This method provides accurate estimates if the candidate density \(q(\boldsymbol{\theta} \mid \mathbf{y})\) is a good approximation to the posterior and has narrower tails than it, which is normally the case with a variational Bayes posterior. In Sect. 5 we use this methodology to compare models.
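The Gelfand–Dey identity estimates \(1/ML\) by averaging \(q(\boldsymbol{\theta}_m \mid \mathbf{y}) / [p(\mathbf{y} \mid \boldsymbol{\theta}_m)\, p(\boldsymbol{\theta}_m)]\) over posterior draws. Below is a minimal Python sketch that works on the log scale with a log-sum-exp to avoid overflow. The inputs are assumed to be precomputed log values at each draw. The toy normal model is a hypothetical test case, chosen because its marginal likelihood is known exactly; it stands in for the frontier model, and exact posterior draws stand in for MCMC output.

```python
import math
import random


def log_sum_exp(values):
    """Numerically stable log(sum(exp(v) for v in values))."""
    m = max(values)
    return m + math.log(sum(math.exp(v - m) for v in values))


def gelfand_dey_log_ml(log_q, log_lik, log_prior):
    """log ML from 1/ML ~ (1/M) sum_m q(theta_m) / [p(y | theta_m) p(theta_m)]."""
    terms = [q - l - p for q, l, p in zip(log_q, log_lik, log_prior)]
    return -(log_sum_exp(terms) - math.log(len(terms)))


def log_normal_pdf(x, mean, var):
    return -0.5 * (math.log(2.0 * math.pi * var) + (x - mean) ** 2 / var)


# Hypothetical toy model: theta ~ N(0, 1), y | theta ~ N(theta, 1).
# Posterior is N(y/2, 1/2); the exact marginal likelihood is N(y; 0, 2).
rng = random.Random(42)
y = 0.8
draws = [rng.gauss(y / 2, math.sqrt(0.5)) for _ in range(5000)]
log_lik = [log_normal_pdf(y, t, 1.0) for t in draws]
log_prior = [log_normal_pdf(t, 0.0, 1.0) for t in draws]
# Candidate with narrower tails than the posterior (variance 0.4 < 0.5).
log_q = [log_normal_pdf(t, y / 2, 0.4) for t in draws]

estimate = gelfand_dey_log_ml(log_q, log_lik, log_prior)
exact = log_normal_pdf(y, 0.0, 2.0)
print(f"Gelfand-Dey: {estimate:.4f}, exact: {exact:.4f}")
```

A log Bayes factor would then be the difference of two such estimates. If the candidate equals the exact posterior, every term equals \(1/ML\) and the estimator has zero variance. Narrower candidate tails keep the ratio \(q/p(\boldsymbol{\theta} \mid \mathbf{y})\) bounded.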

1.2 Variational Bayes

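Variational Bayes (VB) is an approximate method for Bayesian inference (see, e.g., Bishop 2006; Ormerod and Wand 2010; for its application to stochastic frontier models, see Hajargasht and Griffiths 2013). The idea behind VB is to approximate an intractable posterior \(p(\boldsymbol{\theta} \mid \mathbf{y})\) with a more tractable density \(q(\boldsymbol{\theta})\). The optimal \(q(\boldsymbol{\theta})\) is obtained by minimizing the Kullback–Leibler divergence between the simpler density and the true posterior:
\[ q^{*}(\boldsymbol{\theta}) = \arg\min_{q} \int q(\boldsymbol{\theta}) \log \frac{q(\boldsymbol{\theta})}{p(\boldsymbol{\theta} \mid \mathbf{y})}\, d\boldsymbol{\theta}. \]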

To obtain a tractable approximation we have to make simplifying assumptions about \(q(\boldsymbol{\theta})\). A common assumption in variational Bayes is the factorization
\[ q(\boldsymbol{\theta}) = \prod_{k=1}^{K} q_k(\boldsymbol{\theta}_k), \]

where \(\{\boldsymbol{\theta}_1, \ldots, \boldsymbol{\theta}_K\}\) is a partition of \(\boldsymbol{\theta}\) and the \(q_k\) are probability density functions. Using results from the calculus of variations and some simple algebra, it can be shown that the optimal \(q_k\), denoted \(q_k^{*}\), satisfy
\[ q_k^{*}(\boldsymbol{\theta}_k) \propto \exp\left\{ E_{-\boldsymbol{\theta}_k} \log p(\mathbf{y}, \boldsymbol{\theta}) \right\}, \qquad k = 1, \ldots, K, \tag{21} \]

where \(E_{-\boldsymbol{\theta}_k}\) denotes expectation with respect to the density \(\prod_{j \ne k} q_j(\boldsymbol{\theta}_j)\). Because each \(q_k^{*}\) depends on the others, an iterative procedure is required to find densities that satisfy these equations simultaneously.
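To make the iteration concrete, here is a minimal coordinate-ascent sketch for a deliberately simple model, not the frontier model: \(x_n \sim N(\mu, \tau^{-1})\) with priors \(\mu \mid \tau \sim N(\mu_0, (\lambda_0\tau)^{-1})\) and \(\tau \sim G(a_0, b_0)\), under the factorization \(q(\mu)\,q(\tau)\). The updates follow the standard textbook treatment of this example (Bishop 2006, Sect. 10.1.3). The function name, hyperparameter values and simulated data are illustrative assumptions.

```python
import random


def cavi_normal(x, mu0=0.0, lam0=1e-3, a0=1e-3, b0=1e-3, tol=1e-10, max_iter=500):
    """Mean-field VB for x_n ~ N(mu, 1/tau), mu | tau ~ N(mu0, 1/(lam0 tau)), tau ~ Gamma(a0, b0).

    Returns the parameters of q(mu) = N(mu_n, 1/lam_n) and q(tau) = Gamma(a_n, b_n)
    (shape, rate), iterating the two coupled updates until E[tau] stops changing.
    """
    n = len(x)
    xbar = sum(x) / n
    mu_n = (lam0 * mu0 + n * xbar) / (lam0 + n)  # does not depend on q(tau)
    a_n = a0 + (n + 1) / 2.0                     # does not depend on q(mu)
    ss = sum((xk - mu_n) ** 2 for xk in x)       # sum of (x_k - mu_n)^2, fixed
    e_tau = a0 / b0                              # starting value for E[tau]
    b_n = b0
    for _ in range(max_iter):
        # q(mu) update: its precision depends on q(tau) only through E[tau].
        lam_n = (lam0 + n) * e_tau
        # q(tau) update: E_mu[(x_k - mu)^2] = (x_k - mu_n)^2 + 1/lam_n, likewise for the prior term.
        e_sq = ss + n / lam_n
        e_prior = lam0 * ((mu_n - mu0) ** 2 + 1.0 / lam_n)
        b_n = b0 + 0.5 * (e_sq + e_prior)
        new_e_tau = a_n / b_n
        converged = abs(new_e_tau - e_tau) <= tol * new_e_tau
        e_tau = new_e_tau
        if converged:
            break
    lam_n = (lam0 + n) * e_tau  # make q(mu) consistent with the final q(tau)
    return mu_n, lam_n, a_n, b_n


rng = random.Random(7)
data = [rng.gauss(2.0, 0.5) for _ in range(5000)]
mu_n, lam_n, a_n, b_n = cavi_normal(data)
print(f"E[mu] = {mu_n:.3f}, E[tau] = {a_n / b_n:.3f}")
```

Each pass applies the optimality condition to one factor while holding the other's moments fixed, which is the same pattern the frontier-model iteration follows with more blocks.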

To apply VB to our stochastic frontier model we consider the following factorization

Note that for brevity we have suppressed the subscripts on the q densities. Using (14) and (21) we can derive the optimal densities as

where \(\overline{\sigma^{-2}} = \overline{\sigma_u^{-2}} + T\,\overline{\sigma_v^{-2}}\), \(\tilde{\mu}_i = \pm \frac{\overline{\sigma_v^{-2}}}{\overline{\sigma^{-2}}}\sum_{t=1}^{T}(\mathbf{x}_{it}\bar{\boldsymbol{\beta}} - y_{it})\), \(V_{u_i}\) is the variance of \(u_i\), and \(C_i\) is the constant that makes the last distribution a proper density:
\[ C_i = \sqrt{\frac{2\pi}{\overline{\sigma^{-2}}}}\left\{\sqrt{\frac{1}{\overline{\sigma^{-2}}}}\,\phi\!\left(\tilde{\mu}_i\sqrt{\overline{\sigma^{-2}}}\right) + \tilde{\mu}_i\,\Phi\!\left(\tilde{\mu}_i\sqrt{\overline{\sigma^{-2}}}\right)\right\}. \]

In (23), tr denotes the trace of a matrix. The quantities \(\bar{u}_i\), \(\overline{\sigma^{-2}}\), \(\bar{\boldsymbol{\beta}}\) and \(\bar{V}_{\beta}\) are the relevant means and variances from the \(q^{*}\) densities in (23). They appear in the above distributions when we take expectations of the form \(E_{-\boldsymbol{\theta}_k} \log p(\mathbf{y}, \boldsymbol{\theta})\), as described in Eq. (21). Using well-known results on expectations for normal and gamma distributions, and direct integration in the case of \(u_i\), we can set up an iterative algorithm to find values of \(\bar{u}_i\), \(\overline{\sigma^{-2}}\), \(\bar{\boldsymbol{\beta}}\), \(V_{u_i}\) and \(\bar{V}_{\beta}\) that simultaneously satisfy the equations in (23). The following parameters should be iterated until convergence.

where
\[ \bar{u}_i = \frac{\frac{\tilde{\mu}_i}{\sqrt{\overline{\sigma^{-2}}}}\,\phi\!\left(\tilde{\mu}_i\sqrt{\overline{\sigma^{-2}}}\right) + \left(\tilde{\mu}_i^{2} + \frac{1}{\overline{\sigma^{-2}}}\right)\Phi\!\left(\tilde{\mu}_i\sqrt{\overline{\sigma^{-2}}}\right)}{\sqrt{\frac{1}{\overline{\sigma^{-2}}}}\,\phi\!\left(\tilde{\mu}_i\sqrt{\overline{\sigma^{-2}}}\right) + \tilde{\mu}_i\,\Phi\!\left(\tilde{\mu}_i\sqrt{\overline{\sigma^{-2}}}\right)}, \]
\[ \overline{u_i^{2}} = \frac{\frac{\tilde{\mu}_i^{2} + 2/\overline{\sigma^{-2}}}{\sqrt{\overline{\sigma^{-2}}}}\,\phi\!\left(\tilde{\mu}_i\sqrt{\overline{\sigma^{-2}}}\right) + \tilde{\mu}_i\left(\tilde{\mu}_i^{2} + \frac{3}{\overline{\sigma^{-2}}}\right)\Phi\!\left(\tilde{\mu}_i\sqrt{\overline{\sigma^{-2}}}\right)}{\sqrt{\frac{1}{\overline{\sigma^{-2}}}}\,\phi\!\left(\tilde{\mu}_i\sqrt{\overline{\sigma^{-2}}}\right) + \tilde{\mu}_i\,\Phi\!\left(\tilde{\mu}_i\sqrt{\overline{\sigma^{-2}}}\right)}, \]
and \(V_{u_i} = \overline{u_i^{2}} - \bar{u}_i^{2}\).
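A short Python sketch of the \(u_i\) moment updates, useful for checking an implementation. It assumes, as \(C_i\) implies, that \(q^{*}(u_i) \propto u_i \exp\{-\overline{\sigma^{-2}}(u_i - \tilde{\mu}_i)^2/2\}\) on \(u_i \ge 0\), and computes \(\bar{u}_i\), \(\overline{u_i^{2}}\) and \(V_{u_i}\) by direct integration of that density, writing \(s = (\overline{\sigma^{-2}})^{-1/2}\). The function name is hypothetical. At \(\tilde{\mu}_i = 0\), \(q^{*}(u_i)\) is a Rayleigh density with scale \(s\), so the mean should be \(s\sqrt{\pi/2}\) and the second moment \(2s^2\).

```python
import math


def norm_pdf(z):
    """Standard normal density phi(z)."""
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi)


def norm_cdf(z):
    """Standard normal cdf Phi(z)."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def vb_u_moments(mu_tilde, prec):
    """Moments of q*(u) proportional to u * exp(-prec * (u - mu_tilde)^2 / 2) on u >= 0.

    `prec` plays the role of the posterior mean of sigma^{-2}.
    Returns (E[u], E[u^2], Var[u]).
    """
    s = 1.0 / math.sqrt(prec)
    z = mu_tilde * math.sqrt(prec)
    pdf, cdf = norm_pdf(z), norm_cdf(z)
    denom = s * pdf + mu_tilde * cdf  # equals C_i / (sqrt(2 pi) s)
    m1 = (mu_tilde * s * pdf + (mu_tilde ** 2 + s ** 2) * cdf) / denom
    m2 = (s * (mu_tilde ** 2 + 2.0 * s ** 2) * pdf
          + mu_tilde * (mu_tilde ** 2 + 3.0 * s ** 2) * cdf) / denom
    return m1, m2, m2 - m1 ** 2


# Rayleigh check at mu_tilde = 0 with s = 0.5.
m1, m2, var = vb_u_moments(0.0, 4.0)
print(m1, 0.5 * math.sqrt(math.pi / 2), m2, 0.5)
```

In a full VB loop these moments would be recomputed for every \(i\) after each update of \(\bar{\boldsymbol{\beta}}\) and \(\overline{\sigma^{-2}}\), and \(\bar{u}_i\), \(\overline{u_i^{2}}\) then feed back into the other \(q^{*}\) densities.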

Cite this article

Hajargasht, G. Stochastic frontiers with a Rayleigh distribution. J Prod Anal 44, 199–208 (2015). https://doi.org/10.1007/s11123-014-0417-8

DOI: https://doi.org/10.1007/s11123-014-0417-8