Abstract

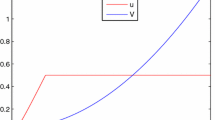

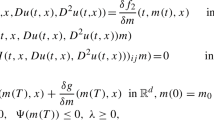

In this paper we give conditions for the existence of discounted robust optimal policies over an infinite planning horizon for a general class of controlled diffusion processes. By “robust” we mean that unknown, non-observable parameters affect the coefficients of the diffusion process. To obtain optimality, we recast the problem as a zero-sum game against nature, an approach also known as worst-case optimal control. Our analysis relies on dynamic programming and shows, among other facts, that the so-called Hamilton-Jacobi-Bellman equation has classical (twice differentiable) solutions. We illustrate our results with an example on pollution accumulation control.
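
For orientation, a discounted worst-case problem of this kind is commonly written as follows. This is only a generic sketch, not the formulation used in the paper: the symbols \(b\), \(\sigma\), \(c\), \(\alpha\), \(u\), \(\theta\), \(U\), \(\Theta\) and \(V\) are all assumptions introduced here for illustration, and the sketch assumes that the controller minimizes a running cost while nature picks the unknown parameter to maximize it (for a reward criterion the roles of \(\inf\) and \(\sup\) are exchanged).

```latex
% Illustrative notation only (not taken from the paper):
%   x(t)  state,  u(t) \in U control,  \theta(t) \in \Theta unknown parameter ("nature"),
%   b drift,  \sigma diffusion matrix,  c running cost,  \alpha > 0 discount rate.
\begin{align*}
  % Controlled diffusion whose drift depends on the unknown parameter
  dx(t) &= b\bigl(x(t),u(t),\theta(t)\bigr)\,dt + \sigma\bigl(x(t)\bigr)\,dW(t),
          \qquad x(0)=x, \\[4pt]
  % Worst-case (upper) value: the controller minimizes, nature maximizes
  V(x) &= \inf_{u(\cdot)}\,\sup_{\theta(\cdot)}\,
          \mathbb{E}_x\!\left[\int_0^\infty e^{-\alpha t}\,
          c\bigl(x(t),u(t),\theta(t)\bigr)\,dt\right], \\[4pt]
  % Dynamic programming (Hamilton-Jacobi-Bellman) equation for V
  \alpha V(x) &= \inf_{u\in U}\,\sup_{\theta\in\Theta}
          \Bigl\{\, c(x,u,\theta) + b(x,u,\theta)\cdot\nabla V(x)
          + \tfrac12\,\mathrm{Tr}\bigl[\sigma(x)\sigma(x)^{\top}\nabla^2 V(x)\bigr] \Bigr\}.
\end{align*}
```

In this sketch, a classical solution means a twice differentiable \(V\) that satisfies the last equation pointwise; a pair \((u,\theta)\) attaining the \(\inf\) and \(\sup\) at each state \(x\) would then be a natural candidate for a robust optimal policy and a worst-case response.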

Cite this article

López-Barrientos, J.D., Jasso-Fuentes, H. & Escobedo-Trujillo, B.A. Discounted robust control for Markov diffusion processes. TOP 23, 53–76 (2015). https://doi.org/10.1007/s11750-014-0323-2

DOI: https://doi.org/10.1007/s11750-014-0323-2