Abstract

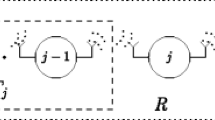

This paper studies the minimization of the average achievable distortion (AAD) of a Gaussian source at the destination of a two-hop block fading relay channel. Communication takes place through a Decode and Forward (DF) relay, with no direct source-destination link. The receiver of each hop is assumed to know the corresponding channel state information (CSI), while the transmitters do not. The paper explores how effectively successive refinement source coding, combined with multi-layer channel coding, minimizes the AAD. In this regard, a closed-form optimal power allocation policy across the code layers of the second hop is derived, and, using a proper curve fitting approach, a close-to-optimal power allocation policy for the first hop is devised. It is numerically shown that the DF strategy closely follows Amplify and Forward (AF) relaying, while there is a sizable gap between the AAD of multi-layer coding and that of single-layer source coding.

Notes

The condition under which \(\mathcal {G}(.)\) is an increasing function is provided in Appendix 1.2.

References

Van Der Meulen EC (1971) Three-terminal communication channels. Adv Appl Probab 3 (1):120–154

Cover T, Gamal AE (1979) Capacity theorems for the relay channel. IEEE Trans Inf Theory 25(5):572

Steiner A, Shamai S (2006) Single-user broadcasting protocols over a two-hop relay fading channel. IEEE Trans Inf Theory 52(11):4821–4838

Sharma A, Aggarwal M, Ahuja S, et al. (2018) Performance analysis of DF-relayed cognitive underlay networks over EGK fading channels. AEU-Int J Electron Commun 83:533–540

Luan NT, Do DT (2017) A new look at AF two-way relaying networks: energy harvesting architecture and impact of co-channel interference. Ann Telecommun 72(11-12):533–540

Hadj Alouane W, Hamdi N, Meherzi S (2015) Semi-blind two-way AF relaying over Nakagami-m fading environment. Ann Telecommun 70(1-2):49–62

Shamai S (1997) A broadcast strategy for the Gaussian slowly fading channel. In: IEEE International symposium on information theory, p 150

Shamai S, Steiner A (2003) A broadcast approach for a single user slowly fading MIMO channel. IEEE Trans Inf Theory 49(10):2617

Steiner A, Shamai S (2006) Achievable rates with imperfect transmitter side information using a broadcast transmission strategy. IEEE Trans Wirel Commun 7(3):1043–1051

Liang Y, Lai L, Poor HV, Shamai S (2014) A broadcast approach for fading wiretap channels. IEEE Trans Inf Theory 60(2):842–858

Pourahmadi V, Motahari AS, Khandani AK (2013) Multilayer codes for broadcasting over quasi-static fading MIMO networks. IEEE Trans Commun 61(4):1573–1583

Cohen KM, Steiner A, Shamai S (2020) On the broadcast approach over parallel MIMO two-state fading channel. International Zurich Seminar on Information and Communication (IZS) 26–28

Khodam Hoseini SA, Akhlaghi S (2018) Proper multi-layer coding in fading dirty-paper channel. IET Commun 12(19):2454–2459

Zohdy M, Tajer A, Shamai S (2019) Broadcast approach to multiple access with local CSIT. IEEE Trans Commun 67(11):7483–7498

Pourahmadi V, Bayesteh A, Khandani AK (2012) Multilayer coding over multihop single user networks. IEEE Trans Inf Theory 58(8):5323

Keykhosravi S, Akhlaghi S (2016) Multi-layer coding strategy for multi-hop block fading channels with outage probability. Ann Telecommun 71(5-6):173–185

Baghani M, Akhlaghi S, Golzadeh V (2016) Average achievable rate of broadcast strategy in relay-assisted block fading channels. IET Commun 10(3):346–355

Cover TM, Thomas JA (2012) Elements of information theory. John Wiley and Sons, New York

Tian C, Steiner A, Shamai S, Diggavi SN (2008) Successive refinement via broadcast: Optimizing expected distortion of a Gaussian source over a Gaussian fading channel. IEEE Trans Inf Theory 54(7):2903–2918

Steinberg Y (2008) Coding and common knowledge. In: Proc 2008 information theory and applications (ITA 2008) workshop

Aguerri EI, Gunduz D (2016) Distortion exponent in MIMO fading channels with time-varying source side information. IEEE Trans Inf Theory 62(6):3597–3617

Ng TC, Gunduz D, Goldsmith AJ, Erkip E (2009) Distortion minimization in Gaussian layered broadcast coding with successive refinement. IEEE Trans Inf Theory 55(11):5074–5086

Ng TC, Tian C, Goldsmith AJ, Shamai S (2012) Minimum expected distortion in Gaussian source coding with fading side information. IEEE Trans Inf Theory 58(9):5725–5739

Mesbah W, Shaqfeh M, Alnuweiri H (2014) Jointly optimal rate and power allocation for multilayer transmission. IEEE Trans Wirel Commun 13(2):834–845

Hoseini SAK, Akhlaghi S, Baghani M (2012) The achievable distortion of relay-assisted block fading channels. IEEE Commun Lett 16(8):1280–1283

Saatlou O, Akhlaghi S, Hoseini SAK (2013) The achievable distortion of DF relaying with average power constraint at the relay. IEEE Commun Lett 17(5):960–963

Wang J, Kim YH, Cosman PC, Milstein LB (2013) Minimization of expected distortion with layer-selective relaying of two-layer superposition coding. In: 77th IEEE Vehicular technology conference (VTC Spring), pp 1–5

Khodam Hoseini SA, Akhlaghi S (2019) The impact of distribution uncertainty on the average distortion in a block fading channel. In: Iran workshop on communication and information theory (IWCIT), Tehran, Iran, pp 24–25

Gelfand I, Fomin S (1991) Calculus of variations. Dover, New York

Abramowitz M, Stegun IA (1965) Handbook of mathematical functions: with formulas, graphs, and mathematical tables. Dover, Washington D.C.

Appendix 1

1.1 Proof of Lemma 1

The last constraint of Eq. 18 results in Eq. 19. The Lagrangian function of Eq. 18 can then be formulated as follows:

where λ and ψ(.) are, respectively, the slack multiplier and an arbitrary positive slack function, which ensure that the power constraint and the monotonically non-decreasing characteristic of \(T^{\prime }(.)\) are met. Defining

and applying the variational method in [29] to Eq. 45 gives the following:

where \(\mathcal {G}_{D}(D(x))=\frac {\partial }{\partial D(x)}\mathcal {G}(D(x))\), and \(\psi ^{\prime }(x)=\frac {d}{dx}\psi (x)\). By the slackness condition corresponding to the positivity of \(T^{\prime }(.)\), wherever power is allocated to the code layers, the second constraint of Eq. 18 is met with strict inequality, so \(\psi (x)=0\), and Eq. 47 leads to:

As the above equation is derived for the case \(T^{\prime }(.)>0\), raising both sides of Eq. 48 to the power (b + 1) and differentiating with respect to x gives the requirement for this condition to hold, as follows:

Since \(\mathcal {G}(.)\) is an increasing convex function, \(\mathcal {G}_{D}\left (D(x)\right )\) is positive. In the left-most term of Eq. 49, \(x^{2}f(x)\) is non-negative because f(.) is a non-negative function, so only the term \(\frac {d}{dx}\mathcal {G}_{D}\left (D(x)\right )\) remains to be examined. Applying the chain rule leads to:

where \(\mathcal {G}_{DD}(D(x))=\frac {\partial ^{2}}{\partial D^{2}}\mathcal {G}(D(x))\). Considering the definition of D(.) in Eq. 46, Eq. 50 becomes:

Plugging the result of Eq. 51 into Eq. 49 results in Eq. 52:

Noting the convexity and the increasing properties of \(\mathcal {G}(.)\), the positivity of \(T^{\prime }(x)\) depends on:

Next, we show that any region \(x\in \mathcal {R}=[l,u]\) satisfying \(\frac {d}{dx}\left (x^{2} f(x)\right )>0\) contains at most one interval of positive power allocation. The proof is by contradiction. Assume there are two disjoint intervals \([x_{1},x_{2}]\) and \([x_{3},x_{4}]\) (\(x_{1}<x_{2}<x_{3}<x_{4}\)) in the region \(\mathcal {R}\), where \(T^{\prime }(x)=0\) for \(x\in (x_{2},x_{3})\), which leads to \(T(x_{2})=T(x_{3})\). Using Eq. 48, the latter equality leads to:

which contradicts the positivity of \(\frac {d}{dx}(x^{2}f(x))\) in \(\mathcal {R}\). Therefore, there is at most one continuous interval of positive power allocation in the region satisfying Eq. 53.
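As an illustration of the condition \(\frac {d}{dx}\left (x^{2} f(x)\right )>0\), assume unit-mean Rayleigh fading, so that the channel power gain has the PDF \(f(x)=e^{-x}\) (this choice of f(.) is an assumption made here for illustration only). Then \(\frac {d}{dx}\left (x^{2}e^{-x}\right )=x(2-x)e^{-x}\), which is positive only for \(0<x<2\). The following minimal Python sketch checks this numerically on a grid; the function names are hypothetical and not taken from the paper.

```python
import math

def f_rayleigh(x):
    """Assumed PDF of the channel power gain: unit-mean exponential."""
    return math.exp(-x)

def d_x2f(x, f=f_rayleigh, h=1e-6):
    """Central-difference estimate of d/dx [x^2 f(x)]."""
    g = lambda t: t * t * f(t)
    return (g(x + h) - g(x - h)) / (2 * h)

def positive_region(lo, hi, n=2000):
    """Return the first and last grid points in [lo, hi] where d/dx [x^2 f(x)] > 0."""
    xs = [lo + (hi - lo) * i / n for i in range(n + 1)]
    pos = [x for x in xs if d_x2f(x) > 0]
    return (pos[0], pos[-1]) if pos else None

# Scan an illustrative range of channel gains; the upper end should sit just below 2.
region = positive_region(0.01, 5.0)
print(region)
```

Under this assumed fading model, the grid search recovers a single interval ending just below x = 2, in line with the closed-form derivative above.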

1.2 Proof of the increasing property for \(\mathcal {G}(.)\) function

Here, we demonstrate that \(\mathcal {G}(.)\) is an increasing function. Inserting the derived auxiliary function of Eq. 16 into Eq. 8, we have:

Rewriting the power constraint of Eq. 9 using Eq. 16, the following expression for the integral part of Eq. 55 is derived:

Noting that \(\beta _{1}\) and \(\beta _{2}\) in Eq. 55 depend on \(D_{r}(\alpha )\), substituting Eq. 56 into Eq. 55 and taking the partial derivative of Eq. 55 yields:

According to Eq. 17, the first term in Eq. 57 is 0. The second term also simplifies to 0. Thus, Eq. 57 reduces to:

In order to characterize \(\frac {\partial \beta _{1}}{\partial D_{r}(\alpha )}\), one can rewrite the integral of Eq. 56 as \({\int \limits }_{\beta _{1}}^{\beta _{2}}\frac {1}{\beta ^{2}} \left (\beta ^{2}f_{r}(\beta )\right )^{\frac {1}{b+1}}d\beta \). Thus, differentiating Eq. 56 with respect to \(D_{r}(\alpha )\) yields:

Rearranging Eq. 59 leads to:

and simplifying Eq. 60 by the use of Eq. 17 gives:

Substituting Eq. 61 into Eq. 58 and simplifying gives:

and finally, using Eq. 17, Eq. 62 simplifies to:

For example, in the Rayleigh fading case, the necessary condition to have \(\frac {\partial \mathcal {G}\left (D_{r}(\alpha )\right )}{\partial D_{r}(\alpha )}\geq 0\) is \(\beta _{2}\leq 1\).
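The differentiation step in this appendix involves an integral whose limits \(\beta _{1}\) and \(\beta _{2}\) depend on \(D_{r}(\alpha )\). A minimal Python sketch of that mechanism (the Leibniz rule for variable limits) is given below. It assumes Rayleigh fading with \(f_{r}(\beta )=e^{-\beta }\), an illustrative value b = 1, an integrand with no explicit dependence on the differentiation variable, and hypothetical linear dependences of the limits on a scalar t standing in for \(D_{r}(\alpha )\); none of these choices are taken from the paper.

```python
import math

B = 1.0  # assumed value of b, for illustration only

def integrand(beta, b=B):
    """(1/beta^2) * (beta^2 f_r(beta))^(1/(b+1)) with assumed f_r(beta) = exp(-beta)."""
    return (beta * beta * math.exp(-beta)) ** (1.0 / (b + 1.0)) / (beta * beta)

def simpson(g, a, c, n=2000):
    """Composite Simpson rule on [a, c] with an even number n of subintervals."""
    h = (c - a) / n
    s = g(a) + g(c)
    for i in range(1, n):
        s += (4 if i % 2 else 2) * g(a + i * h)
    return s * h / 3.0

# Hypothetical dependence of the limits on t (a stand-in for D_r(alpha)).
beta1 = lambda t: 0.2 + 0.5 * t
beta2 = lambda t: 1.0 - 0.3 * t
dbeta1, dbeta2 = 0.5, -0.3  # derivatives of the two limits with respect to t

def integral(t):
    """Integral of the integrand between the t-dependent limits."""
    return simpson(integrand, beta1(t), beta2(t))

t0, h = 0.4, 1e-5
# Finite-difference derivative of the integral with respect to t.
numeric = (integral(t0 + h) - integral(t0 - h)) / (2 * h)
# Leibniz rule: integrand at the upper limit times its derivative,
# minus integrand at the lower limit times its derivative.
leibniz = integrand(beta2(t0)) * dbeta2 - integrand(beta1(t0)) * dbeta1
print(numeric, leibniz)
```

The two printed values should agree closely, which is the property the derivation relies on when it differentiates the integral with respect to \(D_{r}(\alpha )\) through its limits.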

Cite this article

Khodam Hoseini, S.A., Akhlaghi, S. & Baghani, M. Minimizing the average achievable distortion using multi-layer coding approach in two-hop networks. Ann. Telecommun. 76, 83–95 (2021). https://doi.org/10.1007/s12243-020-00812-0
