Abstract

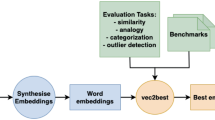

Learning an embedding for a large collection of items is a popular approach to overcoming the computational limitations associated with one-hot encodings. The aim of item embeddings is to learn a low-dimensional space for the representations whose geometry captures relevant features or relationships in the data at hand. This can be achieved, for example, by exploiting adjacencies among items in large sets of unlabelled data. In this paper, we interpret the item embeddings obtained from conditional models within an Information Geometric framework. By exploiting the \(\alpha \)-geometry of the exponential family, first introduced by Amari, we introduce a family of natural \(\alpha \)-embeddings, represented by vectors in the tangent space of the probability simplex, which includes standard approaches available in the literature as a special case. A typical example is given by word embeddings such as Word2Vec and GloVe, commonly used in natural language processing. In our analysis, we show how the \(\alpha \)-deformation parameter can affect performance on standard evaluation tasks.

Change history

15 May 2021

A Correction to this paper has been published: https://doi.org/10.1007/s41884-021-00045-7

Notes

In the following, we assume that for each word w the \(\arg \max \) is unique. When this is not the case, the formula can be easily generalized.


Acknowledgements

The authors are supported by the DeepRiemann project, co-funded by the European Regional Development Fund and the Romanian Government through the Competitiveness Operational Programme 2014–2020, Action 1.1.4, project ID P_37_714, Contract No. 136/27.09.2016.

Appendix A: GloVe training

During GloVe training, we monitor accuracy on the word analogy task and compare it with the values reported in the literature; see Table 5.
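As an illustration of what monitoring accuracy on word analogies can look like, the following is a minimal sketch that scores analogies of the form a : b = c : d with the commonly used 3CosAdd rule (the answer is the word whose vector is most cosine-similar to b − a + c, excluding the three query words). The evaluation protocol actually used for Table 5 is not described in this section, so the scoring rule, the function name `analogy_accuracy`, and the toy vocabulary and vectors below are all assumptions made for illustration.

```python
import numpy as np


def analogy_accuracy(emb, vocab, questions):
    """Fraction of analogies a:b = c:d answered correctly with 3CosAdd.

    emb       -- array of shape (len(vocab), dim), one row per word
    vocab     -- list of words, aligned with the rows of emb
    questions -- iterable of (a, b, c, d) word tuples
    """
    index = {w: i for i, w in enumerate(vocab)}
    # Normalise rows so that dot products against them act as cosine scores.
    unit = emb / np.linalg.norm(emb, axis=1, keepdims=True)
    correct = 0
    total = 0
    for a, b, c, d in questions:
        ia, ib, ic = index[a], index[b], index[c]
        scores = unit @ (unit[ib] - unit[ia] + unit[ic])
        # The three query words are not allowed as answers.
        scores[[ia, ib, ic]] = -np.inf
        predicted = vocab[int(np.argmax(scores))]
        correct += int(predicted == d)
        total += 1
    return correct / total


# Hypothetical toy embeddings, chosen by hand for this example only.
vocab = ["king", "queen", "man", "woman", "apple"]
emb = np.array([
    [1.0, 1.0],
    [1.0, -1.0],
    [0.2, 1.0],
    [0.2, -1.0],
    [-1.0, 0.1],
])
questions = [("man", "woman", "king", "queen")]
acc = analogy_accuracy(emb, vocab, questions)
```

In practice such a function would be called periodically on a checkpoint of the embedding matrix during training, with the questions read from a standard analogy benchmark rather than written by hand.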

About this article

Cite this article

Volpi, R., Malagò, L. Natural alpha embeddings. Info. Geo. 4, 3–29 (2021). https://doi.org/10.1007/s41884-021-00043-9

DOI: https://doi.org/10.1007/s41884-021-00043-9