Abstract

The ephemeral reward task consists of giving an animal a choice between two distinctive stimuli, A and B (e.g., black and white), on each of which is placed a bit of food. If the animal chooses the food on A, it gets that reinforcer, but the other stimulus, B, is removed, and the trial is over. If it chooses the food on B, however, it gets that food and the stimulus A remains, so it can have that food as well. Thus, choice of stimulus B gives the animal two reinforcers rather than one. Wrasse (cleaner fish) easily learn to choose optimally, whereas surprisingly, most non-human primates do not. Parrots, however, appear to learn this task as easily as the fish. To test the hypothesis that animals that choose with their mouth can learn it, we tested pigeons and found that they show no evidence of optimal learning with this task (with either the manual presentation of the stimuli or the operant presentation of the stimuli). Similarly, rats show no evidence of optimal learning. However, if a 20-s delay (fixed-interval schedule) is inserted between stimulus choice and reinforcement, both pigeons and rats learn to perform optimally. The ephemeral reward task appears to promote impulsive choice in several species, but with this task (and others), inserting a delay between choice and reinforcement promotes more careful choice, leading to optimal performance.

Introduction

Irene Pepperberg’s research with parrots has demonstrated their remarkable conceptual ability (see, e.g., Pepperberg, 1999). Because we work primarily with pigeons, it is rare that the target of our research overlaps with hers. There is one line of research that she conducted with parrots, however, that encouraged us to pursue similar research with pigeons. It involved what appeared to be a relatively simple task that has come to be known as the ephemeral reward task (Pepperberg & Hartsfield, 2014). This task was first studied in wrasse (cleaner fish), which live on warm-water reefs and have a symbiotic relationship with larger fish, cleaning their mouths of mucus and parasites (Bshary & Grutter, 2002).

The task consists of giving animals a choice between two distinctive stimuli (e.g., a black disk and a white disk), on each of which is placed an identical reinforcer. Although the reinforcers are the same, the contingencies associated with each are different. If, for example, the animal chooses the reinforcer on the black disk, it gets that reinforcer, but the white disk is removed, and the trial is over. If it chooses the reinforcer on the white disk, however, it gets that reinforcer and the black disk remains, so it can also have the reinforcer on the black disk. With this arrangement, the optimal solution is for the subject always to choose the reinforcer on the white disk because then it gets two reinforcers per trial, whereas if it chooses the reinforcer on the black disk it gets only one (see Zentall, 2019, for a review of the findings with this procedure).
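The contingencies just described can be sketched as a small payoff function. This is an illustrative sketch only: the function names and the choice probability `p_optimal` are assumptions introduced here and are not part of any of the procedures reviewed.

```python
def reinforcers_per_trial(choice: str) -> int:
    """Return the number of reinforcers obtained on one trial.

    'optimal' is the stimulus whose choice leaves the other stimulus
    available; 'suboptimal' is the stimulus whose choice ends the trial.
    """
    if choice == "optimal":
        return 2  # the chosen reinforcer plus the one that remains
    if choice == "suboptimal":
        return 1  # the other stimulus is removed and the trial is over
    raise ValueError(f"unknown choice: {choice!r}")


def expected_reinforcers(p_optimal: float) -> float:
    """Mean reinforcers per trial when the optimal stimulus is chosen
    with probability p_optimal (a hypothetical parameter)."""
    return p_optimal * 2 + (1 - p_optimal) * 1


for p in (0.0, 0.5, 1.0):
    print(p, expected_reinforcers(p))
```

Under these assumptions, an animal choosing at random earns 1.5 reinforcers per trial, midway between the 1 earned by always choosing suboptimally and the 2 earned by always choosing optimally.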

Salwiczek et al. (2012) trained wrasse on this task and found that adult wrasse acquired it readily (within 100 trials), whereas juvenile wrasse generally did not learn to choose optimally. This difference suggests that experience or maturation may be involved. Surprisingly, however, when primates were trained on this task, capuchin monkeys were no better than the juvenile wrasse, nor were orangutans, and only two of four chimpanzees were able to learn as quickly as the adult wrasse (Salwiczek et al., 2012).

One suggestion by Salwiczek et al. (2012) was that wrasse are more sensitive to the removal of the second reinforcer (punishment or omission) when they chose suboptimally. This may be because in their natural environment they often have a choice between an ephemeral visitor to the reef and a more permanent resident, and they have learned to service the visitor first before it swims away. This hypothesis is supported by the fact that juveniles do not learn this task as easily as adult wrasse. Furthermore, unlike the wrasse, primates have not had to learn that some distinctive reinforcers may disappear if not chosen quickly.

Pepperberg and Hartsfield (2014) reasoned that parrots have evolved and live in an environment similar to that of primates. Furthermore, they also live on fruit and nuts, similar to what primates eat and, thus, they likely have not had the kind of experience with loss of reinforcement that adult wrasse have had. Thus, according to Salwiczek et al. (2012), they should not be able to easily learn the ephemeral reward task. Yet, Pepperberg and Hartsfield found that parrots learned the ephemeral reward task as readily as the adult wrasse.

Cross-species comparisons are notoriously difficult to make for a variety of reasons, including differences in sensory ability, the nature of the required response, and differences in motivation. Furthermore, Pepperberg and Hartsfield (2014) note that it may be difficult to transfer an ecologically based task from the field to the laboratory in a way that tests the underlying mechanism in question.

What then accounts for the similarity of wrasse and parrots and their difference from non-human primates? Pepperberg and Hartsfield suggest that fish and parrots eat by putting food in their mouths directly and grasp multiple foods sequentially. Non-human primates, however, can collect food simultaneously with both hands. In the ephemeral reward task, the inability to acquire food at both locations simultaneously may have led to frustration for the primates, thus interfering with task acquisition.

According to Pepperberg and Hartsfield (2014), differences in the size of the species in question may also play a role in their rate of task learning. Small animals like wrasse and parrots likely have higher metabolism than larger animals like primates. Thus, the energetic cost of making a wrong choice (half as much food) may be greater for smaller animals.

The differences in findings between wrasse and parrots on the one hand and primates on the other hand led us to ask whether pigeons would be more like parrots or like non-human primates in their performance of the ephemeral reward task (Zentall, Case, & Luong, 2016).

Pigeons’ performance of the ephemeral reward task

In our first experiment, we trained pigeons with a procedure very similar to that used with the other species (Zentall et al., 2016, Experiment 1). A diagram of the apparatus appears in Fig. 1. The color associated with optimal choice was counterbalanced over subjects and the position of the colors was counterbalanced over trials. Choice of the blue disc, for example, resulted in reinforcement (a single dried pea), but the trial was over, and the apparatus was withdrawn. Choice of the yellow disc resulted in reinforcement, but the apparatus was not withdrawn, and the subject could obtain the reinforcement on the blue disc. Thus, choice of the blue disc resulted in one pea, while choice of the yellow disc resulted in two peas.

Fig. 1 A diagram of the apparatus used in Zentall, Case, and Luong (2016, Experiment 1). One disc was blue, the other yellow. There was a dried pea on each disc. See text for contingencies

Surprisingly, not only did the pigeons fail to learn to choose the color that provided them with two peas, but they showed a significant preference for the color that provided them with only one pea. In our second experiment (Zentall et al., 2016, Experiment 2), we asked if we could obtain the same result with an operant version of this task (see Fig. 2). A new set of pigeons was trained on a blue-yellow discrimination task presented on the left and right response keys of the operant panel. In this experiment, a peck to one color provided mixed-grain reinforcement and the trial was over. A peck to the other color provided the same reinforcement, but the first color remained on the other response key and a peck to that color provided a second reinforcement. In spite of the differences in the procedures, the results were very similar. Once again, the pigeons showed a significant preference for the color that provided them with a single reinforcement on each trial.

Fig. 2 Apparatus used in Zentall, Case, and Luong (2016, Experiment 2)

To explain the paradoxical preference for the suboptimal alternative, Zentall et al. (2016) considered the frequency of reinforcement associated with each of the alternatives on the first few trials of training. If on initial trials the pigeon chose between the two alternatives randomly, it would have received twice as many reinforcements associated with the suboptimal alternative as with the optimal alternative. More specifically, choice of the optimal alternative would have resulted in one reinforcement associated with the optimal alternative and one reinforcement associated with the suboptimal alternative, whereas choice of the suboptimal alternative would have ended the trial with reinforcement associated with the suboptimal alternative. Furthermore, all trials would have ended with reinforcement associated with the suboptimal alternative. For these reasons, this task may have produced a bias for the suboptimal alternative.
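The reinforcement-frequency argument can be checked with a few lines of arithmetic. The sketch below is illustrative only; the function name and the choice probability `p_optimal` are assumptions introduced here, and random choice is modeled as a probability of 1/2.

```python
from fractions import Fraction


def reinforcers_by_stimulus(p_optimal: Fraction) -> dict:
    """Expected reinforcers per trial associated with each stimulus
    in the standard task, given the probability of an optimal choice."""
    p = Fraction(p_optimal)
    return {
        # The optimal stimulus is reinforced only when it is chosen first.
        "optimal": p,
        # The suboptimal stimulus is reinforced on every trial: second
        # after an optimal choice (p), first and only otherwise (1 - p).
        "suboptimal": p * 1 + (1 - p) * 1,
    }


counts = reinforcers_by_stimulus(Fraction(1, 2))
ratio = counts["suboptimal"] / counts["optimal"]
print(counts, ratio)
```

With random choice the suboptimal stimulus is associated with one reinforcer on every trial and the optimal stimulus with one reinforcer on half of the trials, which gives the 2:1 ratio described above.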

To test this hypothesis for the preference for the suboptimal alternative, in Zentall et al. (2016), Experiment 3, we included a group for which choice of the optimal alternative resulted in two reinforcements, but after the first reinforcement for choice of the optimal alternative, we arranged to have the color of the suboptimal alternative change from yellow to red. A peck to red provided the second reinforcer. Thus, for this group, initial random choice resulted in equal reinforcement associated with yellow, blue, and red. We found that the pigeons in this group performed the ephemeral reward task significantly better than the pigeons in the control group (a replication of the standard ephemeral reward task, as in Experiment 2), but even after 400 trials of training, they did not perform significantly better than chance. Thus, even after the removal of the bias to choose suboptimally, the pigeons performed no better than the non-human primates. Therefore, it appears that when the pigeons chose the optimal alternative, they failed to associate the second reinforcer with their initial choice.
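The equalization produced by the color change in Experiment 3 can be sketched in the same way. This is an illustrative calculation, not the authors' analysis: it assumes the color assignment used in the example in the text (blue optimal, yellow suboptimal, red replacing yellow after an optimal choice), and the function name and `p_optimal` are hypothetical.

```python
from fractions import Fraction


def reinforcers_by_color(p_optimal: Fraction) -> dict:
    """Expected reinforcers per trial associated with each color for the
    group in which the remaining key changes to red."""
    p = Fraction(p_optimal)
    return {
        "blue": p,        # first reinforcer after an optimal choice
        "red": p,         # second reinforcer, delivered after the color change
        "yellow": 1 - p,  # only reinforcer after a suboptimal choice
    }


by_color = reinforcers_by_color(Fraction(1, 2))
print(by_color)
```

With random initial choice, each of the three colors is associated with one reinforcer on half of the trials, so no color has a reinforcement-frequency advantage.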

Rats’ performance of the ephemeral reward task

The ephemeral reward task, although a relatively simple task, is one that has not received very much attention in research with animals. For this reason, we wondered how rats, another species often studied by psychologists, would do with this task (Zentall, Case, & Berry, 2017b). For reasons already mentioned, cross-species comparisons are difficult to make, but we wanted to test the generality of the conclusion that many species find this task much more difficult than it would appear to be. In this experiment, we trained rats much like the pigeons in Experiment 2 of Zentall et al. (2016). In an operant box with two retractable levers, choice of one lever resulted in a pellet of food and the trial was over. Choice of the other lever also resulted in a pellet of food, but the first lever remained accessible and pressing it produced a second pellet. For half of the rats, the optimal lever was signaled by a light immediately above the lever. For the remaining rats, the light signaled the suboptimal lever.

After over 400 trials of training, although the rats did not show a preference for the suboptimal alternative, they also did not show significant evidence of learning to choose the optimal alternative. Thus, for rats as well, the ephemeral reward task appears to be difficult to acquire.

What does it take for pigeons and rats to learn the ephemeral reward task?

The ephemeral reward task bears some resemblance to a delay-discounting task. In a delay-discounting task, an animal is given a choice between a small amount of food after a short delay or a larger amount of food after a longer delay. Different species have been found to choose either the smaller sooner or the larger later, depending on the difference in the amount of food associated with the two alternatives and the difference or ratio of the two delays (see, e.g., Odum, 2011). The slope of the function relating the ability of the organism to delay the larger reinforcer has been taken as a measure of impulsivity, or the absence of self-control. In judging the behavior of humans in modern society, we tend to view impulsivity as a negative trait; however, in many competitive contexts, rewards may go to the more impulsive individual. Hence, “he who hesitates is lost.”

In a classic paper on delay discounting by pigeons, Rachlin and Green (1972) found that pigeons showed a strong preference for a small immediate reward (2-s access to grain) over a larger delayed reward (4-s access to grain after a delay of 4 s). However, they also found that if, 10 s before this choice, the pigeons were offered the option of removing the later choice, so that only the larger delayed reward would be available, the pigeons preferred that option. This preference is surprising because, whatever their initial choice, the pigeons always had the option of choosing the larger delayed reward. By choosing not to face the later choice, one might say that they were avoiding the “temptation” of later choosing the suboptimal smaller, more immediate alternative.

Rachlin and Green (1972) viewed the added delay as forcing the pigeon to make a “prior commitment.” One could also view the added delay, however, as altering the ratio of the larger delay to the smaller delay. In the example above, the added 10-s delay would make it 10 s for the smaller sooner and 14 s for the larger later, a ratio closer to 1.0 than when the smaller sooner was immediate and the larger later was 4.0 s.
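The effect of the added delay can be illustrated numerically. The sketch below uses the amounts and delays from the example above. The hyperbolic discounting function, value = amount / (1 + k × delay), is a commonly used model of delay discounting, but it is an assumption here, as is the arbitrary value k = 1.0; neither is specified in the text, and the function names are hypothetical.

```python
def hyperbolic_value(amount: float, delay: float, k: float = 1.0) -> float:
    """Discounted value under an assumed hyperbolic model."""
    return amount / (1.0 + k * delay)


SMALL = (2.0, 0.0)  # 2-s access to grain, immediate
LARGE = (4.0, 4.0)  # 4-s access to grain after a 4-s delay


def preferred(added_delay: float, k: float = 1.0) -> str:
    """Return which alternative has the higher discounted value when
    added_delay seconds precede both outcomes."""
    v_small = hyperbolic_value(SMALL[0], SMALL[1] + added_delay, k)
    v_large = hyperbolic_value(LARGE[0], LARGE[1] + added_delay, k)
    return "small" if v_small > v_large else "large"


# Ratio of the two delays once 10 s is added to both: 14 s / 10 s.
delay_ratio = (LARGE[1] + 10.0) / (SMALL[1] + 10.0)

print(preferred(0.0))   # choice made at the moment the small reward is available
print(preferred(10.0))  # choice made 10 s earlier
print(delay_ratio)
```

Under these assumptions the smaller reward has the higher value when it is immediate, whereas the larger reward has the higher value when both outcomes are pushed 10 s into the future, consistent with the observation that the ratio of the delays moves from undefined (4 s versus 0 s) toward 1.0 (14 s versus 10 s).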

How this research on “prior commitment” applies to the ephemeral reward task needs to be more fully explained. Given the difficulty of the ephemeral reward task, one must assume that when an animal chooses the optimal alternative, it does not associate the second reinforcement with that choice. This is assumed in spite of the fact that when the optimal alternative is chosen, the delay between the first and second reinforcement is generally very short. In the case of the manual presentation of the choice in Zentall et al. (2016, Experiment 1), the delay between the two reinforcements was about 1 s. The delay was very short because the pigeons had learned that on many trials (whenever they chose suboptimally) the second reinforcer is quickly removed, so when it remained they took it quickly. In the case of the operant version of the task, the delay to the second reinforcement was somewhat longer, but it was rarely longer than 2 s. Nevertheless, when the animal chose the optimal alternative, even though the delay between the first and second reinforcers was relatively short, it appears that the initial choice and the second reinforcement were not readily associated.

Using an argument similar to that used to explain the Rachlin and Green (1972) results, we wondered if we could increase the chance that when pigeons chose optimally they would associate the second reinforcement with their choice, if, after they made their initial choice, we forced them to wait for several seconds before obtaining the first reinforcement (Zentall, Case, & Berry, 2017a). The operant procedure that we used was to start each trial with a ready signal that the pigeons had to peck in order to receive a choice between the optimal and suboptimal stimuli. Once the pigeon made its choice, the other key was turned off, and a fixed-interval 20-s schedule began (the first peck after 20 s resulted in reinforcement). Following reinforcement, if the optimal alternative had been chosen, the other key was turned back on and a single peck to it provided the second reinforcement.
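The fixed-interval contingency described above (the first peck after 20 s is reinforced; earlier pecks have no effect) can be sketched as follows. This is a minimal illustration, not the software used in the experiment: the function name and the representation of pecks as a list of times measured from the choice response are assumptions, and a peck at exactly 20 s is treated as satisfying the schedule.

```python
from typing import Optional, Sequence


def fixed_interval_reinforcement_time(
    peck_times: Sequence[float], interval: float = 20.0
) -> Optional[float]:
    """Return the time of the first peck made at or after `interval`
    seconds, or None if no peck satisfies the schedule."""
    for t in sorted(peck_times):
        if t >= interval:
            return t  # this peck produces the first reinforcement
    return None  # pecks before the interval elapses are not reinforced


print(fixed_interval_reinforcement_time([1.0, 5.5, 19.9, 20.4, 21.0]))
print(fixed_interval_reinforcement_time([3.0, 10.0]))
```

In the first call the pecks at 1.0, 5.5, and 19.9 s go unreinforced and the peck at 20.4 s is reinforced; in the second call the schedule is never completed.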

For pigeons in the control group, a single peck to the chosen stimulus resulted in reinforcement and, if the optimal alternative had been chosen, a single peck to the remaining stimulus provided the second reinforcement. To control for the considerably longer duration of trials for pigeons in the experimental group, for the control group the initial peck to the ready signal started a fixed-interval 20-s schedule prior to the presentation of the choice between the discriminative stimuli.

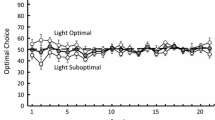

The results of that experiment appear in Fig. 3. Once again, as in the Zentall et al. (2016) experiments, the control group showed a bias to choose the suboptimal alternative; however, in this experiment the control group showed some improvement in choice of the optimal alternative with continued training. Still, after 40 sessions of training, the pigeons failed to surpass 50% choice of the optimal alternative. The experimental group, however, showed systematic improvement in their choice of the optimal alternative. Although, just like the control group, the experimental group started out well below chance, by Session 7 they had reached an average of 50% choice of the optimal alternative, and by the end of training they had reached 90% choice of the optimal alternative. Thus, forcing the pigeons to wait 20 s before obtaining their first reinforcement got them to choose optimally. At a more theoretical level, it may be that under the normal (control) conditions, even though the delay between the first and the second reinforcement was relatively short (perhaps 2 s), it was long enough that the ratio between the delay to the second reinforcement and the delay (perhaps 0.5 s) to the first reinforcement, about 4:1, was different enough to prevent the association between the pigeon’s choice and the second reinforcement. With the 20-s delay between the initial choice and the first reinforcement, the corresponding ratio between the delay to the second reinforcement and the first reinforcement (now about 24:20.5) was much closer to 1.0. Thus, the difference between one and two reinforcements could control the choice response.

Fig. 3 Pigeons’ performance on the ephemeral reward task in which the first reinforcement came with a single peck (FR1 Choice) or after completion of a fixed-interval 20-s schedule (FI20 s Choice). After Zentall, Case, and Berry (2017a). Error bars show ± one standard error of the mean

Given that inserting a delay between choice and the first reinforcer facilitated choice of the optimal alternative by pigeons, Zentall et al. (2017b, Experiment 2) asked whether introducing a similar delay between choice and the first reinforcer for rats would have a similar effect. The results of that experiment are presented in Fig. 4. Also presented in Fig. 4 are the results of the first experiment, already described, in which initial choice required a single response. As can be seen in the figure, the rats benefitted from the delay between choice and the first reinforcement similarly to the pigeons.

Fig. 4 Rats’ performance on the ephemeral reward task in which the first reinforcement came with a single lever press (FR1 Choice, open circles) or after completion of a fixed-interval 20-s schedule (FI20 s Choice, filled circles). After Zentall, Case, and Berry (2017b). Results of the fixed-interval 20-s schedule with pigeons are presented for comparison (Zentall, Case, & Berry, 2017a, open squares). Error bars show ± one standard error of the mean

Introducing a delay between choice and reinforcement facilitates performance of the ephemeral reward task for both pigeons and rats. Although the mechanism by which it does this is not clear, it appears that it functions to reduce impulsive choice. When reinforcement is visible (in the manual presentation of the stimuli), both alternatives appear to present the same reinforcer, so the difference in the outcome of the choice is not obvious. Delaying the reinforcement may cause animals to make their choice more carefully because the added delay in making their choice may be relatively small, compared with the delay imposed by the procedure. If reinforcement is immediate, however, the added delay required to make a careful choice would be proportionately longer.

The generality of inserting a delay between choice and reinforcement

The ephemeral reward task is not the only task in which introducing a delay between presentation of the stimuli and reinforcement can facilitate performance. Zentall and Raley (2019) tested pigeons on a form of object permanence. They presented a pigeon with two cups and, in front of the pigeon, they placed a small amount of grain in one of them. Then, they allowed the pigeon to choose between the cups. Surprisingly, they found that the pigeons in this experiment initially chose at chance, and even after considerable training, they showed remarkably poor performance. Noticing that the pigeons became quite active when the grain was placed in the cup, the researchers imposed a delay between baiting the cup and giving the pigeons a choice between the two cups. In spite of the fact that the delay introduced the possibility of forgetting which cup was baited, the pigeons now performed the task quite well, and they went on to show that they could perform an invisible displacement when, after baiting, the cups were rotated, either 90° or even 180°.

A second task in which pigeons have benefitted from introducing a delay between stimulus presentation and reinforcement is a gambling-like task in which pigeons are given a choice between a stimulus that provides a signal for reinforcement 50% of the time, or a signal for the absence of reinforcement 50% of the time, and another stimulus that provides a signal for reinforcement 100% of the time. Surprisingly, with this procedure, pigeons are either indifferent between the two choice alternatives (Smith & Zentall, 2016) or they actually show a preference for the alternative that predicts 50% reinforcement (see also McDevitt, Spetch, & Dunn, 1997).

In a follow-up study, Zentall, Andrews, and Case (2017) asked whether inserting a delay between the choice response and the appearance of the signal for reinforcement (or its absence) would affect choice between the two alternatives. Zentall et al. found that when the signal that followed their choice was delayed by 20 s, the pigeons chose the optimal alternative significantly more often. Thus, once again, the addition of a delay to reinforcement, or in this case, to a signal for reinforcement (a conditioned reinforcer) resulted in fewer suboptimal choices by the pigeons.

Results show that given enough training, both pigeons and rats can acquire the ephemeral reward task when a delay is inserted between choice and reinforcement. It may be that non-human primates would also be able to acquire this task if a short delay were inserted between choice and reinforcement.

Indirect evidence that primates would benefit from such a procedure comes from research on chimpanzees trained on a reverse contingency task (Boysen, Berntson, Hannan, & Cacioppo, 1996). In this task, the chimpanzee is presented with a choice between two magnitudes of reinforcement, but the chimpanzee must point to the smaller magnitude of reinforcement to get the larger amount. This task turns out to be very difficult for the chimpanzee to perform with any consistency. When the chimpanzee is given the same problem, however, but the immediacy of reinforcement is removed by giving it a choice between two numeric symbols signaling each of the magnitudes of reinforcement, accuracy in the reverse contingency task has been found to greatly improve. Removing the sight of the actual reinforcer by requiring the chimpanzee to choose between two conditioned reinforcers allowed the chimpanzee to learn to perform this task.

Why do some species learn to choose optimally without the added delay?

The fact that under the right conditions, species like pigeons and rats, and perhaps even primates, can learn to perform the ephemeral reward task optimally does not explain why wrasse and parrots can do so without such a delay. What is it about these two species that allows them to do so well with this task?

In the case of wrasse, in their natural environment, they must learn to approach sources of reinforcement carefully. If a fish earns its keep by swimming into the mouth of a large predator, it cannot be too impulsive. One might imagine that some sort of communication between the two species must take place to avoid the inadvertent swallowing of the wrasse by the client fish. Could it be that the care in approaching and entering the mouth of the client fish serves the same purpose as the delay does for pigeons and rats?

But what about the parrots? They certainly do not need to learn to be careful in making choices between alternatives. Although it is possible that they are not a very impulsive species, the three parrots that were used in the Pepperberg and Hartsfield (2014) experiment had had extensive prior training. One parrot had been exposed to “continuing studies on comparative cognition and interspecies communication,” whereas the other two had received considerable referential communication training. It is possible that this training had the effect of reducing their natural impulsivity. In support of the hypothesis that these parrots had developed considerable impulse control, one of the parrots was found to show a relatively flat delay-discounting function when given a choice between an immediate desirable reward and a delayed (by as much as 15 min) more desirable reward (Koepke, Gray, & Pepperberg, 2015). It would be interesting to know if less well trained (experimentally naïve) parrots would also show wrasse-like learning of the ephemeral reward task.

The results of recent research by Prétôt, Bshary, and Brosnan (2016a, b) are generally consistent with the impulsivity account of the failure to learn the optimal choice with the ephemeral reward task. In Prétôt et al. (2016a, Experiment 1), monkeys that did not acquire the optimal response in the original version of the task were exposed to a computer version of the task in which they had to move a cursor to the chosen stimulus. This modification in the task appeared to facilitate choice of the optimal alternative. The use of a computerized version of the task changes it in many ways, but perhaps the most important difference is the reduced contiguity between choice and reward: in the original version of the task, subjects reached for the reward directly. Thus, according to the impulsivity hypothesis, one would expect reduced impulsivity with the computerized version. In a follow-up experiment (Prétôt et al., 2016a, Experiment 2), the researchers added movement to the ephemeral (optimal) alternative on the computer screen and found that this too facilitated choice of the optimal alternative. In this case, in addition to the separation of choice and reward inherent in the computerized version of the task, the movement of the optimal alternative would have drawn particular attention to that stimulus, thus increasing the chances that it would be selected.

In Prétôt et al. (2016b, Experiment 3), the researchers used the original (manual) version of the task but hid the food under a distinctive cup that the monkeys had to lift or point to. This modification also facilitated choice of the optimal alternative. As with the computerized version of the task, this procedure separated the choice response from the reward itself, thus likely reducing impulsivity. Another procedure used with both the monkeys and the fish (Prétôt et al., 2016b, Experiment 2) was to color the ephemeral and permanent food distinctively (pink or black). This, too, facilitated acquisition of the optimal alternative. Why distinctive coloring would facilitate acquisition is not obvious, but the unusual color of the food may have reduced the monkeys’ tendency to choose impulsively. Overall, the results of recent research with primates that did not originally easily acquire the optimal choice response with this task are quite consistent with the hypothesis that any change in procedure that reduces the tendency of the subjects to respond impulsively may facilitate acquisition of the optimal response.

The ephemeral reward task is a relatively simple task that has proven to be an unexpected challenge for several species. Understanding why that is, and what procedure can facilitate performance by those species, has provided us with a better understanding of factors involved, not only in the ephemeral reward task, but also, more generally, in tasks that involve the acquisition of simultaneous discriminations.

Open Practices Statements

The data and materials for all experiments are available from the author. None of the experiments was preregistered.

References

Boysen, S. T., Berntson, G. G., Hannan, M. B., & Cacioppo, J. T. (1996). Quantity-based interference and symbolic representations in chimpanzees (Pan troglodytes). Journal of Experimental Psychology: Animal Behavior Processes, 22, 76–86.

Bshary, R., & Grutter, A. S. (2002). Experimental evidence that partner choice is a driving force in the payoff distribution among cooperators or mutualists: the cleaner fish case. Ecology Letters, 5, 130–136.

Koepke, A. E., Gray, S. L., & Pepperberg, I. M. (2015). Delayed gratification: A grey parrot (Psittacus erithacus) will wait for a better reward. Journal of Comparative Psychology, 129(4), 339–346.

McDevitt, M. A., Spetch, M. L., & Dunn, R. (1997). Contiguity and conditioned reinforcement in probabilistic choice. Journal of the Experimental Analysis of Behavior, 68, 317–327.

Odum, A. L. (2011). Delay discounting: I’m a k, you’re a k. Journal of the Experimental Analysis of Behavior, 96, 427–439.

Pepperberg, I. M., & Hartsfield, L. A. (2014). Can Grey parrots (Psittacus erithacus) succeed on a “complex” foraging task failed by nonhuman primates (Pan troglodytes, Pongo abelii, Sapajus apella) but solved by wrasse fish (Labroides dimidiatus)? Journal of Comparative Psychology, 128, 298–306.

Pepperberg, I. M. (1999). The Alex studies. Cambridge, MA: Harvard University Press.

Prétôt, L., Bshary, R., & Brosnan, S. F. (2016a). Comparing species decisions in a dichotomous choice task: Adjusting task parameters improves performance in monkeys. Animal Cognition, 19, 819–834.

Prétôt, L., Bshary, R., & Brosnan, S. F. (2016b). Factors influencing the different performance of fish and primates on a dichotomous choice task. Animal Behaviour, 119, 189–199.

Rachlin, H., & Green, L. (1972). Commitment, choice and self-control. Journal of the Experimental Analysis of Behavior, 17, 15–22.

Salwiczek, L. H., Prétôt, L., Demarta, L., Proctor, D., Essler, J., Pinto, A. I., Wismer, S., Stoinski, T., Brosnan, S. F., & Bshary, R. (2012). Adult cleaner wrasse outperform capuchin monkeys, chimpanzees, and orang-utans in a complex foraging task derived from cleaner-client reef fish cooperation. PLoS One, 7, e49068. https://doi.org/10.1371/journal.pone.0049068

Smith, A. P., & Zentall, T. R. (2016). Suboptimal choice in pigeons: Choice is based primarily on the value of the conditioned reinforcer rather than overall reinforcement rate. Journal of Experimental Psychology: Animal Behavior Processes, 42, 212–220.

Zentall, T. R. (2019). What suboptimal choice tells us about the control of behavior. Comparative Cognition & Behavior Reviews, 14, 1–18.

Zentall, T. R., Andrews, D. M., & Case, J. P. (2017). Prior commitment: Its effect on suboptimal choice in a gambling-like task. Behavioural Processes, 145, 1–9.

Zentall, T. R., Case, J. P., & Berry, J. R. (2017a). Early commitment facilitates optimal choice by pigeons. Psychonomic Bulletin & Review, 24(3), 957–963.

Zentall, T. R., Case, J. P., & Berry, J. R. (2017b). Rats’ acquisition of the ephemeral reward task. Animal Cognition, 20, 419–425.

Zentall, T. R., Case, J. P., & Luong, J. (2016). Pigeon’s paradoxical preference for the suboptimal alternative in a complex foraging task. Journal of Comparative Psychology, 130, 138–144.

Zentall, T. R., & Raley, O. L. (2019). Object permanence in the pigeon: Insertion of a delay prior to choice facilitates visible- and invisible-displacement accuracy. Journal of Comparative Psychology, 133, 132–139.

Cite this article

Zentall, T.R. The paradoxical performance by different species on the ephemeral reward task. Learn Behav 49, 99–105 (2021). https://doi.org/10.3758/s13420-020-00429-2

DOI: https://doi.org/10.3758/s13420-020-00429-2