Abstract

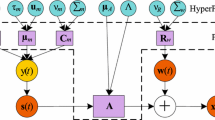

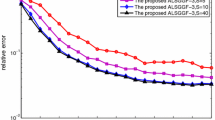

This paper presents a novel algorithm, named GMM-PARAFAC, for blind identification of underdetermined instantaneous linear mixtures. GMM-PARAFAC uses a Gaussian mixture model (GMM) to model the non-Gaussianity of the independent sources. We show that the distribution of the observations can also be modeled by a GMM, and we derive a maximum-likelihood function with respect to the mixing matrix by estimating the GMM parameters of the observations via the expectation-maximization algorithm. To reduce the computational complexity, the mixing matrix is estimated by maximizing a tight upper bound of the likelihood instead of the log-likelihood itself. The maximum of this bound is obtained by decomposing a three-way tensor formed by stacking the covariance matrices of the GMM of the observations. Simulation results validate the superiority of the GMM-PARAFAC algorithm.
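The tensor step can be illustrated with a minimal NumPy sketch. It assumes the noise-free covariance model \(\mathbf{R}_{m} = \mathbf{AC}_{m}\mathbf{A}^{\operatorname{T}}\) with diagonal \(\mathbf{C}_{m}\); the diagonal form, the dimensions `Q`, `N`, `M`, and all variable names are assumptions made for illustration. The sketch only checks that the stacked covariances have a rank-N PARAFAC structure. It does not implement GMM-PARAFAC itself.

```python
import numpy as np

rng = np.random.default_rng(0)
Q, N, M = 3, 4, 6   # sensors, sources (N > Q: underdetermined), GMM components

A = rng.standard_normal((Q, N))          # hypothetical mixing matrix
D = rng.uniform(0.5, 2.0, size=(M, N))   # row m holds the assumed diagonal of C_m

# Stack the M covariance matrices R_m = A C_m A^T along the third mode.
R = np.stack([A @ np.diag(D[m]) @ A.T for m in range(M)], axis=2)  # shape (Q, Q, M)

# PARAFAC (CP) form: R[i, j, m] = sum_n A[i, n] * A[j, n] * D[m, n]
R_cp = np.einsum("in,jn,mn->ijm", A, A, D)

print(R.shape, np.allclose(R, R_cp))
```

Under these assumptions the first two factor matrices of the decomposition both equal the mixing matrix, which is why a PARAFAC fit of the stacked covariances can recover it up to the usual scaling and permutation ambiguities.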

References

A. Belouchrani, K. Abed-Meraim, J.F. Cardoso, E. Moulines, A blind source separation technique using second-order statistics. IEEE Trans. Signal Process. 45(2), 434–444 (1997)

J. Bilmes, A gentle tutorial on the EM algorithm and its application to parameter estimation for Gaussian mixture and hidden Markov models, Tech. Rep. ICSI-TR-97-021, University of Berkeley, 1997, http://www.Citeseer.nj.nec.com/blimes98gentle.html

C.F. Caiafa, A.N. Proto, Separation of statistically dependent sources using an \(L^{2}\)-distance non-Gaussianity measure. Signal Process. 86(11), 3404–3420 (2006)

Y. Chen, D. Han, L. Qi, New ALS methods with extrapolating search directions and optimal step size for complex-valued tensor decompositions. IEEE Trans. Signal Process. 59(12), 5888–5898 (2011)

A.P. Dempster, N.M. Laird, D.B. Rubin, Maximum likelihood from incomplete data via the EM algorithm. J. R. Stat. Soc., Ser. B, Methodol. 39(1), 1–38 (1977)

A. Ferréol, L. Albera, P. Chevalier, Fourth-order blind identification of underdetermined mixtures of sources (FOBIUM). IEEE Trans. Signal Process. 53(5), 1640–1653 (2005)

F. Gu, H. Zhang, W. Wang, D. Zhu, Generalized generating function with Tucker decomposition and alternating least squares for underdetermined blind identification. EURASIP J. Adv. Signal Process. 2013, 124 (2013). doi:10.1186/1687-6180-2013-124

F. Gu, H. Zhang, W. Wang, An expectation-maximization algorithm for blind separation of noisy mixtures using Gaussian mixture model, IEEE Trans. Signal Process. (revised)

A. Hyvärinen, Fast and robust fixed-point algorithms for independent component analysis. IEEE Trans. Neural Netw. 10(3), 1697–1710 (1999)

A. Karfoul, L. Albera, G. Birot, Blind underdetermined mixture identification by joint canonical decomposition of HO cumulants. IEEE Trans. Signal Process. 58(2), 638–649 (2010)

T.G. Kolda, B.W. Bader, Tensor decompositions and applications. SIAM Rev. 51(3), 455–500 (2009)

S. Kullback, R.A. Leibler, On information and sufficiency. Ann. Math. Stat. 22(1), 79–86 (1951)

L.D. Lathauwer, J. Castaing, Blind identification of underdetermined mixtures by simultaneous matrix diagonalization. IEEE Trans. Signal Process. 56(3), 1096–1105 (2008)

L.D. Lathauwer, B.D. Moor, J. Vandewalle, On the best rank-1 and rank-\((R_{1},R_{2},\ldots,R_{N})\) approximation of higher-order tensors. SIAM J. Matrix Anal. Appl. 21, 1324–1342 (2000)

L.D. Lathauwer, J. Castaing, J.F. Cardoso, Fourth-order cumulant-based blind identification of underdetermined mixtures. IEEE Trans. Signal Process. 55(6), 2965–2973 (2007)

Q. Li, A. Barron, Mixture density estimation, in Advances in Neural Information Processing Systems, vol. 12 (MIT Press, Cambridge, 2000), pp. 279–285

É. Moulines, J.F. Cardoso, E. Gassiat, Maximum likelihood for blind separation and deconvolution of noisy signals using mixture models, in IEEE International Conference on Audio, Speech, and Signal Processing, Munich, Germany (1997), pp. 3617–3620

D. Nion, L.D. Lathauwer, An enhanced line search scheme for complex-valued tensor decompositions: application in DS-CDMA. Signal Process. 88(3), 749–755 (2008)

D.T. Pham, J.F. Cardoso, Blind separation of instantaneous mixtures of nonstationary sources. IEEE Trans. Signal Process. 49(9), 1837–1848 (2001)

T. Routtenberg, J. Tabrikian, MIMO-AR system identification and blind source separation for GMM-distributed sources. IEEE Trans. Signal Process. 57(5), 1717–1730 (2009)

G. Schwarz, Estimating the dimension of a model. Ann. Stat. 6(2), 461–464 (1978)

H. Snoussi, A.M. Djafari, Bayesian source separation with mixture of Gaussians prior for sources and Gaussian prior for mixture coefficients, in Proc. of MaxEnt, Amer. Inst. Physics. in Bayesian Inference and Maximum Entropy Methods, France (2000), pp. 388–406

P. Tichavský, Z. Koldovský, Weight adjusted tensor method for blind separation of underdetermined mixtures of nonstationary sources. IEEE Trans. Signal Process. 59(3), 1037–1047 (2011)

K. Todros, J. Tabrikian, Blind separation of independent sources using Gaussian mixture model. IEEE Trans. Signal Process. 55(7), 3645–3658 (2007)

H. Yang, S. Amari, Adaptive online learning algorithms for blind separation: maximum entropy and minimum mutual information. Neural Comput. 9, 1457–1482 (1997)

Y. Zhang, X. Shi, C.H. Chen, A Gaussian mixture model for underdetermined independent component analysis. Signal Process. 86, 1538–1549 (2006)

Acknowledgements

The authors would like to thank Lieven De Lathauwer for sharing the MATLAB code of the FOOBI algorithm. They also thank the anonymous reviewers for their careful reading and helpful remarks, which have improved the clarity of the paper. This work is supported in part by the Natural Science Foundation of China under Grant 61001106 and the National Program on Key Basic Research Project of China under Grant 2009CB320400.

Appendix

In this appendix, it is shown that (17) can be formulated as (20).

According to (19)

where \(\gamma_{t,m}^{*} = \hat{\omega}_{m}^{*}N(\mathbf{x}_{t}|\hat{\boldsymbol{\eta }}_{m}^{*},\hat{\mathbf{R}}_{m}^{*}) / \sum_{m' = 1}^{M} \hat{\omega}_{m'}^{*}N(\mathbf{x}_{t}|\hat{\boldsymbol{\eta }}_{m'}^{*},\hat{\mathbf{R}}_{m'}^{*})\). Therefore

Since the trace is a linear operator, the summation over t in the middle term of (32) can be moved inside the trace. Hence, (32) can be rewritten in the following form:
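For reference, the trace identity behind this step can be written with generic symbols: weights \(\gamma_{t}\), a mean \(\boldsymbol{\eta}\), and a covariance matrix \(\mathbf{R}\). These are placeholders for illustration, not the exact terms of (32):

\[
\sum_{t=1}^{T} \gamma_{t}\,(\mathbf{x}_{t}-\boldsymbol{\eta})^{\operatorname{T}}\mathbf{R}^{-1}(\mathbf{x}_{t}-\boldsymbol{\eta})
= \operatorname{tr}\Bigl\{\mathbf{R}^{-1}\sum_{t=1}^{T}\gamma_{t}\,(\mathbf{x}_{t}-\boldsymbol{\eta})(\mathbf{x}_{t}-\boldsymbol{\eta})^{\operatorname{T}}\Bigr\}
\]

Each quadratic form is a scalar and therefore equals its own trace. The cyclic property of the trace then moves \(\mathbf{R}^{-1}\) to the front, and linearity allows the weighted sum to be taken inside.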

The factor \(\sum_{t = 1}^{T} \gamma_{t,m}^{*} \) can be factored out of the brackets, so that (33) can be formulated as follows:

The updating equations of the EM algorithm for GMM parameter estimation can be formulated as follows:
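As a minimal sketch, one iteration of the standard EM updates for a GMM can be written in NumPy as below. It is assumed, not confirmed by the text, that these standard updates are the ones referred to above. The responsibilities `gamma[t, m]` follow the definition of \(\gamma_{t,m}^{*}\) given earlier in this appendix; the function and variable names are hypothetical.

```python
import numpy as np

def gaussian_pdf(X, mean, cov):
    """Density of N(mean, cov) evaluated at each row of X (shape T x Q)."""
    Q = X.shape[1]
    diff = X - mean
    sol = np.linalg.solve(cov, diff.T).T          # cov^{-1} (x_t - mean), row-wise
    quad = np.sum(diff * sol, axis=1)             # Mahalanobis terms
    _, logdet = np.linalg.slogdet(cov)
    return np.exp(-0.5 * (quad + logdet + Q * np.log(2.0 * np.pi)))

def em_step(X, weights, means, covs):
    """One EM iteration for a GMM; returns updated (weights, means, covs)."""
    T, M = X.shape[0], len(weights)
    # E-step: gamma[t, m] = w_m N(x_t | eta_m, R_m) / sum_m' w_m' N(x_t | eta_m', R_m')
    dens = np.column_stack(
        [weights[m] * gaussian_pdf(X, means[m], covs[m]) for m in range(M)]
    )
    gamma = dens / dens.sum(axis=1, keepdims=True)
    # M-step: responsibility-weighted sample statistics
    Nm = gamma.sum(axis=0)
    new_weights = Nm / T
    new_means = (gamma.T @ X) / Nm[:, None]
    new_covs = np.empty_like(np.asarray(covs, dtype=float))
    for m in range(M):
        diff = X - new_means[m]
        new_covs[m] = (gamma[:, m, None] * diff).T @ diff / Nm[m]
    return new_weights, new_means, new_covs

# Usage on synthetic two-cluster data (illustrative values only).
rng = np.random.default_rng(1)
X = np.vstack([rng.normal(-2.0, 1.0, (200, 2)), rng.normal(2.0, 1.0, (200, 2))])
w = np.array([0.5, 0.5])
mu = np.array([[-1.0, 0.0], [1.0, 0.0]])
R = np.stack([np.eye(2), np.eye(2)])
for _ in range(20):
    w, mu, R = em_step(X, w, mu, R)
```

The sum of responsibilities `Nm[m]` plays the role of the factor \(\sum_{t = 1}^{T} \gamma_{t,m}^{*}\) that appears in the derivation.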

Therefore

Substituting (36) into (35) yields the expression (37) as follows:

Using \(\boldsymbol{\eta}_{m} = \mathbf{A}\boldsymbol{\mu}_{m}\) and \(\mathbf{R}_{m} = \mathbf{AC}_{m}\mathbf{A}^{\operatorname{T}} + \mathbf{R}_{\mathbf{w}}\) gives

Express the KL divergence of a zero-mean Q-variate normal density with covariance matrix \(\boldsymbol{\Sigma}_{2}\) from a zero-mean Q-variate normal density with covariance matrix \(\boldsymbol{\Sigma}_{1}\) as

Then (38) can be formulated in the following form:

where \(\mathrm{KL}_{\mathrm{norm}}[\hat{\mathbf{R}}_{m}^{*}|\mathbf {AC}_{m}\mathbf{A}^{\operatorname{T}} + \mathbf{R}_{\mathbf{w}}] \) is the KL divergence between two zero-mean Q-variate normal densities with covariance matrices \(\hat{\mathbf{R}}_{m}^{*} \) and \(\mathbf{AC}_{m}\mathbf {A}^{\operatorname{T}} + \mathbf{R}_{\mathbf{w}}\), respectively. Therefore, (20) can be derived by inserting (40) into (37).
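The KL divergence between two zero-mean normal densities has a well-known closed form, which the following NumPy sketch evaluates. The argument order is an assumption: `kl_norm(S1, S2)` is taken to measure the divergence from \(N(\mathbf{0}, \boldsymbol{\Sigma}_{1})\) to \(N(\mathbf{0}, \boldsymbol{\Sigma}_{2})\), that is \(\tfrac{1}{2}[\operatorname{tr}(\boldsymbol{\Sigma}_{1}\boldsymbol{\Sigma}_{2}^{-1}) - \log\det(\boldsymbol{\Sigma}_{1}\boldsymbol{\Sigma}_{2}^{-1}) - Q]\). The function name and test matrices are hypothetical.

```python
import numpy as np

def kl_norm(S1, S2):
    """KL divergence from N(0, S1) to N(0, S2) for Q x Q covariance matrices."""
    Q = S1.shape[0]
    # S2^{-1} S1 has the same trace and determinant as S1 S2^{-1}.
    P = np.linalg.solve(S2, S1)
    _, logdet = np.linalg.slogdet(P)
    return 0.5 * (np.trace(P) - logdet - Q)

S1 = np.array([[2.0, 0.3], [0.3, 1.0]])
S2 = np.array([[1.0, 0.0], [0.0, 1.5]])

same = kl_norm(S1, S1)   # vanishes when the two covariances coincide
diff = kl_norm(S1, S2)   # strictly positive otherwise
print(same, diff)
```

The divergence is zero only when the two covariance matrices are equal, so making it small for every component m amounts to fitting \(\mathbf{AC}_{m}\mathbf{A}^{\operatorname{T}} + \mathbf{R}_{\mathbf{w}}\) to the estimated covariances \(\hat{\mathbf{R}}_{m}^{*}\).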

Cite this article

Gu, F., Zhang, H., Wang, W. et al. PARAFAC-Based Blind Identification of Underdetermined Mixtures Using Gaussian Mixture Model. Circuits Syst Signal Process 33, 1841–1857 (2014). https://doi.org/10.1007/s00034-013-9719-8
