Abstract

This study develops a novel framework for color transfer between color images that can further achieve emotion transfer between images based on human emotion (human feeling) or a predefined color-emotion model. We propose a new technique that adjusts the number of main colors in an image according to the complexity of its content. It improves on previous methods, which use only a single main color or a fixed number of main colors to implement color transfer. Other contributions are the Touch Four Sides (TFS) and Touch Up Sides (TUS) algorithms, which improve the identification of the background, the foreground, and the other main colors extracted from images. The study focuses on color images such as scenic photographs, still-life images, paintings, and wallpapers. The proposed method can also help non-professionals manipulate and describe the connection between colors and emotions in a more objective and precise way. Potential applications include advertising design, cover design, color matching of clothing, interior design, colorization of grayscale images, and changing the emotion of camera photographs.


1 Introduction

In recent years, researchers have intensively studied the use of images and semantics to convey emotions and opinions (Antonio et al. 2013; Hao-Chiang et al. 2012; Qi and Zaretzki 2015; Ming et al. 2014; Michela et al. 2011; Yang and Peng 2008). Hao-Chiang et al. (2012) constructed an extensible lexicon and used semantic clues to analyze sentences: they preprocess each sentence to eliminate useless information and then map it to an emotion lexicon. Antonio et al. (2013) conducted a preliminary study of whether eye-gaze patterns and pupil-size variation can discriminate emotional states induced by looking at pictures with different arousal content.

Images are an important medium for conveying human emotions, and color is one of their main components, so color has been widely studied (Zhang et al. 2016; Tai et al. 2005; Michela et al. 2011; Xiao 2006; Taemin Lee et al. 2016). Zhang et al. (2016) proposed an appearance-based person re-identification method that uses color as the feature and applies simple and effective color-invariant processing.

The human reaction to colors has been studied extensively for many years (Chou 2011; Csurka et al. 2010). Certain colors evoke certain feelings, and professional designers who understand the relationship between colors and emotions can use this knowledge to present a business appropriately. For example, Fig. 1 (Simon McArdle 2016) shows a color-emotion guide, a fun infographic of the emotions that well-known brands wish to elicit. Color and emotion (human feeling) are closely related: colors are an objective stimulus that produces subjective reactions and sense perceptions. Diverse studies in both science and art indicate that, beyond single colors, combinations of several colors are needed to deliver a specific emotion. In the past, most studies relied on image color statistics for color transfer. More recently, color-transfer technology has been combined with emotion and applied to emotion transfer between images, and research on high-level scene content analysis has appeared. For instance, Yang and Peng (2008) presented a method for transferring mood between color images that uses histogram matching to preserve spatial coherence, and Qi and Zaretzki (2015) showed an image color-transfer approach that evokes different emotions based on color combinations. The content-based color-transfer method developed by Wu et al. (2013) uses subject-area detection and surface-layout recovery to minimize user effort and generate accurate results, and it integrates the spatial distribution of target color styles into the optimization process. Pouli et al. (2011) presented a histogram reshaping technique for controlling how closely the color palette of the source image is matched to that of the target. Chang et al. (2005) proposed a perception-based scheme for transferring colors based on basic color categories derived from psychophysical experiments.

Nevertheless, two problems remain unsolved: how to understand and describe the emotions aroused by an image, and how to account for the inherent subjectivity of users' emotional responses. This domain is challenging because emotions are subjective feelings affected by the personal characteristics of each user; the emotions and messages that an image conveys are interpreted differently depending on culture, race, gender, age, and so on. Until now, no study has proposed a reliable and objective solution to this challenge. For instance, Michela et al. (2011) proposed a case study to analyze emotion and opinion in natural images, but their experiment analyzed the opinions of only a few people. A classification algorithm was used to categorize images according to the emotions perceived by humans when looking at them and to understand which features are most closely related to those emotions. However, the opinions of a few people do not necessarily reflect the majority view. The solution developed in this study is to use a color-emotion model instead. Many color-emotion models have been published; for instance, Kobayashi (1992) published the book Color Image Scale, and Eisemann (2000) published Pantone Guide to Communicating with Color. Such models can be used to find reliable and objective solutions to color-emotion transfer problems. Reinhard et al. (2001) were forerunners in this field of research: they made color transfer possible by providing a reference image. Since then, several different color-transfer algorithms have been proposed (Pouli et al. 2011; Chiou et al. 2010; Dong et al. 2010; Xiao 2006; Tai et al. 2005). The first emotion-transfer algorithm, developed by Yang and Peng (2008), used the conventional color-transfer framework but added a single-color scheme for emotions.
In several domains, the relationship between color and emotion is termed color emotion (Amara 2016). The beautiful colors of a sunset, or the red and golden-yellow leaves of autumn, may evoke delight; therefore, the use of colors to transmit emotion has attracted researchers from various scientific fields (Csurka et al. 2010; I.R.I., 2011; Whelan 1994; Simon 2016). The new emotion-transfer method proposed in this study uses dynamic and adjustable color combinations: the complexity of the content of a color image determines whether a two-, three-, or five-color combination is adopted. The color-emotion guide of Chou (2011), Color Scheme Bible Compact Edition, is adopted for mapping between color and emotion; it contains 24 emotions, each with 32 five-color combinations, 48 three-color combinations, and 24 two-color combinations. Figure 2 shows one of these emotions. The proposed method supports two emotion-transfer solutions. The first allows users to choose the desired emotion directly from a predefined color-emotion model, for which Chou's color-emotion guide (Chou 2011) is applied. The second allows users to provide reference images, as in previous color-transfer methods.

The emotion of softness, which contains two-, three-, and five-color combinations, in Chou's color-emotion study (Chou 2011)

The challenges of using dynamic and adjustable color combinations in the proposed emotion-transfer method include deciding the number of representative colors in an image dynamically, identifying the dominant main color accurately, and transferring the chosen main colors to a destination color combination. The method developed by Su and Chang (2002) is used to choose the initial representative color points in color space, and no more than three cycles of the LBG algorithm (Linde et al. 1980) are used to obtain the final representative color points by clustering. This study then proposes two novel algorithms, Touch Four Sides (TFS) and Touch Up Sides (TUS), which enable accurate identification of the background color and of the dominant color among the main colors. Finally, emotion transfer between the target emotion and the input image is performed with our novel framework. Figure 3a, b show the two emotion-transfer methods proposed in this study.

The remainder of this paper is organized as follows. Section 2 reviews previous studies in related areas. Section 3 describes the proposed emotion-transfer framework with dynamic and adjustable color combinations in detail. Section 4 presents the results and comparisons. Section 5 concludes the study and suggests future work.

2 Related works

The first automatic color-transfer algorithm was proposed by Reinhard et al. (2001). It shifts and scales the pixel values of the input image to match the mean and standard deviation of the reference image. Although the Reinhard study was published in 2001, psychological experiments on color were performed as early as 1894, when Cohn proposed the first empirical method for color preference (Eysenck 1941). Despite the considerable number of studies performed, Norman and Scott (1952) reported that the findings of these early studies were diverse and tentative. Later, Kobayashi (1992) developed a color-emotion model based on data obtained in psychological studies. In Amara (2016), two color-emotion models were proposed: one for single colors and one for color combinations. The two main lines of research on single-color emotion are classification and quantification. The classification of single-color emotion uses principal component analysis to reduce a large number of colors to a small number of categories (Tai et al. 2005; Xiao and Ma 2009). Early attempts to quantify color emotion include Sato et al. (2000) and Ou et al. (2004a), who provided a color-emotion model that quantifies three color-emotion factors: activity, weight, and heat. This model was later verified by Wei-Ning et al. (2006). Studies of color combinations by Kobayashi (1992) and Ou et al. (2004a) showed a simple relationship between an emotion and a single color and between an emotion and a color pair. Lee et al. (2007) used set theory to evaluate color combinations. Whereas these studies of color emotion considered at most color pairs, our emotion-transfer approach uses a color-emotion scheme called Color Scheme Bible Compact Edition (Chou 2011). Compared with single-color emotion, color combinations provide a simpler and more accurate description of color emotion in images. Tanaka et al. (2000) reported that emotion semantics are the most abstract semantic structure in images because they are closely related to cognitive models, cultural background, and the esthetic standards of users. Of all the factors that affect the emotion of images, colors are considered the most important. Tanaka found that the relative importance of each heterogeneous feature to attractiveness is color > size > spatial frequency, and that the relative contribution of each heterogeneous feature to attractiveness is color heterogeneity > size heterogeneity > shape heterogeneity > texture heterogeneity. Two methods of extracting emotion features from images were subsequently developed. The first is domain-based: relevant features are extracted based on special knowledge of the application field (Wei-Ning et al. 2006; Baoyuan Wang et al. 2010). For instance, Itten (1962) formulated a theory of color and color combinations in art that includes their semantics. The second performs psychological experiments to identify the types of features that have a significant impact on users. For instance, Mao et al. (2003) showed that the fractal dimensions of images are related to their affective properties. In this work, color transfer based on color factors and color distributions is used to perform emotion transfer. Reinhard et al. (2001) transferred the pixel colors of the source image by matching the global color mean and standard deviation of the target in the Lab opponent color space, whose channels are approximately decorrelated, which allows color to be transferred independently in each channel (CIELab 2016). Other global approaches transfer higher-level statistical properties. For instance, Neumann and Neumann (2005) used 3D histogram matching in the HSL color space, and Xiao and Ma (2009) used histogram matching in the Lab color space while preserving the gradients of the input image.
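To make the statistics-matching idea concrete, the following Python sketch shifts and scales each channel of a source image so that its mean and standard deviation match those of a target image, in the spirit of Reinhard et al. (2001). It is an illustration under stated assumptions, not their implementation: pixels are assumed to be already converted to a decorrelated Lab-like space (Reinhard et al. worked in lαβ), images are flattened to lists of `(L, a, b)` tuples, and the function name `reinhard_transfer` is hypothetical.

```python
from statistics import mean, pstdev

def reinhard_transfer(source, target):
    """Match per-channel mean and standard deviation of `source` to `target`.

    source, target: lists of (L, a, b) tuples (assumed already in a
    decorrelated Lab-like space). Returns a new list of (L, a, b) tuples.
    """
    channels = []
    for ch in range(3):
        s = [p[ch] for p in source]
        t = [p[ch] for p in target]
        mu_s, sd_s = mean(s), pstdev(s)
        mu_t, sd_t = mean(t), pstdev(t)
        # Guard against a flat source channel (zero standard deviation).
        scale = sd_t / sd_s if sd_s > 0 else 1.0
        # Remove source mean, rescale spread, add target mean.
        channels.append([(v - mu_s) * scale + mu_t for v in s])
    # Re-assemble per-pixel tuples from the three transformed channels.
    return list(zip(*channels))
```

Because every channel is treated independently, the approach relies on the channels being roughly decorrelated; in a correlated space such as RGB, independent matching tends to produce color casts.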
Li et al. (2010) proposed a color-transfer method that considers the weighted influences of the input and reference images. Pitie et al. (2005) proposed a method of transferring one N-dimensional probability distribution function to another. This study adopts a novel framework for emotion-based color transfer that uses dynamic and adjustable color combinations. It enables users to provide a reference image, as in conventional color-transfer algorithms, and can also use a predefined color-emotion model to obtain the desired emotion for an image. The color-transfer method used in this study therefore differs from previous studies of similar problems. Although the algorithm proposed by Zang and Huang (2010) includes emotional elements, it considers only the warm and cool aspects of color emotion, whereas emotion in images requires a more complex color-emotion model. More recently, Qi and Zaretzki (2015) proposed a method that adopts a predefined color-emotion model; unfortunately, it uses only a fixed number of colors (three) to perform color transfer, and the dominant color may not receive the largest weight.

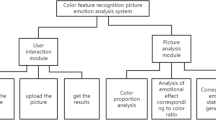

3 The proposed scheme

This study develops a new emotion-transfer method based on dynamic and adjustable color combinations, in which the complexity of the content of a color image determines how many colors are used in the color combination. Additionally, the proposed method accurately identifies the primary representative colors (main colors) of the image and supports both solutions for emotion transfer, i.e., using reference images or using semantics from a predefined color-emotion model. Figure 4 shows the components of the proposed emotion-transfer framework.

The target emotion in Fig. 4, which comes from the emotion source, can be obtained in two ways. The first uses a reference image (RI) and obtains the target emotion in two steps: main color extraction and emotion matching. Main color extraction is described in Sect. 3.2 (The dynamic extraction of the main colors). Emotion matching compares the input image with the reference image based on their numbers of main colors, and there are two cases. If the numbers of main colors are the same, the main colors of the reference image are adopted directly as the color combination of the target emotion; Fig. 5 shows an example. If the numbers differ, the target emotion keeps the same emotion, and the closest color combination of that emotion is searched for in a predefined color-emotion model.

The second way allows users to choose a desired emotion directly from a predefined color-emotion model. In Fig. 4, the main color extraction step extracts the main colors of the input image and the reference image. These main colors can form three kinds of color combinations: two-color, three-color, and five-color. Our method also supports other color-emotion models from emotion guidebooks that may provide kinds of color combinations other than these three. In this study, the number of colors in a combination is chosen according to the complexity of the content of the input image or reference image; for example, Fig. 6 shows that the number of main colors generally increases with complexity. In each color combination, the first color is the background color, the second is the dominant color, and the remaining colors follow in decreasing order of importance. The main colors can therefore be selected adjustably and dynamically according to the color distribution of the images.

On the right side of Fig. 4, the main colors extracted from the input image are mapped to the target emotion with the same number of colors. The proposed approach uses a reference image or the predefined color-emotion guide developed by Chou (2011), which provides combinations of three sizes (two, three, and five colors); therefore, the number of main colors must be kept within this range. After the color-transfer algorithm transfers the target emotion to the input image, the output image is obtained and the color-emotion transfer is complete.

3.1 Color-emotion model

The model developed by Chou (2011) contains 24 emotions, each of which includes 24 two-color combinations, 48 three-color combinations, and 32 five-color combinations. The 24 emotions are: fresh, soft and elegant, illusive and misty, pretty and mild, frank, soothing and lazy, lifelike, solicitous and considerate, clean and cool, excited, optimistic, ebullient, graceful, plentiful, magnificent, moderate, primitive, simple, solemn, mysterious, silent and quiet, sorrowful, recollecting, and withered. All colors in the Chou model are specified in both the RGB and CMYK color spaces. This study, however, uses the CIELab color space (CIELab 2016), converted from RGB. As shown in Fig. 7, CIELab represents colors using three axes: L, a, and b.
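The text does not state which RGB variant or white point is used for the conversion. The sketch below assumes 8-bit sRGB and the D65 reference white, a common choice, and follows the standard sRGB to CIE XYZ to CIELab formulas; `srgb_to_lab` is a hypothetical helper name, not part of the original method.

```python
def srgb_to_lab(rgb):
    """Convert an 8-bit sRGB triple to CIELab, assuming a D65 white point."""
    def to_linear(c8):
        # Undo the sRGB transfer curve (gamma) to get linear light in [0, 1].
        c = c8 / 255.0
        return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4

    r, g, b = (to_linear(c) for c in rgb)
    # Linear sRGB -> CIE XYZ (D65 primaries).
    x = 0.4124564 * r + 0.3575761 * g + 0.1804375 * b
    y = 0.2126729 * r + 0.7151522 * g + 0.0721750 * b
    z = 0.0193339 * r + 0.1191920 * g + 0.9503041 * b
    xn, yn, zn = 0.95047, 1.0, 1.08883  # D65 reference white

    def f(t):
        # Cube root above the CIE threshold, linear segment below it.
        delta = 6 / 29
        return t ** (1 / 3) if t > delta ** 3 else t / (3 * delta ** 2) + 4 / 29

    fx, fy, fz = f(x / xn), f(y / yn), f(z / zn)
    return (116 * fy - 16, 500 * (fx - fy), 200 * (fy - fz))
```

For example, `srgb_to_lab((255, 255, 255))` gives approximately `(100, 0, 0)`, and pure red gives roughly L = 53.2, a = 80.1, b = 67.2.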

3.2 The dynamic extraction of the main colors

Figure 8 shows the flowchart and components of the main color extraction phase, which is part of the feature extraction phase of this framework. Both the reference image and the input image go through this step to determine their representative colors, also called the main colors. The two phases in this step first identify the main colors and then determine their number. Conventional approaches use only one color or a fixed number of colors in a combination (Qi and Zaretzki 2015; Ou et al. 2004a), whereas this method permits adjustable and dynamic combinations of one to five colors, depending on the color complexity of the image content. Complexity is characterized by the distribution of the image pixels in Lab color space: for instance, Fig. 6a shows that less complex images use two-color combinations, and Fig. 6c shows that more complex images use five-color combinations.

3.2.1 Identification of the main colors

The second step in the flowchart in Fig. 8 identifies the main colors, i.e., the representative colors of the processed image in Lab color space. The processed image can be either a reference image or an input image. Most conventional methods use clustering to identify the main colors according to the distribution of the image pixels in the color space. The k-means clustering method (Hartigan and Wong 1979) is widely used, but its drawback is that the initial points in the color space are chosen randomly from the source image. Another common method is vector quantization (VQ) clustering (Gray 1984), in which the quantization vector nearest to each input point is moved toward that point by a small fraction of the distance. This classic quantization technique was originally developed in signal processing. Its disadvantage is that it converges slowly when the initial points are poor; more importantly, its performance depends significantly on the initially selected points.

The technique proposed in this study combines two methods to identify the representative points from image pixels. First, independent scalar quantization (ISQ) (Foley et al. 1990) makes the initial points easy to identify. Figure 8 shows the 3D and 2D diagrams: Fig. 9a shows the 3D Lab color space quantized by ISQ into 8 cubes of equal size, and Fig. 9b shows the pixel distribution in the 2D Lab color space, where three cubes contain pixels and one cube, called an empty cube, contains none. The centroid of each non-empty cube is computed from the pixels it contains. Since an empty cube has no representative point, the number of initial points may be fewer than 8, but never more. Because Chou (2011) limits color combinations to at most five colors, this method then adjusts the initial points to match the color combinations. Figure 9c shows how the LBG algorithm (Linde et al. 1980) is used to make the necessary modifications after ISQ. The LBG algorithm is similar to k-means clustering: it takes a set of input vectors \(S=\{x_i \in \mathbb{R}^d \mid i=1, 2, \ldots, n\}\) as input and generates as output a representative subset of vectors, a codebook \(C=\{c_j \in \mathbb{R}^d \mid j=1, 2, \ldots, K\}\), with a user-specified \(K \ll n\); the initial codebook is normally selected at random. Although typical VQ settings are \(d=16\) and \(K=256\) or 512, the settings used in this study are \(d=3\) and \(K \le 8\).
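A minimal sketch of the ISQ initialization described above follows. It assumes the Lab axes are split at the midpoints of their nominal ranges (L in [0, 100], a and b in [-128, 127]), since the text does not give exact cube boundaries. Each non-empty cube contributes the centroid of its pixels as an initial point, so at most 8 points are returned; `isq_initial_points` is a hypothetical name.

```python
def isq_initial_points(pixels, splits=2):
    """Independent scalar quantization of Lab space into splits**3 cubes.

    pixels: list of (L, a, b) tuples. Each axis is cut into `splits` equal
    intervals (assumed ranges: L in [0, 100], a and b in [-128, 127]); with
    splits=2 this gives the 8 cubes of the text. Returns the centroids of
    the non-empty cubes.
    """
    ranges = [(0.0, 100.0), (-128.0, 127.0), (-128.0, 127.0)]

    def cell_index(value, lo, hi):
        # Map a value to its interval on one axis; clamp so the bounds stay inside.
        idx = int((value - lo) / (hi - lo) * splits)
        return min(max(idx, 0), splits - 1)

    sums = {}  # cube key -> [sum_L, sum_a, sum_b, pixel_count]
    for p in pixels:
        key = tuple(cell_index(v, lo, hi) for v, (lo, hi) in zip(p, ranges))
        acc = sums.setdefault(key, [0.0, 0.0, 0.0, 0])
        for ch in range(3):
            acc[ch] += p[ch]
        acc[3] += 1
    # Empty cubes never enter `sums`, so they contribute no initial point.
    return [tuple(acc[ch] / acc[3] for ch in range(3)) for acc in sums.values()]
```

Because each axis is quantized independently, the initial points cost one pass over the pixels, which is what makes them cheap compared with repeated random restarts.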

The convergence of the LBG algorithm depends on the initial codebook \(C\), the distortion \(D_k\), and the threshold, and a maximum number of iterations must be defined to guarantee termination. In step 2 of the LBG, ISQ replaces random selection: the codebook is initialized with the initial representative points for the main colors. The LBG algorithm yields good representative points when the initial points (initial guesses) are appropriately chosen; when they are poor, the final representative points may also be poor and the computation time increases. The performance of the LBG therefore depends significantly on the initial points. Because ISQ provides suitable initial points, the LBG algorithm can be performed more efficiently; experiments show that no more than three LBG cycles are necessary to obtain a good result (Su and Chang 2002). However, Fig. 9 shows that some representative colors are lost during LBG processing. The Euclidean distance \(d(p,q)\) between two points \(p=(p_L, p_a, p_b)\) and \(q=(q_L, q_a, q_b)\) in Lab color space is given by

\[ d(p,q)=\sqrt{(p_L-q_L)^2+(p_a-q_a)^2+(p_b-q_b)^2} \qquad (1) \]

In step 5 of the LBG, the cluster centers are updated as

\[ c_j=\frac{1}{|S_j|}\sum_{x_i \in S_j} x_i \qquad (2) \]

where \(S_j\) is the set of pixels currently assigned to \(c_j\). Clusters that have no pixels or too few pixels must be re-established, and their representative colors are eliminated, which eventually reduces the number of representative colors. For instance, Fig. 10 shows that a pixel can belong to cube \(C_i\) yet be farther from its centroid \(c_i\) than from the centroid \(c_j\) of cube \(C_j\); in this case, the LBG is used to make modifications. In Fig. 10a, b, the stars mark the representative color of each cube, and the dots represent the pixels in the color space.
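The LBG refinement, with Eq. (1) as the distance and the centroid update of step 5, can be sketched as below. This is a simplified k-means-style loop rather than the exact LBG formulation with distortion \(D_k\) and a threshold: it stops after `max_cycles` cycles (three by default, following Su and Chang 2002) or as soon as no code vector moves. Code vectors that receive no pixels are dropped, mirroring how representative colors can be lost. All function names are hypothetical.

```python
import math

def lab_distance(p, q):
    """Euclidean distance between two Lab colors, as in Eq. (1)."""
    return math.sqrt(sum((pi - qi) ** 2 for pi, qi in zip(p, q)))

def assign(pixels, codebook):
    """Index of the nearest code vector for every pixel."""
    return [min(range(len(codebook)), key=lambda j: lab_distance(p, codebook[j]))
            for p in pixels]

def lbg_refine(pixels, codebook, max_cycles=3):
    """Refine an initial codebook (e.g. ISQ centroids) with a few LBG cycles.

    Returns (codebook, labels), where labels[i] indexes the returned codebook.
    """
    codebook = [tuple(c) for c in codebook]
    for _ in range(max_cycles):
        labels = assign(pixels, codebook)
        members = [[] for _ in codebook]
        for p, j in zip(pixels, labels):
            members[j].append(p)
        # Centroid update; code vectors with no assigned pixels are eliminated.
        new_codebook = [tuple(sum(ch) / len(m) for ch in zip(*m)) for m in members if m]
        if new_codebook == codebook:
            break  # converged: no code vector moved
        codebook = new_codebook
    return codebook, assign(pixels, codebook)
```

In the example below, the third initial point attracts no pixels and disappears after the first cycle, leaving two representative colors.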

3.2.2 Determination of the amount of main colors

Once the main colors have been identified, the result can contain one to eight clusters, i.e., one to eight representative points in the color space. The number of clusters is then determined, and the representative points used to form the color combinations are detected. The proposed method is then used to determine the background and the other main colors; Figures 12 and 13 show the flowcharts for these processes.

The fourth step in the flowchart in Fig. 8 examines all of the clusters obtained using ISQ and a single LBG cycle. Each cluster's number of pixels is compared with an appropriate threshold, and clusters that do not meet the threshold are eliminated because they contribute little to the color-emotion expression. If the judgment in the fourth step is true (Y), one more LBG cycle renews the remaining clusters. In this implementation, the threshold is set to 1. The next step limits the number of clusters to the maximum number of colors in a combination, which Chou (2011) sets at five. This step therefore checks the number of clusters again: if it exceeds five, the five largest clusters are retained and one more LBG cycle renews them. The number of clusters is thus dynamically limited to at most five.
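The two checks above (drop small clusters, then keep at most five) can be sketched as follows. The text sets the threshold to 1 without a unit; this sketch assumes it is a percentage of all pixels. The follow-up LBG cycle that renews the surviving clusters is omitted here, and `prune_and_cap` is a hypothetical name.

```python
from collections import Counter

def prune_and_cap(codebook, labels, threshold_percent=1.0, max_colors=5):
    """Drop clusters below a pixel-share threshold, then keep the largest ones.

    codebook: list of Lab code vectors; labels[i] is the cluster of pixel i.
    threshold_percent: assumed to be a percentage of all pixels.
    Returns (kept_code_vectors, kept_pixel_counts), largest cluster first.
    """
    counts = Counter(labels)
    total = len(labels)
    # Step 4: eliminate clusters whose share of pixels is below the threshold.
    kept = [j for j in range(len(codebook))
            if 100.0 * counts[j] / total >= threshold_percent]
    # Step 5: if more than max_colors remain, retain only the largest ones.
    kept.sort(key=lambda j: counts[j], reverse=True)
    kept = kept[:max_colors]
    return [codebook[j] for j in kept], [counts[j] for j in kept]
```

Sorting by pixel count before truncating is what makes "the five largest clusters are retained" well defined when more than five clusters survive the threshold.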

3.3 Identification of the background and the foreground

Generally, most methods that extract colors from images cannot consistently and accurately distinguish the background color from the foreground color, because they rank clusters only by pixel count and treat the largest as the most important (Qi and Zaretzki 2015; Yang and Peng 2008). Figure 11 shows that such general methods have difficulty differentiating between the background color and the foreground color, whereas the proposed method separates them accurately. The image in Fig. 11a is the source image; the color of the parts that are not leaves (dark green) is expected to form the background color cluster. In Fig. 11b, c, the proposed approach uses the Touch Four Sides (TFS) algorithm to improve the identification of the background and the other main colors extracted from the images. Most images, such as scenic photographs, landscape photographs, still-life photographs, and paintings, share a similar background and foreground layout: the main pattern is usually located near the center, and the background is on the outside or at the top. In previous work, Krishnan et al. (2007) identified dominant colors according to foreground objects; Wu et al. (2013) analyzed the structure and content of a scene and divided it into regions such as salient objects, sky, and ground; and Chen et al. (2012) adopted an RGB-based pixel classifier, trained with the extreme learning machine (ELM) algorithm, that not only extracts object regions but also provides a reference boundary for objects. These views are similar to ours.

All clusters of main colors in Fig. 12 are then ready for step 1. In step 2, these clusters are checked by the TFS algorithm. If the pixels of a cluster touch all four edges of the image (top, bottom, right, and left) simultaneously, this cluster is chosen and passed to the next step; examples are shown in the upper parts of Figs. 14 and 15 (item (3) of panels (a) and (b)). When more than one cluster satisfies TFS, the cluster with the most pixels touching the four edges is chosen as the background color. If no cluster touches all four sides in step 2, the TUS algorithm checks all clusters again, examining whether the pixels of each cluster touch the top edge; examples are shown in the lower parts of Figs. 14 and 15 (panels c and d). When more than one cluster satisfies TUS, the cluster with the most pixels touching the top is chosen as the background color. Once the background color has been identified, the other main colors are determined in the next phase.
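The TFS and TUS rules can be sketched on a label map, i.e., a 2D grid giving the cluster id of every pixel. This is an illustrative reading of the text, not the authors' code: border pixels are counted once each (corners belong to two sides), ties are broken by the smallest cluster id, and `find_background` is a hypothetical name. Because the top row always contains some cluster, the TUS fallback always returns a result in this sketch.

```python
from collections import Counter

def find_background(label_map):
    """Choose the background cluster with TFS, falling back to TUS.

    label_map: 2D list (rows x cols) giving the cluster id of every pixel.
    """
    rows, cols = len(label_map), len(label_map[0])
    top = set(label_map[0])
    bottom = set(label_map[-1])
    left = {label_map[r][0] for r in range(rows)}
    right = {label_map[r][cols - 1] for r in range(rows)}

    # Count each border pixel once (corner pixels lie on two sides).
    border = {(0, c) for c in range(cols)} | {(rows - 1, c) for c in range(cols)}
    border |= {(r, 0) for r in range(rows)} | {(r, cols - 1) for r in range(rows)}
    border_counts = Counter(label_map[r][c] for r, c in border)

    # TFS: clusters whose pixels touch all four sides; pick the one with
    # the most border pixels.
    tfs = top & bottom & left & right
    if tfs:
        return max(sorted(tfs), key=lambda k: border_counts[k])
    # TUS: clusters whose pixels touch the top side; pick the one with the
    # most pixels on the top edge.
    top_counts = Counter(label_map[0])
    return max(sorted(top_counts), key=lambda k: top_counts[k])
```

In the first test case below, cluster 1 has more pixels than cluster 0, yet cluster 0 is chosen as the background because it occupies the top edge; a pixel-count ranking would pick cluster 1 instead.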

Figure 13 shows the flowchart for this procedure. First, the background cluster is removed from the main-color clusters, and the remaining main colors are sorted by the number of pixels in each cluster. Normally, the dominant pattern of an image, also called its foreground, is spread over one or more areas formed by the non-background main colors. This phase thus selects the background color first and then sorts the remaining main colors, so that the main colors are ordered by their importance in the image: the background color is first, the largest remaining cluster is second, and so on. This ordering is mapped to the color combinations of the predefined color-emotion guide.
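The ordering rule and its mapping onto a color combination can be sketched in a few lines. The function names are hypothetical, and the sketch assumes the background cluster id has already been chosen and that the pixel count of each cluster is known.

```python
def order_main_colors(pixel_counts, background):
    """Background first, then remaining clusters by decreasing pixel count.

    pixel_counts: dict mapping cluster id -> number of pixels.
    background: the cluster id chosen as the background.
    """
    rest = sorted((c for c in pixel_counts if c != background),
                  key=lambda c: pixel_counts[c], reverse=True)
    return [background] + rest

def map_to_combination(ordered_ids, combination):
    """Pair ordered clusters with the colors of a same-length combination."""
    if len(ordered_ids) != len(combination):
        raise ValueError("the combination must have one color per main color")
    # Position k of the ordering receives color k of the combination.
    return dict(zip(ordered_ids, combination))
```

For instance, with pixel counts `{3: 5, 7: 40, 9: 60}` and background cluster 7, the order is `[7, 9, 3]`: the background comes first even though cluster 9 is larger.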

3.4 Emotion transfer

Figure 16 illustrates emotion-transfer processing, the final step in the proposed framework. The target emotion derives from one of two sources: a reference image or an emotion keyword chosen directly. Figure 16 shows three kinds of color combinations for the target emotion and for main color extraction. In the proposed framework, the input image and the reference image undergo the same processing, so the number of main colors of each is extracted adjustably and dynamically. The details of the emotion transfer are described as follows.

3.4.1 Matching for the amount of colors

When the main colors of the target emotion and the input image are ready, this step matches them to obtain color combinations with the same number of colors for the next phase. In Fig. 4, the task of emotion matching is to find the closest color combinations between the reference image and the input image. When the numbers of colors differ, this step chooses the closest color combination with the same emotion from the color-emotion model. For instance, when \(R_c\) (the number of main colors of the reference image) is 2, the three-color and five-color combinations with the same emotion are the candidates for emotion matching.
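The text does not specify how "closest" is measured. As one hypothetical choice, the sketch below scores each candidate combination by the mean Euclidean (Eq. 1) distance between colors at the same position (background with background, dominant with dominant, and so on) over the shorter of the two lists, and returns the best candidate. Both the scoring rule and the name `closest_combination` are assumptions.

```python
import math

def closest_combination(reference_colors, candidates):
    """Return the candidate combination closest to the reference colors.

    reference_colors: Lab tuples ordered as background, dominant, ...
    candidates: combinations (lists of Lab tuples in the same order) of the
    required size, all belonging to the same emotion.
    Hypothetical score: mean Euclidean distance between colors at the same
    position, over the shorter of the two lists.
    """
    def score(combination):
        m = min(len(reference_colors), len(combination))
        return sum(math.dist(reference_colors[i], combination[i]) for i in range(m)) / m

    return min(candidates, key=score)
```

Comparing positions rather than arbitrary color pairs keeps the background and dominant roles aligned, which matters because the transfer step later replaces colors in that same order.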

3.4.2 Color transfer

Once the number of main colors of the target emotion matches that of the input image, the color-transfer procedure can be performed. First, the background color of the input image is replaced by the background color of the target emotion; next, the dominant color is handled. If further colors remain, they are transferred from the target emotion to the input image in order of cluster size; that is, a larger cluster has a higher priority for color transfer in this study.

3.4.3 Pixel updates

In CIELab space, the L axis ranges from 0 (black) to 100 (white), and the other two color axes take both positive and negative values. On the a axis, positive values up to +127 indicate red and negative values down to \(-128\) indicate green. On the b axis, yellow is positive (up to +127) and blue is negative (down to \(-128\)). When the target emotion is mapped to the input image, the pixel values in each cluster of the input image must be updated according to the new values of the target emotion. However, all updated pixels must remain within the CIELab range: because the cluster centers of the input image are moved to other Lab values, some pixel values may fall out of range, which would cause distortion and an unnatural output image. Therefore, necessary adjustments are made when the pixels are updated. In the proposed method, the L values follow those of the input image to avoid unnatural results (Wu et al. 2013).

When the a and b values fall outside the valid range of the Lab color space, they are adjusted as shown in Eq. (5), and the adjustment factor is given by Eq. (3). If a source color \(S_p\) is to be transferred to a new goal color \(G_p = (g_{pL}, g_{pa}, g_{pb})\), then \(d_{pa}\) and \(d_{pb}\) denote the distances from \(S_p\) to \(G_p\) along the a and b axes, computed with Eq. (3). The variables \(G_{ia}^{\max}\), \(G_{ia}^{\min}\), \(G_{ib}^{\max}\), and \(G_{ib}^{\min}\) are the maximum and minimum pixel values of the goal cluster, and \(O_{ia}\) and \(O_{ib}\) are the pixel values of the output cluster. The pixel-update procedure is expressed by Eqs. (3), (4), and (5). Adjusting \(O_{ia}\) and \(O_{ib}\) in this way ensures that the pixel values always remain within the CIELab space, and it regulates the a and b values according to the adjustment factor.
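As a rough sketch of a single cluster update, the following code moves a cluster's pixels by the displacement between the source and goal centers while keeping L from the input image. It is a simplified stand-in, not Eqs. (3)–(5). The paper's rule also uses the goal-cluster extrema and an adjustment factor. By contrast, this sketch (with the hypothetical function name `update_cluster_pixels`) simply clips a and b to the range [-128, 127] stated above.

```python
import numpy as np

AB_MIN, AB_MAX = -128.0, 127.0  # a and b ranges given in the text


def update_cluster_pixels(lab_pixels, source_center, goal_center):
    """Shift one cluster's pixels toward a goal color (simplified sketch).

    lab_pixels: (N, 3) array of CIELab values belonging to the cluster.
    L is left unchanged (it follows the input image); a and b are moved
    by the center displacement (d_pa, d_pb) and then clipped to range.
    """
    out = np.asarray(lab_pixels, dtype=float).copy()
    d_ab = (np.asarray(goal_center[1:], dtype=float)
            - np.asarray(source_center[1:], dtype=float))
    out[:, 1:] = np.clip(out[:, 1:] + d_ab, AB_MIN, AB_MAX)
    return out
```

Hard clipping flattens any variation that is pushed past the boundary. A rule that rescales toward the goal cluster's extrema, as the text describes, avoids that loss.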

3.4.4 Gradient preservation

The final phase of the emotion-transfer procedure is gradient preservation, which is needed to preserve scene fidelity. The gradient is preserved using the algorithm proposed by Xiao and Ma (2009), in which the problem is formulated as the optimization

\[ \min_{O} \sum_{x,y} \Big[ \big(O_{xy} - I'_{xy}\big)^2 + \lambda \,\big\| \nabla O_{xy} - \nabla I_{xy} \big\|^2 \Big] \qquad (6) \]

where \(I_{xy}\), \(I'_{xy}\), and \(O_{xy}\) are the pixels of the input image, the intermediate image (the image after all pixels have been updated), and the output image, respectively. Here x and y are the horizontal and vertical axes of the image, and \(\nabla\) denotes the image gradient. The coefficient \(\lambda\) weights the importance of gradient preservation against the new colors. The first term of Eq. (6) keeps the output image as similar as possible to the intermediate image. The second term maximizes the similarity between the gradient of the output image and the gradient of the input image.
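The objective of Eq. 6 is a linear least-squares problem, so one channel can be solved directly. The sketch below assumes forward differences for the gradient and treats each Lab channel independently. It builds dense matrices for brevity, so it is only practical for small images; real photographs would need a sparse solver. The function names are hypothetical.

```python
import numpy as np


def forward_diff(n):
    """(n-1) x n forward-difference matrix: (D v)[i] = v[i+1] - v[i]."""
    d = np.zeros((n - 1, n))
    idx = np.arange(n - 1)
    d[idx, idx] = -1.0
    d[idx, idx + 1] = 1.0
    return d


def gradient_preserve(inp, inter, lam):
    """Minimize sum (O - I')^2 + lam * ||grad O - grad I||^2 for one channel.

    inp: input image I, inter: intermediate image I' (2-D arrays).
    Setting the derivative to zero gives the linear system
    (Id + lam * G) O = I' + lam * G I, with G = Dx^T Dx + Dy^T Dy.
    """
    h, w = inp.shape
    dx = np.kron(np.eye(h), forward_diff(w))  # horizontal differences
    dy = np.kron(forward_diff(h), np.eye(w))  # vertical differences
    g = dx.T @ dx + dy.T @ dy
    a = np.eye(h * w) + lam * g
    b = inter.ravel() + lam * (g @ inp.ravel())
    return np.linalg.solve(a, b).reshape(h, w)
```

With λ = 0 the output equals the intermediate image. As λ grows, the output keeps the input image's gradients, and only its overall offset follows the intermediate image.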

3.4.5 Producing the output image

To sum up, when the user selects a specific emotion and a specific color combination, the number of colors in the combination must equal the number of main colors in the input image. When the user instead provides a reference image, the color combination of the target emotion is extracted from it by the main-color extraction step. The closest color combination of an emotion is then picked from the predefined color-emotion model. When an emotion is chosen, one of three color combinations (2, 3, or 5 colors) must be selected for mapping to the input image.
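Picking the closest color combination can be sketched as a nearest-neighbor search over the model. The distance measure (mean CIELab Euclidean distance between ordered colors) and the dictionary layout of the model (emotion name mapped to a list of Lab combinations) are assumptions for illustration. Neither is taken from Chou (2011), and the function names are hypothetical.

```python
import numpy as np


def combination_distance(c1, c2):
    """Mean CIELab Euclidean distance between two ordered combinations
    of equal length (an assumed similarity measure)."""
    diff = np.asarray(c1, dtype=float) - np.asarray(c2, dtype=float)
    return float(np.mean(np.linalg.norm(diff, axis=1)))


def closest_emotion(reference, model):
    """Return (emotion, combination) from `model` closest to `reference`.

    model: dict mapping an emotion name to a list of Lab combinations.
    Only combinations with the same number of colors are compared.
    """
    best = None
    for emotion, combos in model.items():
        for combo in combos:
            if len(combo) != len(reference):
                continue
            d = combination_distance(reference, combo)
            if best is None or d < best[0]:
                best = (d, emotion, combo)
    if best is None:
        raise ValueError("no combination with a matching number of colors")
    return best[1], best[2]
```

Restricting the search to combinations of the same size mirrors the requirement that the number of colors match before mapping.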

4 Experimental results and comparisons

Both modes of the proposed approach, picking a specific emotion or using a reference image to change the colors of an input image, use an adjustable and dynamic number of colors in the color combinations. This gives the proposed approach an advantage over previous methods in identifying the main colors. For instance, Fig. 11b illustrates that the clustering used by most previous methods incorrectly identifies the background color, rather than the color of the leaves, as the dominant main color. In our experiment, Fig. 17 shows that the proposed approach correctly extracts the dominant color, which comes from the building. It also correctly extracts the desired background color, the blue of the sky. The photograph on the right side of Fig. 17 is an example that adopts one of the five-color combinations of a specific emotion chosen from the predefined color-emotion model.

Fig. 17 Main colors extracted with the proposed approach: a five-color combination is obtained dynamically from the photograph on the left. The desired background color is the blue of the sky, and the dominant color (the second color) comes from the building. The photograph on the right adopts one of the five-color combinations of a specific emotion chosen from the predefined color-emotion model.

The emotion transfer in this study can handle diverse images, including scenic photographs, still-life photographs, wallpapers, and artwork paintings. Because the target emotion can be specified in two ways, the experimental results for each are shown separately. In Fig. 18a–j, a target emotion is obtained from a reference image and then transferred to the input image. In each panel of Fig. 18a–j, the images are arranged as follows:

- (1) top left: the input image
- (2) top middle: the main colors of the input image
- (3) top right: the clustering of the input image
- (4) bottom left: the reference image
- (5) bottom middle: the main colors of the reference image
- (6) bottom right: the output image obtained by changing the input image according to the target emotion

In (a)–(f), the color-transfer procedure uses the same number of colors for the input and the target, but in (g)–(j) the numbers differ. Five-color combinations are used in (a)–(b), three-color combinations in (c)–(d), and two-color combinations in (e)–(f). In the remaining panels, (g)–(h) change a five-color combination into a three-color combination by using the first three of the five colors for the target emotion. Panels (i)–(j) transfer a three-color combination to a five-color combination, for which a three-color combination of the same emotion must be selected for matching. In this example, the same emotion, graceful, is selected from the predefined color-emotion model of Chou (2011).

In Fig. 19, the user specifies the target emotion directly as a semantic term. In Fig. 19a–f, the leftmost image is the input image, and the second image shows the main colors extracted from the input image. The middle image is the clustering result of the input image, and the fourth image shows the target emotion from the color-emotion model. The fifth image is the output image obtained by changing the input image according to the target emotion. Fig. 19g–j shows four different emotions: fresh, soft and elegant, illusive and misty, and solicitous and considerate, respectively. The corresponding images include scenery, a building, a landscape painting, and a flower painting. The target emotions are taken from the color-emotion study by Chou (2011).

Fig. 20a–d compares emotion transfer using the proposed adjustable and dynamic color combinations with emotion transfer using only a single color. Single-color emotion transfer moves the mean of the L, a, and b values of the input image to the dominant main color of the target emotion. In the proposed method, by contrast, the main colors are mapped one-to-one from the target emotion to the input image. Because the composition of the image is used to choose the representative colors (main colors) dynamically, our method produces more natural, more detailed, and more colorful results in the comparison. It also presents the emotion desired by the user more accurately.
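The single-color baseline amounts to one global shift in Lab space. The sketch below (hypothetical function name, no range clipping) shows why its results are less varied. Every pixel moves by the same vector, so all regions are pushed toward the same target color while the relative differences of the input stay unchanged.

```python
import numpy as np


def single_color_transfer(lab_image, target_color):
    """Baseline: shift the whole image so that its mean (L, a, b) equals
    one target color, e.g. the dominant main color of the target emotion."""
    lab = np.asarray(lab_image, dtype=float)
    mean = lab.reshape(-1, 3).mean(axis=0)
    shift = np.asarray(target_color, dtype=float) - mean
    return lab + shift  # identical shift for every pixel
```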

In (a)–(d), the leftmost image is the input image, and the second image shows the color combination extracted from the input image. The third and fourth images show a single color for the target emotion and the result of transferring with this color, respectively. The fifth image is a five-color combination of the target emotion. Its number of colors matches the number of main colors in the input image, and each of its colors is mapped to the corresponding color in the second image. The rightmost image is the result produced from this five-color combination. The background is similar in the fourth and rightmost images, but the other parts differ. For instance, both the dominant color and the remaining colors are different. Therefore, the rightmost image is more natural and more colorful than the fourth image (using a single color). Moreover, if a fixed combination of two or three colors is used instead of a single color, the results are similar to those obtained with only one color. Figure 21 shows the use of a reference image to perform color transfer. The purpose of the proposed color-transfer algorithm differs from that of previous color-transfer methods. Nevertheless, we also compare our method (without the color-emotion model) with two representative color-transfer algorithms (Pouli et al. 2011; Reinhard et al. 2001).

If the user provides a reference image, our algorithm aims to transfer the emotion of the reference image to the input image by changing its colors. In contrast, previous color-transfer methods aim to transfer the colors of the reference image to the input image. Figure 21a, b shows the color-transfer results for two input images and two reference images. Compared with the methods of Pouli et al. (2011) and Reinhard et al. (2001), our method blends the colors of the reference image better while preserving the look and details of the input image.
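For reference, the global statistics matching of Reinhard et al. (2001), one of the two comparison baselines, can be sketched as follows. It is shown only to clarify what that baseline does. The conversion to the lαβ space used in that paper is omitted, and the inputs are assumed to be already in a decorrelated space such as lαβ or CIELab. The function name is hypothetical.

```python
import numpy as np


def reinhard_transfer(src, ref, eps=1e-8):
    """Per-channel mean and standard-deviation matching.

    src, ref: (H, W, 3) arrays in a decorrelated color space. Each channel
    of src is centered, scaled by std(ref) / std(src), and shifted to the
    mean of ref.
    """
    s = np.asarray(src, dtype=float).reshape(-1, 3)
    r = np.asarray(ref, dtype=float).reshape(-1, 3)
    scale = r.std(axis=0) / (s.std(axis=0) + eps)  # eps avoids division by 0
    out = (s - s.mean(axis=0)) * scale + r.mean(axis=0)
    return out.reshape(np.shape(src))
```

Because this baseline matches only global statistics, it has no notion of background or dominant regions. That is the main contrast with the region-wise mapping of main colors described above.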

Fig. 23 The dominant color may not be identified correctly for all images. Users would generally regard the flower (mauve) as the dominant color of this input image, but the extracted dominant color is dark green. Other elements, such as shape and texture, may be added in future work to improve the extraction.

5 Conclusions and future work

This study proposes a novel method and framework for emotion transfer in color images based on adjustable and dynamic color combinations. Such color combinations have several advantages. They allow color transfer to be performed separately in different regions of an image, which helps convey the emotions in images. Figures 18, 19 and 20 show that the method improves the expression of color and emotion in images. Non-professionals can also use the proposed method to describe and communicate human emotions objectively and efficiently. The experimental results in Fig. 22 show that the proposed approach can naturally transfer emotions between photographs and paintings. It can also produce emotionally rich images for art and design. Previous color-transfer algorithms use only one color or a fixed color combination to obtain new images. As a result, their output is often not rich or natural in terms of colors and emotions, and their clustering sometimes fails to recognize the dominant main color correctly. The proposed approach combines ISQ with LBG, which is more effective than LBG alone while yielding images of similar quality. The other new algorithms in the proposed approach, TFS and TUS, extract the background and dominant colors correctly from the main colors of most images. Furthermore, because the representative colors are obtained from adjustable and dynamic color combinations, they are more closely linked to human emotion. In the future, different color-emotion models could be used in this framework, giving it various potential applications in different fields. For instance, the main colors extracted from color images are their most important representative colors, so they could serve as emotion features for image retrieval. The framework could also be applied to affective computing, cognitive science, and related fields.
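The LBG part of ISQ + LBG is the classic codebook-design algorithm of Linde et al. (1980). The ISQ stage is not described in this section, so the following minimal sketch covers LBG only. It grows the codebook by splitting every codeword and refines it with Lloyd (k-means) iterations. The parameter defaults and the power-of-two restriction are simplifications chosen for illustration, and the function name is hypothetical.

```python
import numpy as np


def lbg(pixels, n_codewords, eps=0.01, iters=20):
    """Design a codebook of n_codewords colors with LBG splitting.

    pixels: (N, 3) array of colors. n_codewords must be a power of two
    here, because every splitting step doubles the codebook.
    """
    if n_codewords < 1 or n_codewords & (n_codewords - 1):
        raise ValueError("n_codewords must be a power of two")
    x = np.asarray(pixels, dtype=float)
    book = x.mean(axis=0, keepdims=True)  # start from the global centroid
    while len(book) < n_codewords:
        # Split each codeword c into c * (1 + eps) and c * (1 - eps).
        book = np.vstack([book * (1 + eps), book * (1 - eps)])
        for _ in range(iters):  # Lloyd (k-means) refinement
            dist = ((x[:, None, :] - book[None, :, :]) ** 2).sum(axis=2)
            labels = dist.argmin(axis=1)
            for k in range(len(book)):
                members = x[labels == k]
                if len(members):  # an empty cell keeps its codeword
                    book[k] = members.mean(axis=0)
    return book
```

Every doubling step is followed by a full round of nearest-codeword assignments over all pixels. This repeated assignment is where most of LBG's running time goes on large images.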

However, although the method extracts the background color reliably, the dominant color may not be identified correctly for every image, as shown in Fig. 23.

The method may therefore be improved by adding other elements, such as shape and texture, to improve the extraction of emotion from images.

References

Amara (2016) The complete guide to color psychology. http://www.amara.com. Accessed 2016

Chang Y, Saito S, Uchikawa K, Nakajima M (2005) Example-based color stylization of images. ACM Trans Appl Percept 2(3):322–345

Chiou W-C, Chen Y-L, Hsu C-T (2010) Color transfer for complex content images based on intrinsic component. In: IEEE international workshop on multimedia signal processing (MMSP), pp 156–161

Chou C-K (2011) Color scheme Bible compact edition. Grandtech Information Co., Ltd, Taipei

CIELAB (2016) CIELab—Color models technical guides. http://dba.med.sc.edu-priceirf-Adobe-tg-models-cielab.html. Accessed 2016

Csurka G, Skaff S, Marchesotti L, Saunders C (2010) Learning moods and emotions from color combinations. In: Proceedings of the seventh Indian conference on computer vision, graphics and image processing, ICVGIP '10, ACM, New York, NY, USA, pp 298–305

Dellagiacoma M, Zontone P, Boato G (2011) Emotion based classification of natural images. In: DETECT'11, Glasgow, Scotland, UK. ACM

Dong W, Bao G, Zhang X, Paul J-C (2010) Fast local color transfer via dominant colors mapping. In: ACM SIGGRAPH ASIA 2010 Sketches, SA '10, ACM, New York, NY, USA, pp 461–462

Eiseman L (2000) Pantone's guide to communicating with color. Grafix Press, London

Eysenck HJ (1941) A critical and experimental study of color preferences. Am J Psychol 54:385–394

Foley JD, van Dam A, Feiner SK, Hughes JF (1990) Computer graphics: principles and practice. Addison-Wesley, Reading

Gray R (1984) Vector quantization. IEEE ASSP Mag 1:4–29

Hartigan JA, Wong MA (1979) Algorithm AS 136: a K-means clustering algorithm. J R Stat Soc Ser C 28(1):100–108. JSTOR 2346830

I.R.I. (2011) The color for designer. DrSmart Press Co., Ltd., New Taipei City

Itten J (1962) Art of colour. Van Nostrand Reinhold, New York

Kobayashi S (1992) Color image scale. Kodansha International

Krishnan N, Banu MS, Callins Christiyana C (2007) Content based image retrieval using dominant color identification based on foreground objects. In: International conference on computational intelligence and multimedia applications, vol 3

Lanata Antonio, Valenza Gaetano, Scilingo Enzo Pasquale (2013) Eye gaze patterns in emotional pictures. J Ambient Intell Hum Comput 4:705–715

Lee J, Cheon Y-M, Kim S-Y, Park E-J (2007) Emotional evaluation of color patterns based on rough sets. In: Third international conference on natural computation (ICNC 2007), vol 1, pp 140–144

Lee T, Lim H, Kim D-W, Hwang S, Yoon K (2016) System for matching paintings with music based on emotions. In: ACM SIGGRAPH ASIA 2016 technical briefs (November 2016). doi:10.1145/3005358.300536

Li M-T, Huang M-L, Wang C-M (2010) Example based color alternation for images. In: 2nd international conference on computer engineering and technology (ICCET), vol 7, pp V7316–V7320

Lin Hao-Chiang Koong, Hsieh Min-Chai, Loh Li-Chen, Wang Cheng-Hung (2012) An emotion recognition mechanism based on the combination of mutual information and semantic clues. J Ambient Intell Hum Comput 3:19–29

Linde Y, Buzo A, Gray RM (1980) An algorithm for vector quantizer design. IEEE Trans Commun 28:84–95

Mao X, Chen B, Muta I (2003) Affective property of image and fractal dimension. Chaos Solitons Fractals 15(5):905–910

McArdle S. Psychology of color in logo design. www.huffingtonpost.com. Accessed 2016

Neumann L, Neumann A (2005) Color style transfer techniques using hue, lightness and saturation histogram matching. In: Computational aesthetics in graphics, visualization and imaging 2005, pp 111–122

Norman RD, Scott WA (1952) Colour and affect: a review and semantic evaluation. J Gen Psychol 46:185–233

Ou L-C, Luo MR, Woodcock A, Wright A (2004) A study of colour emotion and colour preference. Part I: Colour emotions for single colours. Color Res Appl 29:232–240

Ou L-C, Luo MR, Woodcock A, Wright A (2004) A study of colour emotion and colour preference. Part II: Colour emotions for two-colour combinations. Color Res Appl 29:292–298

Pan Chen, Park Dong Sun, Huijuan Lu, Xiangping Wu (2012) Color image segmentation by fixation-based active learning with ELM. Soft Comput 16:1569–1584

Pitie F, Kokaram A, Dahyot R (2005) N-dimensional probability density function transfer and its application to color transfer. In: Tenth IEEE international conference on computer vision (ICCV 2005), vol 2, pp 1434–1439

Pouli T, Reinhard E (2011) Progressive histogram reshaping for creative color transfer and tone reproduction. Comput Graph 35(1):67–80

Pouli T, Reinhard E (2011) Progressive color transfer for images of arbitrary dynamic range. Comput Graph 35:67–80. Extended papers from Non-Photorealistic Animation and Rendering (NPAR)

Qi H, Zaretzki R (2015) Image color transfer to evoke different emotions based on color combinations. SIViP 9:1965–1973

Reinhard E, Ashikhmin M, Gooch B, Shirley P (2001) Color transfer between images. IEEE Comput Graph Appl 21(5):34–41

Sato T, Kajiwara K, Hoshino H, Nakamura T (2000) Quantitative evaluation and categorizing of human emotion induced by colour. In: Advances in colour science and technology, vol 3, pp 53–59

Su YY, Chang CC (2002) A new approach of color image quantization based on multi-dimensional directory. In: VRAI 2002, Hangzhou, China, pp 508–514

Tai Y-W, Jia J, Tang C-K (2005) Local color transfer via probabilistic segmentation by expectation maximization. In: IEEE Computer Society conference on computer vision and pattern recognition (CVPR 2005), vol 1, pp 747–754

Tanaka S, Iwadate Y, Inokuchi S (2000) An attractiveness evaluation model based on the physical features of image regions. In: Proceedings of the 15th international conference on pattern recognition

Wang B, Yu Y, Wong T-T, Chen C, Xu Y-Q (2010) Data-driven image color theme enhancement. ACM Trans Graph 29(6), Article 146 (December 2010), 10 pages. doi:10.1145/1882261.1866172

Wei-Ning W, Ying-Lin Y, Sheng-ming J (2006) Image retrieval by emotional semantics: a study of emotional space and feature extraction. In: IEEE international conference on systems, man and cybernetics (SMC '06), vol 4, pp 3534–3539

Whelan BM (1994) Color harmony 2: a guide to creative color combinations. Rockport Publishers, Beverly

Wu F, Dong W, Kong Y, Mei X, Paul J-C, Zhang X (2013) Content-based color transfer. Comput Graph Forum 32(1):190–203

Xiao X, Ma L (2006) Color transfer in correlated color space. In: Proceedings of the 2006 ACM international conference on virtual reality continuum and its applications, VRCIA '06, ACM, New York, NY, USA, pp 305–309

Xiao X, Ma L (2009) Gradient-preserving color transfer. Comput Graph Forum 28(7):1879–1886

Yang C-K, Peng L-K (2008) Automatic mood transferring between color images. IEEE Comput Graph Appl 28:52–61

Zang Y, Huang H, Li C-F (2010) Example-based painting guided by color features. Vis Comput 26(6–8):933–942

Zhang L, Li K (2016) Adaptive image segmentation based on color clustering for person re-identification. Soft Comput. doi:10.1007/s00500-016-2150-x

Zhanga Ming, Zhanga Ke, Fenga Qinghe, Wanga Jianzhong, Konga Jun, Lua Yinghua (2014) A novel image retrieval method based on hybrid information descriptors. J Vis Commun Image Represent 25(7):1574–1587

Acknowledgements

This research was supported in part by the Ministry of Science and Technology, Taiwan, under Grant MOST-104-2221-E-007-071-MY3.

Ethics declarations

Conflict of interest

All authors declare that they have no conflicts of interest regarding the publication of this manuscript.

Additional information

Communicated by V. Loia.

About this article

Cite this article

Su, YY., Sun, HM. Emotion-based color transfer of images using adjustable color combinations. Soft Comput 23, 1007–1020 (2019). https://doi.org/10.1007/s00500-017-2814-1

DOI: https://doi.org/10.1007/s00500-017-2814-1