Abstract

A high-level understanding of the surrounding context of an image is indispensable for visual question answering (VQA) when the questions are difficult. Previous studies address this issue by modeling object-level visual content and transforming the internal relationships between objects into a graph or tree. However, this approach has two shortcomings. First, it still leaves a gap between the language and vision modalities. Second, it ignores the abstract-level content of images and the meaning of the relationships between objects. This paper proposes a question-relationship guided graph attention network (QRGAT) that learns a new representation of an image's visual features under the guidance of the question and the explicit internal relationships between objects. Specifically, to narrow the gap between modalities, visual regions are represented as the combination of their attributes and visual features. Meanwhile, semantic relationships are transformed into the language modality and used to form updated visual features. Three graph encoders with diverse relationships are used to capture high-level features of images. Experimental results on the VQA 2.0 dataset show that the proposed QRGAT outperforms other interpretable visual context structures.
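To make the idea of question- and relationship-guided attention over a region graph more concrete, the following is a minimal NumPy sketch. It is not the authors' implementation: the function name `question_guided_graph_attention`, the additive combination of visual and attribute features, the additive use of relation embeddings, and all dimensions are assumptions chosen only for illustration.

```python
import numpy as np


def softmax(x, axis=-1):
    # Numerically stable softmax along the given axis.
    x = x - x.max(axis=axis, keepdims=True)
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)


def question_guided_graph_attention(regions, relations, adjacency, question,
                                    W_q, W_k, W_v):
    """One attention pass over a region graph (illustrative assumption).

    regions:   (n, d) node features, here visual features plus attribute embeddings
    relations: (n, n, d) embeddings of the relation label on edge i -> j
    adjacency: (n, n) boolean mask, True where an edge (or self-loop) exists
    question:  (d,) question embedding
    """
    n, d = regions.shape
    # Condition every node on the question (simple additive fusion, an assumption).
    nodes = regions + question
    queries = nodes @ W_q                                  # (n, d)
    # Neighbour j as seen from node i also carries the relation embedding.
    neighbours = nodes[None, :, :] + relations             # (n, n, d)
    keys = neighbours @ W_k                                # (n, n, d)
    values = neighbours @ W_v                              # (n, n, d)
    scores = np.einsum("id,ijd->ij", queries, keys) / np.sqrt(d)
    # Mask out pairs that are not connected in the graph.
    scores = np.where(adjacency, scores, -1e9)
    weights = softmax(scores, axis=1)                      # (n, n)
    updated = np.einsum("ij,ijd->id", weights, values)     # (n, d)
    return updated, weights


# Toy usage with random data: 4 regions, 8-dimensional features.
rng = np.random.default_rng(0)
n, d = 4, 8
visual = rng.normal(size=(n, d))
attributes = rng.normal(size=(n, d))
regions = visual + attributes          # combine attributes and visual features
relations = rng.normal(size=(n, n, d))
question = rng.normal(size=d)
adjacency = np.eye(n, dtype=bool)      # self-loops keep every row non-empty
adjacency[0, 1] = adjacency[1, 2] = adjacency[3, 0] = True
W_q, W_k, W_v = (rng.normal(size=(d, d)) for _ in range(3))

updated, weights = question_guided_graph_attention(
    regions, relations, adjacency, question, W_q, W_k, W_v
)
```

Each row of `weights` is a distribution over the neighbours of one region, so unconnected pairs contribute nothing to the updated features. Running one such encoder per relationship type and combining the outputs would be one plausible reading of the "three graph encoders" mentioned above, but that combination step is not specified in the abstract.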

References

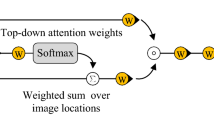

Anderson, P., He, X., Buehler, C., Teney, D., Johnson, M., Gould, S., Zhang, L.: Bottom-up and top-down attention for image captioning and visual question answering. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 6077–6086 (2018)

Battaglia, P.W., Hamrick, J.B., Bapst, V., Sanchez-Gonzalez, A., Zambaldi, V., Malinowski, M., Tacchetti, A., Raposo, D., Santoro, A., Faulkner, R., et al.: Relational inductive biases, deep learning, and graph networks. arXiv:1806.01261 (2018)

Ben-Younes, H., Cadene, R., Cord, M., Thome, N.: Mutan: Multimodal tucker fusion for visual question answering. 2017 IEEE International Conference on Computer Vision (ICCV), pp. 2631–2639 (2017)

Cadene, R., Ben-Younes, H., Cord, M., Thome, N.: Murel: Multimodal relational reasoning for visual question answering. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1989–1998 (2019)

Dai, B., Zhang, Y., Lin, D.: Detecting visual relationships with deep relational networks. 2017 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 3298–3308 (2017)

Gkioxari, G., Girshick, R., Dollár, P., He, K.: Detecting and recognizing human-object interactions. 2018 IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 8359–8367,18-23 (2018)

Hudson, D.A., Manning, C.D.: Learning by abstraction: the neural state machine. Conference on Neural Information Processing Systems, pp. 5871–5884 (2019)

Kim, J.H., Jun, J., Zhang, B.T.: Bilinear attention networks. 32nd Conference on Neural Information Processing Systems (NeurlPS), pp. 1564–1574 (2018)

Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. arXiv preprint arXiv:1412.6980 (2014)

Li, L., Gan, Z., Cheng, Y., Liu, J.: Relation-aware graph attention network for visual question answering. 2019 IEEE/CVF International Conference on Computer Vision (ICCV), pp. 1031–10321 (2019)

Liang, J., Jiang, I., Cao, L., Kalantidis, Y., Li, L.J., Hauptmann, A.G.: Focal visual-text attention for memex question answering. IEEE Trans. Pattern Anal. Mach. Intell. 41, 1 (2019)

Lin, Z., Feng, M., Santos, C.N.d., Yu, M., Xiang, B., Zhou, B., Bengio, Y.: A structured self-attentive sentence embedding. arXiv preprint arXiv:1703.03130 (2017)

Lu, C., Krishna, R., Bernstein, M., Fei-Fei, L.: Visual relationship detection with language priors. ECCV 2016:14th European Conference on Computer Vision, pp. 852–869 (2016)

Lu, J., Yang, J., Batra, D., Parikh, D.: Hierarchical question-image co-attention for visual question answering. 30th Annual Conference on Neural Information Processing Systems (NeurlPS), pp. 289–297(2016)

Luong, M.T., Pham, H., Manning, C.D.: Effective approaches to attention-based neural machine translation. arXiv preprint arXiv:1508.04025 (2015)

Noh, H., Kim, T., Mun, J., Han, B.: Transfer learning via unsupervised task discovery for visual question answering. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 8377–8386 (2019)

Norcliffe-Brown, W., Vafeias, S., Parisot, S.: Learning conditioned graph structures for interpretable visual question answering. 32nd Conference on Neural Information Processing Systems (NeurlPS), pp. 8834–9343(2018)

Peng, G., Jiang, Z., You, H., Lu, P., Hoi, S., Wang, X., Li, H.: Dynamic fusion with intra-and inter-modality attention flow for visual question answering. arXiv:1812.05252 (2018)

Remi, C., Hedi, B.-Y., Cord, M., Thome, N.: Murel: multimodal relational reasoning for visual question answering. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1989–1998 (2019)

Ren, S., He, K., Girshick, R., Sun, J.: Faster r-cnn: Towards real-time object detection with region proposal networks. IEEE Transactions on Pattern Analysis and Machine Intelligence, vol. 39, no. 6, pp. 1137–1149 (2017)

Shaw, P., Uszkoreit, J., Vaswani, A.: Self-attention with relative position representations. arXiv preprint arXiv:1803.02155 (2018)

Shih, K.J., Singh, S., Hoiem, D.: Where to look: Focus regions for visual question answering. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 4613–4621 (2016)

Singh, A., Natarajan, V., Shah, M., Jiang, Y., Chen, X., Batra, D., Parikh, D., Rohrbach, M.: Towards vqa models that can read. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 8309–8318 (2019)

Tang, K., Zhang, H., Wu, B., Luo, W., Liu, W.: Learning to compose dynamic tree structures for visual contexts. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 6612–6621 (2019)

Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A.N., Kaiser, Ł., Polosukhin, I.: Attention is all you need. 31st International Conference on Neural Information Processing Systems, vol. 30, pp. 5998–6008 (2017)

Veličković, P., Cucurull, G., Casanova, A., Romero, A., Lio, P., Bengio, Y.: Graph attention networks. 2018 International Conference on Learning Representations (2018)

Wu, C., Liu, J., Wang, X., Dong, X.: Chain of reasoning for visual question answering. 32nd International Conference on Neural Information Processing Systems, vol. 31, pp. 275–285 (2018)

Wu, Z., Pan, S., Chen, F., Long, G., Zhang, C., Yu, P.S.: A comprehensive survey on graph neural networks. IEEE Transactions on Neural Networks and Learning Systems, vol. 32, no. 1, pp. 4–24 (2020)

Xu, K., Ba, J., Kiros, R., Cho, K., Courville, A., Salakhudinov, R., Zemel, R., Bengio, Y.: Show, attend and tell: neural image caption generation with visual attention. 32nd International conference on machine learning (ICML), volume 3 of 3, pp. 477–499 (2015)

Xu, K., Wang, Z., Shi, J., Li, H., Zhang, Q.C.: A2-net: molecular structure estimation from cryo-em density volumes. Proc. AAAI Conf. Artif. Intell. 33, 1230–1237 (2019)

Yao, T., Pan, Y., Li, Y., Mei, T.: Exploring visual relationship for image captioning. ECCV 2018: 15th European Conference on Computer Vision, pp. 711–727 (2018)

Yi, K., Wu, J., Gan, C., Torralba, A., Kohli, P., Tenenbaum, J.: Neural-symbolic vqa: disentangling reasoning from vision and language understanding. Advances in Neural Information Processing Systems, vol. 31, 2018, pp. 1031–1042 (2017)

Yu, Z., Xu, D., Yu, J., Yu, T., Zhao, Z., Zhuang, Y., Tao, D.: Activitynet-qa: a dataset for understanding complex web videos via question answering. Proceedings of the AAAI Conference on Artificial Intelligence, vol. 33, no. 1, pp. 9127–9134 (2019)

Yu, Z., Yu, J., Cui, Y., Tao, D., Tian, Q.: Deep modular co-attention networks for visual question answering. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 6274–6283 (2019)

Yu, Z., Yu, J., Xiang, C., Fan, J., Tao, D.: Beyond bilinear: generalized multimodal factorized high-order pooling for visual question answering. IEEE Trans. Neural Netw. Learn. Syst. 29(12), 5947–5959 (2018)

Zhang, C., Chao, W.L., Xuan, D.: An empirical study on leveraging scene graphs for visual question answering. 2018 British Machine Vision Conference (BMVC), p. 288 (2018)

Zhang, J., Shih, K.J., Elgammal, A., Tao, A., Catanzaro, B.: Graphical contrastive losses for scene graph parsing. 2019 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 11527–11535 (2019)

Yang, Z., He, X., J.L.A.: Stacked attention networks for image question answering. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 21–29 (2016)

Acknowledgements

This work was supported in part by grants to Dr. Liansheng Zhuang from NSFC under contract Nos. U20B2070 and 61976199, and in part by grants to Dr. Houqiang Li from NSFC under contract No. 61836011 and from the Next Generation AI Project of China under No. 2018AAA0100602.

About this article

Cite this article

Liu, R., Zhuang, L., Yu, Z. et al. Question-relationship guided graph attention network for visual question answer. Multimedia Systems 28, 445–456 (2022). https://doi.org/10.1007/s00530-020-00745-7

DOI: https://doi.org/10.1007/s00530-020-00745-7