Abstract

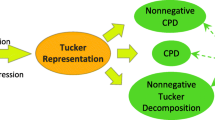

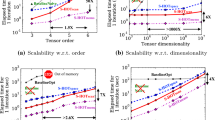

How can we analyze tensors that are composed of 0’s and 1’s? How can we efficiently analyze such Boolean tensors with millions or even billions of entries? Boolean tensors often represent relationship, membership, or occurrences of events such as subject–relation–object tuples in knowledge base data (e.g., ‘Seoul’-‘is the capital of’-‘South Korea’). Boolean tensor factorization (BTF) is a useful tool for analyzing binary tensors to discover latent factors from them. Furthermore, BTF is known to produce more interpretable and sparser results than normal factorization methods. Although several BTF algorithms exist, they do not scale up for large-scale Boolean tensors. In this paper, we propose DBTF, a distributed method for Boolean CP (DBTF-CP) and Tucker (DBTF-TK) factorizations running on the Apache Spark framework. By distributed data generation with minimal network transfer, exploiting the characteristics of Boolean operations, and with careful partitioning, DBTF successfully tackles the high computational costs and minimizes the intermediate data. Experimental results show that DBTF-CP decomposes up to 163–323 \(\times \) larger tensors than existing methods in 82–180 \(\times \) less time, and DBTF-TK decomposes up to 83–163 \(\times \) larger tensors than existing methods in 86–129 \(\times \) less time. Furthermore, both DBTF-CP and DBTF-TK exhibit near-linear scalability in terms of tensor dimensionality, density, rank, and machines.

Similar content being viewed by others

References

Kolda, T.G., Bader, B.W.: Tensor decompositions and applications. SIAM Rev. 51(3), 455–500 (2009)

Erdős, D., Miettinen, P.: Walk ’n’ merge: a scalable algorithm for Boolean tensor factorization. In: ICDM, pp. 1037–1042 (2013)

Miettinen, P.: Boolean tensor factorizations. In: ICDM (2011)

Erdős, D., Miettinen, P.: Discovering facts with Boolean tensor tucker decomposition. In: CIKM, pp. 1569–1572 (2013)

Metzler, S., Miettinen, P.: Clustering Boolean tensors. DMKD 29(5), 1343–1373 (2015)

Belohlávek, R., Glodeanu, C.V., Vychodil, V.: Optimal factorization of three-way binary data using triadic concepts. Order 30(2), 437–454 (2013)

Leenen, I., Van Mechelen, I., De Boeck, P., Rosenberg, S.: INDCLAS: a three-way hierarchical classes model. Psychometrika 64(1), 9–24 (1999)

Zaharia, M., Chowdhury, M., Das, T., Dave, A., Ma, J., McCauly, M., Franklin, M.J., Shenker, S., Stoica, I.: Resilient distributed datasets: a fault-tolerant abstraction for in-memory cluster computing. In: NSDI, pp. 15–28 (2012)

Park, N., Oh, S., Kang, U.: Fast and scalable distributed Boolean tensor factorization. In: ICDE, pp. 1071–1082 (2017)

Cerf, L., Besson, J., Robardet, C., Boulicaut, J.: Closed patterns meet n-ary relations. TKDD 3(1), 3 (2009)

Ji, L., Tan, K., Tung, A.K.H.: Mining frequent closed cubes in 3D datasets. In: VLDB, pp. 811–822 (2006)

Kang, U., Papalexakis, E.E., Harpale, A., Faloutsos, C.: Gigatensor: scaling tensor analysis up by 100 times—algorithms and discoveries. In: KDD, pp. 316–324 (2012)

Jeon, B., Jeon, I., Sael, L., Kang, U.: Scout: scalable coupled matrix-tensor factorization—algorithm and discoveries. In: ICDE, pp. 811–822 (2016)

Jeon, I., Papalexakis, E.E., Faloutsos, C., Sael, L., Kang, U.: Mining billion-scale tensors: algorithms and discoveries. VLDB J. 25(4), 519–544 (2016)

Sael, L., Jeon, I., Kang, U.: Scalable tensor mining. Big Data Res. 2(2), 82–86 (2015). (visions on Big Data)

Park, N., Jeon, B., Lee, J., Kang, U.: Bigtensor: mining billion-scale tensor made easy. In: CIKM, pp. 2457–2460 (2016)

Beutel, A., Talukdar, P.P., Kumar, A., Faloutsos, C., Papalexakis, E.E., Xing, E.P.: Flexifact: scalable flexible factorization of coupled tensors on hadoop. In: SDM, pp. 109–117 (2014)

Papalexakis, E.E., Faloutsos, C., Sidiropoulos, N.D.: Parcube: sparse parallelizable tensor decompositions. In: ECML PKDD, pp. 521–536 (2012)

Li, J., Choi, J., Perros, I., Sun, J., Vuduc, R.: Model-driven sparse CP decomposition for higher-order tensors. In: IPDPS (2017)

Smith, S., Park, J., Karypis, G.: An exploration of optimization algorithms for high performance tensor completion. In: SC (2016)

Karlsson, L., Kressner, D., Uschmajew, A.: Parallel algorithms for tensor completion in the CP format. Parallel Comput. 57, 222–234 (2016)

Shin, K., Sael, L., Kang, U.: Fully scalable methods for distributed tensor factorization. TKDE 29(1), 100–113 (2017)

Lathauwer, L.D., Moor, B.D., Vandewalle, J.: On the best rank-1 and rank-(\(R_{1},R_{2},\ldots, R_{N}\)) approximation of higher-order tensors. SIMAX 21(4), 1324–1342 (2000)

Kolda, T.G., Sun, J.: Scalable tensor decompositions for multi-aspect data mining. In: ICDM, pp. 363–372 (2008)

Oh, J., Shin, K., Papalexakis, E.E., Faloutsos, C., Yu, H.: S-hot: scalable high-order tucker decomposition. In: WSDM (2017)

Smith, S., Karypis, G.: Accelerating the tucker decomposition with compressed sparse tensors. In: Europar (2017)

Kaya, O., Uçar, B.: High performance parallel algorithms for the tucker decomposition of sparse tensors. In: ICPP (2016)

Oh, S., Park, N., Sael, L., Kang, U.: Scalable tucker factorization for sparse tensors—algorithms and discoveries. In: ICDE (2018)

Chakaravarthy, V.T., Choi, J.W., Joseph, D.J., Liu, X., Murali, P., Sabharwal, Y., Sreedhar, D.: On optimizing distributed tucker decomposition for dense tensors (2017). CoRR arXiv:1707.05594

Choi, J.H., Vishwanathan, S.: Dfacto: distributed factorization of tensors. In: NIPS (2014)

Kaya, O., Uçar, B.: Scalable sparse tensor decompositions in distributed memory systems. In: SC, pp. 1–11 (2015)

Austin, W., Ballard, G., Kolda, T.G.: Parallel tensor compression for large-scale scientific data. In: IPDPS (2016)

Smith, S., Karypis, G.: A medium-grained algorithm for distributed sparse tensor factorization. In: IPDPS (2016)

Acer, S., Torun, T., Aykanat, C.: Improving medium-grain partitioning for scalable sparse tensor decomposition. IEEE Trans. Parallel Distrib. Syst. 29, 2814–2825 (2018)

Dean, J., Ghemawat, S.: Mapreduce: simplified data processing on large clusters. In: OSDI, pp. 137–150 (2004)

Apache hadoop. http://hadoop.apache.org/

Kang, U., Tsourakakis, C.E., Faloutsos, C.: PEGASUS: a peta-scale graph mining system. In: ICDM, pp. 229–238 (2009)

Park, H.-M., Park, N., Myaeng, S.-H., Kang, U.: Partition aware connected component computation in distributed systems. In: ICDM (2016)

Park, H.-M., Myaeng, S.-H., Kang, U.: Pte: enumerating trillion triangles on distributed systems. In: KDD, pp. 1115–1124 (2016)

Kang, U., Tong, H., Sun, J., Lin, C., Faloutsos, C.: GBASE: a scalable and general graph management system. In: KDD

Kalavri, V., Vlassov, V.: Mapreduce: limitations, optimizations and open issues. In: TrustCom, pp. 1031–1038 (2013)

Zaharia, M., Chowdhury, M., Franklin, M.J., Shenker, S., Stoica, I.: Spark: cluster computing with working sets. In: HotCloud (2010)

Lulli, A., Ricci, L., Carlini, E., Dazzi, P., Lucchese, C.: Cracker: crumbling large graphs into connected components. In: ISCC, pp. 574–581 (2015)

Wiewiórka, M.S., Messina, A., Pacholewska, A., Maffioletti, S., Gawrysiak, P., Okoniewski, M.J.: Sparkseq: fast, scalable and cloud-ready tool for the interactive genomic data analysis with nucleotide precision. Bioinformatics 30(18), 2652–2653 (2014)

Gu, R., Tang, Y., Wang, Z., Wang, S., Yin, X., Yuan, C., Huang, Y.: Efficient large scale distributed matrix computation with spark. In: IEEE BigData, pp. 2327–2336 (2015)

Zadeh, R.B., Meng, X., Ulanov, A., Yavuz, B., Pu, L., Venkataraman, S., Sparks, E.R., Staple, A., Zaharia, M.: Matrix computations and optimization in apache spark. In: KDD, pp. 31–38 (2016)

Kim, H., Park, J., Jang, J., Yoon, S.: Deepspark: spark-based deep learning supporting asynchronous updates and caffe compatibility (2016). CoRR arXiv:1602.08191

Miettinen, P., Mielikäinen, T., Gionis, A., Das, G., Mannila, H.: The discrete basis problem. TKDE 20(10), 1348–1362 (2008)

Acknowledgements

This work was supported by the National Research Foundation of Korea (NRF) funded by the Ministry of Science, ICT and Future Planning (NRF-2016M3C4A7952587, PF Class Heterogeneous High Performance Computer Development). The ICT at Seoul National University provides research facilities for this study. The Institute of Engineering Research at Seoul National University provided research facilities for this work.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Proofs

Proofs

1.1 Proof of Lemma 4

Proof

Algorithm 3 is composed of three operations: (1) partitioning (lines 1–3), (2) initialization (line 6), and (3) updating factor matrices (lines 7 and 10).

-

(1)

After unfolding an input tensor \(\varvec{\mathscr {X}}\) into \(\mathbf {X}\), DBTF-CP splits \(\mathbf {X}\) into N partitions, and further divides each partition into a set of blocks (Algorithm 4). Unfolding takes \(O(|\varvec{\mathscr {X}}|)\) time as each entry can be mapped in constant time (Eq. (1)), and partitioning takes \(O(|\mathbf {X}|)\) time since determining which partition and block an entry of \(\mathbf {X}\) belongs to is also a constant-time operation. It takes \(O(|\varvec{\mathscr {X}}|)\) time in total.

-

(2)

Random initialization of factor matrices takes O(IR) time.

-

(3)

The update of a factor matrix (Algorithm 5) consists of the following steps (i, ii, iii, and iv):

-

i.

Caching row summations of a factor matrix (line 1). By Lemma 2, the number of cache tables is \(\lceil R/V \rceil \), and the maximum size of a single cache table is \(2^{\lceil R / \lceil R/V \rceil \rceil }\). Each row summation can be obtained in O(I) time via incremental computations that use prior row summation results. Hence, caching row summations for N partitions takes \(O(N\left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil } I)\).

-

ii.

Fetching cached row summations (lines 7–8). The number of constructing row summations and computing errors to update a factor matrix is 2IR. An entire row summation is constructed by fetching row summations from the cache tables \(O(\max (I,N))\) times across N partitions. If \(R\!\le \!V\), a row summation can be constructed by a single access to the cache. If \(R\!>\!V\), multiple accesses are required to fetch row summations from \(\left\lceil \frac{R}{V} \right\rceil \) tables. Also, constructing a cache key requires \(O(\min (V,R))\) time. Thus, fetching a cached row summation takes \(O(\left\lceil \frac{R}{V} \right\rceil \min (V,R)\)\(\max (I,N))\) time. When \(R\!>\!V\), there is an additional cost to sum up \(\left\lceil \frac{R}{V} \right\rceil \) row summations, which is \(O((\left\lceil \frac{R}{V} \right\rceil - 1) I^{2})\). In total, the time complexity for this step is O(IR\(\big [\left\lceil \frac{R}{V} \right\rceil \min (V,R) \max (I,N) + (\left\lceil \frac{R}{V} \right\rceil - 1) I^{2} \big ])\). Simplifying terms, we get \( O(I^3 R \left\lceil \frac{R}{V} \right\rceil )\).

-

iii.

Computing the error for the fetched row summation (line 9). It takes \(O(I^{2})\) time to calculate an error of one row summation with regard to the corresponding row of the unfolded tensor. For each column entry, DBTF-CP constructs row summations (\(\mathbf {a}_{r:} \boxtimes (\mathbf {M}_{f} \odot \mathbf {M}_{s})^{\top }\) in Algorithm 5) twice (for \(a_{rc}\!=\!0~\text {and}~1\)). Therefore, given a rank R, this step takes \(O(I^{3} R)\) time.

-

iv.

Updating a factor matrix (lines 10–14). Updating an entry in a factor matrix requires summing up errors for each value collected from N partitions, which takes O(N) time. Updating all entries takes O(NIR) time. In case the percentage of zeros in the column being updated is greater than Z, an additional step is performed to make the sparsity of the column less than Z, which takes \(O(I \log (I))\) time as all 2I values may need to be fetched in the order of increasing error in the worst case. Since we have R columns, this additional step takes \( O(R I \log (I)) \) in total. Thus, step iv takes \(O(N I R + R I \log (I))\) time.

After simplifying terms, DBTF-CP ’s time complexity is \( O\big (T I^3 R \left\lceil \frac{R}{V} \right\rceil + T N \left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil } I \big )\). At each iteration, the dominating term is \( O(I^3 R) \) that comes from fetching row summation and calculating its error (steps ii and iii), which is an \( O(I^2) \) operation that is performed 2IR times. Note that the worst-case time complexity for this error calculation is \( O(I^2) \) even when the input tensor is sparse because the time for this operation depends not only on the nonzeros in the row of an input tensor, but also on the nonzeros in the corresponding row of the intermediate matrix product (e.g., \( (\mathbf {C} \odot \mathbf {B})^\top \)), which could be full of nonzeros in the worst case. However, given sparse tensors in practice, factor matrices are updated to be sparse such that the reconstructed tensor gets closer to the sparse input tensor, which makes the time required for the dominating operation much less than \( O(I^2) \).

1.2 Proof of Lemma 5

Proof

For the decomposition of an input tensor \(\varvec{\mathscr {X}} \in \mathbb {B}^{I \times I \times I}\), DBTF-CP stores the following four types of data in memory at each iteration: (1) partitioned unfolded input tensors \({_{p}\mathbf {X}_{(1)}}, {_{p}\mathbf {X}_{(2)}}\), and \({_{p}\mathbf {X}_{(3)}}\), (2) row summation results, (3) factor matrices \(\mathbf {A}, \mathbf {B}\), and \(\mathbf {C}\), and (4) errors for the entries of a column being updated.

-

(1)

While partitioning of an unfolded tensor by DBTF-CP structures it differently from the original one, the total number of elements does not change after partitioning. Thus, \({_{p}\mathbf {X}_{(1)}}, {_{p}\mathbf {X}_{(2)}}\), and \({_{p}\mathbf {X}_{(3)}}\) require \(O(|\varvec{\mathscr {X}}|)\) memory.

-

(2)

By Lemma 2, the total number of row summations of a factor matrix is \(O(\left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil })\). By Lemma 3, each partition has at most three types of blocks. Since an entry in the cache table uses O(I) space, the total amount of memory used for row summation results is \(O(N I \left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil })\). Note that since Boolean factor matrices are normally sparse, many cached row summations are not normally dense. Therefore, the actual amount of memory used is usually smaller than the stated upper bound.

-

(3)

Since \(\mathbf {A}, \mathbf {B},\) and \(\mathbf {C}\) are broadcast to each machine, they require O(MRI) memory in total.

-

(4)

Each partition stores two errors for the entries of the column being updated, which takes O(NI) memory.

1.3 Proof of Lemma 6

Proof

DBTF-CP unfolds an input tensor \(\varvec{\mathscr {X}}\) into three different modes, \(\mathbf {X}_{(1)}\), \(\mathbf {X}_{(2)}\), and \(\mathbf {X}_{(3)}\), and then partitions each one: unfolded tensors are shuffled across machines so that each machine has a specific range of consecutive columns of unfolded tensors. In the process, the entire data can be shuffled, depending on the initial distribution of the data. Thus, the amount of data shuffled for partitioning \(\varvec{\mathscr {X}}\) is \(O(|\varvec{\mathscr {X}}|)\).

1.4 Proof of Lemma 7

Proof

Once the three unfolded input tensors \(\mathbf {X}_{(1)}\), \(\mathbf {X}_{(2)}\), and \(\mathbf {X}_{(3)}\) are partitioned, they are cached across machines, and are not shuffled. In each iteration, DBTF-CP broadcasts three factor matrices \(\mathbf {A}\), \(\mathbf {B}\), and \(\mathbf {C}\) to each machine, which takes O(MRI) space in sum. With only these three matrices, each machine generates the part of row summation it needs to process. Also, in updating a factor matrix of size I-by-R, DBTF-CP collects from all partitions the errors for both cases of when each entry of the factor matrix is set to 0 and 1. This process involves transmitting 2IR errors from each partition to the driver node, which takes O(NIR) space in total. Accordingly, the total amount of data shuffled for T iterations after partitioning \(\varvec{\mathscr {X}}\) is \(O(TRI(M + N))\).

1.5 Proof of Lemma 8

Proof

Algorithm 8 is composed of four operations: (1) partitioning (lines 1–4), (2) initialization (lines 7–8), (3) updating factor matrices (lines 10 and 14), and (4) updating a core tensor (lines 9 and 13).

-

(1)

Partitioning of an input tensor \(\varvec{\mathscr {X}}\) into \({_{p}\mathbf {X}_{(1)}}, {_{p}\mathbf {X}_{(2)}}\), and \({_{p}\mathbf {X}_{(3)}}\) (lines 1–3) takes \(O(|\varvec{\mathscr {X}}|)\) time as in DBTF-CP. Similarly, partitioning of \(\varvec{\mathscr {X}}\) into \({_{p}\varvec{\mathscr {X}}}\) (line 4) takes \(O(|\varvec{\mathscr {X}}|)\) time since determining which partition an entry of \(\varvec{\mathscr {X}}\) belongs to can be done in constant time.

-

(2)

Randomly initializing factor matrices and a core tensor takes O(IR) and \(O(R^3)\) time, respectively.

-

(3)

The update of a factor matrix (Algorithm 10) consists of the following steps (i, ii, iii, and iv):

-

i.

Caching row summations (lines 1–2). Caching row summations of a factor matrix takes \(O(N\left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil } I)\) as in DBTF-CP. Caching row summations of an unfolded core tensor requires \(O(\left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil } R^2)\) as a single row summation can be computed in \(O(R^2)\) time. Assuming \( R^2 \le I \), this step requires \(O(N\left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil } I)\) time.

-

ii.

Fetching cached row summations (lines 9–10). (a) Row summations of an unfolded core tensor are fetched O(IR) times in each partition. If \(R\!\le \!V\), a row summation can be obtained with one access to the cache. If \(R\!>\!V\), multiple accesses are required to fetch row summations from \(\left\lceil \frac{R}{V} \right\rceil \) tables, and there is an additional cost to sum up \(\left\lceil \frac{R}{V} \right\rceil \) row summations. In sum, this operation takes \(O(N I R \big [\left\lceil \frac{R}{V} \right\rceil + (\left\lceil \frac{R}{V} \right\rceil - 1) R^{2} \big ])\) time. (b) Fetching a cached row summation of a factor matrix is identical to that in DBTF-CP, except for the computation of cache key, which takes \(O(R^2)\) time. Therefore, this operation takes \(O(I R \big [\left\lceil \frac{R}{V} \right\rceil R^2\)\(\max (I,N) + (\left\lceil \frac{R}{V} \right\rceil - 1) I^{2} \big ])\) in total. Simplifying (a) and (b) under the assumption that \( R^2 \le I \) and \( \max (I,N) = I \), the time complexity for this step reduces to \( O(I^3 R \left\lceil \frac{R}{V} \right\rceil )\).

-

iii.

Computing the error for the fetched row summation (line 11). This step takes the same time as in DBTF-CP, which is \(O(I^{3} R)\).

-

iv.

Updating a factor matrix (lines 12–16). This step takes the same time as in DBTF-CP, which is \(O(N I R + R I \log (I))\).

-

i.

-

(4)

For the update of a core tensor (Algorithm 11), two operations are repeatedly performed for each core tensor entry. First, rowwise sum of entries in factor matrices are computed (line 3), which takes O(IR) time. Second, DBTF-TK determines whether flipping the core tensor entry would improve accuracy (lines 4–25). This step takes \( O(I^3) \) time in the worst case when the factor matrices are full of nonzeros. In sum, it takes \( O (I^3 R^3) \) to update a core tensor.

In sum, DBTF-TK takes \( O\big (T I^3 R^3 + T N \left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil } I \big )\) time.

1.6 Proof of Lemma 9

Proof

In order to decompose an input tensor \(\varvec{\mathscr {X}} \in \mathbb {B}^{I \times I \times I}\), DBTF-TK stores the following five types of data in memory at each iteration: (1) partitioned input tensors \({_{p}\mathbf {X}_{(1)}}, {_{p}\mathbf {X}_{(2)}}\), \({_{p}\mathbf {X}_{(3)}}\), and \({_{p}\varvec{\mathscr {X}}}\), (2) row summation results, (3) a core tensor \(\varvec{\mathscr {G}}\), (4) factor matrices \(\mathbf {A}, \mathbf {B}\), and \(\mathbf {C}\), and (5) errors for the entries of a column being updated.

-

(1)

Since partitioning does not change the total number of elements, \({_{p}\mathbf {X}_{(1)}}, {_{p}\mathbf {X}_{(2)}}, {_{p}\mathbf {X}_{(3)}}\), and \({_{p}\varvec{\mathscr {X}}}\) require \(O(|\varvec{\mathscr {X}}|)\) memory.

-

(2)

Two types of row summation results are maintained in DBTF-TK: the first for the factor matrix (e.g., \(\mathbf {B}^{\top }\)), and the second for the unfolded core tensors (e.g., \(\mathbf {G}_{(1)}\)). Note that, given R number of rows, the total number of row summations to be cached is \(O(\left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil })\) by Lemma 2. First, the cache tables for the factor matrix are the same as those used in DBTF-CP; thus, they use \(O(N I \left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil })\) memory. Second, across M machines, the cache tables for the unfolded core tensor require \(O(M R^2 \left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil })\) as a single entry uses \(O(R^2)\) space. Assuming \( R^2 \le I \), \(O((N + M) I \left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil })\) memory is required in total for row summation results.

-

(3)

Since the core tensor \(\varvec{\mathscr {G}}\) is broadcast to each machine, \(O(M R^3)\) is required.

-

(4)

Factor matrices require O(MRI) memory as in DBTF-CP.

-

(5)

O(NI) memory is required since each partition stores two errors for each entry of the column being updated as in DBTF-CP.

1.7 Proof of Lemma 10

Proof

In DBTF-TK, an input tensor \(\varvec{\mathscr {X}}\) is partitioned in four different ways, where the first three are \({_{p}\mathbf {X}_{(1)}}, {_{p}\mathbf {X}_{(2)}}\), and \({_{p}\mathbf {X}_{(3)}}\) that are used for updating factor matrices, and the last one is \({_{p}\varvec{\mathscr {X}}}\) that is used for updating a core tensor. Each machine is assigned non-overlapping partitions of the input tensor. The entire data can be shuffled in the worst case, depending on the data distribution. Thus, the total amount of data shuffled for partitioning \(\varvec{\mathscr {X}}\) is \(O(|\varvec{\mathscr {X}}|)\).

1.8 Proof of Lemma 11

Proof

As in DBTF-CP, partitioned input tensors \({_{p}\mathbf {X}_{(1)}}\), \({_{p}\mathbf {X}_{(2)}}\), \({_{p}\mathbf {X}_{(3)}}\), and \({_{p}\varvec{\mathscr {X}}}\) are shuffled only once in the beginning. After that, DBTF-TK performs data shuffling at each iteration in order to update (1) factor matrices \(\mathbf {A}\), \(\mathbf {B}\), and \(\mathbf {C}\), and (2) a core tensor \(\varvec{\mathscr {G}}\).

-

(1)

In updating factor matrices, DBTF-TK uses all data used in DBTF-CP, which is \(O(TRI(M + N))\). Also, DBTF-TK broadcasts the tables containing the combinations of row summations of three unfolded core tensors (\(\mathbf {G}_{(\mathbf {1})}, \mathbf {G}_{(\mathbf {2})}\), and \(\mathbf {G}_{(\mathbf {3})}\)) to each machine at every iteration. Since, given R, the total number of row summations to be cached is \(O(\left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil })\), and each row summation uses \(O(R^2)\) space, broadcasting these tables overall requires \(O(TMR^2\)\(\left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil })\).

-

(2)

DBTF-TK broadcasts the rowwise sum of entries in factor matrices to each machine when a core tensor \(\varvec{\mathscr {G}}\) is updated, which takes \(O(M I R^3)\) space in each iteration. Also, in updating an element of \(\varvec{\mathscr {G}}\), DBTF-TK aggregates partial gains computed from each partition, which requires \(O(N R^3)\) for each iteration.

Accordingly, the total amount of data shuffled for T iterations after partitioning \(\varvec{\mathscr {X}}\) is \(O(TRI(M + N) + TR^3(MI + N) + TMR^2 \left\lceil \frac{R}{V} \right\rceil 2^{\lceil R / \lceil R/V \rceil \rceil })\).

Rights and permissions

About this article

Cite this article

Park, N., Oh, S. & Kang, U. Fast and scalable method for distributed Boolean tensor factorization. The VLDB Journal 28, 549–574 (2019). https://doi.org/10.1007/s00778-019-00538-z

Received:

Revised:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s00778-019-00538-z