Abstract

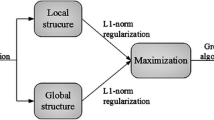

It is difficult to find the optimal sparse solution of a manifold learning based dimensionality reduction algorithm. The lasso or the elastic net penalized manifold learning based dimensionality reduction is not directly a lasso penalized least square problem and thus the least angle regression (LARS) (Efron et al., Ann Stat 32(2):407–499, 2004), one of the most popular algorithms in sparse learning, cannot be applied. Therefore, most current approaches take indirect ways or have strict settings, which can be inconvenient for applications. In this paper, we proposed the manifold elastic net or MEN for short. MEN incorporates the merits of both the manifold learning based dimensionality reduction and the sparse learning based dimensionality reduction. By using a series of equivalent transformations, we show MEN is equivalent to the lasso penalized least square problem and thus LARS is adopted to obtain the optimal sparse solution of MEN. In particular, MEN has the following advantages for subsequent classification: (1) the local geometry of samples is well preserved for low dimensional data representation, (2) both the margin maximization and the classification error minimization are considered for sparse projection calculation, (3) the projection matrix of MEN improves the parsimony in computation, (4) the elastic net penalty reduces the over-fitting problem, and (5) the projection matrix of MEN can be interpreted psychologically and physiologically. Experimental evidence on face recognition over various popular datasets suggests that MEN is superior to top level dimensionality reduction algorithms.

Similar content being viewed by others

References

Belhumeur PN, Hespanha JP, Kriegman DJ (1997) Eigenfaces vs. fisherfaces: recognition using class specific linear projection. IEEE Trans Pattern Anal Mach Intell 19(7): 711–720

Belkin M, Niyogi P (2001) Laplacian eigenmaps and spectral techniques for embedding and clustering. Adv Neural Inf Process Syst 14: 585–591

Belkin M, Niyogi P, Sindhwani V (2006) Manifold regularization: a geometric framework for learning from labeled and unlabeled examples. J Mach Learn Res 7: 2399–2434

Bian W, Tao D (2008) Harmonic mean for subspace selection. In: IEEE ICPR, pp 1–4

Bishop CM, Williams CKI (1998) GTM: the generative topographic mapping. Neural Comput 10: 215–234

Cai D, He X, Han J (2007) Spectral regression for efficient regularized subspace learning. In: IEEE 11th international conference on computer vision, 2007. ICCV 2007, pp 1–8

Cai D, He X, Han J (2008) SRDA: an efficient algorithm for large-scale discriminant analysis. IEEE Trans Knowl Data Eng 20(1): 1–12

Candes E, Tao T (2005) The dantzig selector: statistical estimation when p is much larger than n. Ann Stat 35: 2392–2404

Candes EJ, Wakin MB, Boyd SP (2008) Enhancing sparsity by reweighted L1 minimization. Special issue on sparsity. J Fourier Anal Appl 14(5): 877–905

D’aspremont A, Ghaoui LE, Jordan MI, Lanckriet GRG (2007) A direct formulation for sparse PCA using semidefinite programming. SIAM Rev 49(3): 434–448

Ding C, Li T (2007) Adaptive dimension reduction using discriminant analysis and k-means clustering. In: ICML ’07: Proceedings of the 24th international conference on machine learning. ACM, New York, NY, USA, pp 521–528

Ding CHQ, Li T, Jordan MI (2008) Convex and semi-nonnegative matrix factorizations. IEEE Trans Pattern Anal Mach Intell 32(1): 45–55

Donoho DL, Grimes C (2003) Hessian eigenmaps: locally linear embedding techniques for high-dimensional data. PNAS 100(10): 5591–5596

Efron B, Hastie T, Johnstone I, Tibshirani R (2004) Least angle regression. Ann Stat 32(2): 407–499

Fan J, Li R (2001) Variable selection via nonconcave penalized likelihood and its oracle properties. J Am Stat Assoc 96: 1348–1360

Fan J, Lv J (2008) Sure independence screening for ultrahigh dimensional feature space. J R Stat Soc Ser B 70(5): 849–911

Fisher RA (1936) The use of multiple measurements in taxonomic problems. Ann Eugen 7(2): 179–188

Fyfe C (2007) Two topographic maps for data visualisation. Data Min Knowl Discov 14(2): 207–224

Golub GH, Van Loan CF (1996) Matrix computations. 3. Johns Hopkins University Press, Baltimore, MD

Graham DB, Allinson NM (1936) Characterizing virtual eigensignatures for general purpose face recognition. Face recognition: from theory to applications, NATO ASI series F. Comput Syst Sci 163: 446–456

Hastie T, Tibshirani R, Friedman J (2009) The elements of statistical learning: data mining, inference, and prediction, 2nd edn. Springer Series in Statistics, Springer, 2nd edn. corr. 3rd printing edn

He X, Niyogi P (2004) Locality preserving projections. In: Advances in neural information processing systems. MIT Press, Cambridge, MA

He X, Cai D, Yan S, Zhang HJ (2005a) Neighborhood preserving embedding. In: Proceedings of IEEE international conference on computer vision, vol. 2. IEEE Computer Society, Washington, DC, USA, pp 1208–1213

He X, Yan S, Hu Y, Niyogi P (2005b) Face recognition using laplacianfaces. IEEE Trans Pattern Anal Mach Intell 27(3): 328–340

Hotelling H (1936) Analysis of a complex of statistical variables into principal components. J Educ Phychol 24: 417–441

Huang H, Ding C (2008) Robust tensor factorization using r1 norm. In: IEEE conference on computer vision and pattern recognition, 2008. CVPR 2008, pp 1–8

James GM, Radchenko P, Lv J (2009) Dasso: connections between the dantzig selector and lasso. J R Stat Soc Ser B 71(1): 127–142

Kriegel HP, Borgwardt KM, Kröger P, Pryakhin A, Schubert M, Zimek A (2007) Future trends in data mining. Data Min Knowl Discov 15(1): 87–97

Li T, Zhu S, Ogihara M (2008a) Text categorization via generalized discriminant analysis. Inf Process Manage 44(5): 1684–1697

Li X, Lin S, Yan S, Xu D (2008b) Discriminant locally linear embedding with high-order tensor data. IEEE Trans Syst Man Cybern B 38(2): 342–352

Liu W, Tao D, Liu J (2008) Transductive component analysis. In: ICDM ’08: Proceedings of the 2008 eighth IEEE international conference on data mining. IEEE Computer Society, Washington, DC, USA, pp 433–442

Lv J, Fan Y (2009) A unified approach to model selection and sparse recovery using regularized least squares. Ann Stat 37: 3498

Park MY, Hastie T (2006) L1 regularization path algorithm for generalized linear models. Tech. rep., Department of Statistics, Stanford University

Phillips PJ, Moon H, Rizvi SA, Rauss PJ (2000) The feret evaluation methodology for face-recognition algorithms. IEEE Trans Pattern Anal Mach Intell 22(10): 1090–1104

Roweis ST, Saul LK (2000) Nonlinear dimensionality reduction by locally linear embedding. Science 290(5500): 2323–2326

Shakhnarovich G, Moghaddam B (2004) Face recognition in subspaces. In: Li SZ, Jain AK (eds) Handbook of face recognition. Springer-Verlag

Sun L, Ji S, Ye J (2008) A least squares formulation for canonical correlation analysis. In: ICML ’08: Proceedings of the 25th international conference on machine learning. ACM, New York, NY, USA, pp 1024–1031

Tao D, Li X, Wu X, Hu W, Maybank SJ (2007a) Supervised tensor learning. Knowl Inf Syst 13(1): 1–42

Tao D, Li X, Wu X, Maybank SJ (2007b) General tensor discriminant analysis and gabor features for gait recognition. IEEE Trans Pattern Anal Mach Intell 29(10): 1700–1715

Tao D, Li X, Wu X, Maybank SJ (2009) Geometric mean for subspace selection. IEEE Trans Pattern Anal Mach Intell 31(2): 260–274

Tenenbaum JB (2000) A global geometric framework for nonlinear dimensionality reduction. Science 290(5500): 2319–2323

Tibshirani R (1996) Regression shrinkage and selection via the lasso. J R Stat Soc Ser B 58: 267–288

Turk MA, Pentland AP (1991) Face recognition using eigenfaces. In: IEEE computer society conference on computer vision and pattern recognition, 1991. Proceedings CVPR ’91, pp 586–591

Wang F, Chen S, Zhang C, Li T (2008) Semi-supervised metric learning by maximizing constraint margin. In: CIKM ’08: Proceeding of the 17th ACM conference on information and knowledge management. ACM, New York, NY, USA, pp 1457–1458

Yan S, Xu D, Zhang B, Zhang HJ, Yang Q, Lin S (2007) Graph embedding and extensions: a general framework for dimensionality reduction. IEEE Trans Pattern Anal Mach Intell 29(1): 40–51

Ye J (2007) Least squares linear discriminant analysis. In: ICML ’07: Proceedings of the 24th international conference on machine learning. ACM, New York, NY, USA, pp 1087–1093

Zass R, Shashua A (2007) Nonnegative sparse PCA. In: In neural information processing systems. pp 1561–1568

Zhang Z, Zha H (2005) Principal manifolds and nonlinear dimensionality reduction via tangent space alignment. SIAM J Sci Comput 26(1): 313–338

Zhang T, Tao D, Yang J (2008) Discriminative locality alignment. In: ECCV ’08: Proceedings of the 10th European conference on computer vision. Springer-Verlag, Berlin, Heidelberg, pp 725–738

Zhang T, Tao D, Li X, Yang J (2009) Patch alignment for dimensionality reduction. IEEE Trans Knowl Data Eng 21(9): 1299–1313

Zou H (2006) The adaptive Lasso and its Oracle properties. J Am Stat Assoc 101(476): 1418–1429

Zou H, Hastie T (2005) Regularization and variable selection via the elastic net. J R Stat Soc B 67: 301–320

Zou H, Hastie T, Tibshirani R (2006) Sparse principal component analysis. J Comput Graph Stat 15(2): 262–286

Zou H, Zhang HH (2009) On the adaptive elastic-net with a diverging number of parameters. Ann Stat 37(4): 1733–1751

Author information

Authors and Affiliations

Corresponding author

Additional information

Responsible editors: Tao Li, Chris Ding and Fei Wang.

Rights and permissions

About this article

Cite this article

Zhou, T., Tao, D. & Wu, X. Manifold elastic net: a unified framework for sparse dimension reduction. Data Min Knowl Disc 22, 340–371 (2011). https://doi.org/10.1007/s10618-010-0182-x

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s10618-010-0182-x