Abstract

It has repeatedly been shown that most classification methods suffer from an imbalanced distribution of training instances among classes. Most learning algorithms expect an approximately even distribution of instances among the different classes and suffer, to different degrees, when that is not the case. Dealing with the class-imbalance problem is a difficult but relevant task, as many of the most interesting and challenging real-world problems have a very uneven class distribution. In this paper we present a new approach for dealing with class-imbalanced datasets based on a new boosting method for the construction of ensembles of classifiers. The approach uses the distribution of instance weights produced by a boosting algorithm to obtain a supervised projection. The supervised projection is then used to train the next classifier using a uniform distribution of the training instances. We tested our method on 35 class-imbalanced datasets with two different base classifiers: a decision tree and a support vector machine. The proposed methodology proved useful, outperforming other methods both in terms of the geometric mean of specificity and sensitivity and in terms of the area under the ROC curve.


1 Introduction

A classification problem of \(K\) classes and \(n\) training observations consists of a set of instances whose class membership is known. Let \(S = \{(\mathbf x _1, y_1), (\mathbf x _2, y_2), \ldots , (\mathbf x _n, y_n)\}\) be a set of \(n\) training samples where each instance \(\mathbf x _i\) belongs to a domain \(X\). Each label is an integer from the set \(Y = \{1, \ldots , K\}\). A multiclass classifier is a function \(f: X \rightarrow Y\) that maps an instance \(\mathbf x \in X \subset R^D\) into an element of \(Y\).

The task is to find a definition for the unknown function, \(f(\mathbf x )\), given the set of training instances. In a classifier ensemble framework we have a set of classifiers \(F = \{f_1, f_2, \ldots , f_T\}\), each classifier performing a mapping of an instance vector \(\mathbf x \in R^D\) into the set of labels \(Y = \{1, \ldots , K\}\). The design of classifier ensembles must face two main tasks: constructing the individual classifiers, \(f_k\), and developing a combination rule that finds a class label for \(\mathbf x \) based on the outputs of the classifiers \(\{f_1(\mathbf x ), f_2(\mathbf x ), \ldots , f_T(\mathbf x )\}\).

One of the distinctive features of many common problems in current data-mining applications is the uneven distribution of the instances of the different classes. In extremely active research areas, such as artificial intelligence in medicine, bioinformatics or intrusion detection, two classes are usually involved: a class of interest, or positive class, and a negative class that is overrepresented in the datasets. This is usually referred to as the class-imbalance problem [28]. In highly imbalanced problems, the ratio between the positive and negative classes can be as high as 1:1,000 or 1:10,000. In recent years much attention has also been given to class-imbalanced multiclass problems [13].

It has repeatedly been shown that most classification methods suffer from an imbalanced distribution of training instances among classes [5]. Many algorithms and methods have been proposed to ameliorate the effect of class imbalance on the performance of learning algorithms. There are three main approaches to these methods:

- Internal approaches acting on the algorithm. These approaches modify the learning algorithm to deal with the imbalance problem. They can adapt the decision threshold to create a bias toward the minority class or introduce costs in the learning process to compensate for the underrepresentation of the minority class.
- External approaches acting on the data. These algorithms act on the data instead of the learning method. They have the advantage of being independent of the classifier used. There are two basic approaches: oversampling the minority class and undersampling the majority class.
- Combined approaches based on ensembles of classifiers, most commonly boosting, that account for the imbalance in the training set. These methods modify the basic boosting method to account for the underrepresentation of the minority class in the dataset.

There are two principal advantages of choosing sampling over cost-sensitive methods. First, sampling is more general, as it does not depend on the possibility of adapting a certain algorithm to work with classification costs. Second, the learning algorithm is not modified; such modifications can cause difficulties and add parameters that must be tuned.

Data-driven algorithms can be broadly classified into two groups: those that undersample the majority class and those that oversample the minority class. There are also algorithms that combine both processes. Both undersampling and oversampling can be achieved randomly or through a more complicated process of searching for the least or most useful instances. Previous works have shown that undersampling the majority class usually leads to better results than oversampling the minority class [5] when oversampling is performed by sampling with replacement from the minority class. Furthermore, combining undersampling of the majority class with oversampling of the minority class has not yielded better results than undersampling of the majority class alone [41]. One possible source of the poor performance of oversampling is that no new information is introduced in the training set, as oversampling must rely on adding new copies of minority class instances already in the dataset. Sampling has proven to be a very efficient method of dealing with class-imbalanced datasets [11, 56].

Removing instances only from the majority class, usually referred to as one-sided selection [34], has two major problems. Firstly, reduction is limited by the number of instances of the minority class. Secondly, instances from the minority class are never removed, even when their contribution to the model's performance is harmful.

The third approach has received less attention. However, we believe that the approaches based on boosting are more promising because these methods have proven their ability to improve the results of many base learners in balanced datasets. Furthermore, when the imbalance ratio is high it is likely that the only way of obtaining good performance is by means of combining many classifiers.

An ensemble of classifiers consists of a combination of different classifiers, homogeneous or heterogeneous, to perform a classification task jointly. Ensemble construction is one of the fields of machine learning receiving the most research attention, mainly due to the significant performance improvements over single classifiers that have been reported with ensemble methods [1, 3, 25, 32, 55].

Techniques using multiple models usually consist of two independent phases: model generation and model combination [44]. Most techniques are focused on obtaining a group of classifiers which are as accurate as possible but which disagree as much as possible. These two objectives are somewhat conflicting, since if the classifiers are more accurate, it is obvious that they must agree more frequently. Many methods have been developed to enforce diversity on the classifiers that form the ensemble [8].

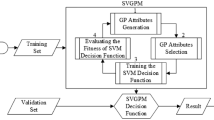

In this paper we propose a new boosting-based ensemble for class-imbalanced datasets. Boosting trains each classifier on a biased distribution of the training set to optimize the weighted training error. However, for some problems, optimizing this weighted error may harm the overall performance of the ensemble, because too much relevance is given to incorrectly labelled instances and outliers. Our approach optimizes the weighted error in a less aggressive way. We use the biased distribution of instances given by the boosting algorithm to obtain a supervised projection of the original data into a new space where the weighted error is improved. Then, the classifier is trained on this projection with a uniform distribution of the training instances. In this way, the view of the data that the classifier sees is biased towards difficult instances, because the supervised projection is obtained using the biased distribution given by the boosting algorithm, but without putting too much pressure on correctly classifying the misclassified instances. Informally, a supervised projection is a projection constructed using both the inputs and the labels of the patterns, with the aim of improving the classification accuracy of any given learner.

The projections are constructed using a real-coded genetic algorithm (RCGA). This makes our approach more flexible than other boosting methods. Standard boosting methods focus on optimizing weighted accuracy. However, as is well known, accuracy is not an appropriate measure for class-imbalanced datasets. In our approach, the RCGA evolves using as fitness value a measure specific to class-imbalanced problems, the geometric mean of specificity and sensitivity (the so-called \(G\)-mean). We call the presented method Genetically Evolved Supervised Projection Boosting (GESuperPBoost).

A final feature is introduced to account for the class-imbalanced nature of the datasets. Before obtaining the supervised projection by means of the RCGA, we randomly undersample the majority class. This process has three relevant benefits. Firstly, it makes the algorithm faster; secondly, it improves performance because the RCGA works with balanced datasets; thirdly, random undersampling introduces diversity into the ensemble.

Unsupervised [49] and supervised projections [22, 23] have been used before for constructing ensembles of classifiers. However, the use of genetically evolved supervised projections is new, and most of the previous approaches were devoted to indirect methods to obtain what we could call semi-supervised projections.

This paper is organized as follows: Sect. 2 describes in detail the proposed methodology and its rationale; Sect. 3 explains the experimental setup; Sect. 4 shows the results of the experiments performed; and finally Sect. 5 states the conclusions of our work.

2 Supervised projection approach for boosting classifiers

In order to avoid the harmful effect, stated above, of maximizing the margin of noisy instances, we use the adaptive weighting scheme of boosting methods not to train the classifiers but to obtain a supervised projection. The supervised projection is aimed at optimizing the weighted error given by the boosting algorithm. However, instead of optimizing accuracy, we use the \(G\)-mean measure, which is common for class-imbalanced datasets. We also use other mechanisms, described below, to account for the class-imbalanced nature of the problems.

The classifier is trained using the supervised projection obtained with the RCGA and with a uniform distribution of the instances. Thus, we obtain a method that benefits from the adaptive instance weighting of boosting but that is also able to alleviate its drawbacks. We are extending the philosophy of a previous paper [23], where we showed that the performance of ensembles of classifiers can be improved using non-linear projections constructed with only misclassified instances.

The most widely used boosting method is AdaBoost [15], together with its numerous variants. It is based on adaptively increasing the probability of sampling the instances that are not classified correctly by the previous classifiers. For more detailed descriptions of ensembles the reader is referred to [8, 10, 12, 44, 55]. Several empirical studies have shown that AdaBoost is able to reduce both the bias and the variance components of the error [1, 2, 50]. Boosting methods “boost” the accuracy of a weak classifier by repeatedly resampling the most difficult instances. Boosting methods construct an additive model: the classifier ensemble \(F(\mathbf x )\) is constructed using \(T\) individual classifiers, \(f_k(\mathbf x )\):

\[ F(\mathbf x ) = \arg \max _{y \in Y} \sum _{k=1}^{T} \alpha _k \left[\!\left[ {f_k(\mathbf x ) = y} \right]\!\right] \tag{1} \]

where the \(\alpha _k\) are appropriately defined, and \([\![ {\pi }]\!]\) is 1 if \(\pi \) is true and 0 otherwise. The basis of boosting is assigning a different weight to each training instance depending on how difficult it has been for the previous classifiers to classify it. Thus, for AdaBoost, each instance \(\mathbf x _i\) receives a weight \(w_i^k\) for training the \(k\)th classifier.Footnote 1 Initially, all the instances are weighted equally, \(w_i^1 = 1/n, \forall i\). Then, for the \((k+1)\)th classifier, each instance is weighted as follows:

\[ w_i^{k+1} = \frac{w_i^k \, \beta _k^{1 - [\![ f_k(\mathbf x _i) \ne y_i ]\!]}}{\sum _{j=1}^n w_j^k \, \beta _k^{1 - [\![ f_k(\mathbf x _j) \ne y_j ]\!]}} \]

where

\[ \beta _k = \frac{\epsilon _k}{1 - \epsilon _k}, \]

\(\epsilon _k\) being the weighted error of classifier \(k\) when the weight vector is normalized \(\sum _{i=1}^n w_i^k = 1\). Once the classifiers are trained, the function \(F(\mathbf x )\) is given by (1) with the weight of each classifier given by \(\alpha _k = \ln \left( {1 \over \beta _k}\right)\).
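As an illustration, the following Python sketch performs one AdaBoost.M1 reweighting step: it normalizes the weights, computes \(\epsilon _k\), \(\beta _k = \epsilon _k / (1 - \epsilon _k)\) and \(\alpha _k = \ln (1/\beta _k)\), and down-weights the correctly classified instances. The function name `adaboost_m1_update` and the choice to raise an error when \(\epsilon _k \notin (0, 0.5)\) are our own assumptions; AdaBoost.M1 stops in that case, and some variants instead reset the weights.

```python
import math


def adaboost_m1_update(weights, correct):
    """One AdaBoost.M1 reweighting step (illustrative sketch).

    weights: current instance weights w_i^k (normalized inside).
    correct: booleans, True where classifier k labels x_i correctly.
    Returns (new_weights, alpha_k, epsilon_k).
    """
    total = sum(weights)
    w = [wi / total for wi in weights]  # enforce sum(w) == 1
    # Weighted error: total weight of the misclassified instances.
    eps = sum(wi for wi, ok in zip(w, correct) if not ok)
    if not 0.0 < eps < 0.5:
        # Assumption: signal the caller instead of silently continuing.
        raise ValueError("weighted error must lie in (0, 0.5)")
    beta = eps / (1.0 - eps)
    # Correct instances are multiplied by beta < 1; errors keep their weight.
    new_w = [wi * beta if ok else wi for wi, ok in zip(w, correct)]
    z = sum(new_w)  # normalization constant
    new_w = [wi / z for wi in new_w]
    alpha = math.log(1.0 / beta)  # vote weight of classifier k
    return new_w, alpha, eps
```

After the update the misclassified instances always carry exactly half of the total weight, so classifier \(k\) has weighted error 0.5 on the new distribution; for example, with four equally weighted instances and one error, the misclassified instance ends up with weight 0.5.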

The popularity of boosting methods is mainly due to the success of AdaBoost. However, AdaBoost tends to perform very well for some problems but can also perform very poorly for others. One of the sources of the bad behavior of AdaBoost is that, although it is always able to construct diverse ensembles, in some problems the individual classifiers tend to have large training errors [9]. Moreover, AdaBoost usually performs poorly on noisy problems [1, 9]. Schapire and Singer [51] identified two scenarios where AdaBoost is likely to fail: (i) when there is insufficient training data relative to the “complexity” of the base classifiers; and (ii) when the training errors of the base classifiers become too large too quickly. Unfortunately, these two scenarios are likely to occur in real-world problems. Several papers have attributed the failure of boosting methods, especially in the presence of noise, to the fact that the skewed data distribution produced by the algorithm tends to assign too much weight to hard instances [9]. In class-imbalanced datasets this problem may be even more severe because the patterns are unevenly distributed among the classes.

As we have stated, in a general classification problem, we have a training set \(S = \{(\mathbf x _1, y_1), (\mathbf x _2, y_2), \ldots , (\mathbf x _n, y_n)\}\) of \(n\) training samples where each instance \(\mathbf x _i\) belongs to a domain \(X\). Let us assume that we also have a vector \(\mathbf w \) that assigns a weight \(w_i\) to each instance \(\mathbf x _i\). In a general sense, a supervised projection is a projection constructed using both the inputs and the labels of the patterns. More specifically, in our framework, a supervised projection, \(\Phi \), is a projection into a new space where the weighted error \(\epsilon = \sum _{i=1}^n w_i \left[\!\left[ {f(\Phi (\mathbf x _i)) \ne y_i} \right]\!\right]\) for a certain classifier \(f(\mathbf x )\) trained on the projected space is improved with respect to the error using the original variables. Thus, the idea is to search for a projection of the original variables that is able to improve the weighted error even when the learner is trained using a uniform distribution of the patterns. This supervised projection, which is calculated using inputs and pattern labels, leads to an informed or biased feature space, which will be more relevant to the particular supervised learning problem [58].

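As is the case for AdaBoost, the model constructed using this supervised projection is also additive:

\[ F(\mathbf x ) = \arg \max _{y \in Y} \sum _{k=1}^{T} \alpha _k \left[\!\left[ {f_k(\mathbf z _k) = y} \right]\!\right] \]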

where \(\mathbf z _k = \mathbf P _k(\mathbf x )\) and \(\mathbf P _k\) is a non-linear projection constructed using the weights of the instances given by the boosting algorithm. In this way, classifier \(k\) is constructed using the instances projected using \(\mathbf P _k\) and all of them equally weighted.
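A minimal Python sketch of the additive model described above, in which member \(k\) votes with weight \(\alpha _k\) for the label it assigns to \(\mathbf z _k = \mathbf P _k(\mathbf x )\). We assume, for illustration only, that each ensemble member is stored as a triple of a vote weight, a projection function and a trained classifier; the function name `ensemble_predict` and this representation are hypothetical and not taken from the authors' C implementation.

```python
from collections import defaultdict


def ensemble_predict(x, members):
    """Weighted vote F(x) = argmax_y sum_k alpha_k [[f_k(P_k(x)) == y]].

    members: iterable of (alpha_k, project_k, classify_k) triples, where
    project_k maps an input vector x to z_k = P_k(x) and classify_k maps
    z_k to a class label (hypothetical representation).
    """
    votes = defaultdict(float)
    for alpha, project, classify in members:
        z = project(x)  # each member sees its own projected space
        votes[classify(z)] += alpha  # add alpha_k to the predicted class
    return max(votes, key=votes.get)


# Usage: one strong member against two weaker members voting for class 2.
members = [
    (1.0, lambda x: [2.0 * v for v in x], lambda z: 1 if z[0] > 0 else 2),
    (0.4, lambda x: x, lambda z: 2),
    (0.4, lambda x: x, lambda z: 2),
]
```

With these members, the input `[1.0]` is labelled 1 (vote 1.0 against 0.8), while `[-1.0]` is labelled 2 because all three members agree.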

To illustrate the method, let us explain how it differs from a boosting algorithm at step \(k\). In a boosting algorithm, after adding \(k-1\) classifiers, we obtain the instance weight vector for training the \(k\)th classifier, \(\mathbf w ^k\). Then, the \(k\)th classifier is trained by associating with each instance \(i\) a weight \(w_i^k\), which is used directly by the classifier if it admits instance weights, or used to obtain a biased sample from the training set if not. The aim is to obtain a classifier that minimizes the weighted error \(\epsilon _k = \sum _{i=1}^n w_i^k\left[\!\left[ {f_k(\mathbf x _i) \ne y_i} \right]\!\right]\). Once trained, the \(k\)th classifier is added to the ensemble, and the process is repeated for the \((k+1)\)th classifier. In our method, after adding \(k-1\) classifiers, we obtain the instance weight vector for training the \(k\)th classifier, \(\mathbf w ^k\), using the weighting scheme of a boosting algorithm of our choice. However, this weight vector is not used to train the new classifier. Instead, a supervised projection, \(P_k\), of the inputs is constructed with the objective of optimizing an accuracy criterion that takes into account that the problem is class-imbalanced. Then, the original instances \(\mathbf x _i\) are projected using this supervised projection to obtain a new training set \(Z_k = \{\mathbf{z}_i :\mathbf{z}_i = P_k(\mathbf{x}_i)\}\). Classifier \(k\) is trained on \(Z_k\) using a uniform distribution. Once trained, it is added to the ensemble and the process is repeated.

The proposed method shares the philosophy of our previous method based on non-linear projections [23]. In that method new classifiers were added to the ensemble considering only the instances misclassified by the previous classifier. To avoid the large bias of learning the classifier from those instances alone, they were not used for training the classifier but for constructing a projection in which the classifier could separate them more easily. In the present method, we do not use the misclassified instances, but the distribution of instances given by a boosting method. This method has several advantages over the previous one. We can use the theoretical result about the convergence of the training error of boosting to ensure convergence to perfect classification under conditions similar to those of AdaBoost. The weights assigned by boosting and used for constructing the non-linear projection summarize the difficulty of classifying each instance as the ensemble grows, instead of reflecting just the last classifier. The method in [23] does not use instance weights, and it is inspired more by the random subspace method (RSM) [30], using non-linear projections instead of subspace projections. The difference in philosophy is that, while RSM uses random projections, the method in [23] uses non-linear projections constructed using only misclassified instances. Furthermore, part of the theory developed for boosting is applicable to the new approach but not to the previous one. In addition, the proposed method constructs an additive model, as boosting does, whereas the previous one used a simple voting scheme to obtain the final output of the ensemble.

2.1 Constructing supervised projections using a RCGA

We have stated that our method is based on using a supervised projection to train the classifier at round \(k\). But what exactly do we understand by a supervised projection? The intuitive meaning of a supervised projection using a weight vector \(\mathbf w _k\) is a projection into a space where the weighted error achieved by any classifier trained using the projection is minimized. In a previous work [21] we defined the concept of supervised projection as follows:Footnote 2

Definition 1

Supervised projection. Let \(S = \{(\mathbf x _1, y_1),\) \((\mathbf x _2, y_2), \ldots , (\mathbf x _n, y_n)\}\) be a set of \(n\) training samples where each instance \(\mathbf x _i\) belongs to a domain \(X\), and \(\mathbf w \) a vector that assigns a weight \(w_i\) to each instance \(\mathbf x _i\). A supervised projection \(\mathbf \Phi \) is a projection into a new space where the weighted error \(\epsilon = \sum _{i=1}^n w_i \left[\!\left[ { f(\Phi (\mathbf x _i)) \ne y_i} \right]\!\right]\) for a certain classifier \(f(\mathbf x )\) trained on the projected space is improved with respect to the error using the original variables \(\epsilon _o = \sum _{i=1}^n w_i \left[\!\left[ { f_o(\mathbf x _i) \ne y_i} \right]\!\right] \) for training a classifier \(f_o\).

The definition is general, as we do not restrict ourselves to any particular type of classifier. The intuitive idea is to find a projection that improves the weighted error of the classifier. We can consider this problem similar to feature extraction. In feature extraction a mapping \(g\) transforms the original variables \(x_1, \ldots , x_d\) of a \(d\)-dimensional input space into a new set of variables \(z_1, \ldots , z_m\) of an \(m\)-dimensional projected space [38], in such a way that a certain criterion \(J\) is optimized. The mapping \(g\) is chosen among all the available transformations \(G\) as the one that optimizes \(J\). Considering a projection that depends on a vector of parameters \(\mathbf \Theta \), the problem can be stated as minimizing the following cost function:

\[ J(\mathbf \Theta ) = \sum _{i=1}^n w_i \left[\!\left[ {f(\Phi _\mathbf \Theta (\mathbf x _i)) \ne y_i} \right]\!\right] \]

where classifier \(f\) is trained on the projection \(\Phi _\mathbf \Theta (\mathbf x )\), and \(\mathbf \Theta \) is the vector of values to optimize.

Most methods for projecting data focus on the features of the input space and do not take into account the labels of the instances, as most of them are intended for non-labelled data and aimed at data analysis. Nevertheless, methods for supervised projection, in the sense that class labels are taken into account, do exist in the literature. For instance, S-Isomap [27] is a supervised version of the Isomap [53] projection algorithm which uses class labels to guide the manifold learning. Projection pursuit, a technique initially developed for exploratory data analysis [33], has also been applied to supervised projections [46], where projections are chosen to minimize estimates of the expected overall loss in each projection pursuit stage. Lee et al. [36] introduced new indexes derived from linear discriminant analysis that can be used for exploratory supervised classification. However, none of these methods contemplates the possibility of assigning to each instance a weight measuring its relevance.

As we have said, in developing a method for obtaining a supervised projection we face a problem similar to feature selection/extraction. There are two basic approaches to feature selection/extraction. The filter approach separates feature selection/extraction from the learning of the classifier, and uses measures that do not depend on the learning method. The wrapper approach considers that the selection of inputs (or extraction of features) and the learning algorithm cannot be separated. Both approaches are usually developed as an optimization process in which a certain objective function must be optimized.

Filter methods are based on performance evaluation metrics calculated directly from the data, without direct reference to the results of any induction algorithm. Such algorithms are usually computationally cheaper than those that use a wrapper approach. However, one of the disadvantages of filter approaches is that they may not be appropriate for a given problem. In previous works we have used indirect methods [23] or supervised linear projections [22]. However, none of these methods is able to obtain a supervised projection aimed at optimizing the weighted error given by the boosting resampling scheme.

Thus, in this work we propose the use of a real-coded genetic algorithm to directly optimize the weights of the non-linear projection, that is, a wrapper approach implemented by means of an evolutionary computation method. The process of obtaining the supervised projection is shown in Algorithm 1.

The input vector \(\mathbf x \) is projected into a vector \(\mathbf z \) using a non-linear projection of the form:

The whole dataset is then transformed according to:

The RCGA must evolve the coefficient matrix, \(\mathbf C \), and the constants, \(\mathbf B \). Our aim is to obtain the coefficients that produce the best accuracy when used to train a classifier with a uniform distribution of the instances. For the initialization of individuals, the values of the coefficients are randomly drawn from \([-1, 1]\).

To avoid over-fitting we introduce additional diversity into the ensemble using random subspaces. Before obtaining the supervised projection using the RCGA the input space is divided into subspaces of the same size and each subspace is projected separately. In our experiments a subspace size of five features is used. We evolve the whole projection with all the subspaces using the same RCGA. From the practical point of view the only difference when using subspaces is that many of the elements of matrix \(\mathbf C \) are fixed to zero during the evolution.
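The zero pattern that random subspaces impose on \(\mathbf C \) can be sketched as follows. We assume, for illustration only, that each subspace is projected onto as many outputs as it has inputs, so that \(\mathbf C \) is block-diagonal up to a permutation of the features, and that features are assigned to subspaces by a random shuffle; neither the output dimension nor the assignment rule is specified in the text, and `subspace_mask` is a hypothetical name. The RCGA operators would simply keep the entries with mask value 0 at zero.

```python
import random


def subspace_mask(n_inputs, subspace_size=5, rng=None):
    """0/1 mask for the coefficient matrix C (rows: outputs, cols: inputs).

    Assumption: output j belongs to the same subspace as input j, so
    each subspace maps its own inputs to the same number of outputs.
    """
    order = list(range(n_inputs))
    if rng is not None:
        rng.shuffle(order)  # random assignment of features to subspaces
    block = [0] * n_inputs
    for position, feature in enumerate(order):
        block[feature] = position // subspace_size  # subspace index
    # C[r][c] may evolve only if output r and input c share a subspace.
    return [[1 if block[r] == block[c] else 0 for c in range(n_inputs)]
            for r in range(n_inputs)]
```

When the number of features is not a multiple of five, this sketch leaves a smaller last subspace; for 12 features it yields blocks of 5, 5 and 2 features, so only 54 of the 144 coefficients are free.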

The main problem of the method described above is its scalability [24]. When we deal with a large dataset, the cost of the RCGA is high. To partially alleviate this problem we combine our method with undersampling. Thus, before the construction of each supervised projection starts, we undersample the majority class to obtain a balanced distribution of both classes. Then we construct the ensemble as described above. The final version of the method is shown in Algorithm 2.
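Random undersampling of the majority class to a balanced sample, as performed before each supervised projection is constructed, might look like the following Python sketch. We assume a binary problem whose minority class is given by the hypothetical parameter `positive`, and sampling without replacement; the function name is ours.

```python
import random


def undersample_majority(X, y, positive, rng):
    """Keep all minority (positive) instances plus an equally sized
    random subset of the majority class, drawn without replacement."""
    pos = [i for i, label in enumerate(y) if label == positive]
    neg = [i for i, label in enumerate(y) if label != positive]
    keep = pos + rng.sample(neg, min(len(pos), len(neg)))
    keep.sort()  # preserve the original instance order
    return [X[i] for i in keep], [y[i] for i in keep]
```

Because a fresh random subset is drawn for every projection, each ensemble member works with a different balanced sample, which is where the extra diversity comes from.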

The RCGA is a standard generational genetic algorithm. Initially each individual is randomly generated. Each individual represents the coefficient matrix, \(\mathbf C \), of the non-linear projection plus the constants, \(\mathbf B \). Then the evolution is carried out for a number of generations, in which new individuals are obtained by non-uniform mutation [45] and standard BLX-\(\alpha \) [29] crossover with \(\alpha = 0.5\).
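BLX-\(\alpha \) crossover draws each child gene uniformly from the interval spanned by the two parent genes, widened by \(\alpha \) times its length on each side. The following is a minimal Python sketch with \(\alpha = 0.5\) as in the text; the function name is hypothetical, and the sketch does not clip genes to any bound, since the text does not say whether that is done.

```python
import random


def blx_alpha(parent1, parent2, alpha=0.5, rng=random):
    """BLX-alpha crossover of two real-coded individuals (one child)."""
    child = []
    for a, b in zip(parent1, parent2):
        lo, hi = min(a, b), max(a, b)
        spread = alpha * (hi - lo)  # extend the interval on both sides
        child.append(rng.uniform(lo - spread, hi + spread))
    return child
```

With parents 0 and 1 for some gene, the child gene is drawn from \([-0.5, 1.5]\); where both parents agree, the child inherits that value unchanged.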

Non-uniform mutation mutates an individual \(\mathbf X ^t\) in generation \(t\) to produce another individual \(\mathbf X ^{t+1}\). Each element of the individual, \(x_k^{t+1}\), is obtained from \(x_k^t\) using:

\[ x_k^{t+1} = \begin{cases} x_k^t + \Delta (t, \mathrm{UB} - x_k^t) & \text{if } \rho < 0.5, \\ x_k^t - \Delta (t, x_k^t - \mathrm{LB}) & \text{otherwise,} \end{cases} \]

where \(\rho \) is a uniform random value in \([0, 1]\), LB and UB are the lower and upper bounds of the search space, respectively, and \(\Delta (t,y) = y \cdot (1 - r^{(1 - t/T)^b})\), with \(r\) a uniform random number in \([0, 1]\), \(T\) the maximum number of generations, and \(b\) a parameter that determines the degree of non-uniformity.
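The non-uniform mutation operator can be sketched in Python as below, choosing the direction of each step with probability 0.5. The default \(b = 5\) is only an illustrative value (the text does not state the one used), and the function name is hypothetical. As \(t\) approaches \(T\), \(\Delta \) shrinks towards zero, so the operator moves from exploration to fine-tuning.

```python
import random


def nonuniform_mutation(individual, t, max_gen, lb, ub, b=5.0, rng=random):
    """Michalewicz non-uniform mutation applied to every gene.

    t: current generation, max_gen: maximum number of generations (T),
    lb, ub: bounds of the search space, b: non-uniformity parameter.
    """
    def delta(y):
        r = rng.random()
        # Delta(t, y) = y * (1 - r^((1 - t/T)^b)); lies in [0, y].
        return y * (1.0 - r ** ((1.0 - t / max_gen) ** b))

    mutated = []
    for x in individual:
        if rng.random() < 0.5:  # rho: choose the direction of the step
            mutated.append(x + delta(ub - x))
        else:
            mutated.append(x - delta(x - lb))
    return mutated
```

Because \(\Delta (t, y)\) never exceeds \(y\), mutated genes stay inside \([\mathrm{LB}, \mathrm{UB}]\); at \(t = T\) the individual is returned unchanged.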

The fitness function should measure the performance of each projection. To obtain this fitness value we train a classifier with the projection that the individual represents and evaluate the classifier. However, accuracy is not a useful measure for imbalanced data, especially when the number of instances of the minority class is very small compared with the majority class. If we have a ratio of 1:100, a classifier that assigns all instances to the majority class will have about 99 % accuracy. Several measures have been developed to take the imbalanced nature of the problems into account. Given the number of true positives (TP), false positives (FP), true negatives (TN) and false negatives (FN) we can define several measures. Perhaps the most common are the true positive rate (\(\text{ TP}_\mathrm{rate}\)), recall (\(R\)) or sensitivity (Sn):

\[ \text{ TP}_\mathrm{rate} = R = \text{ Sn} = \frac{\text{ TP}}{\text{ TP} + \text{ FN}}, \]

which is relevant if we are only interested in the performance on the positive class, and the true negative rate (\(\text{ TN}_\mathrm{rate}\)) or specificity (Sp):

\[ \text{ TN}_\mathrm{rate} = \text{ Sp} = \frac{\text{ TN}}{\text{ TN} + \text{ FP}}. \]

From these basic measures, others have been proposed, such as the \(F\)-measure or, if we are concerned about the performance on both the negative and positive classes, the \(G\)-mean measure: \(G\text{-mean} = \sqrt{\text{ Sp} \cdot \text{ Sn}}\). However, this measure does not take pattern weights into account. Thus, we modified the standard specificity and sensitivity measures to use the pattern weights instead of just counting the numbers of true positives, true negatives, false positives and false negatives. This weighted \(G\)-mean is used as the fitness function of the individuals.
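A Python sketch of the weighted \(G\)-mean used as fitness follows. The text states only that the pattern weights replace instance counts; we assume, as the most direct reading, that each instance contributes its weight \(w_i\) to TP, FN, TN or FP, and we return 0 when a class carries no weight. The function name and the `positive` argument are hypothetical.

```python
import math


def weighted_gmean(y_true, y_pred, weights, positive=1):
    """G-mean = sqrt(Sn * Sp), with Sn and Sp computed from summed
    instance weights instead of instance counts (assumed weighting)."""
    tp = fn = tn = fp = 0.0
    for truth, pred, w in zip(y_true, y_pred, weights):
        if truth == positive:
            if pred == positive:
                tp += w
            else:
                fn += w
        elif pred == positive:
            fp += w
        else:
            tn += w
    sn = tp / (tp + fn) if tp + fn > 0 else 0.0  # weighted sensitivity
    sp = tn / (tn + fp) if tn + fp > 0 else 0.0  # weighted specificity
    return math.sqrt(sn * sp)
```

With unit weights this reduces to the ordinary \(G\)-mean. In particular, the trivial classifier that assigns every instance to the majority class gets a \(G\)-mean of 0, despite its high accuracy in the 1:100 example.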

The source code used for all methods is in C and is licensed under the GNU General Public License. The code, the partitions of the datasets and the detailed numerical results of all the experiments are available from the authors upon request and from http://cib.uco.es.

3 Experimental setup

We used a set of 35 problems, listed in Table 1, to test the performance of the proposed method. The datasets breast-cancer, cancer, euthyroid, german, haberman, hepatitis, ionosphere, ozone1hr, ozone8hr, pima, sick and tic-tac-toe are from the UCI Machine Learning Repository [14]. The remaining datasets were created from UCI datasets following García et al. [20]. To estimate the accuracy we used tenfold cross-validation.

As base learners for the ensembles we used two classifiers: a decision tree using the C4.5 learning algorithm [47] and a support vector machine (SVM) [6] using a Gaussian kernel. The SVM learning algorithm was programmed using functions from the libsvm library [4]. We used these two methods because they are arguably the two most popular classifiers in the literature.

For the RCGA we used a population of 100 individuals evolved during 100 generations. To avoid extremely long running times, an arbitrary maximum running time of 100 seconds was imposed for the RCGA. Once this time limit was reached the best individual so far was used as the non-linear projection.

3.1 Statistical tests

We used the Wilcoxon test as the main statistical test for comparing pairs of algorithms. This test was chosen because it assumes limited commensurability and is safer than parametric tests because it does not assume normal distributions or homogeneity of variance. Furthermore, empirical results [7] show that it is also stronger than other tests. The formulation of the test [57] is the following: Let \(d_i\) be the difference between the error values of the methods on the \(i\)th dataset. These differences are ranked according to their absolute values; in case of ties an average rank is assigned. Let \(R^+\) be the sum of ranks for the datasets on which the second algorithm outperformed the first, and \(R^-\) the sum of ranks where the first algorithm outperformed the second. Ranks of \(d_i = 0\) are split evenly among the sums:

\[ R^+ = \sum _{d_i > 0} \mathrm{rank}(d_i) + \frac{1}{2} \sum _{d_i = 0} \mathrm{rank}(d_i) \]

and

\[ R^- = \sum _{d_i < 0} \mathrm{rank}(d_i) + \frac{1}{2} \sum _{d_i = 0} \mathrm{rank}(d_i). \]

Let \(T\) be the smaller of the two sums and \(N\) be the number of datasets. For a small \(N\), there are tables with the exact critical values for \(T\). For a larger \(N\), the statistic

\[ z = \frac{T - \frac{1}{4} N(N+1)}{\sqrt{\frac{1}{24} N(N+1)(2N+1)}} \]

is distributed approximately according to \(N(0, 1)\).
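The following Python sketch computes \(R^+\), \(R^-\), \(T\) and the normal-approximation \(z\) as described above, with \(d_i\) taken as the error of the first method minus that of the second and average ranks for ties. The function name is hypothetical; for small \(N\) the exact tables should be used instead of \(z\).

```python
import math


def wilcoxon_z(errors_a, errors_b):
    """Wilcoxon signed-ranks test: return (R+, R-, T, z).

    d_i = error of the first method minus error of the second, so R+
    collects the datasets where the second method is better.
    """
    d = [a - b for a, b in zip(errors_a, errors_b)]
    n = len(d)
    order = sorted(range(n), key=lambda i: abs(d[i]))
    ranks = [0.0] * n
    i = 0
    while i < n:  # assign average ranks to runs of tied |d_i|
        j = i
        while j + 1 < n and abs(d[order[j + 1]]) == abs(d[order[i]]):
            j += 1
        avg_rank = (i + j) / 2.0 + 1.0  # ranks are 1-based
        for pos in range(i, j + 1):
            ranks[order[pos]] = avg_rank
        i = j + 1
    zero = sum(r for r, di in zip(ranks, d) if di == 0)  # split evenly
    r_plus = sum(r for r, di in zip(ranks, d) if di > 0) + zero / 2.0
    r_minus = sum(r for r, di in zip(ranks, d) if di < 0) + zero / 2.0
    t = min(r_plus, r_minus)
    z = (t - n * (n + 1) / 4.0) / math.sqrt(n * (n + 1) * (2 * n + 1) / 24.0)
    return r_plus, r_minus, t, z
```

As a check, \(R^+ + R^- = N(N+1)/2\) always holds, because every rank, including those of zero differences, is counted exactly once.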

In our experiments, we will also compare groups of methods. In such a case it is not advisable to use pairwise statistical tests such as the Wilcoxon test. Instead, we first carry out an Iman–Davenport test to ascertain whether there are significant differences among methods. The Iman–Davenport test is based on the \(\chi _F^2\) Friedman test, which compares the average ranks of \(k\) algorithms, but is more powerful than the Friedman test. Let \(r_i^j\) be the rank of the \(j\)th algorithm on the \(i\)th dataset, where in case of ties average ranks are assigned, and let \(R_j = {1 \over N} \sum _i r_i^j\) be the average rank over \(N\) datasets. Under the null hypothesis that all algorithms are equivalent, the statistic

\[ \chi _F^2 = \frac{12N}{k(k+1)} \left[ \sum _j R_j^2 - \frac{k(k+1)^2}{4} \right] \]

is distributed according to \(\chi ^2\) with \(k-1\) degrees of freedom when \(k\) and \(N\) are sufficiently large; in general, \(N>10\) and \(k>5\) is enough. Iman and Davenport found this statistic to be too conservative and developed a better one:

\[ F_F = \frac{(N-1)\chi _F^2}{N(k-1) - \chi _F^2} \]

which is distributed according to an \(F\) distribution with \(k-1\) and \((k-1)(N-1)\) degrees of freedom. After carrying out the Iman–Davenport test, we should not perform pairwise comparisons against the control method with the Wilcoxon test, because performing many such tests accumulates the family-wise error. We can instead use one of the general procedures for controlling the family-wise error in multiple hypotheses testing. The test statistic for comparing the \(i\)th and \(j\)th classifiers using these methods is as follows:

\[ z = \frac{R_i - R_j}{\sqrt{\frac{k(k+1)}{6N}}} \]
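Given the average ranks \(R_j\), the Friedman, Iman–Davenport and pairwise statistics discussed above take only a few lines. This Python sketch is illustrative: the function names are hypothetical, and the resulting \(\chi _F^2\) and \(F_F\) values must still be compared with \(\chi ^2\) and \(F\) critical values, which are not computed here.

```python
import math


def friedman_iman_davenport(avg_ranks, n_datasets):
    """Return (chi2_F, F_F, df1, df2) from the average ranks R_j.

    chi2_F = 12N / (k(k+1)) * (sum_j R_j^2 - k(k+1)^2 / 4)
    F_F    = (N-1) chi2_F / (N(k-1) - chi2_F)   (undefined if all
             datasets rank the algorithms identically)
    """
    k, n = len(avg_ranks), n_datasets
    chi2 = (12.0 * n / (k * (k + 1))
            * (sum(r * r for r in avg_ranks) - k * (k + 1) ** 2 / 4.0))
    ff = (n - 1) * chi2 / (n * (k - 1) - chi2)  # Iman-Davenport statistic
    return chi2, ff, k - 1, (k - 1) * (n - 1)


def pairwise_z(r_i, r_j, k, n_datasets):
    """z = (R_i - R_j) / sqrt(k(k+1) / (6N)) for classifiers i and j."""
    return (r_i - r_j) / math.sqrt(k * (k + 1) / (6.0 * n_datasets))
```

For instance, three methods with average ranks 1.5, 2.0 and 2.5 over ten datasets give \(\chi _F^2 = 5\) and \(F_F = 3\) with 2 and 18 degrees of freedom.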

The \(z\) value is used to find the corresponding probability from the table of normal distribution, which is then compared with an appropriate \(\alpha \). Step-up and step-down procedures sequentially test the hypotheses ordered by their significance. We will denote the ordered \(p\) values by \(p_1, p_2, \ldots \), so that \(p_1 \le p_2 \le \cdots \le p_{k-1}\). One of the simplest such methods was developed by Holm. It compares each \(p_i\) with \(\alpha /(k-i)\). Holms step-down procedure starts with the most significant \(p\) value. If \(p_1\) is below \(\alpha /(k-1)\), the corresponding hypothesis is rejected, and we are allowed to compare \(p_2\) with \(\alpha /(k-2)\). If the second hypothesis is rejected, the test proceeds with the third, and so on. As soon as a certain null hypothesis cannot be rejected, all remaining hypotheses are retained as well. We will use for all statistical tests a significance level of 0.05.
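A minimal Python sketch of the Friedman, Iman–Davenport and Holm computations, for a table of error values with one row per dataset and one column per algorithm (lower is better). Two-sided normal \(p\) values are obtained with `math.erfc`; the function names and the toy table are assumptions for illustration only. Note that \(F_F\) is undefined when every dataset yields exactly the same ranking, because its denominator then vanishes.

```python
import math

def average_ranks(scores):
    """scores[i][j]: error of algorithm j on dataset i (lower is better).
    Returns R_j, the average rank of each algorithm (ties get average ranks)."""
    n, k = len(scores), len(scores[0])
    totals = [0.0] * k
    for row in scores:
        order = sorted(range(k), key=lambda j: row[j])
        i = 0
        while i < k:
            j = i
            while j + 1 < k and row[order[j + 1]] == row[order[i]]:
                j += 1
            for m in range(i, j + 1):
                totals[order[m]] += (i + j) / 2 + 1  # 1-based average rank
            i = j + 1
    return [t / n for t in totals]

def iman_davenport(scores):
    """Return the Friedman statistic chi2_F and the Iman-Davenport statistic F_F."""
    n, k = len(scores), len(scores[0])
    r = average_ranks(scores)
    chi2 = 12 * n / (k * (k + 1)) * (sum(x * x for x in r) - k * (k + 1) ** 2 / 4)
    ff = (n - 1) * chi2 / (n * (k - 1) - chi2)  # undefined if all rankings coincide
    return chi2, ff

def holm_vs_control(scores, control, alpha=0.05):
    """Holm step-down comparison of every algorithm against `control`.
    Returns {algorithm index: (p value, hypothesis rejected?)}."""
    n, k = len(scores), len(scores[0])
    r = average_ranks(scores)
    se = math.sqrt(k * (k + 1) / (6 * n))
    pvals = {}
    for j in range(k):
        if j != control:
            z = (r[control] - r[j]) / se
            pvals[j] = math.erfc(abs(z) / math.sqrt(2))  # two-sided normal p value
    result, rejecting = {}, True
    ordered = sorted(pvals.items(), key=lambda item: item[1])
    for i, (j, p) in enumerate(ordered, start=1):
        # Compare p_i with alpha / (k - i); once one hypothesis is retained,
        # all remaining hypotheses are retained as well.
        rejecting = rejecting and p < alpha / (k - i)
        result[j] = (p, rejecting)
    return result

table = [[0.10, 0.20, 0.30], [0.15, 0.25, 0.20], [0.05, 0.30, 0.10], [0.20, 0.10, 0.30]]
print(average_ranks(table))      # [1.25, 2.25, 2.5]
print(iman_davenport(table)[0])  # 3.5
print(holm_vs_control(table, control=0))
```

In practice, Holm's procedure would only be applied when the Iman–Davenport test is significant; the toy table with \(N = 4\) is far too small for the asymptotic approximations and only illustrates the arithmetic.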

3.2 Evaluation measures

As stated above, accuracy is not a useful measure for imbalanced data. Thus, as a general performance measure we use the \(G\)-mean defined above, that is, the geometric mean of sensitivity and specificity.
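To make the contrast with accuracy concrete, the sketch below computes the \(G\)-mean and applies it to a trivial classifier that labels every instance as negative on a hypothetical dataset with 95 negative and 5 positive instances. Accuracy is 0.95, yet the \(G\)-mean is 0 because no positive instance is recognized. Encoding the positive (minority) class as 1 and the negative class as 0 is an assumption of the example.

```python
import math

def g_mean(y_true, y_pred, positive=1):
    """Geometric mean of sensitivity (TP rate) and specificity (TN rate)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == positive and p == positive)
    fn = sum(1 for t, p in pairs if t == positive and p != positive)
    tn = sum(1 for t, p in pairs if t != positive and p != positive)
    fp = sum(1 for t, p in pairs if t != positive and p == positive)
    sensitivity = tp / (tp + fn)
    specificity = tn / (tn + fp)
    return math.sqrt(sensitivity * specificity)

# Hypothetical imbalanced dataset: 95 negatives (0) and 5 positives (1)
y_true = [0] * 95 + [1] * 5
all_negative = [0] * 100  # classifier that always predicts the majority class
accuracy = sum(t == p for t, p in zip(y_true, all_negative)) / len(y_true)
print(accuracy, g_mean(y_true, all_negative))  # 0.95 0.0
```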

However, many classifiers depend on a threshold that can be varied to achieve different values of the above measures. For such classifiers, receiver operating characteristic (ROC) curves can be constructed. A ROC curve is a graphical plot of the \(\text{TP}_\mathrm{rate}\) (sensitivity) against the \(\text{FP}_\mathrm{rate}\) (\(1 -\) specificity, i.e., \(\text{FP}_\mathrm{rate} = \frac{\text{FP}}{\text{TN} + \text{FP}}\)) of a binary classifier as its discrimination threshold is varied. A perfect model would achieve a true positive rate of 1 and a false positive rate of 0, whereas a random guess is represented by the diagonal line connecting the points \((0, 0)\) and \((1, 1)\). ROC curves therefore summarize the performance of a classifier over all possible thresholds. Furthermore, from this curve a single measure, the area under the curve (AUC), can be obtained, which is a very good overall measure for comparing algorithms. AUC is a useful metric of classifier performance because it is independent of the selected decision criterion and of the prior class probabilities.
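A short Python sketch of how a ROC curve and its AUC can be obtained from the real-valued outputs of a classifier: the threshold is lowered past each distinct score, the resulting \((\text{FP}_\mathrm{rate}, \text{TP}_\mathrm{rate})\) points are collected, and the area is accumulated with the trapezoidal rule, treating tied scores as a single threshold step. The function names and scores are made up for illustration, and both classes are assumed to be present. For the example scores, the AUC is 8/9, which is also the fraction of positive/negative pairs in which the positive instance receives the higher score.

```python
def roc_points(scores, labels):
    """(FP rate, TP rate) points obtained by lowering the threshold over the scores.
    labels: 1 for the positive (minority) class, 0 for the negative class."""
    pos = sum(labels)
    neg = len(labels) - pos
    # Process instances by decreasing score; equal scores form one threshold step.
    pairs = sorted(zip(scores, labels), key=lambda sl: -sl[0])
    points = [(0.0, 0.0)]
    tp = fp = i = 0
    while i < len(pairs):
        threshold = pairs[i][0]
        while i < len(pairs) and pairs[i][0] == threshold:
            tp += pairs[i][1]
            fp += 1 - pairs[i][1]
            i += 1
        points.append((fp / neg, tp / pos))
    return points

def auc(scores, labels):
    """Area under the ROC curve computed with the trapezoidal rule."""
    pts = roc_points(scores, labels)
    return sum((x1 - x0) * (y0 + y1) / 2 for (x0, y0), (x1, y1) in zip(pts, pts[1:]))

scores = [0.9, 0.8, 0.7, 0.6, 0.55, 0.4]
labels = [1, 1, 0, 1, 0, 0]
print(auc(scores, labels))
```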

In our experiments we report both the \(G\)-mean, which gives a snapshot of the performance of each method at a single operating point, and the AUC, which gives a more general view of its behavior.

3.3 Methods for the comparison

The first method used for comparison is undersampling the majority class until both classes have the same number of instances. We have not used oversampling methods because most previous works agree that, as a rule, undersampling performs better than oversampling [40], although a few works have found the opposite [11]. Methods that add new synthetic patterns, such as SMOTE [5], have also shown good behavior. We chose undersampling because it is very simple and performs very well, which makes it the baseline for any comparison: an algorithm that does not improve over undersampling is of very limited interest.

Random undersampling consists of randomly removing instances from the majority class until a certain criterion is met. In most works, instances are removed until both classes have the same number of instances. Several studies comparing sophisticated undersampling methods with random undersampling [31] have failed to establish a clear advantage of the former. Thus, in this work we first consider random undersampling. However, the problem with random undersampling is that many potentially useful samples from the majority class are discarded. Consequently, when the majority/minority class ratio is large, the performance of random undersampling degrades. Furthermore, when the number of minority class samples is very small, the balanced training sets are also very small, which causes further problems.
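A minimal sketch of random undersampling for a binary problem, assuming the majority class is labelled 0 and is at least as large as the minority class; the function name and arguments are hypothetical. It keeps every minority instance and a random subset of the majority class of the same size, which is the balancing criterion used in most works.

```python
import random

def random_undersample(X, y, majority=0, seed=None):
    """Randomly drop majority-class instances until both classes have equal size."""
    rng = random.Random(seed)
    maj = [i for i, label in enumerate(y) if label == majority]
    mino = [i for i, label in enumerate(y) if label != majority]
    # Sample without replacement as many majority instances as minority ones.
    keep = sorted(mino + rng.sample(maj, len(mino)))
    return [X[i] for i in keep], [y[i] for i in keep]

X = list(range(12))
y = [0] * 9 + [1] * 3
Xb, yb = random_undersample(X, y, seed=42)
print(yb.count(0), yb.count(1))  # 3 3
```

The six discarded majority instances illustrate the drawback discussed above: their information is simply lost to the learner.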

To avoid these problems, several ensemble methods have been proposed [18]. Rodríguez et al. [48] proposed ensembles of decision trees for imbalanced data, and AdaCost [52] has been proposed as an alternative to AdaBoost for imbalanced datasets.

Liu et al. [42] proposed two ensemble methods that combine undersampling and boosting to avoid this problem. This methodology is called exploratory undersampling, and the two proposed methods are called EasyEnsemble and BalanceCascade. EasyEnsemble consists of repeatedly applying the standard ensemble method AdaBoost [1] to different samples of the majority class. Algorithm 3 shows the EasyEnsemble method. The idea behind EasyEnsemble is to generate \(T\) balanced subproblems by sampling from the majority class.
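The sketch below illustrates the idea of EasyEnsemble under stated assumptions; it is not a transcription of Algorithm 3. `fit_boosted` is a hypothetical placeholder for training an AdaBoost classifier and must return a scoring function whose sign gives the predicted class (+1 for the minority class). The original formulation combines the weak learners of all members in a single weighted vote; summing the members' scores, as done here, is a simplification. `centroid_fit` is a toy one-dimensional learner used only so that the example runs.

```python
import random

def easy_ensemble(P, N, T, fit_boosted, seed=None):
    """Train T members, each on all positives P plus |P| negatives sampled from N.

    fit_boosted(X, y) is a hypothetical placeholder for AdaBoost training; it
    must return a function x -> real score whose sign is the predicted class.
    """
    rng = random.Random(seed)
    members = []
    for _ in range(T):
        N_i = rng.sample(N, len(P))  # balanced subproblem; ignores previous members
        X = list(P) + N_i
        y = [1] * len(P) + [-1] * len(N_i)
        members.append(fit_boosted(X, y))
    # Simplified combination: sign of the summed member scores.
    return lambda x: 1 if sum(h(x) for h in members) > 0 else -1

def centroid_fit(X, y):
    """Toy 1-D learner for demonstration only (NOT AdaBoost)."""
    pos = [x[0] for x, t in zip(X, y) if t == 1]
    neg = [x[0] for x, t in zip(X, y) if t == -1]
    mid = (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2
    return lambda x: x[0] - mid

P = [[5.0], [6.0], [7.0]]
N = [[0.0], [0.5], [1.0], [1.5], [2.0], [2.5]]
clf = easy_ensemble(P, N, T=5, fit_boosted=centroid_fit, seed=0)
print(clf([6.5]), clf([0.2]))  # 1 -1
```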

EasyEnsemble is an unsupervised strategy for exploring the set of negative instances, \(\mathcal N\), as the sampling is performed without using any information from the classification performed by the previous members of the ensemble. In contrast, BalanceCascade explores \(\mathcal N\) in a supervised manner, removing from the majority class those instances that have been correctly classified by the previous classifiers added to the ensemble. BalanceCascade is shown in Algorithm 4.
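A corresponding sketch of BalanceCascade, again with `fit_boosted` as a hypothetical AdaBoost placeholder, a toy `centroid_fit` learner, and a simplified final combination. Following Liu et al. [42], we assume that after each round the threshold of the new member is adjusted so that its false positive rate on the remaining negatives is \(f = (|\mathcal P|/|\mathcal N|)^{1/(T-1)}\), where \(\mathcal P\) is the set of positive instances, and that the correctly classified negatives are then removed. Keeping the \(f|\mathcal N|\) highest-scored negatives is equivalent to that removal, so after \(T-1\) rounds \(\mathcal N\) has shrunk to roughly \(|\mathcal P|\) instances.

```python
import random

def balance_cascade(P, N, T, fit_boosted, seed=None):
    """Sketch of BalanceCascade (T >= 2); returns (classifier, |N| at each round).

    fit_boosted is a hypothetical AdaBoost placeholder returning x -> real score.
    """
    rng = random.Random(seed)
    f = (len(P) / len(N)) ** (1 / (T - 1))  # target false positive rate per round
    N = list(N)
    members, sizes = [], []
    for i in range(T):
        sizes.append(len(N))
        N_i = rng.sample(N, len(P))
        h = fit_boosted(list(P) + N_i, [1] * len(P) + [-1] * len(N_i))
        members.append(h)
        if i < T - 1:
            # Thresholding h so that a fraction f of N stays misclassified and
            # removing the correctly classified negatives = keeping the top-scored ones.
            N.sort(key=h, reverse=True)
            N = N[:max(len(P), round(f * len(N)))]

    def classifier(x):
        # Simplified combination: sign of the summed member scores.
        return 1 if sum(m(x) for m in members) > 0 else -1

    return classifier, sizes

def centroid_fit(X, y):
    """Toy 1-D learner for demonstration only (NOT AdaBoost)."""
    pos = [x[0] for x, t in zip(X, y) if t == 1]
    neg = [x[0] for x, t in zip(X, y) if t == -1]
    mid = (sum(pos) / len(pos) + sum(neg) / len(neg)) / 2
    return lambda x: x[0] - mid

P = [[8.0], [9.0], [10.0]]
N = [[v / 10] for v in range(24)]
clf, sizes = balance_cascade(P, N, T=4, fit_boosted=centroid_fit, seed=0)
print(sizes)  # [24, 12, 6, 3]
```

Here \(f = (3/24)^{1/3} = 0.5\), so the negative set is halved at every round, and later members concentrate on the negatives that earlier members found hardest.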

We also used standard AdaBoost as an additional method in our experiments. Thus, our algorithm was compared against four state-of-the-art methods: undersampling, EasyEnsemble, BalanceCascade and AdaBoost.

4 Experimental results

In this section we compare GESuperPBoost with the standard methods described above. We also present a control experiment to rule out the possibility that the good performance of GESuperPBoost is due to the introduction of a non-linear projection regardless of how the projection is obtained.

4.1 Comparison with standard methods

The aim of these experiments was to test whether our approach based on non-linear projections is competitive with the standard methods. Thus, we carried out experiments using the two base learners and the methods described in Sect. 3.3. The results, in terms of \(G\)-mean and AUC, are shown in Table 2 for C4.5 and in Table 3 for SVM. The Iman–Davenport test obtained a \(p\) value of 0.0000 for all four comparisons (two base learners and two measures), meaning that there were significant differences among the methods.

Figures 1 and 2 show the average Friedman ranks for C4.5 and SVM, respectively. These ranks are, by themselves, a good measure of the relative performance of a group of methods. In terms of ranks, with a decision tree as base learner, GESuperPBoost was the best method for both AUC and \(G\)-mean. Undersampling achieved good results for \(G\)-mean, but its performance in terms of AUC was poor. Among the standard methods, BalanceCascade achieved the most balanced behavior, with good results for both measures.

The results using an SVM as base learner showed some noticeable differences. In terms of AUC, GESuperPBoost was better than the remaining methods. However, in terms of \(G\)-mean, all methods except AdaBoost showed similar behavior. AdaBoost had the worst combined behavior of the five methods. This is not an unexpected result, as the SVM is a stable method with respect to sampling, and this stability makes AdaBoost less effective when an SVM is used as base learner.

The results of the four standard methods against GESuperPBoost are illustrated in Figs. 3 and 4 for C4.5 and SVM, respectively. The figures show performance in terms of both AUC and \(G\)-mean. This graphic representation is based on the \(\kappa\)-error relative movement diagrams [43]; however, here we use the AUC and \(G\)-mean differences instead of the \(\kappa\) difference and the accuracy. These diagrams use an arrow to represent the results of two methods applied to the same dataset. The arrow starts at the coordinate origin, and the coordinates of its tip are the differences between the AUC and \(G\)-mean of our method and those of the standard method, with the AUC difference on the horizontal axis and the \(G\)-mean difference on the vertical axis. These graphs are a convenient way to summarize the results. A positive value in either AUC or \(G\)-mean means that our method performed better. Thus, arrows pointing up-right represent datasets for which our method outperformed the standard algorithm in both AUC and \(G\)-mean. Arrows pointing up-left indicate that our algorithm improved \(G\)-mean but had worse AUC, whereas arrows pointing down-right indicate that it improved AUC but had worse \(G\)-mean. Arrows pointing down-left indicate that our algorithm performed worse in both measures.
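The arrow coordinates are simply per-dataset differences. The sketch below, with a hypothetical function name and made-up values that are not results from Tables 2 and 3, computes each arrow tip and the quadrant it falls in, following the convention implied by the description of the diagrams: up/down for the \(G\)-mean difference and right/left for the AUC difference.

```python
def movement_arrows(ours, other):
    """ours, other: lists of (AUC, G-mean) pairs, one per dataset.
    Returns ((dAUC, dGmean), quadrant) for each dataset."""
    arrows = []
    for (auc_a, g_a), (auc_b, g_b) in zip(ours, other):
        dx, dy = auc_a - auc_b, g_a - g_b  # x: AUC difference, y: G-mean difference
        # Zero differences are reported as "down"/"left" here, an arbitrary choice.
        quadrant = ("up" if dy > 0 else "down") + "-" + ("right" if dx > 0 else "left")
        arrows.append(((dx, dy), quadrant))
    return arrows

ours = [(0.90, 0.85), (0.80, 0.70)]   # made-up values for two datasets
other = [(0.85, 0.80), (0.82, 0.75)]
print([q for _, q in movement_arrows(ours, other)])  # ['up-right', 'down-left']
```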

Inspecting the results, we see that most of the arrows point up-right. This behavior is especially common when C4.5 is the base learner. For the SVM, there is a clear advantage in terms of AUC, as most arrows point right, but the differences in terms of \(G\)-mean are more evenly distributed.

However, the differences shown in the figures must be corroborated by statistical tests to confirm the advantage of GESuperPBoost. We performed a Holm procedure to ascertain the differences between our method and the four standard algorithms, as described in Sect. 3.1. Figures 5 and 6 show the results of the Holm test using our approach as the control method and a decision tree and an SVM as base learners, respectively.

For C4.5, the test shows that GESuperPBoost was better than all the other methods for both AUC and \(G\)-mean, with differences significant at a confidence level of 95 %. These results corroborate the differences shown by the ranks in Fig. 1. The case of an SVM as base learner is somewhat different. In terms of AUC, the differences are significant in favor of our method against all the standard methods. However, the Holm test fails to find significant differences between our method and undersampling, EasyEnsemble and BalanceCascade for the \(G\)-mean. Nevertheless, as explained above, AUC is a more reliable measure than \(G\)-mean.

Notably, AdaBoost obtained very poor \(G\)-mean results with both base learners. There is an explanation for these results. As a rule, AdaBoost improved specificity while worsening sensitivity. Because AdaBoost focuses on the overall error, it invested its effort in the negative class, which is more numerous and thus has a greater impact on the overall error. This feature, useful for balanced datasets, is a serious drawback for class-imbalanced datasets.

4.2 Control experiment

It is well known that classifier diversity is an important feature [35] for the construction of any ensemble of classifiers, and this also holds for class-imbalanced datasets [54]. It may be argued that the performance of our approach was due to the diversity introduced by the non-linear projection itself, rather than by the supervised projection obtained by means of the RCGA. To test this possibility, we performed a control experiment. We constructed ensembles with an additional non-linear projection, as in GESuperPBoost, but with the coefficients of the projection randomly drawn from the interval \([-1, 1]\). In this way, we could check whether the mere introduction of a random projection was able to obtain the same results as our method. Table 4 shows the comparison between GESuperPBoost and this random projection method using the Wilcoxon test.

The table shows that the good performance of GESuperPBoost was not due to the projection of the inputs alone, as the random projection method was worse than GESuperPBoost for both base learners and according to both measures.
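For illustration only, the sketch below draws every coefficient of a projection uniformly from \([-1, 1]\), as in the control experiment. The \(\tanh(W\mathbf x + b)\) form, the function name and the dimensions are assumptions of this sketch; they are not necessarily the non-linear projection used by GESuperPBoost, whose coefficients are instead evolved by the RCGA.

```python
import math
import random

def random_nonlinear_projection(dim_in, dim_out, seed=None):
    """Control-experiment projection: every coefficient is drawn uniformly
    from [-1, 1] instead of being evolved.

    ASSUMPTION: the form tanh(W x + b) is only illustrative; it is not
    necessarily the non-linear projection used by GESuperPBoost.
    """
    rng = random.Random(seed)
    W = [[rng.uniform(-1, 1) for _ in range(dim_in)] for _ in range(dim_out)]
    b = [rng.uniform(-1, 1) for _ in range(dim_out)]

    def project(x):
        # One non-linear output per row of W.
        return [math.tanh(sum(w * xi for w, xi in zip(row, x)) + bj)
                for row, bj in zip(W, b)]

    return project, W, b

project, W, b = random_nonlinear_projection(dim_in=4, dim_out=3, seed=1)
z = project([0.5, -1.0, 2.0, 0.0])
print(len(z))  # 3
```

In a control ensemble, such a randomly projected representation would take the place of the one produced by the RCGA while the rest of the construction stays unchanged, so any loss of performance can be attributed to the source of the coefficients.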

5 Conclusions and future work

In this paper we have presented a new method for constructing ensembles that combines the principles of boosting with the construction of supervised projections by means of a real-coded genetic algorithm. Using a supervised projection, instead of the standard resampling or reweighting of boosting, seems appropriate for class-imbalanced datasets. We combine this method with undersampling to make it more scalable and to obtain better results.

Our experiments have shown that the proposed method achieved better results than undersampling and three different boosting methods, two of which are specifically designed for class-imbalanced datasets and have performed well in previous papers [26].

The main drawback of our method is its limited scalability. Although this problem is ameliorated by introducing undersampling as a preliminary step, it may still be a serious handicap when dealing with large datasets. Thus, our current research focuses on improving the scalability of the method by means of the democratization of learning algorithms [19].

References

Bauer, E., Kohavi, R.: An empirical comparison of voting classification algorithms: bagging, boosting, and variants. Mach. Learn. 36(1/2), 105–142 (1999)

Breiman, L.: Bias, variance, and arcing classifiers. Tech. Rep. 460, Department of Statistics, University of California, Berkeley (1996)

Breiman, L.: Stacked regressions. Mach. Learn. 24(1), 49–64 (1996)

Chang, C.C., Lin, C.J.: LIBSVM: a library for support vector machines (2001)

Chawla, N.V., Bowyer, K.W., Hall, L.O., Kegelmeyer, W.P.: SMOTE: synthetic minority over-sampling technique. J. Artif. Intell. Res. 16, 321–357 (2002)

Cristianini, N., Shawe-Taylor, J.: An Introduction to Support Vector Machines. Cambridge University Press, Cambridge (2000)

Demšar, J.: Statistical comparisons of classifiers over multiple data sets. J. Mach. Learn. Res. 7, 1–30 (2006)

Dietterich, T.G.: Ensemble methods in machine learning. In: Kittler, J., Roli, F. (eds.) Proceedings of the First International Workshop on Multiple Classifier Systems, Lecture Notes in Computer Science, vol. 1857, pp. 1–15. Springer (2000)

Dietterich, T.G.: An experimental comparison of three methods for constructing ensembles of decision trees: bagging, boosting, and randomization. Mach. Learn. 40, 139–157 (2000)

Dzeroski, S., Zenko, B.: Is combining classifiers with stacking better than selecting the best one? Mach. Learn. 54, 255–273 (2004)

Estabrooks, A., Jo, T., Japkowicz, N.: A multiple resampling method for learning from imbalanced data sets. Comput. Intell. 20(1), 18–36 (2004)

Fern, A., Givan, R.: Online ensemble learning: an empirical study. Mach. Learn. 53, 71–109 (2003)

Fernández, A., Jesús, M.J.D., Herrera, F.: Multi-class imbalanced data-sets with linguistic fuzzy rule based classification systems based on pairwise learning. In: Proceedings of the Computational intelligence for knowledge-based systems design, and 13th international conference on Information processing and management of uncertainty, IPMU’10, pp. 89–98. Springer, Berlin (2010)

Frank, A., Asuncion, A.: UCI machine learning repository (2010). http://archive.ics.uci.edu/ml

Freund, Y., Schapire, R.: Experiments with a new boosting algorithm. In: Proceedings of the Thirteenth International Conference on Machine Learning, pp. 148–156. Bari (1996)

Freund, Y., Schapire, R.E.: A decision-theoretic generalization of on-line learning and an application to boosting. J. Comput. Syst. Sci. 55(1), 119–139 (1997)

Friedman, J.H., Hastie, T., Tibshirani, R.: Additive logistic regression: a statistical view of boosting. Ann. Stat. 28(2), 337–407 (2000)

Galar, M., Fernández, A., Barrenechea, E., Bustince, H., Herrera, F.: A review on ensembles for the class imbalance problem: bagging-, boosting-, and hybrid-based approaches. IEEE Trans. Syst. Man Cybern. C Appl. Rev. 42, 463–484 (2012)

García-Osorio, C., de Haro-García, A., García-Pedrajas, N.: Democratic instance selection: a linear complexity instance selection algorithm based on classifier ensemble concepts. Artif. Intell. 174, 410–441 (2010)

García-Pedrajas, N.: Constructing ensembles of classifiers by means of weighted instance selection. IEEE Trans. Neural Netw. 20(2), 258–277 (2008)

García-Pedrajas, N.: Supervised projection approach for boosting classifiers. Pattern Recognit. 42, 1741–1760 (2009)

García-Pedrajas, N., García-Osorio, C.: Constructing ensembles of classifiers using supervised projection methods based on misclassified instances. Expert Syst. Appl. 38(1), 343–359 (2010)

García-Pedrajas, N., García-Osorio, C., Fyfe, C.: Nonlinear boosting projections for ensemble construction. J. Mach. Learn. Res. 8, 1–33 (2007)

García-Pedrajas, N., de Haro-García, A.: Scaling up data mining algorithms: review and taxonomy. Progr. Artif. Intell. 1, 71–87 (2012)

García-Pedrajas, N., Hervás-Martínez, C., Ortiz-Boyer, D.: Cooperative coevolution of artificial neural network ensembles for pattern classification. IEEE Trans. Evol. Comput. 9(3), 271–302 (2005)

García-Pedrajas, N., Pérez-Rodríguez, J., García-Pedrajas, M.D., Ortiz-Boyer, D., Fyfe, C.: Class imbalance methods for translation initiation site recognition in DNA sequences. Knowl. Based Syst. 25, 22–34 (2012)

Geng, X., Zhan, D.C., Zhou, Z.H.: Supervised nonlinear dimensionality reduction for visualization and classification. IEEE Trans. Syst. Man Cybern. B Cybern. 35(6), 1098–1107 (2005)

He, H., Garcia, E.A.: Learning from imbalanced data. IEEE Trans. Knowl. Data Eng. 21, 1263–1284 (2009)

Herrera, F., Lozano, M., Verdegay, J.L.: Tackling real-coded genetic algorithms: operators and tools for behavioural analysis. Artif. Intell. Rev. 12, 265–319 (1998)

Ho, T.K.: The random subspace method for constructing decision forests. IEEE Trans. Pattern Anal. Mach. Intell. 20(8), 832–844 (1998)

Japkowicz, N.: The class imbalance problem: significance and strategies. In: Proceedings of the 2000 International Conference on Artificial Intelligence (IC-AI'2000): Special Track on Inductive Learning, vol. 1, pp. 111–117. Las Vegas (2000)

Kohavi, R., Kunz, C.: Option decision trees with majority voting. In: Proceedings of the Fourteenth International Conference on Machine Learning, pp. 161–169. Morgan Kaufmann, San Francisco (1997)

Kruskal, J.B.: Toward a practical method which helps uncover the structure of a set of multivariate observations by finding the linear transformation which optimizes a new ‘index of condensation’. In: Milton, R.C., Nelder, J.A. (eds.) Statistical Computing, pp. 427–440. Academic Press, London (1969)

Kubat, M., Matwin, S.: Addressing the curse of imbalanced training sets: One-sided selection. In: Proceedings of the Fourteenth International Conference on Machine Learning, pp. 179–186. Morgan Kaufmann (1997)

Kuncheva, L., Whitaker, C.J.: Measures of diversity in classifier ensembles and their relationship with the ensemble accuracy. Mach. Learn. 51(2), 181–207 (2003)

Lee, E., Cook, D., Klinke, S., Lumley, T.: Projection pursuit for exploratory supervised classification. J. Comput. Graph. Stat. 14(4), 831–846 (2005)

Lee, Y., Ahn, D., Moon, K.: Margin preserving projections. Electron. Lett. 42(21), 1249–1250 (2006)

Lerner, B., Guterman, H., Aladjem, M., Dinstein, I., Romem, Y.: On pattern classification with Sammon's nonlinear mapping: an experimental study. Pattern Recognit. 31(4), 371–381 (1998)

Li, C.J., Jansuwan, C.: Dynamic projection network for supervised pattern classification. Int. J. Approx. Reason. 40, 243–261 (2005)

Li, X., Yan, Y., Peng, Y.: The method of text categorization on imbalanced datasets. In: Proceedings of the 2009 International Conference on Communication Software and Networks, pp. 650–653 (2009)

Ling, C., Li, G.: Data mining for direct marketing: problems and solutions. In: Proceedings of the Fourth International Conference on Knowledge Discovery and Data Mining (KDD-98), pp. 73–79. AAAI Press, New York (1998)

Liu, X.Y., Wu, J., Zhou, Z.H.: Exploratory undersampling for class-imbalance learning. IEEE Trans. Syst. Man Cybern. B Cybern. 39(2), 539–550 (2009)

Maudes-Raedo, J., Rodríguez-Díez, J.J., García-Osorio, C.: Disturbing neighbors diversity for decision forest. In: Valentini, G., Okun, O. (eds.) Workshop on Supervised and Unsupervised Ensemble Methods and Their Applications (SUEMA 2008), pp. 67–71. Patras, Greece (2008)

Merz, C.J.: Using correspondence analysis to combine classifiers. Mach. Learn. 36(1), 33–58 (1999)

Michalewicz, Z.: Genetic Algorithms + Data Structures = Evolution Programs. Springer, New York (1994)

Polzehl, J.: Projection pursuit discriminant analysis. Comput. Stat. Data Anal. 20(2), 141–157 (1995)

Quinlan, J.R.: C4.5: Programs for Machine Learning. Morgan Kaufmann, San Mateo (1993)

Rodríguez, J.J., Díez-Pastor, J.F., García-Osorio, C.: Ensembles of decision trees for imbalanced data. Lect. Notes Comput. Sci. 6713, 76–85 (2011)

Rodríguez, J.J., Kuncheva, L.I., Alonso, C.J.: Rotation forest: a new classifier ensemble method. IEEE Trans. Pattern Anal. Mach. Intell. 28(10), 1619–1630 (2006)

Schapire, R.E., Freund, Y., Bartlett, P.L., Lee, W.S.: Boosting the margin: a new explanation for the effectiveness of voting methods. Ann. Stat. 26(5), 1651–1686 (1998)

Schapire, R.E., Singer, Y.: Improved boosting algorithms using confidence-rated predictions. Mach. Learn. 37, 297–336 (1999)

Sun, Y., Kamel, M.S., Wong, A.K., Wang, Y.: Cost-sensitive boosting for classification of imbalanced data. Pattern Recognit. 40, 3358–3378 (2007)

Tenenbaum, J.B., de Silva, V., Langford, J.C.: A global geometric framework for nonlinear dimensionality reduction. Science 290(5500), 2319–2323 (2000)

Wang, S.: Ensemble diversity for class imbalance learning. Ph.D. thesis, University of Birmingham (2011)

Webb, G.I.: Multiboosting: a technique for combining boosting and wagging. Mach. Learn. 40(2), 159–196 (2000)

Weiss, G.M., Provost, F.: The effect of class distribution on classifier learning: an empirical study. Tech. Rep. TR-43, Department of Computer Science, Rutgers University (2001)

Wilcoxon, F.: Individual comparisons by ranking methods. Biometrics 1, 80–83 (1945)

Yu, S., Yu, K., Tresp, V., Kriegel, H.P.: Multi-output regularized feature projection. IEEE Trans. Knowl. Data Eng. 18(12), 1600–1613 (2006)

Zhao, H., Sun, S., Jing, Z., Yang, J.: Local structure based supervised feature extraction. Pattern Recognit. 39, 1546–1550 (2006)

Acknowledgments

This work was supported in part by the Grant TIN2008-03151 of the Spanish "Comisión Interministerial de Ciencia y Tecnología" and the Grant P09-TIC-4623 of the Regional Government of Andalucía.

About this article

Cite this article

García-Pedrajas, N., García-Osorio, C. Boosting for class-imbalanced datasets using genetically evolved supervised non-linear projections. Prog Artif Intell 2, 29–44 (2013). https://doi.org/10.1007/s13748-012-0028-4

DOI: https://doi.org/10.1007/s13748-012-0028-4