Abstract

In this brief review, we argue that attention operates along a hierarchy from peripheral through central mechanisms. We further argue that these mechanisms are distinguished not just by their functional roles in cognition, but also by a distinction between serial mechanisms (associated with central attention) and parallel mechanisms (associated with midlevel and peripheral attention). In particular, we suggest that peripheral attentional deployments in distinct representational systems may be maintained simultaneously with little or no interference, but that the serial nature of central attention means that even tasks that largely rely on distinct representational systems will come into conflict when central attention is demanded. We go on to review both the behavioral and neural evidence for this prediction. We conclude that even though the existing evidence mostly favors our account of serial central and parallel noncentral attention, we know of no experiment that has conclusively borne out these claims. This article therefore offers a framework of attentional mechanisms intended to guide future research on this topic.


Varieties of attention

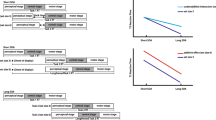

Attention is often divided into two broad categories: vigilance/arousal and selective attention (note 1). This review focuses on the latter category. Most often, selective attention is used to refer to peripheral selective attention—that is, selective attention to perceptual items and events. However, selective attention is not a single phenomenon, but instead consists of several mechanisms lying within a hierarchy spanning two extremes: central and peripheral attention (Broadbent, 1958; Kahneman, 1973; Posner & Boies, 1971). Though we argue that peripheral and central forms of attention mark two ends of a hierarchy (Fig. 1), much of the literature has adopted a simpler view in which selective attention is dichotomized between central and peripheral forms. In both simplified and more complex views, peripheral attention engages with the external world and with representations closely associated with the external world (e.g., working memory representations of sensory information), whereas central representations and processes are more “cognitive,” and often are much more abstract in nature (see, e.g., Badre, 2008). In our view, central attention controls peripheral attention indirectly, in that central attention represents and updates goals and task sets that ultimately influence peripheral representations via the action of middle levels of the attentional hierarchy. Central attention may also influence future central attentional states, such as by sequencing subgoals (Duncan, 2010). Classically, the most central attentional processes have been associated with executive or cognitive control—that is, with the ability to shape cognitive processes, representations, and behaviors in accord with task goals.
However, the scope of central attention beyond these executive functions has been imprecisely defined, sometimes extending to more prosaic control functions, such as control of the spatial locus of attention (e.g., Serences & Yantis, 2006; Yantis, 2008), which we suggest are better viewed as midlevel attention. Below we briefly summarize the conventional views of peripheral and central attention, and then describe our own view.

Fig. 1 Proposed hierarchy of attention. Central attention is serial, whereas midlevel and peripheral attention are parallel across representational formats; that is, they may be deployed over distinct formats without interference. Though representational formats and sensory modalities are often conflated, we propose that they may be dissociated. However, there are preferential relationships between modalities and formats, as we discuss in the text (cf. Michalka et al., 2015). Here, note that we use heavy lines to depict the strong/preferred connections from visual sensation to the visual object-feature and spatial representational formats. However, we use a light line to depict the weaker/nonpreferred connection from visual sensation to the sequence representational format, and we draw no connection from visual sensation to the auditory object-feature representational format (see the main text and note 3). A similar pattern holds for the connections from auditory sensation.

Peripheral selective attention

Selective attention is the allocation of limited processing capacity to one or a small number of items at the expense of other items. Selective attention has been primarily studied in the context of sensory perception, generally yielding the result that attended sensory items are perceived more quickly and/or accurately than unattended items. Such processing benefits have been demonstrated extensively for locations in space, but also for perceptual objects and features. Attention deployed within sensory systems and the representations they generate is generally considered to be peripheral in nature; that is, perceptual attention is sustained locally in the relevant representations via biases in stimulus competition that favor the representation of attended stimuli (Desimone & Duncan, 1995; Serences & Yantis, 2006, 2007; Yantis, 2008), even though these peripheral biases are the result of sustained input from elsewhere in the brain (Kelley, Serences, Giesbrecht, & Yantis, 2008; Tamber-Rosenau, Asplund, & Marois, Manuscript in revision; Yantis et al., 2002).

Selective attention may also be exerted over internal representations that do not directly derive from immediate sensory input (Chun, Golomb, & Turk-Browne, 2011), such as items in short-term or working memory (Garavan, 1998; Tamber-Rosenau, Esterman, Chiu, & Yantis, 2011). Identifying selective attention to working memory items as being central, midlevel, or peripheral in nature is difficult, in part because items in memory are represented redundantly throughout the brain. Such representations have been reported in human sensory cortex (e.g., early visual cortex; Harrison & Tong, 2009; Serences, Ester, Vogel, & Awh, 2009), parietal cortex (Bettencourt & Xu, 2016; Christophel, Hebart, & Haynes, 2012; Todd & Marois, 2004; Xu & Chun, 2006), and frontal/prefrontal cortex (Ester, Sprague, & Serences, 2015; Sprague, Ester, & Serences, 2014)—spanning brain regions associated with all levels of the attentional hierarchy, from the most peripheral to the most central. Understanding the role of these seemingly redundant memory representations (including whether they actually play a causal role in working memory; Mackey, Devinsky, Doyle, Meager, & Curtis, 2016) is a fundamental problem of cognitive neuroscience and is beyond the scope of this article. Nevertheless, it seems likely that these multiple representations serve distinct roles, even if typical measures and analysis methods (such as spiking activity, blood-oxygenation-level-dependent activation, multivariate pattern analysis, and forward-encoding models) can extract duplicate information from them. Our working hypothesis is that posterior representations of working memory items are closely related to sensory representations (Serences et al., 2009), and thus are peripheral in nature. We do not suppose that all neural representations from which memory item information may be extracted are similarly peripheral.
For instance, prefrontal representations may be deeply related to task rules (Cole, Bagic, Kass, & Schneider, 2010; Cole, Etzel, Zacks, Schneider, & Braver, 2011; Cole, Ito, & Braver, 2016; Montojo & Courtney, 2008) or may represent an interaction of memory information and task goals (Duncan, 2001; Kennerley & Wallis, 2009; Woolgar, Hampshire, Thompson, & Duncan, 2011), and thus may be more central than peripheral in nature.

Central attention

In addition to the ability to select subsets of external or internal representations, the term attention has been applied to more “central” kinds of selective information processing, such as that associated with the mapping of perceptual decisions to motor or other responses—that is, response selection (Dux, Ivanoff, Asplund, & Marois, 2006; Dux et al., 2009; Pashler, 1994). The same central attention processes have been shown to be demanded by a wide variety of additional tasks and their underlying information-processing demands (Marois & Ivanoff, 2005), including working memory encoding of selected information from perception (Jolicœur & Dell’Acqua, 1999; Scalf, Dux, & Marois, 2011; Tombu et al., 2011); rapid allocation of attention and working memory encoding in time, so as to encode only a briefly presented target while excluding temporally preceding and following distractors, such as in the attentional blink paradigm (Dux & Marois, 2009; Marti, Sigman, & Dehaene, 2012); and task switching (Norman & Shallice, 1986). In such studies, simultaneous or temporally proximate demands on central attention lead to behavioral decrements in accuracy or slowed response times, revealing that central attention is the locus of a capacity-limiting bottleneck on information processing in a wide variety of tasks. 
Much research has been devoted to describing the structure of such central attention, and models have proposed everything from a single, domain-general, unified central executive (Baddeley, 1986; Bunge, Klingberg, Jacobsen, & Gabrieli, 2000; Fedorenko, Duncan, & Kanwisher, 2013; Kahneman, 1973; Moray, 1967) or a central “procedural resource” that maps the outputs from peripheral systems to new demands on the peripheral systems (Salvucci & Taatgen, 2008), to a series of central resources or processes that may be organized along one or more dimensions (Baddeley, 1998; Miyake et al., 2000; Navon & Gopher, 1979; Tamber-Rosenau, Newton, & Marois, Manuscript in revision; Wickens, 1984, 2008). What is clear is that central attention is closely associated with, or overlaps with, what have been termed executive or cognitive control functions. Central attention is thus thought to be the locus of important capacity limits on cognition.

Proposed architecture of attention

We suggest that attention is best viewed as operating at a series of levels arranged in a hierarchy, from central control of goals and tasks in the prefrontal cortex, through midlevel translation of goals into biasing signals by dorsal brain regions (the frontal eye fields [FEF] and intraparietal sulcus [IPS]), to peripheral representations (in posterior cortex) that receive the biasing signals and reconcile them with sensory and mnemonic signals (Fig. 1). We will describe this architecture in more detail later in this article, after reviewing the existing models from which we draw inspiration and that we wish to reconcile with one another. A key reason for laying out our proposal in this review is that previous models have frequently dichotomized attention into central and peripheral, without agreement on where the boundaries between these extremes belong, whereas we argue that (at least) three, not two, levels are necessary to encompass the previously reported data and to describe the hierarchy of selective-attentional phenomena. A secondary goal of this article is to provide a consistent set of terminology, so that future works will not apply the same label (e.g., “central”) to distinct levels of the attentional hierarchy.

Summary

The term attention has long been applied to a diverse set of processes, rather than to a single phenomenon. Though many taxonomies of attention have been proposed, we find it useful to envision a multi-level hierarchy from peripheral attention, deployed within representational systems that are closely tied to sensory systems (at the bottom of the hierarchy), to central attention, as a resource or critical stage of representing and processing abstract information such as goals and rules (at the top of the hierarchy). Such central attention is the locus of capacity limits in many behaviors. In the following section, we address the relationship between peripheral and central attention.

Models of central and peripheral attention

Early versus late selection and perceptual load theory

As should be evident from the foregoing section, the distinction between peripheral and central attention is not always completely clear, and we favor a model that envisions more than just two levels of attention (Fig. 1). Much research has attempted to delineate the divisions and relationships between peripheral and central attention. An early example of such a linkage was the early-versus-late-selection debate. Early-selection models propose that peripheral attentional systems filter task-irrelevant information, such that the information is not processed beyond initial perceptual registration (Broadbent, 1958). Late-selection models instead propose that all incoming perceptual information is processed to a high—for instance, semantic—level, and task-relevant and task-irrelevant information are distinguished at the level of response selection (Deutsch & Deutsch, 1963)—that is, by central attention. After decades of debate, a resolution seemed to be at hand with hybrid early-/late-selection models (Yantis & Johnston, 1990) and, in particular, perceptual load theory (Lavie, Hirst, de Fockert, & Viding, 2004; Lavie & Tsal, 1994; Vogel, Woodman, & Luck, 2005). The key contribution of these theories is the proposal that selection occurs at multiple levels of representation and processing (see also Hopf et al., 2006; Johnston & McCann, 2006; Treisman & Davies, 1973). Our proposal specifies the nature of selection at several levels of the attentional hierarchy.

Sources and targets of attention

Another proposed linkage between central and peripheral attention is the idea that representations of attentional priority, which are embodied in frontal and parietal cortex and which we consider to be at the middle level of the attentional hierarchy, instantiate an attentional set in peripheral representations (Serences & Yantis, 2006; Yantis, 2008) by biasing competition within loci of peripheral representation such as sensory cortex (Desimone & Duncan, 1995). According to this framework, the action of these biases, which favor the processing of some information over other information, is identical to the phenomenon of peripheral attention. Thus, frontoparietal brain regions (including the spatiotopic maps in FEF and IPS) serve as sources of control, and they exert their effects on targets of control such as sensory cortex and posterior working memory representations, which therefore contain biased representations that instantiate peripheral attention. In our view, sources of control in FEF and IPS serve to translate the high-level goals set by the most central attention (primarily localized to prefrontal cortex) into concrete biases in peripheral representations (in sensory cortex and posterior working memory representations).
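The source/target relationship can be made concrete with a toy illustration. The sketch below assumes a deliberately simple form of biased competition that is not taken from the cited work: each item's sensory drive is scaled by a gain from a hypothetical midlevel priority map, and the items then share a fixed response budget through divisive normalization. The function name `biased_competition` and all numbers are illustrative only.

```python
def biased_competition(drives, biases):
    """Toy biased competition (illustrative assumption, not a published model).

    drives: sensory drive of each competing item in a peripheral representation
    biases: multiplicative gain from a hypothetical midlevel source of control
    Returns normalized responses that sum to 1, so boosting one item
    necessarily suppresses its competitors.
    """
    if len(drives) != len(biases):
        raise ValueError("need exactly one bias per item")
    weighted = [d * b for d, b in zip(drives, biases)]
    total = sum(weighted)
    if total <= 0:
        raise ValueError("total weighted drive must be positive")
    return [w / total for w in weighted]


# Two equally strong stimuli, with and without a priority bias toward item 0
unbiased = biased_competition([1.0, 1.0], [1.0, 1.0])
biased = biased_competition([1.0, 1.0], [2.0, 1.0])
print(unbiased)  # [0.5, 0.5]
print(biased)    # item 0 now wins the competition
```

In this sketch the bias is specified at the source (the `biases` argument), but its effect appears only in the target (the returned responses), loosely mirroring the claim that peripheral attention is the biased state of the target representation.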

These peripheral targets of the biasing signals from sources of control (midlevel attention) are embodied throughout early and high-level sensory cortex (Serences & Yantis, 2006; Yantis, 2008), as well as in parietal working memory representations (Bettencourt & Xu, 2016; Christophel et al., 2012), and comprise the most peripheral level of attention (Fig. 1). We divide this lowest level into two domains: representational formats and sensory systems. Examples of representational formats include spatial information, visual object features (e.g., color, spatial frequency, orientation), auditory object features (e.g., the pitch of a tone, or individual phonemes; see note 2), and sequence/temporal information (e.g., language or music); examples of sensory systems include the visual system, the auditory system, and other modality-specific perceptual systems. Critically, though the information from each sensory system can in principle give rise to representation in several formats (perhaps directly, but certainly via active recoding that relies on more central attention), each sensory system has one or more preferred representational formats and associated attentional control mechanisms (Michalka, Kong, Rosen, Shinn-Cunningham, & Somers, 2015; O’Connor & Hermelin, 1972; Welch & Warren, 1980). In particular, the visual system defaults to spatial and visual object-feature representational formats—the familiar what and where streams (Mishkin, Ungerleider, & Macko, 1983)—whereas the auditory system defaults to sequence and auditory object-feature formats. We emphasize that although each sensory system has such preferred representational formats, a sensory system can also feed into other representational formats (see the heavy vs. light lines at the bottom levels of Fig. 1).
This nonorthogonal relationship between sensory systems and representational formats has frequently posed challenges in interpreting experimental results (e.g., Fougnie, Zughni, Godwin, & Marois, 2015; Saults & Cowan, 2007). The distinction between representational formats and sensory systems is important, because we suggest that attention functions in parallel across representational formats, not across sensory systems per se (note 3).
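The nonorthogonal mapping between sensory systems and representational formats in Fig. 1 can be written down as a small lookup table. In this sketch the visual row follows the figure caption; the auditory row is an assumption that mirrors it, since the caption states only that a similar pattern holds, and the specific weak auditory-to-spatial link is our guess. The helper names `default_formats` and `reachable_formats` are hypothetical.

```python
from enum import Enum


class Format(Enum):
    SPATIAL = "spatial"
    VISUAL_OBJECT_FEATURE = "visual object-feature"
    AUDITORY_OBJECT_FEATURE = "auditory object-feature"
    SEQUENCE = "sequence"


PREFERRED = "preferred"        # heavy line in Fig. 1
NONPREFERRED = "nonpreferred"  # light line in Fig. 1
# A format missing from a row means no connection at all.

CONNECTIONS = {
    "visual": {
        Format.SPATIAL: PREFERRED,
        Format.VISUAL_OBJECT_FEATURE: PREFERRED,
        Format.SEQUENCE: NONPREFERRED,
    },
    # Assumed by analogy with the visual row ("a similar pattern holds").
    "auditory": {
        Format.SEQUENCE: PREFERRED,
        Format.AUDITORY_OBJECT_FEATURE: PREFERRED,
        Format.SPATIAL: NONPREFERRED,
    },
}


def default_formats(system):
    """Formats a sensory system feeds by default (heavy lines only)."""
    return {fmt for fmt, kind in CONNECTIONS[system].items() if kind == PREFERRED}


def reachable_formats(system):
    """Formats a sensory system can feed at all (heavy or light lines)."""
    return set(CONNECTIONS[system])


# Distinct modalities, yet some formats are reachable from both,
# so modality alone does not guarantee distinct formats.
shared = reachable_formats("visual") & reachable_formats("auditory")
print(sorted(fmt.value for fmt in shared))  # ['sequence', 'spatial']
```

Under these assumptions, a cross-modal dual task is a cross-format dual task only if each modality is routed through its default formats, which is the interpretive difficulty noted above.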

Prefrontal control over sources of attention

Though sources of attention in FEF and IPS (midlevel attention) implement peripheral biases, these sources of attention are themselves controlled by prefrontal brain mechanisms that set high-level task goals—that is, cognitive control. Various brain networks, mainly localized in prefrontal cortex, have been proposed to control FEF and IPS in this manner (e.g., Dosenbach, Fair, Cohen, Schlaggar, & Petersen, 2008; Higo, Mars, Boorman, Buch, & Rushworth, 2011; Sestieri, Corbetta, Spadone, Romani, & Shulman, 2014). These networks, and the lateral and medial prefrontal cortex in particular, have been proposed to be organized along a continuum of task or information abstraction (Badre, 2008; Fuster, 2001; Hazy, Frank, & O’Reilly, 2007; Koechlin, Ody, & Kouneiher, 2003; Nee, Jahn, & Brown, 2014; Zarr & Brown, 2016). It is important to note that not all accounts accord with this view: Others have proposed that prefrontal, frontal, and parietal brain regions constitute a single multiple-demand network for flexible cognition, whose component regions are difficult to functionally dissociate, at least using neuroimaging (Duncan, 2010; Erez & Duncan, 2015; Woolgar et al., 2011), and which shows little evidence of a gradient of abstraction (Crittenden & Duncan, 2014). Furthermore, other accounts have proposed a dichotomy between different regions/networks for task set control versus momentary adaptive control, instead of a continuum (e.g., Dosenbach et al., 2008). Regardless of whether prefrontal cortex is organized along a continuum of abstraction, it is generally agreed to be the locus of task control, where goals and high-level task rules are represented, coordinated, and combined (Cole et al., 2010; Cole et al., 2011; Cole et al., 2016; Montojo & Courtney, 2008), a role that distinguishes prefrontal cortex from midlevel regions such as FEF and IPS.

Related models: Multipartite working memory and threaded cognition

Our proposed architecture of attention shares much with aspects of two previous models: Baddeley’s multipartite working memory model (e.g., Baddeley, 1986) and the threaded cognition model of Salvucci and Taatgen (2008). The multipartite working memory model proposes two (or more, in some later versions of the model) peripheral stores for working memory—a “visuospatial sketchpad” and a “phonological loop”—controlled by a “central executive.” As in the present model, distinct representational formats (visuospatial and phonological) can represent information independently of one another. However, more complex operations require the central executive, placing a common capacity limit on the “working” part of “working memory,” regardless of the format of the information being processed. Unlike our model, Baddeley’s multipartite working memory model is restricted to the domain of working memory and posits only two levels, though the central executive is proposed to play roles beyond those typically associated with memory, such as coordinating dual-task performance (e.g., Baddeley, 1986; Baddeley, Logie, Bressi, Della Sala, & Spinnler, 1986).

The threaded cognition model (Salvucci & Taatgen, 2008) is a production model in the ACT-R framework (Anderson et al., 2004); thus, unlike the other models discussed in this article, it can make quantitative predictions. Threaded cognition posits a series of cognitive modules (vision, audition, etc.) and two special modules: a declarative memory that guides behavior when first learning a task, and a procedural resource that plays a role very similar to cognitive control, in that it coordinates the use of other resources. All modules can run in parallel with one another, but when multiple threads (tasks) demand the same module (a frequent occurrence with the procedural resource), performance bottlenecks occur, because each module processes information serially. This is similar to our proposal, but with a few important differences: Threaded cognition is focused on multitasking and related paradigms (e.g., the attentional blink), rather than on attention more broadly; it makes no proposals as to the neural loci of its modules, beyond the very general proposals associated with ACT-R; it does not propose an exhaustive list of modules at more peripheral levels; and it proposes a system with only two levels, with the internal structure of modules being largely unspecified.
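The bottleneck logic shared by threaded cognition and our proposal can be illustrated with a toy scheduler. This is neither ACT-R nor the Salvucci and Taatgen (2008) implementation; it assumes only that each module serves one step at a time (serial within a module), that different modules run concurrently (parallel across modules), and that each thread is a fixed sequence of (module, duration) steps. The function `run_threads`, the module names `manual` and `vocal`, and all durations are hypothetical.

```python
def run_threads(threads):
    """Toy multithreading scheduler (illustrative assumption, not ACT-R).

    threads: dict mapping thread name -> list of (module, duration) steps.
    Each module processes one step at a time; different modules run in
    parallel. Returns the completion time of each thread.
    """
    order = list(threads)
    module_free = {}                           # module -> time it becomes free
    thread_ready = {name: 0.0 for name in order}
    step_index = {name: 0 for name in order}

    def earliest_start(name):
        module, _ = threads[name][step_index[name]]
        return max(thread_ready[name], module_free.get(module, 0.0))

    active = [name for name in order if threads[name]]
    while active:
        # Greedily commit the pending step that can start soonest
        # (ties go to the thread listed first).
        name = min(active, key=lambda n: (earliest_start(n), order.index(n)))
        module, duration = threads[name][step_index[name]]
        end = earliest_start(name) + duration
        module_free[module] = end
        thread_ready[name] = end
        step_index[name] += 1
        if step_index[name] == len(threads[name]):
            active.remove(name)
    return thread_ready


# Two tasks with separate perceptual and motor modules but a shared
# procedural resource (durations in arbitrary units, hypothetical).
task_a = [("vision", 100), ("procedural", 50), ("manual", 80)]
task_b = [("audition", 100), ("procedural", 50), ("vocal", 80)]

alone = run_threads({"B": task_b})
together = run_threads({"A": task_a, "B": task_b})
print(alone["B"], together["A"], together["B"])  # 230.0 230.0 280.0
```

In this example, Task B is delayed by exactly the duration of Task A's procedural step (50 units), even though its perceptual and motor modules never overlap with Task A's; this is the kind of bottleneck that threaded cognition attributes to the procedural resource.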

Summary

Many links between central and peripheral attention have been proposed. These include proposals about the locus of attentional selection (e.g., early vs. late selection), the fractionation of attention into sources and targets of control, descriptions of the control of sources of attention by prefrontal cortex, and the proposal that control itself is organized along a continuum of abstraction. None of these linkages has fully explained the architecture of attention, but they all contribute important insights. Perhaps most importantly, all of these proposals allow for multiple levels of attention. We propose that previous accounts can be roughly combined to yield the three levels we discuss here—central attention (goal and rule processes and representations, cognitive control; embodied in prefrontal cortex), midlevel sources of attention (translating central attentional goals into sustained biasing signals exerted on peripheral targets of attention; embodied in FEF and IPS), and peripheral targets of attention (representations in particular formats; embodied throughout sensory cortex). Each of these levels might be fractionated further with additional research, though here we make only the specific proposal that the most peripheral level of attention may be subdivided into representational formats and sensory systems, with specific preferential relationships between formats and sensory systems. In the following section, we review the evidence supporting the proposed framework, with a special emphasis on the parallel versus serial nature of attention at each level of the hierarchy.

Central attention is serial; midlevel and peripheral attention are parallel

Here we propose a fundamental difference in the information-processing capacities of central versus midlevel and peripheral attention: Central attention is serial in nature, whereas midlevel and peripheral attention are parallel across representational systems (Fig. 1). Central attention is already widely thought to be serial (Han & Marois, 2013b; Liu & Becker, 2013; Pashler, 1994; Zylberberg, Fernandez Slezak, Roelfsema, Dehaene, & Sigman, 2010), or at least very limited in its capacity (e.g., Tombu & Jolicœur, 2003), though some have argued that this is for strategic rather than structural reasons (Meyer & Kieras, 1997; Schumacher et al., 2001). However, the proposal that midlevel and peripheral attention are parallel is not well established and therefore requires further elaboration.

Midlevel and peripheral attention are parallel

Biasing signals exerted over sensory cortex originate in sources of control (FEF, IPS); for example, it is well known that the spatial locus of attention may be observed in a series of spatiotopic maps in the IPS and near FEF (Silver & Kastner, 2009). These midlevel biasing signals are temporally sustained in nature (Esterman et al., 2015; Kastner, Pinsk, De Weerd, Desimone, & Ungerleider, 1999; Offen, Gardner, Schluppeck, & Heeger, 2010; Tamber-Rosenau, Asplund, & Marois, Manuscript in revision), because their sustained action is what continually biases peripheral targets of attention (Yantis, 2008). Thus, if attentional biases can be exerted simultaneously and independently on multiple peripheral representations, then it follows that midlevel sources of attention must also be parallel in nature. In particular, we suggest that this is a limited parallelism, based not on a completely flexible capacity, but on a series of distinct biasing signals, one per peripheral representational format. Each representational format, then, receives its own biasing signal, and thus has a locus of attention within it. We propose that each peripheral locus of attention, instantiated by sustained biasing signals from midlevel attention, is subject to little or no interference from attention in other representational formats. This proposal stands alongside our proposal (above) that central attention is serial. Thus, two tasks that rely on distinct representational formats may still interfere with one another if both also demand central attention. But if both tasks can be completed without simultaneous demands on central attention, we do not predict interference.
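The predictions of this paragraph can be summarized as a simple decision rule. The sketch below is a qualitative caricature under two stated assumptions: that each representational format supports a single locus of attention, so two tasks sharing a format compete for it, and that central demands overlap in time whenever both tasks make them (the proposal itself predicts interference only for simultaneous central demands). The `Task` class, its fields, and the example task labels are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Task:
    name: str
    formats: frozenset   # peripheral representational formats the task relies on
    needs_central: bool  # whether the task demands central attention


def predicts_interference(a: Task, b: Task) -> bool:
    """Qualitative prediction of the proposed hierarchy (a sketch).

    Midlevel/peripheral attention is parallel across formats, so tasks
    on disjoint formats coexist unless both also need serial central
    attention; tasks sharing a format compete for that format's single
    locus of attention (an assumption of this sketch).
    """
    shares_format = bool(a.formats & b.formats)
    both_central = a.needs_central and b.needs_central
    return shares_format or both_central


visual_detect = Task("visual detection", frozenset({"spatial"}), False)
tone_detect = Task("tone detection", frozenset({"auditory object-feature"}), False)
visual_discrim = Task("visual discrimination", frozenset({"spatial"}), True)
tone_discrim = Task("tone discrimination", frozenset({"auditory object-feature"}), True)

print(predicts_interference(visual_detect, tone_detect))    # False
print(predicts_interference(visual_discrim, tone_discrim))  # True
```

The rule deliberately ignores timing and graded costs; it only encodes which pairings the account expects to conflict.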

Evidence adjudicating these proposals has come from four lines of research, discussed in the following four subsections. The first line of evidence considers the general neural architecture that implements attention, whereas the remaining three are primarily concerned with the consequences of dual-task interference under varying circumstances. Of the latter three lines of evidence, one examines interference in dual-task experiments with tasks that rely on only peripheral and midlevel attention, relative to tasks that also require central attention. A second examines the neural correlates of dividing attention across representational formats. The third examines interference between the maintenance of information in short-term or working memory representations and either the maintenance of other working memory representations or more traditional attention tasks, such as visual search.

Neural architecture of attention

A general principle of the proposed linkages between central and peripheral attention—most strongly exemplified in the dichotomy between sources and targets of attention (e.g., Yantis, 2008) on the one hand and the control of sources of attention by prefrontal networks (e.g., Dosenbach et al., 2008) on the other hand—is that “higher,” more abstract processes and representations set goals that are implemented by “lower,” more concrete processes and representations. For instance, Dosenbach et al. (2008) distinguished frontoparietal and cingulo-opercular networks, arguing that the frontoparietal network (including IPS, medial superior parietal lobule/superior precuneus, inferior parietal lobule, lateral frontal cortex near the inferior frontal junction, dorsolateral prefrontal cortex, and middle cingulate cortex) is involved in adjusting attentional configurations at single-trial timescales. This is consistent with the view (see above) that these frontoparietal regions are sources of attentional control that provide biasing signals to sensory cortex, performing what Dosenbach and colleagues term adaptive control. On the other hand, the cingulo-opercular network (anterior prefrontal cortex/frontopolar cortex, anterior insula/frontal operculum, dorsal anterior cingulate cortex/medial superior frontal cortex, and thalamus) is proposed to perform set maintenance, or high-level, longer-term (e.g., block-level) goal setting (Dosenbach et al., 2008; Sestieri et al., 2014)—that is, control over the frontoparietal network that provides biasing signals to sensory cortex. Thus, the cingulo-opercular network controls the frontoparietal network; that is, it is a more central locus of attentional control. In terms of our model, the cingulo-opercular network of Dosenbach et al. is related to central attention, and their frontoparietal network is related to midlevel attention.
Dosenbach’s model does not explicitly incorporate a network for what we consider to be peripheral attention. In general, previous accounts have not combined the full hierarchy that we here propose (Fig. 1) in a single model. However, considering all three levels together with recent empirical evidence from neuroimaging can lead to additional insights, as we describe below.

We identify central attention with task control and other high-level central attentional mechanisms located in prefrontal cortex for three primary reasons: First, lateral prefrontal cortex is recruited across a wide range of central attention tasks (Marois & Ivanoff, 2005; Marti et al., 2012; Tombu et al., 2011); is thought to control task rules (Cole et al., 2010; Cole et al., 2011; Cole et al., 2016; Montojo & Courtney, 2008); and is the region of convergence for stimulus-driven and goal-driven attention (Asplund, Todd, Snyder, & Marois, 2010), whose conflicting influences must be resolved for successful task execution. Second, regions of the prefrontal cortex—including anterior cingulate, frontal operculum, and anterior insula—are activated across a very wide variety of cognitive tasks (Duncan, 2010), consistent with central attention being required for any nonautomatic task, and seem to change control settings at task-level, rather than trial-level, intervals (Dosenbach et al., 2008). Third, subsets of the lateral prefrontal cortex and anterior insula are invariant to perceptual modality and to representational format (i.e., location vs. shape; Tamber-Rosenau, Dux, Tombu, Asplund, & Marois, 2013; Tamber-Rosenau, Newton, & Marois, Manuscript in revision), consistent with highly abstract processes or representations being involved in central attention. The latter results are especially suggestive of the serial nature of central attention, because if a representation does not contain sensory information, but instead represents only abstract, task-relevant information, one would predict interference or cross-talk between simultaneous representations (Huestegge & Koch, 2009; Navon & Miller, 1987), consistent with the need for serial processing.

As for the “middle” level of control—sources of attention—FEF and IPS are both thought to contain attentional priority maps whose signals bias competition in sensory brain regions (Bisley & Goldberg, 2010; Silver & Kastner, 2009; Thompson & Bichot, 2005; Yantis, 2008). The effect of attention on these sensory regions that embody peripheral representations in various formats has been demonstrated repeatedly (see above). Although the most peripheral level is generally assumed to be modality-specific, this has not always been the case for the middle level of attention—the sources of attention in FEF and IPS. Because FEF and IPS are involved in attention across perceptual modalities and features (Greenberg, Esterman, Wilson, Serences, & Yantis, 2010; Sathian et al., 2011; Shomstein & Yantis, 2004, 2006), one might surmise that they are general sources of attention that, like central attention mechanisms, might be serial in nature, with a single focus of attention represented at middle levels at any one time. However, contrary to such a serial account of middle levels of attention, humans can simultaneously maintain attentional deployments over multiple representations (e.g., visual and mnemonic; Tamber-Rosenau et al., 2011) or features (e.g., location and color; Greenberg et al., 2010), suggesting that a limited-capacity parallel mechanism is at work. Furthermore, we recently demonstrated that FEF and IPS contain distinct neural populations for distinct perceptual modalities (Tamber-Rosenau et al., 2013) and representational systems (Tamber-Rosenau et al., 2011). Finally, IPS contains a number of specialized regions, consistent with multiple signals rather than a unified representation or process (Culham & Kanwisher, 2001).
These findings are more consistent with separate biasing signals originating at middle levels of attention, something that could allow the maintenance of distinct foci of attention for different representational formats (especially given the preferential relationships between modalities and representational formats; see above). According to such an account, behavioral interference should be observed across representational formats only when the processing of information requires central attention. The remaining three lines of evidence reviewed below primarily examine this possibility.
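The qualitative prediction in the preceding paragraph can be stated as a simple decision rule. The following Python sketch is a hypothetical illustration only, not a model taken from the studies cited: the format labels follow Fig. 1, while the `Task` class, its fields, and `predicts_interference` are names introduced here. The rule deliberately ignores graded effects such as partial overlap between formats, practice, or capacity limits within a single format.

```python
from dataclasses import dataclass

# Hypothetical labels for the representational formats of Fig. 1.
FORMATS = {"spatial", "sequence", "visual_object_feature", "auditory_object_feature"}

@dataclass(frozen=True)
class Task:
    name: str
    fmt: str             # representational format the task's attentional set relies on
    needs_central: bool  # True if the task demands central attention (e.g., response selection)

    def __post_init__(self) -> None:
        if self.fmt not in FORMATS:
            raise ValueError(f"unknown representational format: {self.fmt}")

def predicts_interference(a: Task, b: Task) -> bool:
    """Qualitative prediction of the framework for two concurrent tasks."""
    same_format = a.fmt == b.fmt                             # compete for one format-specific resource
    central_conflict = a.needs_central and b.needs_central   # central attention is serial
    return same_format or central_conflict

# Cases loosely modeled on studies reviewed below (labels are a simplification of our own).
vis_detect = Task("visual brightness detection", "visual_object_feature", False)
aud_detect = Task("auditory volume detection", "auditory_object_feature", False)
vis_discrim = Task("visual brightness discrimination", "visual_object_feature", True)
aud_discrim = Task("auditory volume discrimination", "auditory_object_feature", True)
search = Task("visual search", "spatial", False)
spatial_wm = Task("spatial working memory", "spatial", False)

print(predicts_interference(vis_detect, aud_detect))    # False: distinct formats, no central demand
print(predicts_interference(vis_discrim, aud_discrim))  # True: both need central attention
print(predicts_interference(search, spatial_wm))        # True: same (spatial) format
```

The rule makes explicit that, under this account, cross-format interference is expected only through the central term, which is why the detection/discrimination contrast discussed below is diagnostic.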

Studies of perceptual sensitivity in dual-tasking across representational formats

It is widely accepted that detecting a stimulus is less demanding than discriminating among multiple alternative stimuli.Footnote 4 Mere detection has been proposed to rely on very peripheral processes (Pylyshyn, 1989; Treisman & Gelade, 1980; Trick & Pylyshyn, 1993; Wolfe, 1994). Discrimination between alternatives, by contrast (that is, response selection, and possibly late stages of perceptual categorization that are intimately related to response selection; Johnston & McCann, 2006; Zylberberg, Ouellette, Sigman, & Roelfsema, 2012), is one of the chief proposed roles of central attention, as we outlined above. Thus, if we can assume that detection tasks load mostly on peripheral and midlevel attention (and therefore on representational-format-specific resources), whereas discrimination tasks also load on central attention, then comparing the interference between two detection tasks with the interference between two discrimination tasks, when the two tasks in each pair rely on distinct representational formats, provides a test of our proposal that peripheral and midlevel (but not central) attentional deployments may be sustained in parallel. Accordingly, we focus our discussion on studies in which the two tasks relied on distinct representational formats. Most often, this is accomplished by presenting the tasks in distinct perceptual modalities as well, though we suggest that it is at least theoretically possible to accomplish this by using two tasks in the same perceptual modality that rely on distinct representational formats. It is also important to reiterate that presenting tasks in distinct modalities does not automatically entail the use of distinct representational formats (Fougnie et al., 2015).

One cross-modal and cross-format dual-task experiment was conducted by Bonnel and Hafter (1998). They measured behavioral sensitivity for detection of the presence of a stimulus and for discrimination between two alternative stimuli when the stimuli were presented in distinct perceptual modalities (audition and vision). The visual stimuli were brightness modulations of a pedestal (which we classify as belonging to a visual object-feature representational format), and the auditory stimuli were volume modulations of a pedestal (which we classify as belonging to an auditory object-feature representational format). The key result was that sensitivity for the detection of either stimulus was not affected by the need to detect stimuli in the other modality/format as well, but that sensitivity to discriminate between two alternatives in each modality/format (increased vs. decreased brightness/volume) was reduced by the need to discriminate in both modalities. These data are consistent with interference at central (discrimination), but not peripheral or midlevel, attention, because the key difference between the detection and discrimination tasks is the increased need for central attention—here, response selection—during discrimination. These results have been partially replicated by de Jong, Toffanin, and Harbers (2010), who observed small cross-modal/format interference effects for detection, but much larger cross-modal/format interference for discrimination. In addition, de Jong et al., using steady-state sensory-evoked potentials, observed cross-modal modulatory attentional effects that were similar for detection and discrimination, but only for auditory attention effects on visual cortex. Thus, de Jong et al.’s electrophysiological results do not clearly endorse either independent or interfering effects of dividing attention across modalities for detection tasks.
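The sensitivity measures in studies like these are typically expressed in signal-detection terms. As a minimal sketch, assuming a yes/no design in which sensitivity is d' = z(hit rate) minus z(false-alarm rate), a dual-task cost is simply the drop in d' from single- to dual-task conditions. The function names and the hit and false-alarm rates below are hypothetical and are not data from Bonnel and Hafter (1998) or de Jong et al. (2010); forced-choice designs call for a modified formula.

```python
from statistics import NormalDist

def d_prime(hit_rate: float, fa_rate: float, eps: float = 1e-3) -> float:
    """Yes/no sensitivity: d' = z(H) - z(FA)."""
    # Rates of exactly 0 or 1 give infinite z-scores; clipping is one simple correction.
    z = NormalDist().inv_cdf
    h = min(max(hit_rate, eps), 1 - eps)
    f = min(max(fa_rate, eps), 1 - eps)
    return z(h) - z(f)

def dual_task_cost(single: tuple[float, float], dual: tuple[float, float]) -> float:
    """Drop in d' from single- to dual-task conditions; positive values indicate interference."""
    return d_prime(*single) - d_prime(*dual)

# Hypothetical (hit rate, false-alarm rate) pairs, not data from any study.
detect_cost = dual_task_cost(single=(0.84, 0.16), dual=(0.84, 0.16))
discrim_cost = dual_task_cost(single=(0.84, 0.16), dual=(0.75, 0.25))
print(f"detection cost: {detect_cost:.2f}, discrimination cost: {discrim_cost:.2f}")
```

A pattern like this hypothetical one, with no cross-format cost for detection but a positive cost for discrimination, is the pattern the serial-central, parallel-noncentral account predicts.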

Another informative study was conducted by Alais, Morrone, and Burr (2006). In this study, participants performed one of two primary tasks—a visual primary task in which participants decided which of two gratings had higher contrast, or an auditory primary task in which participants discriminated which of two tones had higher pitch. The primary visual and primary auditory tasks were always performed separately, but each could be performed alone or accompanied by either a visual or an auditory secondary task. The secondary visual task was to determine whether there was an oddball luminance in an array of briefly presented dots, and the secondary auditory task was to discriminate a major from a minor chord. For each modality, we would classify both the primary and secondary tasks as relying on object features (brightness and contrast for vision, pitch for audition); thus, because we propose distinct visual object-feature and auditory object-feature representational formats (Fig. 1), the within-modality dual-task trials also divided attention within a representational format, whereas the cross-modality dual-task trials did not. Alais et al. observed that participants were similarly sensitive for single- and dual-task performance, except when they performed two tasks that relied on the same perceptual modality and—we would propose—representational format. When participants performed within-modality/format dual-tasking, they were far less sensitive than under either the single-task or cross-modal/format dual-task conditions. One account of these results is that participants could maintain attention within two representational formats at the same time (auditory object features plus visual object features), but could not maintain two distinct attentional deployments within a single modality/format. 
However, the interpretation of these results is complicated by the nature of the tasks: Both primary tasks were discriminations, which are thought to rely on central attention (see above), yet cross-modal dual-tasking produced little or no cost. One likely explanation is that the tasks became automatized with practice (Schneider et al., 1984), because participants practiced for several days, until single-task performance was asymptotic. Furthermore, because each participant took part in six to ten sessions of the experiment, other central attentional processes, such as dual-task coordination and switching, may also have become faster or less demanding (Dux et al., 2009; Schumacher et al., 2001). Whatever the reason for the minimal central attentional interference in this study, significant dual-task costs emerged for within-modality/format, but not between-modality/format, dual-task trials, consistent with parallel midlevel and peripheral attention.

More recently, Arrighi, Lunardi, and Burr (2011) used a multiple-object tracking (MOT) paradigm to argue for distinct attentional resources for vision and audition. Participants performed MOT concurrently with an oddball task in which they reported which of three serially presented visual gratings, each covering the entire display screen, was a luminance oddball relative to the other two, or which of three serially presented tones was a tone oddball relative to the other two. Similar to Alais et al. (2006), Arrighi et al. observed dual-task sensitivity reductions only for same-modality dual-tasking, not for cross-modal divided attention. One account of these data is that the MOT primary task relied on the spatial representational format, whereas the visual and auditory secondary tasks relied on visual and auditory object-feature representational formats, respectively. If this were the whole story, however, our framework would not predict the observed difference in MOT sensitivity between the visual and auditory secondary tasks. One possibility is that the visual secondary task, unlike the auditory one, led to a spatial “zooming out” of attention, which is slower, and thus presumably more demanding, than other spatial shifts of attention (Stoffer, 1993), producing interference at the level of the spatial representational format. Furthermore, because the MOT displays could have masked the three sequential gratings presented on each trial, the visual–visual dual task may have required frequent task switches between MOT and the grating judgment, so that each grating could be evaluated at the moment it was displayed. Thus, the visual–visual dual task may have placed significant demands on central attention (task switching). Because there was no auditory mask, and because echoic memory is relatively long-lasting, participants may have been able to defer auditory judgments until after MOT, reducing interference at the level of central attention in the visual–auditory dual task.
Thus, the results of Arrighi et al. neither clearly support nor contradict our hypothesis.

Arrighi et al. (2011), Alais et al. (2006), and Bonnel and Hafter (1998) all reported changes in perceptual sensitivity as a function of task conditions, and their results were generally consistent with the idea of serial central attention but parallel peripheral and midlevel attention. However, perceptual sensitivity may not be the optimal measure for assessing the ability to divide attention between modalities, because participants may have attended to the modalities serially rather than simultaneously, relying on iconic or echoic sensory memory to perform the tasks and/or on a strategy of rapidly switching attention between items instead of truly dividing attention. Thus, these paradigms might be less sensitive to dual-task costs than alternative paradigms, especially if one task is prioritized and the secondary task relies on sensory memory and/or rapid attentional switching. This concern could be addressed by studies designed to reveal differences in reaction times rather than in perceptual sensitivity: Because shifting attention takes measurable time, reaction times should be longer under serial switching than under simultaneous attention.Footnote 5 Unfortunately, we know of no studies that have clearly compared the reaction time costs (rather than the sensitivity costs) of dual-tasking for detection and discrimination tasks relying on distinct representational formats; it will be important to conduct such studies in the future.
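The logic of this reaction time argument can be made concrete with a toy model. The sketch below assumes, hypothetically, that a serial observer keeps attention on one format and must shift it whenever the target appears in the other format, paying a fixed shift cost, whereas a truly parallel observer pays nothing. The base reaction time, noise level, shift cost, and target probability are illustrative values only (the shift cost is simply chosen within the range cited in Footnote 5), and the function names are our own.

```python
import random
from statistics import mean

def expected_rt(base_ms: float, shift_ms: float, p_unattended: float, serial: bool) -> float:
    """Expected mean RT: a serial observer pays shift_ms on the fraction of trials
    in which the target appears in the currently unattended format."""
    return base_ms + (p_unattended * shift_ms if serial else 0.0)

def simulate_rts(n_trials: int, base_ms: float, shift_ms: float, p_unattended: float,
                 serial: bool, noise_sd: float = 40.0, seed: int = 0) -> list[float]:
    """Draw hypothetical single-trial RTs under the serial or the parallel account."""
    rng = random.Random(seed)
    rts = []
    for _ in range(n_trials):
        rt = rng.gauss(base_ms, noise_sd)       # trial-to-trial variability
        if serial and rng.random() < p_unattended:
            rt += shift_ms                      # attention must first be shifted to the target's format
        rts.append(rt)
    return rts

# Hypothetical parameters: 450 ms base RT, 100 ms shift cost, targets split evenly across formats.
parallel = simulate_rts(20_000, 450, 100, 0.5, serial=False)
serial = simulate_rts(20_000, 450, 100, 0.5, serial=True, seed=1)
simulated_cost = mean(serial) - mean(parallel)
predicted_cost = expected_rt(450, 100, 0.5, True) - expected_rt(450, 100, 0.5, False)
print(round(simulated_cost, 1), predicted_cost)  # the simulated cost approximates the expected one
```

In an unspeeded paradigm that records only accuracy, this extra time need not surface as a sensitivity cost, which is why speeded versions of these designs would be more diagnostic.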

Studies of the neural consequences of cross-representational-format attention

A number of studies have used electrophysiological or neuroimaging methods to assess the mechanisms of dividing attention across multiple peripheral representations, generally the sensory modalities of vision and audition, confounded with what we propose to be their distinct object-feature representational formats. These studies have been most consistent with the view that noncentral mechanisms of attention for distinct representational formats do not interfere with one another, though because most of them did not distinguish between modalities and representational formats, they are equally consistent with the proposal that noncentral mechanisms of attention for distinct modalities do not interfere with one another. We have already referred to one such study, de Jong et al. (2010), whose cross-modal/cross-representational-format neural effects of peripheral attentional deployments to vision and audition were asymmetric, making them somewhat difficult to interpret. In another study (Talsma, Doty, Strowd, & Woldorff, 2006), steady-state visual evoked potentials (SSVEPs) were evoked by a continuous visual stream of letters and used to index attention to the visual modality. SSVEPs were larger when participants attended to auditory events than when they attended either to visual events or to audiovisual events (in both cases, events separate from the SSVEP-evoking stream). The authors interpreted these results as evidence against a shared central attentional limit: SSVEPs were not diminished during auditory attention, suggesting that auditory attention does not interfere with visual attention and that total attentional capacity is larger when attention is divided across modalities than when it is divided within a single modality (assessed by comparing SSVEPs during attention to the visual target events with those during attention to the SSVEP-evoking stream itself, which was distinct from the visual target events).
All tasks in this experiment consisted of detection, not discrimination, so our model would not predict a demand on central attention. Furthermore, the audiovisual events consisted of simultaneous visual and auditory targets, making participants’ strategies ambiguous.Footnote 6 Finally, it should be noted that the targets were defined as visual or auditory intensity reductions; that is, according to our framework, they depended on distinct representational formats (visual or auditory object features). Thus, modality and representational format cannot be distinguished in these data.

Another line of research investigating cross-format/cross-modal divided attention has instead been based on fMRI. Such studies have shown common frontal and parietal brain regions activating for shifts of attention between and within modalities (Shomstein & Yantis, 2004, 2006) and for shifts of attention within spatial and nonspatial representational formats (Shomstein & Yantis, 2006), consistent with a role for central attention in overall task control. However, this work (Shomstein & Yantis, 2004) examined only shifts of attention between modalities rather than divided (simultaneous) attention across modalities, so it cannot speak to our hypothesis that midlevel and peripheral attention are parallel across representational formats. Another fMRI study demonstrated that attending to a single modality increased activity in sensory cortex for that modality and decreased activity in sensory cortex for a competing modality (Johnson & Zatorre, 2005). The results of Johnson and Zatorre (2005) might be interpreted as conflicting with those of Talsma et al. (2006), but an alternative interpretation is that because Johnson and Zatorre (2005) never required divided attention between modalities, the task demands incentivized participants to devote all possible resources to a single modality. However, in a follow-up study, Johnson and Zatorre (2006) included a divided-attention condition. They found no increase in activity in sensory cortices during divided attention, instead observing frontal activation (consistent with increased central attention needed to instantiate multiple peripheral attentional sets). Nevertheless, Johnson and Zatorre (2006) did observe individual differences, such that the best-performing individuals exhibited greater activation in sensory cortices during cross-modal divided attention, relative to a passive baseline. Both of Johnson and Zatorre’s studies (2005, 2006) used a task in which participants memorized stimuli for a subsequent memory test.
The stimuli used distinct representational formats for the two modalities: For audition, they relied on 7-s tonal melodies, that is, on the sequence representational format. For vision, they relied on 14-sided shapes; though the line segments comprising the shapes were gradually added to the image over 7 s, the task was presented in terms of matching the final shapes, and there was no evidence that participants attended to, encoded, or recalled the sequence of line segment presentations. Thus, the most plausible interpretation is that participants attended to and memorized the visual shapes, relying on the object-feature representational format. In sum, neural evidence offers at least some support for the hypothesis that multiple peripheral attentional deployments in distinct representational formats may be maintained simultaneously without interference.

Studies of working memory interference

Another useful line of research for assessing the hypothesis that multiple midlevel and peripheral attentional deployments with distinct representational formats may be maintained simultaneously without interference has examined interference between the maintenance of information in working memory and either perceptual (e.g., visual search) or other working memory tasks. The relevance of these studies hinges on the perspectives that (1) peripheral/midlevel attention can be deployed over working memory representations as well as over immediate sensory representations (Garavan, 1998; Griffin & Nobre, 2003; Tamber-Rosenau et al., 2011), and (2) working memory maintenance entails the deployment of selective attention to internal representations of stimuli, to maintain them after the stimuli themselves are no longer present (e.g., Awh & Jonides, 2001).

Under these suppositions, successful maintenance of working memory in one representational format would be predicted to suffer if the same representational format were called upon for another aspect of a task, but not if a different representational format were demanded instead. In early experiments that shed light on this prediction, Baddeley reported that an imagery task was disrupted by auditory spatial tracking, but not by nonspatial visual brightness judgments (briefly reported in Baddeley & Hitch, 1977); this is consistent with a distinction between visual object-feature (brightness) and spatial (auditory tracking, spatial imagery) representational formats. Similarly, verbal working memory and verbal reasoning are disrupted by articulatory suppression or concurrent verbal memory load (e.g., Baddeley, 1986; Baddeley & Hitch, 1974), presumably because both draw on the same representational format (likely primarily the sequence representational format). It should be noted that verbal tasks such as these have the advantage of ecological validity (humans use language constantly), but they also combine the sequence and auditory object-feature representational formats with long-term semantic memory, making it more difficult to use them to support or refute our proposals. Nevertheless, the relationship between attention and linguistic representation is an important topic for ongoing research.

In more recent work, participants performed a spatial visual search task while maintaining a visual object-feature working memory load (Woodman et al., 2001). In this study, visual search was not impaired by the visual object-feature working memory load. In a follow-up study (Woodman & Luck, 2004), participants performed visual search (spatial representational format) while maintaining spatial information in working memory. When both tasks placed demands on the spatial representational format, performance suffered. The selective nature of this interference (between spatial attention and spatial memory, but not between spatial attention and object memory) lends support to the view that multiple attentional deployments can be maintained, so long as they are within distinct representational formats.

Other studies have searched for interference between two working memory loads in distinct modalities or representational formats. For instance, Saults and Cowan (2007) required participants to maintain visual and auditory information simultaneously (with each modality relying on a combination of spatial and object-feature information in all but one experiment, complicating the assignment of modality to representational format) and found that the two kinds of information were subject to a common capacity limit. Much of this interference was at the level of central attention, not representational-format-specific peripheral or midlevel attention, because (1) interference was minimal when stimuli were not masked—that is, when the peripheral representations were maximally available—and (2) both tasks relied on simultaneous or near-simultaneous working memory encoding, which demands central attention (Jolicœur & Dell’Acqua, 1999; Tombu et al., 2011). Saults and Cowan viewed this as evidence for a central working memory store, though we would suggest that the results do not distinguish between central storage and processing. At any rate, when tasks in a similar paradigm were chosen to depend on completely distinct storage formats (visual spatial vs. auditory object-feature formats) and longer delays were interposed between encoding of the two working memory loads, there no longer was interference (Fougnie et al., 2015). Instead, Fougnie et al. provided evidence supporting the view that multiple attentional loci may be maintained at middle and peripheral levels of the hierarchy, if we can rely on the view that noncentral attention is required for working memory maintenance. Specifically, Fougnie et al. used a Bayesian meta-analysis of their seven experiments to demonstrate almost ten times as much evidence against cross-format interference in working memory capacity as in favor of such interference.
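To unpack what “almost ten times as much evidence” means, the Bayes factor compares how well two hypotheses predict the observed data D, where here H₀ denotes no cross-format interference and H₁ denotes interference. The worked example below treats the reported ratio as approximately 10 purely for illustration and adds an assumption of our own, equal prior odds for the two hypotheses; it does not reproduce Fougnie et al.’s actual analysis.

```latex
\mathrm{BF}_{01} = \frac{p(D \mid H_0)}{p(D \mid H_1)} \approx 10,
\qquad
\frac{P(H_0 \mid D)}{P(H_1 \mid D)} = \mathrm{BF}_{01} \times \frac{P(H_0)}{P(H_1)}

% Assuming equal prior odds, P(H_0) / P(H_1) = 1:
P(H_0 \mid D) = \frac{\mathrm{BF}_{01}}{\mathrm{BF}_{01} + 1} \approx \frac{10}{11} \approx 0.91
```

Under that assumption, the data would shift belief to roughly 91% in favor of no cross-format interference; different prior odds would yield a different posterior, but the Bayes factor itself is unchanged.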

Taken together, the evidence from Woodman et al. (2001), Woodman and Luck (2004), Saults and Cowan (2007), and Fougnie et al. (2015) supports the view that working memory and attention are parallel across representational formats. However, these studies also demonstrated interference when central attention was demanded by two distinct tasks, or when two tasks relied on the same peripheral process or representation. The main weakness of these studies as evidence for our framework is that drawing conclusions about attention from them requires additional assumptions about the relationship of attention to working memory.

Summary

Despite the evidence reviewed above, we know of no experiment that has directly and unambiguously tested our proposal that midlevel and peripheral attentional deployments may be maintained in parallel, but central attention is serial and unitary. One set of evidence supporting this view hinges on the finding that at least some brain regions thought to embody central attention are invariant to perceptual modalities or formats of information representation (e.g., object shape vs. spatial location), whereas midlevel sources of attentional control contain distinct neural populations for biasing competition in different modalities and formats (Tamber-Rosenau et al., 2013; Tamber-Rosenau, Newton, & Marois, Manuscript in revision). More evidence adjudicating this view has come from dual-task experiments that measure perceptual accuracy or sensitivity, not reaction times (Alais et al., 2006; Arrighi et al., 2011; Bonnel & Hafter, 1998), though accuracy may be less sensitive than reaction times to attentional interference among peripheral systems. Additional evidence has come from studies assessing the neural effects of dividing attention (de Jong et al., 2010; Johnson & Zatorre, 2006; Talsma et al., 2006). A final set of evidence came from studies searching for interference between working memory representation and either working memory representation in another modality or representational format (Fougnie et al., 2015; Saults & Cowan, 2007) or more traditional attention tasks (e.g., visual search; Woodman & Luck, 2004; Woodman et al., 2001). Overall, these lines of research seem to indicate that peripheral and midlevel attention are parallel, and that they are organized into distinct representational formats or codes, not strictly along sensory modalities (Mishkin et al., 1983; Wickens, 1984, 2008; see also Farah, Hammond, Levine, & Calvanio, 1988). This is an important topic for future research, as we will outline in our conclusion.

Conclusion and proposed future research

Synthesizing prior models (e.g., Baddeley, 1986; Dosenbach et al., 2008; Marois & Ivanoff, 2005; Salvucci & Taatgen, 2008; Serences & Yantis, 2006; Yantis, 2008) and current empirical results (Asplund et al., 2010; Tamber-Rosenau, Asplund, & Marois, Manuscript in revision; Tamber-Rosenau et al., 2013; Tamber-Rosenau, Newton, & Marois, Manuscript in revision), we have proposed a hierarchy of attentional control: from central attentional/prefrontal control of task goals, through midlevel/FEF and IPS sources of attentional biasing signals, to peripheral sensory and working memory representations that are biased by midlevel inputs. We further propose that attention is parallel across both midlevel sources of control and peripheral perceptual and representational systems. Thus, we would expect little or no interference between attentional deployments in distinct representational formats, provided that central attention is not demanded. On the other hand, because central attention is general with regard to representational formats, it is not possible for more than one central-attention-demanding task to be performed at once. Though these claims are supported by previous research, as we have detailed in this article, the proposal that midlevel and peripheral attention in distinct representational formats does not suffer from cross-format interference has not been adequately tested. Existing results show that sensory sensitivity is diminished minimally or not at all by simultaneously attending to a second representational format, but alternative explanations for these results make them far less than definitive, as we reviewed above. Moreover, most of this work did not take representational format into account, which we propose is, in many cases, more important than the sensory modality in which information originates. Because this prior work was performed with other goals in mind, it would be unfair to place the burden of evaluating our predictions on it.
It is thus important that future experiments be conducted that are designed explicitly to test this proposal using sensitive reaction time measures in sensory attention. Focusing on reaction time measures in sensory attention would limit the interpretational complications associated with working memory, such as recoding information between representational formats and central interference due to encoding/gating into working memory. The proposed sensory attention experiments could be modeled after the working memory design of Fougnie et al. (2015)—searching for interference between spatial and object-feature-based attentional sets in distinct perceptual modalities—or experiments could search for interference between attentional sets in distinct representational formats within a single modality, to unconfound modalities and representational formats. In addition, further neuroimaging work should explicitly test the central/amodal, midlevel, and peripheral/representational format-specific model that we advance here. Resolving the mechanistic properties of the different levels of attention, and further elaborating the hierarchical nature of their relationship, would represent a significant leap forward in revealing the functional architecture of attention and in understanding the roots of its limitations.
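Before running such experiments, one can check which condition pairs actually discriminate between a modality-based and a format-based account of noncentral interference. The Python sketch below is hypothetical: it labels attentional sets by (modality, format), following the modality-specific object-feature and modality-general spatial formats of Fig. 1, and assumes that both tasks in a pair avoid central attention (e.g., detection tasks), so that only noncentral interference is at issue. The two model functions are simplified stand-ins for the accounts, not published models.

```python
from itertools import product

# Hypothetical attentional sets: (modality, representational format), following Fig. 1.
SETS = [
    ("vision", "spatial"),
    ("vision", "visual_object_feature"),
    ("audition", "spatial"),
    ("audition", "auditory_object_feature"),
]

def modality_model(a, b):
    """Noncentral interference iff both sets are in the same sensory modality."""
    return a[0] == b[0]

def format_model(a, b):
    """Noncentral interference iff both sets use the same representational format."""
    return a[1] == b[1]

def diagnostic_pairs(sets):
    """Pairs of distinct attentional sets on which the two accounts disagree."""
    pairs = []
    for a, b in product(sets, repeat=2):
        if a < b and modality_model(a, b) != format_model(a, b):  # a < b: each pair once
            pairs.append((a, b))
    return pairs

for a, b in diagnostic_pairs(SETS):
    print(a, "+", b, "-> format model predicts interference:", format_model(a, b))
```

Under these assumptions, three pairs are diagnostic: spatial plus object-feature attention within vision, the same combination within audition, and spatial attention in vision plus spatial attention in audition. The first two correspond to the within-modality designs suggested above for unconfounding modality and representational format.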

Notes

A third category, divided attention, has sometimes been discussed. However, we do not see divided attention as a distinct cognitive or biological system, but instead as a paradigm for studying attention that requires multiple deployments of selective attention in close temporal proximity (Schneider, Dumais, & Shiffrin, 1984). Later in this article, we will discuss a number of divided-attention results in order to make inferences about the parallel or serial nature of peripheral, midlevel, and central attention.

We propose distinct object-feature representational formats for each modality in part on logical grounds—outside of synesthesia, vision cannot have a pitch, audition cannot have a color, and neither can represent features from the chemical senses. However, we group multiple features within a modality (e.g., for vision: color, orientation, and form) into a single representational format on the basis of evidence that multiple nonspatial features are not processed independently under conditions of divided attention (e.g., Mordkoff & Yantis, 1993). Modality-specific object-feature representational formats, coupled with modality-general space and sequence representational formats, also fit the data reviewed later in this article better than do alternative proposals.

It is also possible to entertain a model with only a single, modality-general object-feature representational format, rather than a series of modality-specific object-feature representational formats. However, such a model is hard to reconcile with the experiments reviewed below that revealed no interference between peripheral attention for object features in distinct modalities (e.g., Bonnel & Hafter, 1998). Similarly, one could entertain a model in which interference occurs at the level of sensory modality only, instead of on representational formats. However, such a model is hard to reconcile with other data reviewed below, showing that interference is a property of representational format, not modality (e.g., Woodman & Luck, 2004; Woodman, Vogel, & Luck, 2001). Finally, other research has revealed interference between information in some representational formats regardless of modality, such as for spatial information (Fougnie et al., 2015, Exp. 8). Thus, we favor a model that takes into account sensory modality by proposing modality-specific object-feature representational formats, but modality-general space and sequence representational formats (Fig. 1), subject to nonobligatory modality preferences for space (vision) and sequence (audition) (Michalka et al., 2015).

Questions of difficulty and level of attention are closely related. We suggest that the difficulty of the tasks reviewed here is a very coarse measure of whether a task requires central attention or can be carried out with little or no need for central attention. When a task depends on central attention, we suggest that it is viewed as more difficult because it is resource-limited (Han & Marois, 2013a; Norman & Bobrow, 1975). Difficulty can also be increased by degrading the stimulus (“data-limited” difficulty), but the experiments we review have dealt primarily with resource, not data, limits. With respect to resource limits, we prefer to offer a mechanistic account of behavioral changes across task circumstances rather than rely on the more loosely defined notion of “difficulty.”

For example, estimates from visual search paradigms—which should underestimate the time to shift attention because of potential parallel processing, configural processing, or bottom-up attentional time savings—are often in the range of tens of milliseconds (e.g., Wolfe, 1998), and more direct estimates of the time to shift attention suggest larger values, up to about a quarter of a second or more (e.g., Carlson, Hogendoorn, & Verstraten, 2006).

The task could be completed on the basis of detecting either target, since the presence of a target in one modality perfectly predicted the presence of a target in the other modality on the audiovisual blocks of the task.

References

Alais, D., Morrone, C., & Burr, D. (2006). Separate attentional resources for vision and audition. Proceedings of the Royal Society B, 273, 1339–1345. doi:10.1098/rspb.2005.3420

Anderson, J. R., Bothell, D., Byrne, M. D., Douglass, S., Lebiere, C., & Qin, Y. (2004). An integrated theory of the mind. Psychological Review, 111, 1036–1060. doi:10.1037/0033-295X.111.4.1036

Arrighi, R., Lunardi, R., & Burr, D. (2011). Vision and audition do not share attentional resources in sustained tasks. Frontiers in Psychology, 2, 56. doi:10.3389/fpsyg.2011.00056

Asplund, C. L., Todd, J. J., Snyder, A. P., & Marois, R. (2010). A central role for the lateral prefrontal cortex in goal-directed and stimulus-driven attention. Nature Neuroscience, 13, 507–512. doi:10.1038/nn.2509

Awh, E., & Jonides, J. (2001). Overlapping mechanisms of attention and spatial working memory. Trends in Cognitive Sciences, 5, 119–126. doi:10.1016/S1364-6613(00)01593-X

Baddeley, A. (1986). Working memory. Oxford, UK: Clarendon Press.

Baddeley, A. (1998). Recent developments in working memory. Current Opinion in Neurobiology, 8, 234–238.

Baddeley, A., & Hitch, G. (1977). Commentary on “working memory.” In G. Bower (Ed.), Human memory: Basic processes (pp. 191–196). New York, NY: Academic Press.

Baddeley, A., & Hitch, G. J. (1974). Working memory. In G. H. Bower (Ed.), The psychology of learning and motivation (Vol. 8, pp. 47–89). New York, NY: Academic Press.

Baddeley, A., Logie, R., Bressi, S., Della Sala, S., & Spinnler, H. (1986). Dementia and working memory. Quarterly Journal of Experimental Psychology, 38A, 603–618. doi:10.1080/14640748608401616

Badre, D. (2008). Cognitive control, hierarchy, and the rostro-caudal organization of the frontal lobes. Trends in Cognitive Sciences, 12, 193–200. doi:10.1016/j.tics.2008.02.004

Bettencourt, K. C., & Xu, Y. (2016). Decoding the content of visual short-term memory under distraction in occipital and parietal areas. Nature Neuroscience, 19, 150–157. doi:10.1038/nn.4174

Bisley, J. W., & Goldberg, M. E. (2010). Attention, intention, and priority in the parietal lobe. Annual Review of Neuroscience, 33, 1–21. doi:10.1146/annurev-neuro-060909-152823

Bonnel, A.-M., & Hafter, E. R. (1998). Divided attention between simultaneous auditory and visual signals. Perception & Psychophysics, 60, 179–190.

Broadbent, D. E. (1958). Perception and communication. New York, NY: Pergamon Press.

Bunge, S. A., Klingberg, T., Jacobsen, R. B., & Gabrieli, J. D. (2000). A resource model of the neural basis of executive working memory. Proceedings of the National Academy of Sciences, 97, 3573–3578. doi:10.1073/pnas.050583797

Carlson, T. A., Hogendoorn, H., & Verstraten, F. A. (2006). The speed of visual attention: What time is it? Journal of Vision, 6, 1406–1411. doi:10.1167/6.12.6

Christophel, T. B., Hebart, M. N., & Haynes, J.-D. (2012). Decoding the contents of visual short-term memory from human visual and parietal cortex. Journal of Neuroscience, 32, 12983–12989. doi:10.1523/jneurosci.0184-12.2012

Chun, M. M., Golomb, J. D., & Turk-Browne, N. B. (2011). A taxonomy of external and internal attention. Annual Review of Psychology, 62, 73–101. doi:10.1146/annurev.psych.093008.100427

Cole, M. W., Bagic, A., Kass, R., & Schneider, W. (2010). Prefrontal dynamics underlying rapid instructed task learning reverse with practice. Journal of Neuroscience, 30, 14245–14254. doi:10.1523/jneurosci.1662-10.2010

Cole, M. W., Etzel, J. A., Zacks, J. M., Schneider, W., & Braver, T. S. (2011). Rapid transfer of abstract rules to novel contexts in human lateral prefrontal cortex. Frontiers in Human Neuroscience, 5, 142. doi:10.3389/fnhum.2011.00142

Cole, M. W., Ito, T., & Braver, T. S. (2016). The behavioral relevance of task information in human prefrontal cortex. Cerebral Cortex, 26, 2497–2505. doi:10.1093/cercor/bhv072

Crittenden, B. M., & Duncan, J. (2014). Task difficulty manipulation reveals multiple demand activity but no frontal lobe hierarchy. Cerebral Cortex, 24, 532–540. doi:10.1093/cercor/bhs333

Culham, J. C., & Kanwisher, N. G. (2001). Neuroimaging of cognitive functions in human parietal cortex. Current Opinion in Neurobiology, 11, 157–163.

de Jong, R., Toffanin, P., & Harbers, M. (2010). Dynamic crossmodal links revealed by steady-state responses in auditory-visual divided attention. International Journal of Psychophysiology, 75, 3–15. doi:10.1016/j.ijpsycho.2009.09.013

Desimone, R., & Duncan, J. (1995). Neural mechanisms of selective visual attention. Annual Review of Neuroscience, 18, 193–222. doi:10.1146/annurev.ne.18.030195.001205

Deutsch, J. A., & Deutsch, D. (1963). Attention: Some theoretical considerations. Psychological Review, 70, 80–90.

Dosenbach, N. U., Fair, D. A., Cohen, A. L., Schlaggar, B. L., & Petersen, S. E. (2008). A dual-networks architecture of top-down control. Trends in Cognitive Sciences, 12, 99–105. doi:10.1016/j.tics.2008.01.001

Duncan, J. (2001). An adaptive coding model of neural function in prefrontal cortex. Nature Reviews Neuroscience, 2, 820–829.

Duncan, J. (2010). The multiple-demand (MD) system of the primate brain: Mental programs for intelligent behaviour. Trends in Cognitive Sciences, 14, 172–179. doi:10.1016/j.tics.2010.01.004

Dux, P. E., Ivanoff, J., Asplund, C. L., & Marois, R. (2006). Isolation of a central bottleneck of information processing with time-resolved fMRI. Neuron, 52, 1109–1120. doi:10.1016/j.neuron.2006.11.009

Dux, P. E., & Marois, R. (2009). The attentional blink: A review of data and theory. Attention, Perception, & Psychophysics, 71, 1683–1700. doi:10.3758/APP.71.8.1683

Dux, P. E., Tombu, M. N., Harrison, S., Rogers, B. P., Tong, F., & Marois, R. (2009). Training improves multitasking performance by increasing the speed of information processing in human prefrontal cortex. Neuron, 63, 127–138. doi:10.1016/j.neuron.2009.06.005

Erez, Y., & Duncan, J. (2015). Discrimination of visual categories based on behavioral relevance in widespread regions of frontoparietal cortex. Journal of Neuroscience, 35, 12383–12393. doi:10.1523/JNEUROSCI.1134-15.2015

Ester, E. F., Sprague, T. C., & Serences, J. T. (2015). Parietal and frontal cortex encode stimulus-specific mnemonic representations during visual working memory. Neuron, 87, 893–905. doi:10.1016/j.neuron.2015.07.013

Esterman, M., Liu, G., Okabe, H., Reagan, A., Thai, M., & DeGutis, J. (2015). Frontal eye field involvement in sustaining visual attention: Evidence from transcranial magnetic stimulation. NeuroImage, 111, 542–548. doi:10.1016/j.neuroimage.2015.01.044

Farah, M. J., Hammond, K. M., Levine, D. N., & Calvanio, R. (1988). Visual and spatial mental imagery: Dissociable systems of representation. Cognitive Psychology, 20, 439–462.

Fedorenko, E., Duncan, J., & Kanwisher, N. (2013). Broad domain generality in focal regions of frontal and parietal cortex. Proceedings of the National Academy of Sciences, 110, 16616–16621. doi:10.1073/pnas.1315235110

Fougnie, D., Zughni, S., Godwin, D., & Marois, R. (2015). Working memory storage is intrinsically domain specific. Journal of Experimental Psychology: General, 144, 30–47. doi:10.1037/a0038211

Fuster, J. M. (2001). The prefrontal cortex—An update: Time is of the essence. Neuron, 30, 319–333. doi:10.1016/S0896-6273(01)00285-9

Garavan, H. (1998). Serial attention within working memory. Memory & Cognition, 26, 263–276.

Greenberg, A. S., Esterman, M., Wilson, D., Serences, J. T., & Yantis, S. (2010). Control of spatial and feature-based attention in frontoparietal cortex. Journal of Neuroscience, 30, 14330–14339. doi:10.1523/jneurosci.4248-09.2010

Griffin, I. C., & Nobre, A. C. (2003). Orienting attention to locations in internal representations. Journal of Cognitive Neuroscience, 15, 1176–1194. doi:10.1162/089892903322598139

Han, S. W., & Marois, R. (2013a). Dissociation between process-based and data-based limitations for conscious perception in the human brain. NeuroImage, 64, 399–406. doi:10.1016/j.neuroimage.2012.09.016

Han, S. W., & Marois, R. (2013b). The source of dual-task limitations: Serial or parallel processing of multiple response selections? Attention, Perception, & Psychophysics, 75, 1395–1405. doi:10.3758/s13414-013-0513-2

Harrison, S. A., & Tong, F. (2009). Decoding reveals the contents of visual working memory in early visual areas. Nature, 458, 632–635. doi:10.1038/nature07832

Hazy, T. E., Frank, M. J., & O’Reilly, R. C. (2007). Towards an executive without a homunculus: Computational models of the prefrontal cortex/basal ganglia system. Philosophical Transactions of the Royal Society B, 362, 1601–1613.

Higo, T., Mars, R. B., Boorman, E. D., Buch, E. R., & Rushworth, M. F. (2011). Distributed and causal influence of frontal operculum in task control. Proceedings of the National Academy of Sciences, 108, 4230–4235. doi:10.1073/pnas.1013361108

Hopf, J. M., Luck, S. J., Boelmans, K., Schoenfeld, M. A., Boehler, C. N., Rieger, J., & Heinze, H. J. (2006). The neural site of attention matches the spatial scale of perception. Journal of Neuroscience, 26, 3532–3540. doi:10.1523/JNEUROSCI.4510-05.2006

Huestegge, L., & Koch, I. (2009). Dual-task crosstalk between saccades and manual responses. Journal of Experimental Psychology: Human Perception and Performance, 35, 352–362. doi:10.1037/a0013897

Johnson, J. A., & Zatorre, R. J. (2005). Attention to simultaneous unrelated auditory and visual events: Behavioral and neural correlates. Cerebral Cortex, 15, 1609–1620. doi:10.1093/cercor/bhi039

Johnson, J. A., & Zatorre, R. J. (2006). Neural substrates for dividing and focusing attention between simultaneous auditory and visual events. NeuroImage, 31, 1673–1681. doi:10.1016/j.neuroimage.2006.02.026

Johnston, J. C., & McCann, R. S. (2006). On the locus of dual-task interference: Is there a bottleneck at the stimulus classification stage? Quarterly Journal of Experimental Psychology, 59, 694–719. doi:10.1080/02724980543000015

Jolicœur, P., & Dell’Acqua, R. (1999). Attentional and structural constraints on visual encoding. Psychological Research, 62, 154–164. doi:10.1007/s004260050048

Kahneman, D. (1973). Attention and effort. Englewood Cliffs, NJ: Prentice-Hall.

Kastner, S., Pinsk, M. A., De Weerd, P., Desimone, R., & Ungerleider, L. G. (1999). Increased activity in human visual cortex during directed attention in the absence of visual stimulation. Neuron, 22, 751–761.

Kelley, T. A., Serences, J. T., Giesbrecht, B., & Yantis, S. (2008). Cortical mechanisms for shifting and holding visuospatial attention. Cerebral Cortex, 18, 114–125.

Kennerley, S. W., & Wallis, J. D. (2009). Reward-dependent modulation of working memory in lateral prefrontal cortex. Journal of Neuroscience, 29, 3259–3270. doi:10.1523/jneurosci.5353-08.2009

Koechlin, E., Ody, C., & Kouneiher, F. (2003). The architecture of cognitive control in the human prefrontal cortex. Science, 302, 1181–1185. doi:10.1126/science.1088545

Lavie, N., Hirst, A., de Fockert, J. W., & Viding, E. (2004). Load theory of selective attention and cognitive control. Journal of Experimental Psychology: General, 133, 339–354. doi:10.1037/0096-3445.133.3.339

Lavie, N., & Tsal, Y. (1994). Perceptual load as a major determinant of the locus of selection in visual attention. Perception & Psychophysics, 56, 183–197. doi:10.3758/BF03213897

Liu, T., & Becker, M. W. (2013). Serial consolidation of orientation information into visual short-term memory. Psychological Science, 24, 1044–1050. doi:10.1177/0956797612464381

Mackey, W. E., Devinsky, O., Doyle, W. K., Meager, M. R., & Curtis, C. E. (2016). Human dorsolateral prefrontal cortex is not necessary for spatial working memory. Journal of Neuroscience, 36, 2847–2856. doi:10.1523/JNEUROSCI.3618-15.2016

Marois, R., & Ivanoff, J. (2005). Capacity limits of information processing in the brain. Trends in Cognitive Sciences, 9, 296–305. doi:10.1016/j.tics.2005.04.010

Marti, S., Sigman, M., & Dehaene, S. (2012). A shared cortical bottleneck underlying attentional blink and psychological refractory period. NeuroImage, 59, 2883–2898. doi:10.1016/j.neuroimage.2011.09.063

Meyer, D. E., & Kieras, D. E. (1997). A computational theory of executive cognitive processes and multiple-task performance: Part 1. Basic mechanisms. Psychological Review, 104, 3–65. doi:10.1037/0033-295x.104.1.3

Michalka, S. W., Kong, L., Rosen, M. L., Shinn-Cunningham, B. G., & Somers, D. C. (2015). Short-term memory for space and time flexibly recruit complementary sensory-biased frontal lobe attention networks. Neuron, 87, 882–892. doi:10.1016/j.neuron.2015.07.028

Mishkin, M., Ungerleider, L. G., & Macko, K. A. (1983). Object vision and spatial vision: Two cortical pathways. Trends in Neurosciences, 6, 414–417. doi:10.1016/0166-2236(83)90190-X

Miyake, A., Friedman, N. P., Emerson, M. J., Witzki, A. H., Howerter, A., & Wager, T. D. (2000). The unity and diversity of executive functions and their contributions to complex “frontal lobe” tasks: A latent variable analysis. Cognitive Psychology, 41, 49–100. doi:10.1006/cogp.1999.0734

Montojo, C. A., & Courtney, S. M. (2008). Differential neural activation for updating rule versus stimulus information in working memory. Neuron, 59, 173–182.

Moray, N. (1967). Where is capacity limited? A survey and a model. Acta Psychologica, 27, 84–92.

Mordkoff, J. T., & Yantis, S. (1993). Dividing attention between color and shape: Evidence of coactivation. Perception & Psychophysics, 53, 357–366.

Navon, D., & Gopher, D. (1979). On the economy of the human-processing system. Psychological Review, 86, 214–255. doi:10.1037/0033-295X.86.3.214

Navon, D., & Miller, J. (1987). Role of outcome conflict in dual-task interference. Journal of Experimental Psychology: Human Perception and Performance, 13, 435–448. doi:10.1037/0096-1523.13.3.435

Nee, D. E., Jahn, A., & Brown, J. W. (2014). Prefrontal cortex organization: Dissociating effects of temporal abstraction, relational abstraction, and integration with fMRI. Cerebral Cortex, 24, 2377–2387. doi:10.1093/cercor/bht091

Norman, D. A., & Bobrow, D. G. (1975). On data-limited and resource-limited processes. Cognitive Psychology, 7, 44–64. doi:10.1016/0010-0285(75)90004-3

Norman, D. A., & Shallice, T. (1986). Attention to action: Willed and automatic control of behaviour. In R. J. Davidson, G. E. Schwartz, & D. Shapiro (Eds.), Consciousness and self-regulation: Advances in research and theory (Vol. 4, pp. 1–18). New York, NY: Plenum.

O’Connor, N., & Hermelin, B. (1972). Seeing and hearing and space and time. Perception & Psychophysics, 11, 46–48. doi:10.3758/BF03212682

Offen, S., Gardner, J. L., Schluppeck, D., & Heeger, D. J. (2010). Differential roles for frontal eye fields (FEFs) and intraparietal sulcus (IPS) in visual working memory and visual attention. Journal of Vision, 10, 28. doi:10.1167/10.11.28

Pashler, H. (1994). Dual-task interference in simple tasks—Data and theory. Psychological Bulletin, 116, 220–244. doi:10.1037/0033-2909.116.2.220

Posner, M. I., & Boies, S. J. (1971). Components of attention. Psychological Review, 78, 391–408. doi:10.1037/h0031333