Abstract

Deep neural perception and control networks have become key components of self-driving vehicles. User acceptance is likely to benefit from easy-to-interpret textual explanations which allow end-users to understand what triggered a particular behavior. Explanations may be triggered by the neural controller, namely introspective explanations, or informed by the neural controller’s output, namely rationalizations. We propose a new approach to introspective explanations which consists of two parts. First, we use a visual (spatial) attention model to train a convolutional network end-to-end from images to the vehicle control commands, i.e., acceleration and change of course. The controller’s attention identifies image regions that potentially influence the network’s output. Second, we use an attention-based video-to-text model to produce textual explanations of model actions. The attention maps of controller and explanation model are aligned so that explanations are grounded in the parts of the scene that mattered to the controller. We explore two approaches to attention alignment, strong- and weak-alignment. Finally, we explore a version of our model that generates rationalizations, and compare with introspective explanations on the same video segments. We evaluate these models on a novel driving dataset with ground-truth human explanations, the Berkeley DeepDrive eXplanation (BDD-X) dataset. Code is available at https://github.com/JinkyuKimUCB/explainable-deep-driving.

1 Introduction

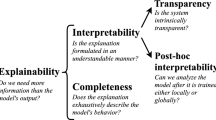

Deep neural networks (DNNs) are an effective tool [3, 26] for learning vehicle controllers for self-driving cars in an end-to-end manner. Despite their effectiveness as function estimators, DNNs are typically cryptic black boxes: there are no explainable states or labels in such a network, and representations are fully distributed as sets of activations. Explainable models that make deep models more transparent are important for a number of reasons: (i) user acceptance – self-driving vehicles are a radical technology for users to accept, and require a very high level of trust; (ii) understanding and extrapolation of vehicle behavior – users should ideally be able to anticipate what the vehicle will do in most situations; (iii) effective communication – explanations help users communicate preferences to the vehicle and vice versa.

Fig. 1. Our model predicts the vehicle’s control commands, i.e., an acceleration and a change of course, at each timestep, while an explanation model generates a natural language explanation of the rationales, e.g., “The car is driving forward because there are no other cars in its lane”, and a visual explanation in the form of attention – attended regions directly influence the textual explanation generation process.

Explanations can be either rationalizations – explanations that justify the system’s behavior in a post-hoc manner, or introspective explanations – explanations that are based on the system’s internal state. Introspective explanations represent causal relationships between the system’s input and its behavior, and address all the goals above. Rationalizations can address acceptance, (i) above, but are less helpful with (ii) understanding the causal behavior of the model or (iii) communication which is grounded in the vehicle’s internal state (known as theory of mind in human communication).

One way of generating introspective explanations is via visual attention [11, 27]. Visual attention filters out non-salient image regions: image areas inside the attended region have a potential causal effect on the output, while those outside cannot. As shown in [11], additional salience filtering can be applied so that the attention map shows only regions that causally affect the output. Visual attention constrains the reasons for the controller’s actions but does not, e.g., tie specific actions to specific input regions, as in “the vehicle slowed down because the light controlling the intersection is red”. It is also likely to be less convenient for passengers to replay an attention map than to listen to a (typically on-demand) spoken textual explanation.

In this work, we focus on generating textual descriptions and explanations, such as the pair “vehicle slows down” and “because it is approaching an intersection and the light is red” as in Fig. 1. Natural language has the advantage of being inherently understandable, and it does not require familiarity with the design of an intelligent system in order to provide useful information. To train such a model, we collect explanations from human annotators. Our explanation dataset is built on top of another large-scale driving dataset [26] collected from dashboard cameras in human-driven vehicles. Annotators view the videos and compose descriptions of the vehicle’s activity and explanations for the actions that the driver performed.

Obtaining training data for vehicle explanations is by itself a significant challenge. The ground-truth explanations are in fact often rationalizations (generated by an observer rather than the driver), and there are additional challenges with acquiring driver data. Even more fundamentally, it is currently impossible to obtain human explanations of what the vehicle controller was thinking, i.e., a real ground truth. Nevertheless, our experiments show that attention alignment between the controller and explanation models generally improves the quality of explanations, i.e., it produces explanations that better match the human rationalizations of the driving videos.

Our contributions are as follows. (1) We propose an introspective textual explanation model for self-driving cars that provides easy-to-interpret explanations for the behavior of a deep vehicle control network. (2) We integrate our explanation generator with the vehicle controller by aligning their attention to ground the explanation, and we compare two approaches: attention-aligned explanations and non-aligned rationalizations. (3) We collected the large-scale Berkeley DeepDrive eXplanation (BDD-X) dataset of 6,984 video clips annotated with driving descriptions, e.g., “The car slows down”, and explanations, e.g., “because it is about to merge with the busy highway”. Our dataset provides a new test-bed for measuring progress towards explainable models for self-driving cars.

2 Related Work

In this section, we review existing work on end-to-end learning for self-driving cars as well as work on visual explanation and justification.

End-to-End Learning for Self-Driving Cars: Most vehicle controllers for self-driving cars follow one of two types of approaches [5]: (1) a mediated perception-based approach and (2) an end-to-end learning approach. The mediated perception-based approach depends on recognizing human-designated features, such as lane markings, traffic lights, pedestrians, or cars, and generally requires demanding parameter tuning to achieve balanced performance [19]. Notable examples include [4, 23], and [16].

As for end-to-end approaches, recent works [3, 26] suggest that neural networks can be successfully applied to self-driving cars in an end-to-end manner. Most of these approaches use behavioral cloning, which learns a driving policy as a supervised learning problem over observation-action pairs from human driving demonstrations. Among these, [3] present a deep neural vehicle controller network that directly maps a stream of dashcam images to steering controls, while [26] use a deep neural network that takes raw pixels and prior vehicle states as input and predicts the vehicle’s future motion. Despite their potential, the effectiveness of these approaches is limited by their inability to explain the rationale for the system’s decisions, which makes their behavior opaque and uninterpretable. In this work, we propose an end-to-end trainable system for self-driving cars that is able to justify its predictions visually via attention maps and textually via natural language.

Visual and Textual Explanations: The importance of explanations for an end-user has been studied from a psychological perspective [17, 18]. These studies show that humans use explanations as a guide for learning and understanding by building inferences and seeking propositions or judgments that enrich their prior knowledge. People usually seek explanations to fill a specific gap in their understanding, depending on their prior knowledge and the goal in question.

Fig. 2. The vehicle controller generates a spatial attention map \(\alpha ^c\) for each frame and predicts the acceleration and change of course (\(\hat{a}_t, \hat{c}_t\)) that condition the explanation. The explanation generator predicts temporal attention across frames (\(\beta \)) and a spatial attention map in each frame (\(\alpha ^j\)). SAA uses \(\alpha ^c\) directly; WAA enforces a loss between \(\alpha ^j\) and \(\alpha ^c\).

In line with this, explainability has recently become a growing field in computer vision and machine learning. In particular, there is growing interest in introspective deep neural networks. [28] use deconvolution to visualize inner-layer activations of convolutional networks. [14] propose automatically generated captions for textual explanations of images. [2] develop a richer notion of the contribution of a pixel to the output. However, a difficulty with deconvolution-style approaches is the lack of formal measures of how the network output is affected by spatially extended features (rather than pixels). Attention-based approaches are an exception to this rule: [11] propose an attention-based approach with causal filtering that removes spurious attention blobs. However, it is also important to be able to justify the decisions that were made and to explain why they are reasonable in a human-understandable manner, i.e., in natural language. For an image classification problem, [7, 8] used an LSTM [9] caption generation model that generates textual justifications for a CNN model. [21] combine an attention-based model and a textual justification system to produce an interpretable model. To our knowledge, ours is the first attempt to justify the decisions of a real-time deep controller through a combination of attention and natural language explanations on a stream of images.

3 Explainable Driving Model

In this paper, we propose a driving model that explains how a driving decision was made both (i) by visualizing the image regions the decision maker attends to and (ii) by generating a textual description and explanation of what triggered a particular driving decision, e.g., “the car continues (description) because traffic flows freely (explanation)”. As summarized in Fig. 2, our model involves two parts: (1) a Vehicle controller, which is trained to learn human-demonstrated vehicle control commands, e.g., an acceleration and a change of course, and which uses a visual (spatial) attention mechanism to identify image regions that potentially influence the network’s output; (2) a Textual explanation generator, which generates textual descriptions and explanations of the controller’s behavior. The key to the approach is to align the attention maps of these two parts.

Preprocessing. Our model is trained to predict two vehicle control commands, i.e., an acceleration and a change of course. At each time t, the acceleration \(a_t\) is obtained by taking the derivative of the speed measurements, and the change of course \(c_t\) is computed as the difference between the vehicle’s current course and a smoothed value obtained with simple exponential smoothing [10]. We provide details in the supplemental material. To reduce the computational burden, we down-sample to 10 Hz and reduce the input dimensionality by resizing raw images to 90\(\times \)160\(\times \)3 with nearest-neighbor scaling. Each image is then normalized by subtracting the mean of its raw pixels and dividing by their standard deviation. This preprocessing is applied to the latest 4 frames, which are then stacked to produce the final input to the neural network.
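The two control targets and the per-image normalization can be sketched in plain Python as follows. This is a minimal illustration under our own assumptions, not the authors’ implementation. We chose the smoothing factor `alpha=0.5`, smoothing that includes the current sample, a backward finite difference for the derivative, and the population standard deviation. The exact recipe is given only in the supplemental material. Wrap-around of the course angle at 360° is ignored.

```python
import math


def acceleration(speeds, dt=0.1):
    """Backward finite-difference derivative of speed; dt = 0.1 s corresponds to 10 Hz."""
    return [(speeds[t] - speeds[t - 1]) / dt for t in range(1, len(speeds))]


def exp_smooth(values, alpha=0.5):
    """Simple exponential smoothing: s_t = alpha * v_t + (1 - alpha) * s_{t-1} (alpha is assumed)."""
    smoothed = [values[0]]
    for v in values[1:]:
        smoothed.append(alpha * v + (1 - alpha) * smoothed[-1])
    return smoothed


def course_change(courses, alpha=0.5):
    """Change of course c_t: current course minus its exponentially smoothed value."""
    return [c - s for c, s in zip(courses, exp_smooth(courses, alpha))]


def standardize(pixels):
    """Normalize one image (flattened pixels): subtract the mean, divide by the std."""
    mean = sum(pixels) / len(pixels)
    std = math.sqrt(sum((p - mean) ** 2 for p in pixels) / len(pixels))
    return [(p - mean) / (std or 1.0) for p in pixels]  # guard against constant images


def stack_latest(frames, k=4):
    """Concatenate the k most recent normalized frames into a single network input."""
    if len(frames) < k:
        raise ValueError(f"need at least {k} frames, got {len(frames)}")
    return [p for frame in frames[-k:] for p in frame]


# Usage: a car speeding up while its course drifts from 0 to 10 degrees.
print(acceleration([0.0, 1.0, 3.0]))     # [10.0, 20.0] (m/s^2)
print(course_change([0.0, 10.0, 10.0]))  # [0.0, 5.0, 2.5] (degrees)
```

With these choices, \(c_t\) is positive while the course is still turning toward a new heading and decays toward zero once the heading is held.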

Convolutional Feature Encoder. We use a convolutional neural network to encode the visual information at time t into a set of visual feature vectors, i.e., a convolutional feature cube \(\mathbf{{X}}_{t} = \{ \mathbf{{x}}_{t,1}, \mathbf{{x}}_{t,2},\ldots , \mathbf{{x}}_{t, l} \} \), where \(\mathbf{{x}}_{t, i}\in \mathbb{R}^{d}\) for \(i\in \{1,2, \ldots , l\}\) and l is the number of spatial regions of the input. Each feature vector contains a high-level description of the objects present in a certain input region, so we can focus selectively on different regions of the image by choosing a subset of these feature vectors. We use a five-layer convolutional network as in [3, 11] and omit max-pooling layers to prevent spatial information loss [15]. The output is a three-dimensional feature cube \({\mathbf{{X}}_t}\) of size \(w\) \(\times \) \(h\) \(\times \) \(d\) at each time t, so that \(l = w \times h\).
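Because no pooling is used, each of the \(w \times h\) grid cells becomes one feature vector. The sketch below checks the grid size under stated assumptions. It assumes the stride pattern of the network in [3] (three stride-2 layers followed by two stride-1 layers) and “same” padding; the padding is our guess, not a detail given in the text. Under these assumptions, a 90\(\times \)160 input yields a 12\(\times \)20 grid, which is consistent with the 12\(\times \)20\(\times \)64 cube reported in Sect. 5. That gives l = 240 regions with d = 64.

```python
import math


def same_conv_out(size, stride):
    """Output length of a convolution with 'same' padding (an assumed padding scheme)."""
    return math.ceil(size / stride)


def feature_grid(height=90, width=160, strides=(2, 2, 2, 1, 1)):
    """Spatial size of the feature cube after five conv layers and no max-pooling."""
    for s in strides:
        height, width = same_conv_out(height, s), same_conv_out(width, s)
    return height, width


def flatten_cube(cube):
    """Turn an h x w x d cube (nested lists) into l = h * w vectors x_{t,i} in R^d."""
    return [cell for row in cube for cell in row]


h, w = feature_grid()
print(h, w, h * w)  # 12 20 240 -> l = 240 regions, each a d = 64 feature vector
```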

3.1 Vehicle Controller

Our vehicle controller is trained in an end-to-end manner. Given a stream of dashcam images and the vehicle’s current sensor measurements, e.g., speed, the controller predicts the acceleration and the change of course at each timestep. We use a deterministic soft attention mechanism that is trainable by standard back-propagation. The soft attention mechanism multiplies the features by the attention weights and additively pools the results through the maps \(\pi \). Our model feeds the context vectors \(\mathbf{{y}}_t^{c}\) produced by the controller map \(\pi ^{c}\) to the controller LSTM:

\[ \mathbf{{y}}_t^{c} = \pi ^{c}\left(\{\alpha _{t,i}^{c}\}, \{\mathbf{{x}}_{t,i}\}\right) = \sum _{i=1}^{l} \alpha _{t,i}^{c}\, \mathbf{{x}}_{t,i} \]

where \(i\in \{1,2,\dots ,l\}\). \(\alpha _{t,i}^{c}\) is an attention weight map output by a spatial softmax and satisfies \(\sum _{i}{\alpha _{t,i}^{c}}=1\). These attention weights can be interpreted as a probability distribution over the l convolutional feature vectors; a location with a high attention weight is salient for the task (driving). The attention model \(f_{ attn }^{c}(\mathbf{{X}}_{t},\mathbf{{h}}_{t-1}^{c})\) is conditioned on the previous LSTM state \(\mathbf{{h}}_{t-1}^{c}\) and the current feature vectors \(\mathbf{{X}}_{t}\). It comprises a fully-connected layer and a spatial softmax that yields the normalized weights \(\{\alpha _{t,i}^{c}\}\).
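Below is a minimal plain-Python sketch of one spatial soft-attention step: score each region, normalize the scores with a spatial softmax, and pool the features with the resulting weights. The text only states that \(f_{ attn }^{c}\) is a fully-connected layer followed by a spatial softmax. The additive score v · tanh(W_x x_{t,i} + W_h h_{t-1}) and the weight names `w_x`, `w_h`, `v` are our assumptions. A purely linear score would not work here, because it adds the same \(\mathbf{{h}}_{t-1}^{c}\) term to every location and that term cancels in the softmax.

```python
import math


def dot(u, v):
    return sum(a * b for a, b in zip(u, v))


def matvec(m, v):
    return [dot(row, v) for row in m]


def softmax(scores):
    """Numerically stable softmax; over spatial locations this is the 'spatial softmax'."""
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def spatial_attention(features, h_prev, w_x, w_h, v):
    """Return (alpha_t, y_t) for features x_{t,1..l} and previous LSTM state h_{t-1}.

    Scores use an assumed additive form v . tanh(W_x x_i + W_h h_{t-1}); the
    context vector is the attention-weighted sum y_t = sum_i alpha_{t,i} x_{t,i}.
    """
    state_term = matvec(w_h, h_prev)
    scores = [dot(v, [math.tanh(a + b) for a, b in zip(matvec(w_x, x), state_term)])
              for x in features]
    alpha = softmax(scores)
    y = [sum(a * x[k] for a, x in zip(alpha, features)) for k in range(len(features[0]))]
    return alpha, y


alpha, y = spatial_attention(
    features=[[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]],  # l = 3 regions, d = 2
    h_prev=[0.5], w_x=[[1.0, 0.0]], w_h=[[1.0]], v=[1.0])
print([round(a, 3) for a in alpha], [round(c, 3) for c in y])
```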

The outputs of the vehicle controller are the vehicle’s acceleration \(\hat{a}_{t}\) and change of course \(\hat{c}_{t}\). To this end, we use additional multi-layer fully-connected blocks with ReLU non-linearities, denoted by \(f_{ a }(\mathbf{{y}}_t^{c}, \mathbf{{h}}_t^{c})\) and \(f_{ c }(\mathbf{{y}}_t^{c}, \mathbf{{h}}_t^{c})\). We also add the entropy H of the attention weights to the objective function:

\[ \mathcal {L}_{c} = \sum _{t} \left( |a_t - \hat{a}_t| + |c_t - \hat{c}_t| \right) + \lambda _c \sum _{t} H(\alpha _t^{c}), \qquad H(\alpha _t^{c}) = -\sum _{i} \alpha _{t,i}^{c} \log \alpha _{t,i}^{c} \]

The entropy is computed on the attention map as though it were a probability distribution. Because the entropy term enters the objective with a positive weight, minimizing the loss also minimizes the entropy of the attention map. Low-entropy attention maps are sparse and emphasize relatively few regions. The hyperparameter \(\lambda _ c \) controls the strength of the entropy regularization term.
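The control objective can be sketched as follows. We assume an L1 (absolute-error) control term, which matches the mean absolute error used to evaluate the controller in Sect. 5. To this we add \(\lambda _c\) times the attention entropy, summed over timesteps. The small `eps` that guards against log(0) is our addition.

```python
import math


def entropy(alpha, eps=1e-12):
    """H(alpha) = -sum_i alpha_i log alpha_i, treating the attention map as a distribution."""
    return -sum(a * math.log(a + eps) for a in alpha)


def control_loss(a_true, a_pred, c_true, c_pred, attn_maps, lambda_c=100.0):
    """Sum over t of |a_t - a_hat_t| + |c_t - c_hat_t| + lambda_c * H(alpha_t^c)."""
    loss = 0.0
    for t in range(len(a_true)):
        loss += abs(a_true[t] - a_pred[t]) + abs(c_true[t] - c_pred[t])
        loss += lambda_c * entropy(attn_maps[t])
    return loss


# A peaked (sparse) map has lower entropy than a uniform one, so it is penalized less.
print(entropy([0.7, 0.1, 0.1, 0.1]) < entropy([0.25] * 4))  # True
```

Larger values of `lambda_c` push the maps toward fewer hot regions. This is the sparsity/accuracy trade-off examined in Sect. 5.1.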

3.2 Attention Alignments

The controller attention map identifies the input regions that the network attends to, and these regions have a direct influence on the network’s output. Thus, to yield an “introspective” explanation, we argue that the explanation model must attend to the same areas. For example, if a vehicle controller predicts “acceleration” after detecting a green traffic light, the textual justification must mention this evidence, e.g., “because the light has turned green”. Here, we describe two approaches for aligning the vehicle controller and the textual justifier so that they look at the same input regions.

Strongly Aligned Attention (SAA): A consecutive set of spatially attended input regions, each of which is encoded as a context vector \(\mathbf{{y}}_t^{c}\) by the vehicle controller, can be used directly to generate a textual explanation (see Fig. 2, right-top). Both models thus share a single attention layer. As we detail in Sect. 3.3, our explanation module applies temporal attention with weights \(\beta \) directly to the controller context vectors \(\{\mathbf{{y}}_t^c, t=1,\ldots \}\), which allows flexibility in output tokens relative to input samples.

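Weakly Aligned Attention (WAA): Instead of directly using the vehicle controller’s attention, an explanation generator can have its own spatial attention network (see Fig. 2, right-bottom). A loss between the two attention maps, namely the Kullback-Leibler divergence (\(D_{ KL }\)), makes the explanation generator refer to the salient objects:

\[ \mathcal {L}_{a} = \lambda _{a} \sum _{t} D_{ KL }\left(\alpha _t^{c} \,\|\, \alpha _t^{j}\right) = \lambda _{a} \sum _{t} \sum _{i} \alpha _{t,i}^{c} \left( \log \alpha _{t,i}^{c} - \log \alpha _{t,i}^{j} \right) \]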

where \(\alpha ^{c}\) and \(\alpha ^{j}\) are the attention maps generated by the vehicle controller and the explanation generator model, respectively. We use a hyperparameter \(\lambda _{a}\) to control the strength of the regularization term.
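A sketch of the weak-alignment loss over a clip’s sequence of attention maps follows. We assume the direction \(D_{ KL }(\alpha ^{c} \,\|\, \alpha ^{j})\), so the controller map acts as the target distribution. The small `eps` for numerical safety is our addition.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """D_KL(p || q) = sum_i p_i (log p_i - log q_i); eps avoids log(0)."""
    return sum(pi * (math.log(pi + eps) - math.log(qi + eps)) for pi, qi in zip(p, q))


def alignment_loss(controller_maps, explainer_maps, lambda_a=10.0):
    """lambda_a * sum_t D_KL(alpha_t^c || alpha_t^j) over the frames of a clip."""
    return lambda_a * sum(kl_divergence(ac, aj)
                          for ac, aj in zip(controller_maps, explainer_maps))


# Identical maps cost nothing; an explainer looking elsewhere is penalized.
print(alignment_loss([[0.5, 0.5]], [[0.5, 0.5]]))        # 0.0
print(alignment_loss([[0.9, 0.1]], [[0.1, 0.9]]) > 0.0)  # True
```

With this direction, the loss is large wherever the controller attends strongly but the explainer does not. This is the failure mode that weak alignment is meant to prevent.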

3.3 Textual Explanation Generator

Our textual explanation generator takes a variable-length sequence of video frames and generates a variable-length description/explanation. Descriptions and explanations are typically part of the same sentence in the training data but are annotated with a separator. In training and testing, we use a synthetic separator token \(\texttt {<sep>}\) between the description and the explanation, but treat them as a single sequence. The explanation LSTM predicts the description/explanation sequence and outputs per-word softmax probabilities.
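Treating the description and the explanation as one sequence can be sketched as below. Only the `<sep>` separator comes from the text. Lowercase whitespace tokenization and the helper names `build_target` and `split_prediction` are our own illustrative assumptions.

```python
SEP = "<sep>"


def build_target(description, explanation):
    """Join description and explanation into one token sequence split by <sep>."""
    return description.lower().split() + [SEP] + explanation.lower().split()


def split_prediction(tokens):
    """Recover (description, explanation) from a decoded token sequence."""
    if SEP not in tokens:
        return " ".join(tokens), ""
    k = tokens.index(SEP)
    return " ".join(tokens[:k]), " ".join(tokens[k + 1:])


target = build_target("The car slows down", "because it is about to merge with the busy highway")
print(target[:6])  # ['the', 'car', 'slows', 'down', '<sep>', 'because']
print(split_prediction(target))
```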

The source of the context vectors for the description generator depends on the type of alignment between the attention maps. For weakly aligned attention or rationalizations, the explanation generator creates its own spatial attention map \(\alpha ^j\) at each time step t. For weakly aligned attention, this map is trained with a loss against the controller attention map; no such loss is used when generating rationalizations. The attention map \(\alpha ^j\) is applied to the CNN output, yielding context vectors \(\mathbf{{y}}_t^j\).

Our textual explanation generator explains the rationale behind the driving model, and thus we argue that the justifier needs the outputs of the vehicle motion predictor as input. We concatenate the tuple \((\hat{a}_{t}, \hat{c}_{t})\) with the spatially attended context vector: \(\mathbf{{y}}_t^{j}\) for weakly aligned attention, or \(\mathbf{{y}}_t^{c}\) for strongly aligned attention. This concatenated vector is then used to update the explanation LSTM.
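The input assembly for the explanation LSTM at time t might look as follows. The function name, the mode strings, and the decision to put the control outputs first in the concatenation are our own choices for illustration.

```python
def explainer_input(a_hat, c_hat, y_controller, y_explainer, alignment):
    """Concatenate (a_hat_t, c_hat_t) with the context vector chosen by the alignment type.

    alignment="strong": reuse the controller's context vector y_t^c (SAA).
    alignment="weak":   use the explainer's own context vector y_t^j (WAA).
    """
    if alignment == "strong":
        context = y_controller
    elif alignment == "weak":
        context = y_explainer
    else:
        raise ValueError(f"unknown alignment: {alignment!r}")
    return [a_hat, c_hat] + list(context)


print(explainer_input(0.5, -0.1, [1.0, 2.0], [3.0, 4.0], "strong"))  # [0.5, -0.1, 1.0, 2.0]
```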

The explanation module applies temporal attention with weights \(\beta \) either directly to the controller context vectors \(\{\mathbf{{y}}_t^c, t=1,\ldots \}\) (strong alignment) or to the explanation context vectors \(\{\mathbf{{y}}_t^j, t=1,\ldots \}\) (weak alignment or rationalization). Such attention over the input sequence is common in sequence-to-sequence models and allows flexibility in output tokens relative to input samples [1]. Applying temporal attention yields a context vector \(\mathbf{{e}}_k\) for each output step k (dropping the c or j superscripts on \(\mathbf{{y}}\)):

\[ \mathbf{{e}}_{k} = \pi \left(\{\beta _{k,t}\}, \{\mathbf{{y}}_{t}\}\right) = \sum _{t} \beta _{k,t}\, \mathbf{{y}}_{t} \]

where \(\sum _{t}{\beta _{k, t}}=1\). The weight \(\beta _{k, t}\) at each decoding step k is computed by an attention model \(f_{ attn }^{e}(\{\mathbf{{y}}_{t}\},\mathbf{{h}}_{k-1}^{e})\), which is similar to the spatial attention model described in Sect. 3.1 (see supplemental material for details).
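A sketch of one decoding step of temporal attention follows. The scores over time are turned into weights \(\beta _{k,t}\) with a softmax, and those weights pool the per-frame context vectors. The bilinear score \(\mathbf{{y}}_t \cdot (W \mathbf{{h}}_{k-1}^{e})\) and the matrix name `w` are assumptions standing in for \(f_{ attn }^{e}\), whose exact form is given only in the supplemental material.

```python
import math


def softmax(scores):
    top = max(scores)
    exps = [math.exp(s - top) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def temporal_attention(contexts, h_prev, w):
    """Return (beta_k, e_k) with e_k = sum_t beta_{k,t} y_t.

    contexts: per-frame context vectors y_1..y_T (each of length d)
    h_prev:   explanation LSTM state h_{k-1}^e
    w:        d x len(h_prev) matrix of an assumed bilinear score y_t . (W h_{k-1}^e)
    """
    proj = [sum(wij * hj for wij, hj in zip(row, h_prev)) for row in w]
    scores = [sum(a * b for a, b in zip(y, proj)) for y in contexts]
    beta = softmax(scores)  # weights over time, summing to 1
    d = len(contexts[0])
    e = [sum(b * y[i] for b, y in zip(beta, contexts)) for i in range(d)]
    return beta, e


beta, e = temporal_attention([[1.0, 0.0], [0.0, 1.0]], h_prev=[1.0], w=[[2.0], [0.0]])
print([round(b, 3) for b in beta])  # [0.881, 0.119]
```

Because `h_prev` changes at every output step k, each generated word can draw on a different part of the clip.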

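To summarize, we train the justifier by minimizing the following negative log-likelihood together with the vehicle control estimation loss \(\mathcal {L}_{c}\) and the attention alignment loss \(\mathcal {L}_{a}\):

\[ \mathcal {L} = -\sum _{k} \log p\left(o_k \mid o_{k-1}, \mathbf{{h}}_{k}^{e}, \mathbf{{e}}_{k}\right) + \mathcal {L}_{a} + \mathcal {L}_{c} \]

where \(o_k\) is the k-th output token, \(\mathbf{{h}}_{k}^{e}\) is the explanation LSTM state, and \(\mathbf{{e}}_{k}\) is the temporally attended context vector at step k.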
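Given the probabilities the decoder assigned to each ground-truth token, the objective can be sketched as follows. The helper names are hypothetical. Because the controller is trained first and then frozen (Sect. 5), \(\mathcal {L}_{c}\) is constant while the explanation generator is trained. In practice, therefore, only the caption term and \(\mathcal {L}_{a}\) drive the justifier’s updates.

```python
import math


def caption_nll(token_probs):
    """-sum_k log p(o_k | o_{k-1}, h_k^e, e_k), given p for each ground-truth token o_k."""
    return -sum(math.log(p) for p in token_probs)


def total_loss(token_probs, loss_align, loss_control):
    """Caption NLL plus the attention-alignment and vehicle-control losses."""
    return caption_nll(token_probs) + loss_align + loss_control


# A 3-token target predicted with probabilities 0.5, 0.25 and 1.0:
print(round(caption_nll([0.5, 0.25, 1.0]), 4))  # 2.0794 (= 3 log 2)
```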

Fig. 3. (A) Examples of input frames and the corresponding human-annotated action descriptions and justifications of how a driving decision was made. For visualization, we sample frames every two seconds. (B) BDD-X dataset details: over 77 h of driving with time-stamped human annotations for action descriptions and justifications.

4 Berkeley DeepDrive eXplanation Dataset (BDD-X)

In order to effectively generate and evaluate textual driving rationales, we have collected textual justifications for a subset of the Berkeley DeepDrive (BDD) dataset [26]. This dataset contains videos, approximately 40 s in length, captured by a dashcam mounted behind the front mirror of the vehicle. The videos are mostly captured during urban driving in various weather conditions, during both day and night. The dataset also includes driving on other road types, such as residential roads (with and without lane markings), and contains all the typical driver activities, such as staying in a lane, turning, and switching lanes. Alongside the video data, the dataset provides a set of time-stamped sensor measurements, such as the vehicle’s velocity, course, and GPS location. For sensor logs that are not synchronized with the video time-stamps, we use interpolated measurements.
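The text says only that interpolated measurements are used. The sketch below assumes simple linear interpolation, clamps queries to the ends of the log, and ignores course wrap-around at 360°.

```python
from bisect import bisect_left


def interpolate(sensor_times, sensor_values, query_time):
    """Linearly interpolate a sensor log (sorted by time) at a video frame time-stamp."""
    if query_time <= sensor_times[0]:
        return sensor_values[0]   # clamp before the first reading
    if query_time >= sensor_times[-1]:
        return sensor_values[-1]  # clamp after the last reading
    j = bisect_left(sensor_times, query_time)  # first reading at or after query_time
    t0, t1 = sensor_times[j - 1], sensor_times[j]
    v0, v1 = sensor_values[j - 1], sensor_values[j]
    return v0 + (v1 - v0) * (query_time - t0) / (t1 - t0)


# Speed logged at 1 Hz, queried at a frame time between two readings:
print(interpolate([0.0, 1.0, 2.0], [0.0, 10.0, 30.0], 1.5))  # 20.0
```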

In order to increase trust and reliability, the machine learning system underlying self-driving cars should be able to explain why it makes certain decisions at certain times. Moreover, a car that justifies its decisions in natural language would also be user-friendly. Hence, we annotate a subset of the BDD dataset with action descriptions and justifications for all driving events, along with their timestamps. We provide examples from our Berkeley DeepDrive eXplanation (BDD-X) dataset in Fig. 3(A).

Annotation. We provide a driving video and ask a human annotator on Amazon Mechanical Turk to imagine being a driving instructor. Note that we specifically select annotators who are familiar with US driving rules. The annotator has to describe what the driver is doing (especially when the behavior changes) and why, from the point of view of a driving instructor. Each described action must be accompanied by start and end time-stamps. The annotator may pause the video and move forward and backward through it while searching for activities that are interesting and justifiable.

To ensure that the annotators provide driving rationales as well as descriptions, we require them to enter the action description and the action justification separately, e.g., “The car is moving into the left lane” and “because the school bus in front of it is stopping.” In our preliminary annotation studies, we found that separate annotation boxes help annotators understand the task and perform better.

Dataset Statistics. Our dataset (see Fig. 3(B)) is composed of over 77 h of driving within 6,984 videos. The videos are taken in diverse driving conditions, e.g., day/night, highway/city/countryside, and summer/winter. Each video, 40 s long on average, contains around 3–4 actions, e.g., speeding up, slowing down, or turning right, all of which are annotated with a description and an explanation. Our dataset contains over 26K activities in over 8.4M frames. We introduce training, validation, and test sets containing 5,588, 698, and 698 videos, respectively.

Inter-human Agreement. Although we do not have access to the internal thought process of the drivers, one can infer the reasons behind their actions from the visual evidence in the scene. Moreover, it would be challenging, if at all possible, to set up a data collection process that enables drivers to report justifications for all their actions. We ensure the high quality of the collected annotations by relying on a pool of qualified workers (i.e., workers who pass a qualification test) and on selective manual inspection.

Further, we measure the inter-human agreement on a subset of 998 training videos, each of which has been annotated by two different workers. Our analysis is as follows. In 72% of videos, the number of annotated intervals differs by less than 3. The average temporal IoU across annotators is 0.63 (\(SD=0.21\)). When \(IoU>0.5\), the CIDEr score across action descriptions is 142.60 and across action justifications is 97.49 (random choice: 39.40 and 28.39, respectively). When \(IoU>0.5\) and the action descriptions from the two annotators are identical (165 clips), the CIDEr score across justifications is 200.72. A strong baseline, which selects a justification from a different video with the same action description, yields a CIDEr score of 136.72. These results show agreement among annotators and the relevance of the collected action descriptions and justifications.
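The temporal IoU between two annotated intervals is the length of their overlap divided by the length of their union. The text does not specify how intervals from the two annotators are paired before averaging. The best-match averaging in `mean_best_iou` below is our assumption, and the helper name is hypothetical.

```python
def temporal_iou(a, b):
    """IoU of two time intervals given as (start, end) in seconds."""
    inter = max(0.0, min(a[1], b[1]) - max(a[0], b[0]))
    union = (a[1] - a[0]) + (b[1] - b[0]) - inter
    return inter / union if union > 0 else 0.0


def mean_best_iou(intervals_a, intervals_b):
    """Average, over annotator A's intervals, of the best IoU with any of B's (assumed pairing)."""
    best = [max(temporal_iou(a, b) for b in intervals_b) for a in intervals_a]
    return sum(best) / len(best)


print(round(temporal_iou((0.0, 10.0), (5.0, 15.0)), 3))                    # 0.333
print(mean_best_iou([(0.0, 10.0), (20.0, 30.0)], [(0.0, 10.0), (25.0, 30.0)]))  # 0.75
```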

Coverage of Justifications. The BDD-X dataset has over 26k annotations (77 h) collected from a substantial random subset of a large-scale crowd-sourced driving video dataset, which covers all the typical driver activities in urban driving. The vocabulary sizes of the training action descriptions and justifications are 906 and 1,668 words, respectively, which suggests that justifications are more diverse than descriptions. Some of the common actions are (in decreasing frequency): moving forward, stopping, accelerating, slowing, turning, merging, veering, and pulling [in]. Justifications cover most of the relevant concepts: traffic signs/lights, cars, lanes, crosswalks, passing, parking, pedestrians, waiting, blocking, safety, etc.

Fig. 4. Vehicle controller’s attention maps for four different entropy regularization coefficients \(\lambda _{c}\)={0,10,100,1000}. Red parts indicate where the model pays more attention. Higher values of \(\lambda _{c}\) make the attention maps sparser. We observe that sparser attention maps improve the performance of generating textual explanations, while control performance is slightly degraded.

5 Results and Discussion

Here, we first provide our training and evaluation details, and then analyze our vehicle controller and our textual justifier quantitatively and qualitatively.

Training and Evaluation Details. As the convolutional feature encoder, we use a 5-layer CNN [3] that produces a 12\(\times \)20\(\times \)64-dimensional convolutional feature cube from its last layer. The controller following the CNN has 5 fully connected layers (hidden dimensions: 1164, 100, 50, 10), which predict the acceleration and the change of course, and is trained end-to-end from scratch. Other, more expressive networks may improve performance over our base CNN configuration, but such explorations are outside our scope. Given the obtained convolutional feature cube, we first train our vehicle controller, and then we train the explanation generator (a single-layer LSTM unless stated otherwise) with the control network frozen. For training, we use the Adam optimizer [12], dropout [22] of 0.5 on hidden-state connections, and Xavier initialization [6]. The dataset is split into a training set (80%, 5,588 videos), a validation set (10%, 698 videos), and a test set (10%, 698 videos). Our model takes less than a day to train on a single NVIDIA Titan X GPU.

For evaluating the vehicle controller, we use the mean absolute error (lower is better) and the distance correlation (higher is better). For the justifier, we use BLEU [20], METEOR [13], and CIDEr-D [24], as well as human evaluation. These automatic metrics are widely used to evaluate video and image captioning models against ground truth.
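Both controller metrics can be computed directly from the predicted and ground-truth sequences. The sketch uses the standard sample (V-statistic) definition of distance correlation. The estimator used for the reported numbers is not stated, so treat this as an assumption. Unlike Pearson correlation, distance correlation also detects nonlinear dependence.

```python
import math


def mean_absolute_error(y_true, y_pred):
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def _double_centered(x):
    """Pairwise distances |x_i - x_j| with row, column and grand means removed."""
    n = len(x)
    d = [[abs(x[i] - x[j]) for j in range(n)] for i in range(n)]
    row = [sum(r) / n for r in d]  # d is symmetric, so column means equal row means
    grand = sum(row) / n
    return [[d[i][j] - row[i] - row[j] + grand for j in range(n)] for i in range(n)]


def distance_correlation(x, y):
    """Sample distance correlation in [0, 1]; its population value is 0 iff x and y are independent."""
    a, b = _double_centered(x), _double_centered(y)
    n = len(x)

    def dcov2(p, q):
        return sum(p[i][j] * q[i][j] for i in range(n) for j in range(n)) / (n * n)

    denom = math.sqrt(dcov2(a, a) * dcov2(b, b))
    return math.sqrt(max(0.0, dcov2(a, b)) / denom) if denom > 0 else 0.0


xs = [-2.0, -1.0, 0.0, 1.0, 2.0]
print(mean_absolute_error([1.0, 2.0], [2.0, 4.0]))          # 1.5
print(distance_correlation(xs, [v * v for v in xs]) > 0.0)  # True, though Pearson r is 0 here
```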

5.1 Evaluating Vehicle Controller

We start by quantitatively comparing variants of our vehicle controller with the state of the art, including variants of the work by Bojarski et al. [3] and Kim et al. [11], in Table 1. Note that these works differ from ours in that their output is the curvature of driving, while our model estimates continuous acceleration and change-of-course values. Thus, their models have a single output, while ours estimates both control commands. In this experiment, we replaced their output layer with ours. For a fair comparison, we use an identical CNN for all models.

In this experiment, each model estimates the vehicle’s acceleration and change of course. This generally requires prior knowledge of the vehicle’s current state (i.e., speed and course) and navigational inputs, especially in urban driving. Using the latest four consecutive frames and prior inputs (i.e., the vehicle’s motion measurements and navigational information) improves control prediction accuracy (see 3rd vs. 7th row), and visual attention also provides improvements (see 1st vs. 3rd row). Specifically, our model without the entropy regularization term (last row) outperforms the CNN-based approaches [3] and [11]. The improvement is especially pronounced for acceleration estimation.

In Fig. 4 we compare input images (first column) and the corresponding attention maps for different entropy regularization coefficients \(\lambda _{c}\)=\(\{0,10,100,1000\}\). Red indicates high attention and blue low attention. As we see, higher values of \(\lambda _{c}\) lead to sparser maps. For better visualization, each attention map is overlaid, together with its contour lines, on the input image.

Quantitatively, the controller performance (error and correlation) degrades slightly as \(\lambda _c\) increases and the attention maps become sparser (see bottom four rows in Table 1). So there is some tension between sparse maps, which are more interpretable, and controller performance. As an alternative to regularization, [11] use causal filtering over the controller’s attention maps and achieve about a 60% reduction in “hot” attention pixels. Causal filtering is desirable for the present work not only because it improves sparseness, but also because after causal filtering, “hot” regions necessarily have a causal effect on controller behavior, whereas unfiltered attention regions may not. We leave this to future work.

5.2 Evaluating Textual Explanations

In this section, we evaluate textual explanations against the ground truth explanation using automatic evaluation measures, and also provide human evaluation followed by a qualitative analysis.

Automatic Evaluation. For a state-of-the-art comparison, we implement S2VT [25] and its variants. Note that in our implementation, S2VT uses our CNN and does not use optical flow features. In Table 2, we report a summary of our experiments validating the quantitative effectiveness of our approach. Rows 5–10 show that the best explanation results are generally obtained with weakly aligned attention. Compared with row 4, the introspective models all achieve higher explanation-generation scores than the rationalization model. Description scores are more mixed, but most of the introspective model scores are higher. As we will see below, our rationalization model focuses on visual saliencies, which sometimes differ from what the controller actually “looks at”. For example, in Fig. 5 (5th example), our controller sees the vehicle in front, and our introspective models generate explanations such as “because the car in front is moving slowly”. Our rationalization model does not see the front vehicle and generates “because it’s turning to the right”.

Because our training data are annotations of driving videos by human observers, not explanations given by the drivers themselves, they are post-hoc rationalizations. However, because they are based on visual evidence (e.g., the presence of a turn-right sign explains why the driver turned right, even though we do not have access to the driver’s exact thought process), they reflect typical causes of human driver behavior. The data suggest that grounding the explanations in the controller’s internal state helps produce explanations that better align with third-party human explanations. Biasing the explanations toward the controller state, as the WAA and SAA models do, improves their plausibility from a human perspective, which is a good sign. We further analyze human preference in the evaluation below.

Human Evaluation. In our first human evaluation experiment, the human judges are shown only the descriptions, while in the second experiment they see only the explanations (e.g., “The car ... because <explanation>”). This excludes the effect of the explanations on the description ratings, and vice versa. We randomly select 250 video intervals and compare the Rationalization, WAA (\(\lambda _{a}\)=10, \(\lambda _{c}\)=100), and SAA (\(\lambda _{c}\)=100) predictions. The judges are asked to rate each description/explanation on a scale from 1 to 4 (1: correct and specific/detailed, 2: correct, 3: minor error, 4: major error). We collect ratings from 3 human judges for each task. Finally, we compute the majority vote: a description/explanation counts as correct if at least 2 of the 3 judges rate it 1 or 2.
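The majority-vote criterion can be stated compactly. The helper names are ours, and the thresholds follow the rating scale described above, under which ratings 1 and 2 count as correct.

```python
def majority_correct(ratings, correct_max=2, min_votes=2):
    """True if at least `min_votes` judges gave a rating of 1 or 2 (i.e., <= correct_max)."""
    return sum(1 for r in ratings if r <= correct_max) >= min_votes


def percent_correct(all_ratings):
    """Share of items (in %) judged correct by majority vote; one list of 3 ratings per item."""
    votes = [majority_correct(r) for r in all_ratings]
    return 100.0 * sum(votes) / len(votes)


print(percent_correct([[1, 2, 4], [3, 3, 1], [2, 2, 2], [4, 4, 4]]))  # 50.0
```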

Example descriptions and explanations generated by our model compared to human annotations. The top row shows the raw input images; the following rows show the attention maps of the vehicle controller, the textual explanation generator, and the rationalization model (Note: (\(\lambda_{c}, \lambda_{a}\)) = (100, 10), and the synthetic separator token is replaced by ‘+’).

As shown in Table 3, our WAA model outperforms the other two, supporting the results above. Interestingly, according to the human judges, Rationalization does better than SAA on this subset. This is perhaps because the explanation in SAA relies on exactly the same visual evidence as the controller, which may include counterfactually important regions (e.g., where a stop sign could appear) that may confuse the explanation module.

Qualitative Analysis of the Textual Justifier. As Fig. 5 shows, our proposed textual explanation model generates plausible descriptions and explanations, and also visualizes the attended evidence for them. In the first example of Fig. 5, the controller attends to neighboring vehicles and lane markings, while the explanation model generates “the car is driving forward” (description) and “because traffic is moving freely” (explanation). Fig. 5 also covers other common driving situations, such as driving forward (1st example), slowing/stopping (2nd, 3rd, and 5th), and turning (4th and 6th). Our explanations are also diverse; e.g., they give various reasons for stopping: red lights, stop signs, and traffic. We provide more examples in the supplementary material.

6 Conclusion

We described an end-to-end explainable driving model for self-driving cars that incorporates a grounded introspective explanation model. We showed that (i) incorporating an attention mechanism and prior inputs improves vehicle control prediction accuracy compared to baselines, (ii) our grounded (introspective) model generates accurate, human-understandable textual descriptions and explanations of driving behavior, (iii) attention alignment is effective at combining the vehicle controller and the justification model, and (iv) our BDD-X dataset allows us to train our interpretable justification model and to evaluate it automatically against human annotations.

Recent work [11] suggests that causal filtering over attention heat maps can usefully reduce explanation complexity by removing spurious blobs that do not significantly affect the output. The causal filtering idea is worth exploring to obtain causal attention heat maps, which can provide the causal grounds for the model's reasoning. Furthermore, it would be beneficial to incorporate a stronger perception pipeline, e.g., object detectors, to introduce more “grounded” visual representations and further improve the quality and diversity of the generated explanations. Finally, incorporating the driver's eye gaze into our explanation model to mimic the driver's behavior is an interesting direction for future work.
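The causal filtering idea mentioned above can be illustrated with a simplified sketch; it is not the procedure of [11]. It assumes the attention map is split into connected blobs above a threshold, that each blob is removed by zeroing it in the attention map itself, and that `predict` is a hypothetical stand-in for the controller output. Blobs whose removal barely changes that output are treated as spurious and dropped. Thresholds and function names are illustrative.

```python
from collections import deque
from typing import Callable, List

import numpy as np

def attention_blobs(att: np.ndarray, thresh: float) -> List[np.ndarray]:
    """Boolean masks of 4-connected regions where att > thresh."""
    above = att > thresh
    seen = np.zeros_like(above)
    H, W = att.shape
    blobs = []
    for i in range(H):
        for j in range(W):
            if not above[i, j] or seen[i, j]:
                continue
            mask = np.zeros_like(above)
            queue = deque([(i, j)])
            seen[i, j] = True
            while queue:  # breadth-first flood fill of one blob
                y, x = queue.popleft()
                mask[y, x] = True
                for dy, dx in ((1, 0), (-1, 0), (0, 1), (0, -1)):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < H and 0 <= nx < W and above[ny, nx] and not seen[ny, nx]:
                        seen[ny, nx] = True
                        queue.append((ny, nx))
            blobs.append(mask)
    return blobs

def causal_filter(att: np.ndarray, predict: Callable[[np.ndarray], float],
                  att_thresh: float = 0.5, effect_thresh: float = 0.1) -> np.ndarray:
    """Keep only blobs whose removal changes predict(att) by more than effect_thresh."""
    base = predict(att)
    kept = np.zeros_like(att)
    for mask in attention_blobs(att, att_thresh):
        ablated = np.where(mask, 0.0, att)  # zero out this blob only
        if abs(predict(ablated) - base) > effect_thresh:
            kept[mask] = att[mask]
    return kept

# Toy controller (hypothetical) that only reads the left half of the map.
def toy_predict(a: np.ndarray) -> float:
    return float(a[:, : a.shape[1] // 2].sum())

att = np.zeros((4, 6))
att[1:3, 0:2] = 1.0  # blob the toy controller depends on
att[1:3, 4:6] = 0.9  # blob the toy controller ignores
filtered = causal_filter(att, toy_predict)
```

In this toy case only the left blob survives, since zeroing the right blob leaves the toy output unchanged.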

Notes

1. The number of video intervals (not full videos) in which the provided action descriptions (not explanations) are identical (common actions, e.g., “the car slows down”).

References

[1] Bahdanau, D., Cho, K., Bengio, Y.: Neural machine translation by jointly learning to align and translate. In: International Conference on Learning Representations (ICLR) (2014)

[2] Bojarski, M., et al.: VisualBackProp: visualizing CNNs for autonomous driving. CoRR abs/1611.05418 (2016)

[3] Bojarski, M., et al.: End to end learning for self-driving cars. CoRR abs/1604.07316 (2016)

[4] Buehler, M., Iagnemma, K., Singh, S.: The DARPA Urban Challenge: Autonomous Vehicles in City Traffic. Springer, Heidelberg (2009). https://doi.org/10.1007/978-3-642-03991-1

[5] Chen, C., Seff, A., Kornhauser, A., Xiao, J.: DeepDriving: learning affordance for direct perception in autonomous driving. In: 2015 IEEE International Conference on Computer Vision (ICCV), pp. 2722–2730. IEEE (2015)

[6] Glorot, X., Bengio, Y.: Understanding the difficulty of training deep feedforward neural networks. In: AISTATS, vol. 9, pp. 249–256 (2010)

[7] Hendricks, L.A., Akata, Z., Rohrbach, M., Donahue, J., Schiele, B., Darrell, T.: Generating visual explanations. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9908, pp. 3–19. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46493-0_1

[8] Hendricks, L.A., Hu, R., Darrell, T., Akata, Z.: Grounding visual explanations. In: European Conference on Computer Vision (ECCV) (2018)

[9] Hochreiter, S., Schmidhuber, J.: LSTM can solve hard long time lag problems. In: Advances in Neural Information Processing Systems, pp. 473–479 (1997)

[10] Hyndman, R., Koehler, A.B., Ord, J.K., Snyder, R.D.: Forecasting with Exponential Smoothing: the State Space Approach. Springer, Heidelberg (2008). https://doi.org/10.1007/978-3-540-71918-2

[11] Kim, J., Canny, J.: Interpretable learning for self-driving cars by visualizing causal attention. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 2942–2950 (2017)

[12] Kingma, D.P., Ba, J.: Adam: a method for stochastic optimization. In: International Conference on Learning Representations (ICLR) (2015)

[13] Lavie, A., Agarwal, A.: Meteor: an automatic metric for MT evaluation with improved correlation with human judgments. In: Proceedings of the EMNLP 2011 Workshop on Statistical Machine Translation, pp. 65–72 (2005)

[14] LeCun, Y., Bengio, Y., Hinton, G.: Deep learning. Nature 521(7553), 436–444 (2015)

[15] Lee, H., Grosse, R., Ranganath, R., Ng, A.Y.: Convolutional deep belief networks for scalable unsupervised learning of hierarchical representations. In: ICML, pp. 609–616. ACM (2009)

[16] Levinson, J., et al.: Towards fully autonomous driving: systems and algorithms. In: Intelligent Vehicles Symposium (IV), pp. 163–168. IEEE (2011)

[17] Lombrozo, T.: Explanation and abductive inference. In: The Oxford Handbook of Thinking and Reasoning (2012)

[18] Lombrozo, T.: The structure and function of explanations. Trends Cogn. Sci. 10(10), 464–470 (2006)

[19] Paden, B., Čáp, M., Yong, S.Z., Yershov, D., Frazzoli, E.: A survey of motion planning and control techniques for self-driving urban vehicles. IEEE Trans. Intell. Veh. 1(1), 33–55 (2016)

[20] Papineni, K., Roukos, S., Ward, T., Zhu, W.J.: BLEU: a method for automatic evaluation of machine translation. In: Proceedings of the 40th Annual Meeting on Association for Computational Linguistics, pp. 311–318. Association for Computational Linguistics (2002)

[21] Park, D.H., Hendricks, L.A., Akata, Z., Schiele, B., Darrell, T., Rohrbach, M.: Multimodal explanations: justifying decisions and pointing to the evidence. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2018)

[22] Srivastava, N., Hinton, G.E., Krizhevsky, A., Sutskever, I., Salakhutdinov, R.: Dropout: a simple way to prevent neural networks from overfitting. J. Mach. Learn. Res. 15(1), 1929–1958 (2014)

[23] Urmson, C.: Autonomous driving in urban environments: boss and the urban challenge. J. Field Robot. 25(8), 425–466 (2008)

[24] Vedantam, R., Lawrence Zitnick, C., Parikh, D.: CIDEr: consensus-based image description evaluation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 4566–4575 (2015)

[25] Venugopalan, S., Rohrbach, M., Donahue, J., Mooney, R., Darrell, T., Saenko, K.: Sequence to sequence – video to text. In: Proceedings of the IEEE International Conference on Computer Vision, pp. 4534–4542 (2015)

[26] Xu, H., Gao, Y., Yu, F., Darrell, T.: End-to-end learning of driving models from large-scale video datasets. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 2174–2182 (2017)

[27] Xu, K., et al.: Show, attend and tell: neural image caption generation with visual attention. In: International Conference on Machine Learning, pp. 2048–2057 (2015)

[28] Zeiler, M.D., Fergus, R.: Visualizing and understanding convolutional networks. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8689, pp. 818–833. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10590-1_53

Acknowledgements

This work was supported by DARPA XAI program and Berkeley DeepDrive.


Copyright information

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Kim, J., Rohrbach, A., Darrell, T., Canny, J., Akata, Z. (2018). Textual Explanations for Self-Driving Vehicles. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds) Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science, vol. 11206. Springer, Cham. https://doi.org/10.1007/978-3-030-01216-8_35


DOI: https://doi.org/10.1007/978-3-030-01216-8_35


Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01215-1

Online ISBN: 978-3-030-01216-8
