Abstract

This paper proposes an automatic segmentation and counting method for insect monitoring in orchards. The image-processing method consists of three steps: (1) detection of touching insects, (2) recursive search for local segmentation points using boundary tracking and morphological thinning, and (3) construction of segmentation lines using a shortest-distance criterion. Algorithm performance was evaluated in terms of segmentation ratio and segmentation accuracy. Compared with the watershed method, the proposed method improved both criteria: its average segmentation ratio was 1.03 and its average segmentation accuracy was 96.7%. The results demonstrate that the proposed method is an alternative solution for insect monitoring in integrated pest management.

1 Introduction

Monitoring orchard pest population dynamics by surveying pest species and assessing pest density is very important for integrated pest management (IPM). Lamps and attractants are widely used to trap insects in orchards for monitoring fruit pests. The trapped insects are then identified and counted manually by plant protection technicians, and the resulting counts are used to estimate the pest density in the orchards. However, the task is time-consuming and tedious, and pest scouting expertise is limited, especially near the peak of pest occurrence [1]. With the development of information and image technologies, this situation can be alleviated by image-based automated pest identification and counting methods [2, 3].

Several segmentation methods have been applied to insect segmentation and counting, but most of them focus on segmenting individual insects from the background [4]. For instance, the watershed algorithm was used to segment insects from background images [5]. Different color models were tested to determine which color transformations should be applied before segmenting whiteflies from soybean leaves [6]. Other researchers proposed a method that separates whiteflies in the field environment from healthy leaf regions and background using the discrete cosine transform and region growing [7].

Segmentation of touching insects has seldom been studied. A watershed algorithm based on mathematical morphology was used to separate touching stored-grain pests in images for pest detection [8]. Mathematical morphology combined with watershed segmentation was also used to suppress the influence of the background [9], in a method designed for large insects such as locusts. Another method combined optical flow with NCuts to segment touching insects, but it required the table to be tapped manually [10].

As the foregoing analysis shows, although the watershed algorithm has been widely applied in image segmentation [11,12,13], it suffers from problems such as over-segmentation, especially under non-uniform illumination and field conditions, as in field trap images. In addition, the optical flow segmentation method requires the plate to be tapped lightly by hand to extract the flow feature, which makes it unsuitable for automatic segmentation. In most situations, a touching insect region and an isolated insect in the trap image have different shapes. Based on this shape difference, this study proposes a novel method that uses morphological thinning and separation point location to segment and count insects in trap images automatically.

The objectives of this study were (I) to discriminate touching insect regions from isolated ones, and (II) to develop an image segmentation algorithm that detects local segmentation points in touching regions. The study shows that morphological thinning combined with a recursive procedure can detect the touching locations among different insects. The ultimate aim is a practical method on which a software system for insect counting in the field can be built.

2 Materials and Methods

2.1 Data Acquisition

RGB colour images were captured by pheromone traps (Fig. 1) installed at multiple locations in the national research and demonstration base of precision agriculture in Beijing, China.

As shown in Fig. 1, a JV205 camera (Sony Corporation, Japan) is fixed at the top of the trap and takes a top view of the sticky trap. The distance from the camera lens to the sticky trap surface is 40 cm. The sticky trap is 28 cm long and 22 cm wide. A 5 cm high gap (red part in Fig. 1) surrounds the sticky trap, through which insects attracted by the pheromone lure enter and become stuck on the trap. The target insect in this study is Grapholitha molesta (Busck). The camera was set to capture an image at 5:30 am each day, when the influence of strong sunlight is reduced, so that good-quality images could be obtained. The sticky trap was replaced with a new one every week. The experiment ran from April 20th to August 20th, 2015. For data analysis, 100 images were selected and split randomly into two sets: 50 images for the training set and the other 50 for the test set. The training set was used for parameter determination and the test set for the segmentation experiment. The digital images were captured in JPEG format at \( 3216 \times 2136 \) pixels and then rescaled to \( 804 \times 534 \) pixels to improve computational efficiency and reduce memory use. A sample image from this environment is shown in Fig. 3a.

2.2 Image Preprocessing

Trap images were collected in real production environments, so imaging conditions differed from one point in time to another. This is most apparent in the illumination, as can be seen in Fig. 3a. To separate the insects from the background effectively, the image was preprocessed following the flowchart in Fig. 2. First, the original RGB image was converted into the HSV color space and the H channel was extracted as a gray image. As Fig. 3b shows, the H channel image has relatively distinguishable contrast between background and insects. Then, the H channel image was converted into a binary image (Fig. 3c) using the automatic threshold method [14]. A median filter was then applied to remove noise areas and smooth insect areas (Fig. 3d); each output pixel is the median value in the 5-by-5 neighborhood around the corresponding input pixel. Finally, the final binary image (Fig. 3e) was obtained after clearing the regions connected to the image border using a morphological method.
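The preprocessing chain can be sketched in plain Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the function names are hypothetical, the image is a list of rows of (R, G, B) tuples, insects are assumed to have hue values above the threshold (the polarity may need to be inverted for real trap images), and the final border-clearing step is omitted.

```python
import colorsys

def hue_channel(rgb_rows):
    """Map rows of (R, G, B) tuples in 0..255 to rows of hue values in 0..255."""
    return [[round(colorsys.rgb_to_hsv(r / 255, g / 255, b / 255)[0] * 255)
             for (r, g, b) in row] for row in rgb_rows]

def otsu_threshold(gray_rows):
    """Return the gray level that maximizes the between-class variance (Otsu)."""
    hist = [0] * 256
    for row in gray_rows:
        for v in row:
            hist[v] += 1
    total = sum(hist)
    sum_all = sum(level * n for level, n in enumerate(hist))
    best_t, best_score = 0, -1.0
    w0 = sum0 = 0
    for t in range(256):
        w0 += hist[t]            # pixel count of the class <= t
        sum0 += t * hist[t]
        w1 = total - w0          # pixel count of the class > t
        if w0 == 0 or w1 == 0:
            continue
        # Proportional to the between-class variance (constant factor dropped).
        score = w0 * w1 * (sum0 / w0 - (sum_all - sum0) / w1) ** 2
        if score > best_score:
            best_t, best_score = t, score
    return best_t

def median_filter(binary_rows, size=5):
    """Median filter with a size-by-size window, truncated at the image border."""
    h, w, k = len(binary_rows), len(binary_rows[0]), size // 2
    out = [[0] * w for _ in range(h)]
    for r in range(h):
        for c in range(w):
            window = sorted(binary_rows[rr][cc]
                            for rr in range(max(0, r - k), min(h, r + k + 1))
                            for cc in range(max(0, c - k), min(w, c + k + 1)))
            out[r][c] = window[len(window) // 2]
    return out

def preprocess(rgb_rows):
    """H channel -> automatic threshold -> 5-by-5 median filter."""
    hue = hue_channel(rgb_rows)
    t = otsu_threshold(hue)
    # Assumption: insect pixels have hue above the threshold.
    binary = [[int(v > t) for v in row] for row in hue]
    return median_filter(binary, size=5)
```

On a synthetic image with a uniform background, one compact blob, and one isolated noise pixel, `preprocess` keeps the core of the blob and removes the noise pixel, which is the role the median filter plays in the flowchart.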

2.3 Segmentation Algorithm

As can be seen in Fig. 4e, there are some touching insect regions that need to be separated before counting. Therefore, a segmentation algorithm consisting of touching region detection, local separation point search, and segmentation line construction was proposed to split the touching insects.

2.3.1 Touching Region Detection

Note that, to improve the counting accuracy, this study considers only the end-to-end touching case; the stacked touching case is excluded from the algorithm. In the end-to-end touching case, the boundary of a touching area is more complex than that of a single insect (Fig. 4). Therefore, a shape factor [15], which describes the complexity of an object boundary, is used as the criterion for deciding whether a region in the binary image is a touching region. The shape factor is denoted as \( SF \):

\[ SF = \frac{4\pi A}{C^{2}} \]

where \( A \) denotes the area of a connected region and \( C \) denotes its perimeter. In a binary image, a touching region (object pixels) may contain holes (background pixels) in its interior, so the perimeter \( C \) is the sum of the outer and inner perimeters.

A boundary with more complex concavities has a longer perimeter and therefore a smaller \( SF \). For example, in Fig. 4 the \( SF \) values of region 1, region 2 and region 3 are 0.56, 0.57 and 0.24, respectively. The \( SF \) value of touching region 3 is smaller than those of the single regions 1 and 2. A threshold on \( SF \) can therefore be used as the criterion for deciding whether region \( i \) is a touching region.
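The touching-region test can be sketched as below. The sketch assumes the common circularity form \( SF = 4\pi A / C^{2} \), which is consistent with \( SF \) shrinking as the perimeter grows but is an assumption here. The region is represented as a set of (row, column) pixels, and the perimeter is approximated by counting exposed pixel edges, so hole boundaries are included automatically. The function names are hypothetical, and the default threshold 0.51 is the value reported in Sect. 3.1; it may not transfer to this simple perimeter estimate.

```python
import math

def shape_factor(region):
    """Assumed form SF = 4*pi*A / C**2 for a set of (row, col) object pixels."""
    area = len(region)
    # Count pixel edges that face the background: outer plus inner (hole) perimeter.
    perimeter = sum(1
                    for (r, c) in region
                    for q in ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))
                    if q not in region)
    return 4 * math.pi * area / perimeter ** 2

def is_touching(region, sf0=0.51):
    """A region is treated as touching when its shape factor falls below sf0."""
    return shape_factor(region) < sf0
```

With this estimate a solid square gives \( \pi/4 \approx 0.79 \), while two squares joined by a thin bridge give a much smaller value, mirroring the contrast between single and touching regions described above.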

2.3.2 Local Separation Points Search

Once a touching region has been detected, the next step is to locate the separation points. In the end-to-end touching case, if the boundary of a touching region is processed recursively with the morphological thinning operation, the region will at some iteration separate into different parts at certain points. The local separation points are the points at which the touching region is about to separate. Figure 5 shows four standard regions that will be split by one more morphological thinning pass. The four types of local separation points are marked with red rectangles in the figure.

The local separation points in Fig. 5c are an extended form of the one in Fig. 5a. They share the feature that each point is traversed twice within the same boundary traversal, and they are called the first standard local separation points. Likewise, the local separation points in Fig. 5d are an extended form of those in Fig. 5b. In these two cases, the local separation points are 4-neighborhood points, each traversed once within the same boundary traversal; they are called the second standard local separation points. Based on these features, the separation point search procedure shown in Fig. 6 was proposed. The detailed steps are as follows:

- Step 1: Number each point on the current boundary and traverse these points;

- Step 2: If point \( S \) is traversed twice, with traversal numbers \( i_{s} \) and \( j_{s} \), then \( S \) is a candidate first standard local separation point; go to Step 4. Otherwise go to Step 3;

- Step 3: If point \( S \) and its neighborhood point \( R \) are in the same traversal, then \( S \) is a candidate second standard local separation point; go to Step 5. Otherwise go to Step 7;

- Step 4: Calculate the absolute difference for the first-type candidate, \( diff = \left| {i_{s} - j_{s} } \right| \); go to Step 6;

- Step 5: Calculate the absolute difference for the second-type candidate, \( diff = \left| {N_{s} - N_{r} } \right| \), where \( N_{s} \) and \( N_{r} \) are the traversal numbers of points \( S \) and \( R \), respectively;

- Step 6: If \( T_{1} < diff < T_{2} \), the candidate point \( S \) is accepted as a local segmentation point; otherwise it is a noise point and is excluded. \( T_{1} \) and \( T_{2} \) are thresholds determined by trial and error: \( T_{1} = \frac{L}{5} \), \( T_{2} = \frac{4 \times L}{5} \), where \( L \) is the perimeter of the current traversal boundary;

- Step 7: Calculate the area of the current region, denoted current_area. If current_area is less than min_area, the search for the current region ends; otherwise apply the morphological thinning operation once more and go to Step 1. Here min_area denotes the minimum area of a single insect in the experiment images.
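Steps 2 to 6 can be sketched for a single boundary traversal as follows. The sketch assumes the boundary has already been traced into an ordered list of (row, column) points, so the boundary tracing of Step 1 is not shown, and it takes \( L \) to be the number of points in that list. The function name and return format are hypothetical.

```python
def find_separation_points(boundary):
    """Classify candidates along one traced boundary.

    boundary: list of (row, col) points in traversal order; the list index
    is the traversal number. Returns (first_type, second_type), where
    first_type holds points visited twice and second_type holds pairs of
    4-neighbouring points, both filtered by T1 < diff < T2.
    """
    length = len(boundary)                 # L, perimeter in boundary points
    t1, t2 = length / 5, 4 * length / 5    # thresholds from Step 6

    visits = {}
    for number, point in enumerate(boundary):
        visits.setdefault(point, []).append(number)

    # Steps 2, 4, 6: points traversed twice.
    first_type = [p for p, idx in visits.items()
                  if len(idx) == 2 and t1 < abs(idx[0] - idx[1]) < t2]

    # Steps 3, 5, 6: pairs of 4-neighbours, each traversed once.
    second_type = []
    for (r, c), idx in visits.items():
        if len(idx) != 1:
            continue
        # Only look right and down so that every pair is reported once.
        for q in ((r, c + 1), (r + 1, c)):
            if q in visits and len(visits[q]) == 1:
                diff = abs(idx[0] - visits[q][0])
                if t1 < diff < t2:
                    second_type.append(((r, c), q))
    return first_type, second_type
```

The thresholds do the noise rejection: consecutive boundary points are always neighbours, but their traversal numbers differ by 1 (or by nearly \( L \) at the wrap-around), so they fall outside \( (T_{1}, T_{2}) \) and are discarded.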

The local separation point search is illustrated with a sample image in Fig. 7. The result of image preprocessing is shown in Fig. 7a. The \( SF \) values of all regions in Fig. 7a are then calculated and marked in red in Fig. 7b. Using the threshold value \( SF_{0} \), three touching insect regions are detected (Fig. 7c). The local separation points can then be searched using the procedure in Fig. 6. For instance, processing begins with the first touching region (Fig. 7d). No local separation points are detected until the boundary has been thinned morphologically 10 times; an enlarged view of this stage is shown in Fig. 7e. After one more thinning pass, this touching region separates into three regions (Fig. 7f). Note that the three insects in this touching region separate at the same time, which is not the typical case. In most cases, the insects in a touching region separate sequentially as the recursion proceeds. The recursive procedure terminates only when the area of the current region is less than the threshold min_area. In this example, after all sub-regions have been traversed, there are only three local separation points in the touching region, marked with red plus symbols in Fig. 7g.

Fig. 7. Example of the local separation point search. (a) binary image after image preprocessing, (b) result of shape factor calculation, (c) all detected touching regions, (d) one of the touching regions, (e) result after 10 boundary stripping passes, (f) result after 11 boundary stripping passes, and (g) result of the local separation point search for one touching region (Color figure online)
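The repeated boundary stripping and the min_area stopping rule of Step 7 can be sketched as below. This is a simplified illustration: stripping is modelled as removing every pixel that has a 4-neighbour outside the region, which is an assumption about the thinning operator, and the function names are hypothetical. A region is again a set of (row, column) pixels.

```python
def neighbors4(r, c):
    return ((r - 1, c), (r + 1, c), (r, c - 1), (r, c + 1))

def strip_boundary(region):
    """Remove every pixel that has at least one 4-neighbour outside the region."""
    return {p for p in region if all(q in region for q in neighbors4(*p))}

def count_components(region):
    """Count 4-connected components with an iterative flood fill."""
    seen, count = set(), 0
    for start in region:
        if start in seen:
            continue
        count += 1
        seen.add(start)
        stack = [start]
        while stack:
            r, c = stack.pop()
            for q in neighbors4(r, c):
                if q in region and q not in seen:
                    seen.add(q)
                    stack.append(q)
    return count

def strips_until_split(region, min_area):
    """Return the number of stripping passes after which the region first
    splits, or None if its area drops below min_area before any split."""
    passes = 0
    while len(region) >= min_area:
        region = strip_boundary(region)
        passes += 1
        if count_components(region) > 1:
            return passes
    return None
```

In the paper's example the split appears after 11 passes; in a full implementation each resulting sub-region would be processed again in the same way until its area is below min_area.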

2.3.3 Segmentation Lines Implementation

After all local segmentation points have been detected, separation lines through them are constructed to split the touching region into isolated insects. The construction of a segmentation line is shown in Fig. 8. Each segmentation line runs from the local segmentation point to the boundary point nearest to it. In Fig. 8, once the local segmentation point \( S \) has been located, the segmentation lines \( SP \) and \( SQ \) are drawn to split the touching insects. The key task is to find points \( P \) and \( Q \) among the boundary points. The search consists of the following steps:

- Step 1: Among all boundary points, \( P \) is the point with the shortest Euclidean distance to point \( S \);

- Step 2: Draw line \( AB \) perpendicular to line \( SP \);

- Step 3: Exclude the points on the \( \widehat{APB} \) segment and search the opposite boundary segment for point \( Q \), which likewise has the shortest Euclidean distance to point \( S \).
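The three steps can be sketched as follows. The sketch assumes that line \( AB \) passes through \( S \), so that "the opposite boundary segment" is the set of boundary points lying on the other side of that line from \( P \); this reading, the function name, and the point format are assumptions. The side test uses the sign of a dot product with the direction from \( S \) to \( P \).

```python
import math

def segmentation_endpoints(s, boundary):
    """Return (P, Q) for local segmentation point s.

    s: (row, col) of the local segmentation point, assumed not on the boundary.
    boundary: iterable of (row, col) points on the original region boundary.
    Q is None when no boundary point lies on the far side of line AB.
    """
    boundary = list(boundary)

    def dist(p):
        return math.hypot(p[0] - s[0], p[1] - s[1])

    # Step 1: nearest boundary point.
    p = min(boundary, key=dist)
    direction = (p[0] - s[0], p[1] - s[1])

    # Steps 2 and 3: keep only points on the opposite side of the line
    # through s perpendicular to SP (negative projection onto SP).
    opposite = [b for b in boundary
                if (b[0] - s[0]) * direction[0] + (b[1] - s[1]) * direction[1] < 0]
    q = min(opposite, key=dist) if opposite else None
    return p, q
```

The segmentation lines are then the straight segments from \( S \) to \( P \) and from \( S \) to \( Q \).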

2.4 Algorithm Evaluation and Criterion

To evaluate its performance, the proposed method is compared with the marker-controlled watershed algorithm [16, 17]. The watershed concept is a classic tool borrowed from topography: a watershed is the line that determines into which region a drop of water will flow. However, over-segmentation is an inherent problem of the watershed method, caused mostly by noise and quantization error. The problem is more serious in this study because the insects have many spots on their bodies and wings. To reduce over-segmentation in the watershed method, adjacent minima were merged using morphological dilation with a disk-shaped structuring element with a radius of 5 pixels.

The segmentation ratio (SR) and segmentation accuracy (SA) are used as the evaluation indexes. They are calculated by the following equations:

\[ SR = \frac{N_{2}}{N_{1}} \]

\[ SA = \frac{N_{c}}{N_{2}} \times 100\% \]

where \( N_{1} \) denotes the manual counting result, \( N_{2} \) denotes the number of insects obtained by automatic segmentation, and \( N_{c} \) denotes the number of correct segmentations in \( N_{2} \).

The segmentation ratio \( SR \) indicates the degree of segmentation: \( SR > 1 \) indicates over-segmentation, and \( SR < 1 \) indicates that the result contains under-segmented regions.

The segmentation accuracy \( SA \) is the percentage of correct segmentations in the total segmentation result, that is, the proportion of all segmentation positions that are correct.
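A short sketch of the two indexes follows, assuming the forms \( SR = N_{2}/N_{1} \) and \( SA = N_{c}/N_{2} \times 100\% \) implied by the definitions above. The function names are hypothetical, and the counts used to exercise them are illustrative, not taken from the experiment.

```python
def segmentation_ratio(n_manual, n_auto):
    """SR = N2 / N1; above 1 means over-segmentation, below 1 under-segmentation."""
    return n_auto / n_manual

def segmentation_accuracy(n_correct, n_auto):
    """SA = Nc / N2, expressed as a percentage."""
    return 100.0 * n_correct / n_auto
```

For example, an image with 100 insects counted by hand and 103 regions produced automatically has \( SR = 1.03 \), which signals slight over-segmentation regardless of how many of the 103 regions are correct; \( SA \) captures that second aspect.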

3 Results and Discussion

3.1 Threshold \( SF_{0} \) Determination

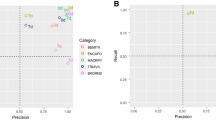

As discussed in Sect. 2.3.1, \( SF_{0} \) is an important parameter for touching region detection. Statistics of \( SF \), namely its mean and standard error, were used to determine the threshold \( SF_{0} \) from 50 test images. \( SF \) was calculated for all regions in each test image, and the mean and standard error for touching regions and single regions in each image are summarized in Fig. 9.

As Fig. 9a shows, the mean \( SF \) of touching regions differs markedly from that of single regions in every test image. Furthermore, as shown in Fig. 9b, the standard error fluctuates more for touching regions than for single regions, which results from the various ways in which insects touch. Therefore, borrowing the idea of the Otsu method [14], a maximum between-class variance method (with touching regions and single regions as the two classes) was used to calculate the threshold \( SF_{0} \), giving a value of 0.51.
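One way to apply the maximum between-class variance idea directly to a list of \( SF \) values is sketched below. The exact procedure used in the study is not given, so this is an assumption: every gap between consecutive sorted values is tried as a split, and the midpoint of the best gap is returned. The function name and the sample values are hypothetical.

```python
def between_class_threshold(values):
    """Return the split of a 1-D sample that maximizes between-class variance."""
    xs = sorted(values)
    n = len(xs)
    best_t, best_score = None, -1.0
    for k in range(1, n):
        if xs[k] == xs[k - 1]:
            continue                      # no gap to place a threshold in
        mean_low = sum(xs[:k]) / k
        mean_high = sum(xs[k:]) / (n - k)
        # Proportional to the between-class variance (constant factor dropped).
        score = k * (n - k) * (mean_low - mean_high) ** 2
        if score > best_score:
            best_score = score
            best_t = (xs[k - 1] + xs[k]) / 2
    return best_t
```

For the illustrative sample `[0.20, 0.24, 0.28, 0.55, 0.56, 0.57, 0.60]` the best split lies between 0.28 and 0.55; regions with \( SF \) below the returned threshold would be treated as touching.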

3.2 Field Samples Segmentation Experiment

In this experiment, the test images were used to compare the performance of the proposed method with that of the marker-controlled watershed method. As shown in Fig. 10a and Table 1, although both methods over-segment (SR > 1), the proposed method improves on the watershed method: the mean segmentation ratio is reduced by 0.04. The proposed method also performs better in accuracy, with a higher mean (96.7%) and a lower variance (1.027%).

As marked with the red ellipse in Fig. 11, over-segmentation in the proposed method was mainly caused by a large insect being detected as a touching region when it was actually a single non-target insect. Some over-segmentation will therefore occur when the trap images contain insects larger than the target insect. However, the insects in this study are attracted by sex pheromone, so they are of similar size within a generation and there are very few non-target objects. Furthermore, the proposed method reduced over-segmentation at locations such as the one marked with the red arrow in Fig. 11c. Because illumination and spots vary across the insect's surface, the watershed method over-segments in such situations.

The assumption that touching insects form an object of higher complexity than isolated ones holds in the majority of cases. However, some insects may cluster in a nearly parallel, regular arrangement and be detected as an isolated insect by the threshold method described in Sect. 2.3.1. This is why the proposed method shows some under-segmentation, and it is a limitation of the method in such situations. On the other hand, the proposed method is easy to implement and program, which makes it well suited to embedded platforms. It is therefore a more practical option for building a software system for insect counting in the field.

4 Conclusion

A new algorithm for segmenting and counting insects in an automatic insect identification system was proposed. The processing algorithm consists of: (1) touching region determination, (2) boundary tracking and local segmentation point search, and (3) segmentation line construction. The main achievements of this study are:

1. Touching region detection: a shape factor was used to describe the boundary complexity of regions in the binary image and was verified by statistical analysis. In addition, a maximum between-class variance method was used to calculate the shape factor threshold, which decides whether a region is a touching object.

2. Local segmentation points search: four types of local segmentation points were illustrated, and a recursive algorithm based on morphological thinning was designed to search for them.

3. Segmentation implementation: taking the local segmentation point as a base point, two further points on the original boundary of the touching region were found using the shortest distance method. The touching region was split by connecting the local segmentation point with these two boundary points.

4. Performance analysis: compared with the watershed method, the proposed method reduced over-segmentation and increased segmentation accuracy by more than 3%. It is tailored to the segmentation of sex-pheromone trap images and is practical for embedded platforms.

As an extension of this research, the segmentation method is currently being tested on multiple pest species. In future work, the segmentation method developed here can be extended to applications such as pest population estimation.

References

1. Wen, C., Guyer, D.: Image-based orchard insect automated identification and classification method. Comput. Electron. Agric. 89, 110–115 (2012)

2. Kang, S.-H., Cho, J.-H., Lee, S.-H.: Identification of butterfly based on their shapes when viewed from different angles using an artificial neural network. J. Asia-Pac. Entomol. 17(2), 143–149 (2014)

3. Kaya, Y., Kayci, L., Uyar, M.: Automatic identification of butterfly species based on local binary patterns and artificial neural network. Appl. Soft Comput. 28, 132–137 (2015)

4. Chen, Y., Hu, X., Zhang, C.: Algorithm for segmentation of insect pest images from wheat leaves based on machine vision. Trans. Chin. Soc. Agric. Eng. 23(12), 187–191 (2007)

5. Xia, C., et al.: Automatic identification and counting of small size pests in greenhouse conditions with low computational cost. Ecol. Inform. 29(Part 2), 139–146 (2015)

6. Barbedo, J.G.A.: Using digital image processing for counting whiteflies on soybean leaves. J. Asia-Pac. Entomol. 17(4), 685–694 (2014)

7. Zhang, S., et al.: Algorithm for segmentation of whitefly images based on DCT and region growing. Trans. Chin. Soc. Agric. Eng. 29(17), 121–128 (2013)

8. Wang, Y.-Y., Peng, Y.-J.: Application of watershed algorithm in image of food insects. J. Shandong Univ. Sci. Technol. 26(2), 79–82 (2007)

9. Weng, G.: Monitoring population density of pests based on mathematical morphology. Trans. Chin. Soc. Agric. Eng. 24(11), 135–138 (2008)

10. Yao, Q., et al.: Segmentation of touching insects based on optical flow and NCuts. Biosys. Eng. 114(2), 67–77 (2013)

11. Zhong, Q.F., et al.: A novel segmentation algorithm for clustered slender-particles. Comput. Electron. Agric. 69(2), 118–127 (2009)

12. Aymen, M., et al.: Automatic image segmentation of nuclear stained breast tissue sections using color active contour model and an improved watershed method. Biomed. Signal Process. Control 8(5), 421–436 (2013)

13. Zhang, X.D., et al.: A marker-based watershed method for X-ray image segmentation. Comput. Methods Programs Biomed. 113(3), 894–903 (2014)

14. Otsu, N.: A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 9(1), 62–66 (1979)

15. Lao, F., et al.: Recognition and conglutination separation of individual hens based on machine vision in complex environment. Trans. Chin. Soc. Agric. Mach. 44(4), 213–216 (2013)

16. Gaetano, R., et al.: Marker-controlled watershed-based segmentation of multiresolution remote sensing images. IEEE Trans. Geosci. Remote Sens. 53(6), 2987–3004 (2015)

17. Xu, L., Lu, H.: Automatic morphological measurement of the quantum dots based on marker-controlled watershed algorithm. IEEE Trans. Nanotechnol. 12(1), 51–56 (2013)

Acknowledgements

This research was supported by Beijing Natural Science Foundation (6164034) and National Natural Science Foundation of China (61601034). All of the mentioned support and assistance are gratefully acknowledged.

Copyright information

© 2019 IFIP International Federation for Information Processing

About this paper

Cite this paper

Li, W., Chen, M., Li, M., Sun, C., Wang, L. (2019). Automated Counting of Sex-Pheromone Attracted Insects Using Trapped Images. In: Li, D., Zhao, C. (eds) Computer and Computing Technologies in Agriculture XI. CCTA 2017. IFIP Advances in Information and Communication Technology, vol 545. Springer, Cham. https://doi.org/10.1007/978-3-030-06137-1_5

DOI: https://doi.org/10.1007/978-3-030-06137-1_5

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-06136-4

Online ISBN: 978-3-030-06137-1

eBook Packages: Computer Science (R0)