Abstract

Programmable manufacturing systems capable of self-replication closely coupled with (and likewise capable of producing) energy conversion subsystems and environmental raw materials collection and processing subsystems (e.g. robotics) promise to revolutionize many aspects of technology and economy, particularly in conjunction with molecular manufacturing. The inherent ability of these technologies to self-amplify and scale offers vast advantages over conventional manufacturing paradigms, but if poorly designed or operated could pose unacceptable risks. To ensure that the benefits of these technologies, which include significantly improved feasibility of near-term restoration of preindustrial atmospheric CO2 levels and ocean pH, environmental remediation, significant and rapid reduction in global poverty and widespread improvements in manufacturing, energy, medicine, agriculture, materials, communications and information technology, construction, infrastructure, transportation, aerospace, standard of living, and longevity, are not eclipsed by either public fears of nebulous catastrophe or actual consequential accidents, we propose safe design, operation and use paradigms. We discuss design of control and operational management paradigms that preclude uncontrolled replication, with emphasis on the comprehensibility of these safety measures in order to facilitate both clear analyzability and public acceptance of these technologies. Finite state machines are chosen for control of self-replicating systems because they are susceptible to comprehensive analysis (exhaustive enumeration of states and transition vectors, as well as analysis with established logic synthesis tools) with predictability more practical than with more complex Turing-complete control systems (cf. undecidability of the Halting Problem) [1]. 
Organizations must give unconditional priority to safety and do so transparently and auditably, with decision-makers and actors continuously evaluated systematically; some ramifications of this are discussed. Radical transparency likewise reduces the chances of misuse or abuse.

Keywords

- Self-replicating systems

- Molecular nanotechnology

- Finite state machines

- Molecular assembler

- Replicator safety

- Exponential manufacturing

- Nanoscale 3-D printing

- Material proficiency

- Reliability engineering

- Environmental remediation

- Climate remediation

- Infrastructure

- System-of-systems analysis

- AI-boxing problem

- Existential threats

- Autopoiesis

1 Introduction

1.1 Premise

Emerging technologies offer great increases in our capabilities to confront both emerging and longstanding challenges, but must be implemented and deployed in ways that are demonstrably safe and do not create threats or problems commensurate with these enhanced capabilities. While artificial intelligence is widely seen as fitting this description, it applies even more strongly to self-replicating manufacturing systems. Programmable, self-sufficient self-replicating manufacturing systems can potentially scale productive capabilities rapidly to globally significant extents, and will enable the restoration of preindustrial atmospheric CO2 levels and ocean pH as well as universal provision of basic human needs at economies and in time-frames presently not generally thought feasible.

Heretofore, despite even theoretical interest showing feasibility of large-scale practical utility [2], self-replication of man-made productive systems not relying on significant pre-fabrication, let alone closure of the capability for self-sufficient self-replication from environmental raw materials and energy sources, has been largely elusive. The significance of this is many-fold: self-replication of capital equipment (sometimes termed exponential manufacturing) which is capable of customized fabrication combines economies of scale with agility of production; ability to produce high-performance materials yields efficiencies in both materials utilization and energy use (both during production and end-use), and additionally offers options of materials substitution when desired. Direct utilization of abundant raw materials as inputs, close coupling with highly controlled means of collecting raw materials, and the capability of producing photovoltaics or other energy harvesting means enable physical self-sufficiency of these systems and facilitate shorter generation times and better economies. This does not, however, necessarily constitute autonomy, and we discuss how autonomy can be limited, controlled or completely avoided; as discussed herein, control over the complete information required to effect self-replication is the most preferable hard limitation on autonomy of replication or action. When programmable self-replicating productive systems avail themselves of molecular nanofabrication (notably, molecular assemblers or matter compilers), this permits the efficient fabrication of defect-free materials either at the nanoscale or macroscale with improvements in quality, strength, durability (e.g. atomic smoothness minimizing wear) and other properties in addition to feasible miniaturization to the ultimate limits imposed by physics. 
In sum, this convergence of technologies can bring an end to the era of material scarcity and both offer and go beyond material and energy efficiency to enable an era of material proficiency.

Self-replication carries with it not only these and many other important beneficial prospects, but also risks to be avoided, particularly since the technology can inherently self-amplify. Here we show that with the establishment of these capabilities, modes of control and restriction of information can be reliable limitations on properly designed self-replicating systems. Autonomy of action for such systems is limited to constrained subsystems or specified functions, with at least an external input of information required for replication after a specified number of generations (e.g. after n = 10 to 16 generations from an initial seed, with n decreasing according to a schedule with each set of generations, finally to 1 before potential risk effect-size becomes significant.) Beyond error-correction and detection (esp. through hashes—in properly designed systems, errors will never lead to anything like mutations, so there can never be anything like evolution of properly designed systems) this can be accomplished by enforcing (by design) a distinction between the program a parent replicator follows and that which it builds-in to progeny systems: the parent’s program can be execute-only (no-copy, no-write) while the content it provides to progeny systems can for it be read-only (i.e. unmodifiable) and mechanistically constrained to be read a limited number of times (e.g. inability to autonomously rewind a tape or reset an address counter), such that only a specified number of progeny can be produced, themselves with limitations set correspondingly lower such that the number of generations is limited and the maximum population of the final generation is constrained. Once constructed, systems are mechanically unable to effect increase of these values—such limits may even be entailed structurally. 
As an additional mechanism, since there is a necessary mapping between low-level instruction encoding and fabricator design details, programs need not be intelligible to any generation or progeny individual other than that for which it is intended; when read-count-limited, this imposes a strict limit on the population size and composition (capabilities, including inclusive fecundity) in each generation. Of course, designing controls or limits on resource or energy utilization can be further safety measures or check-points.
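The generation and read-count limits described above can be illustrated with a small simulation. This is a minimal sketch under stated assumptions, not the authors' design: the names `Replicator`, `generations_left` and `progeny_quota` are hypothetical, the read-limited tape is modeled as a fixed progeny quota, and each progeny unit simply receives a generation allowance one lower than its parent's.

```python
from dataclasses import dataclass


@dataclass(frozen=True)  # frozen: limits cannot be altered after construction
class Replicator:
    """Hypothetical replicator whose limits are fixed when it is built."""
    generations_left: int  # further generations this lineage may produce
    progeny_quota: int     # times the progeny program can be read (assumed fixed)


def build_progeny(parent: Replicator) -> list[Replicator]:
    """Return the progeny a parent can build; each gets a lower generation limit."""
    if parent.generations_left <= 0:
        return []  # no program content left to pass on: lineage ends here
    child = Replicator(parent.generations_left - 1, parent.progeny_quota)
    return [child for _ in range(parent.progeny_quota)]


def population_by_generation(seed: Replicator) -> list[int]:
    """Count units in each generation, starting from a single seed."""
    sizes = [1]
    current = [seed]
    while current:
        following = [c for p in current for c in build_progeny(p)]
        if following:
            sizes.append(len(following))
        current = following
    return sizes


print(population_by_generation(Replicator(generations_left=3, progeny_quota=2)))
# [1, 2, 4, 8]
```

In this toy model the final-generation population is bounded by the quota raised to the number of permitted generations, and the bound is fixed entirely by values set at construction time.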

While it might be easy to dismiss out-of-control replication scenarios as a mere trope of science fiction, first, since this is well-established in cultural consciousness, public acceptance of these powerful technologies will demand that such concerns be properly addressed and allayed, and, second, there are natural phenomena such as invasive species, cancer and viral infection which show these concerns to be rooted not only in fiction. These concerns thus set important requirements on acceptable large-scale self-replicating manufacturing systems. Additionally, commonplace experiences of computer crashes and frequent news of information-security breaches justify skepticism until it can be shown that distinctions make these concerns inapplicable.

Here, limitation of system time evolution (in the sense of physical dynamics) through restriction to a simplified state-space is proposed to preclude chaotic dynamics either from developing or from complicating understanding of these systems.

To address these requirements, control systems are made minimally complex to facilitate clear and concise analysis which is relatable to non-experts to demonstrate safety, and systems are operated in contexts which include secure human control at key decision points such as the decision to replicate above a threshold effect size, with information constraints additionally precluding operative progeny systems arising by any other pathway. Organizational contexts for human operators are also discussed. This paper concerns how we can design such systems and organize control thereof to preclude any out-of-control replication scenarios and other accident risks; intentional misuse of advanced technologies represents a separate problem to be treated elsewhere. Finite state machines are selected as a minimally complex and analytically tractable type of control system, and we further restrict these to the subset having simple transition rules. Transitions between states can thus be seen as a linear program that includes only an advance to a next functional state (absolutely or conditionally on inputs or success of the step controlled by the present state), with two exceptions: a step-error-recovery operation (with repetition number limits) where acceptable, and a failure state which initiates entry into a failure condition, causing the system to initiate a “safest-course of action” sequence or to pause until human control instructs otherwise. This paradigm restricts control systems to those to which the halting problem does not fully apply. While the unpredictability of computer glitches is an obvious frame of reference for the larger problem we seek to treat, restriction to finite-state machines yields systems wherein the domain of valid states and control sequences (from program and inputs) is restricted to those for which the halting problem is reduced to a simplified and tractable form. 
The operations of the subject systems are controlled kinematically (at a low level, with desired kinematic vectors mapping simply to state vectors) by signals produced by state machines to operate actuators, and sensors provide inputs to the state machine concerning the results of operations and the environment, with input states making up part of the machine state. While additional inputs might in some instances include outputs from other computational systems of different type performing functions such as image recognition or data analysis, these would only be admitted to system design in this paradigm if well-characterized reliability for a use-case can be established and non-high-confidence outputs or errors are reliably detectable to enable transition to a failure state, and where possible involve heterogeneous redundancy such that coincident systematic errors are made unlikely, but would also only be admitted if used in such a way that their errors lead only to self-limiting conditions, i.e. non-replication until resolved, rather than leading to erroneous replication-permissive states; for these cases, design is similarly restricted to the subspace for which risk analysis is straightforward. Because the number of machine states and accessible or traversable transition pathways can be prespecified or readily determined, the state space and all accessible state-trajectories can be enumerated exhaustively.
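The restricted transition structure described above (advance, bounded retry, failure) lends itself to exhaustive enumeration. The following is a minimal sketch under assumptions of our own choosing: states are modeled as `(operation index, retries used)` pairs plus two terminal states, the input alphabet is reduced to three hypothetical signals (`ok`, `retry`, `fault`), and wait-states are omitted.

```python
from collections import deque

HALT, FAIL = "HALT", "FAIL"
INPUTS = ("ok", "retry", "fault")  # assumed, simplified input alphabet


def step(state, signal, n_steps, max_retries):
    """Transition rule: advance, retry (bounded), or enter the failure state."""
    if state in (HALT, FAIL):
        return state  # terminal states absorb all inputs
    op, retries = state
    if signal == "ok":
        return HALT if op + 1 == n_steps else (op + 1, 0)
    if signal == "retry" and retries < max_retries:
        return (op, retries + 1)
    return FAIL  # fault, or retry budget exhausted


def reachable(n_steps, max_retries):
    """Breadth-first enumeration of every state reachable from the start."""
    start = (0, 0)
    seen, queue = {start}, deque([start])
    while queue:
        s = queue.popleft()
        for sig in INPUTS:
            t = step(s, sig, n_steps, max_retries)
            if t not in seen:
                seen.add(t)
                queue.append(t)
    return seen


def worst_case_transitions(n_steps, max_retries):
    """Longest input sequence before a terminal state is reached."""
    memo = {}

    def longest(s):
        if s in (HALT, FAIL):
            return 0
        if s not in memo:
            memo[s] = 1 + max(
                longest(step(s, sig, n_steps, max_retries)) for sig in INPUTS
            )
        return memo[s]

    return longest((0, 0))


print(len(reachable(3, 2)))          # 11 states: 3 * (2 + 1) working + 2 terminal
print(worst_case_transitions(3, 2))  # 9
```

Because every non-terminal transition strictly increases the `(operation, retries)` pair, the transition graph has no cycles other than the absorbing terminal states, so this model always halts; here the worst case is `n_steps * (max_retries + 1)` transitions.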

Although human error is a frequent cause of accidents, inclusion of humans in all significant decision-points to limit replication can be an important pillar of safe system design and part of the answer to public safety concerns, provided control modalities meaningfully involve adequately informed human oversight, and the organizational structures in which humans involved operate as well as the interfaces they use facilitate rather than undermine the role they serve. For large-scale deployments, where both the economic advantages gained and the extent of damage potentially risked in the event of accident could be large, redundancy of human operators is justified. Particularly in this context, where proper design would make the actual need for intervention unlikely, it will be important to give operators a mix of real and simulation-accident data streams such that training is intrinsically built-in to operating procedures and inattention is both largely prevented and made detectable through redundant cross-checking.

Coda:

An open question is the utility and desirability of augmenting human oversight with artificial intelligence, and modes for maximizing these while precluding added risk. Although in this application we advise against on-line use of artificial general intelligence (AGI) or superintelligence in any capacity other than advisory, if at all, we propose strategies or tactics applicable to the AI-boxing problem in general. Instead, for operations in the present use case, specialized intelligence (artificial idiot savant, AIS) to identify out-of-band parameters or unusual conditions or events is recommended. In particular, executive function is constrained and both learning and operation are observational rather than embodied (i.e. not able to act on surroundings). AGI might instead have a role in simulations and especially as an adversary or generator for challenge scenarios (deep-fake risk scenarios).

1.2 Introduction to Molecular Nanotechnology

The idea of self-replicating machines (which in modern form traces to von Neumann [3], computationally implemented in cellular automata with better capability for parallel operation more recently by Pesano [4] and reviewed by Sipper [5]) and the idea of approaching the physical limits of miniaturization (which traces at least to Richard P. Feynman’s 1959 lecture There’s Plenty of Room at the Bottom: An Invitation to Enter a New Field of Physics [6]) were analyzed rigorously in combination by Drexler [7,8,9], showing these to be within the bounds of physical laws and that exponential fabrication would enable production of high-performance materials and devices in large quantity and with large effect. Physical implementations of macroscale self-replicating systems are found in [10,11,12].

Molecular nanotechnology is principally concerned with building things precisely from individual molecules or atoms; miniaturization is inherent and ultimate, and when reliable mechanosynthetic operations can be employed, precise, uniformly defect-free products become feasible. These characteristics enable the fabrication of high-performance materials, and because the capital equipment for this is both self-replicating and the product of self-replication, these may be realized at highly advantageous economies, with economies similar to those of software creation—design or engineering and operation rather than conventional batch or sequential production are the principal costs.

One of us (EMR) has developed a range of nanoscale fabrication and assembly methods for a range of materials from abundant raw materials found in the environment, suitable for the production of programmable self-replicating manufacturing systems, details of which were also disclosed [13]; see also references therein. Earlier publications by others [14,15,16] have treated theoretical aspects of mechanosynthesis.

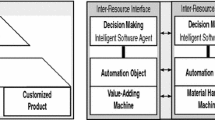

An important class of fabrication operations utilized is convergent fabrication or assembly [17, 18], which, in contrast to additive fabrication by accretion of infinitesimals of material (e.g. epitaxy), combines increasingly larger intermediates in successive steps to yield larger products. In a step of the stereotypical case, one block is surface-bonded to another similar block to yield an intermediate twice the size of the inputs to that step; this is particularly facile for halite materials (see, for example, Fig. 1A and N.i–vii for examples of convergent halite fabrication [13]). In such cases, addition can occur with the help of alignment means (surfaces or rods along which precursors and/or tools are slid, for example) to ensure precise registry, and products of successful addition are readily distinguished (e.g. by twofold difference in size), as is transfer from one manipulator to another (determined by motions or bond-strength). In all cases, high operation reliability to afford low defect rates and uninterrupted operation are important design criteria, as is facile measurability of products.

Fig. 1. Schematic view of a convergent fabrication system composed of subsystems, each performing unit operations that together implement fabrication according to a design; each subsystem is controlled by an individual finite state machine having the state diagram shown. Each operation effectuated by an associated state may include at most n retry transitions (e.g. determined by sensors not detecting desired product formation and transfer) before entering a failure condition; wait-states (w) provide synchronization necessitated e.g. by retries in other coupled subsystems (not shown). Halt-1 represents successful task completion; halt of this subsystem will necessarily occur in ≤ (nN + w) transitions.

It is important to note that while nanosystems are in practice necessarily the products of self-replicating nanosystems (at least in any significant numbers), not all nanosystems or nanodevices are necessarily self-replicating, and in fact most would instead be end-effector devices or systems. Thus, the present focus on risks associated with self-replication does not directly pertain to all nanodevices or nanosystems nor to all uses thereof.

1.3 Prior Treatments of the Problem

A mathematical analysis of the physical limits on out-of-control self-replication by self-replicating machines which consume biomass or compete with biological life for resources such as carbon and sunlight was performed by Freitas [19], which found that in various scenarios uncontrolled replication could reach global proportions with catastrophic ecological consequences in short timeframes if not checked, but concluded that with prior preparation, detection and response strategies could arrest the offending replication processes. However, some detection strategies involve such measures as examining every cell of every organism periodically for potential replicator threats; while this might be appropriate if necessary during an active threat situation, as an ongoing preventive measure for a speculative threat, the justification of such an extensive and invasive monitoring regime seems to us problematic in multiple regards, including the fact that camouflage or evasion strategies could complicate detection. In general, while the analysis of [19] is an important point of reference, as an analysis of practical threats it is limited by restriction to unsophisticated replicators arising either through profound carelessness (the avoidance of which we discuss here and which is addressed in [20]) or through malicious intent with only the sophistication of a script-kiddie exploit.

The Foresight Institute has considered the problem of avoiding catastrophic self-replication and various abuses of these technologies and produced a policy proposal [20]. Its guidelines include a class distinction which we also make (autonomy of self-replication), observe the same logical prerequisites of material, energy and information, and note:

[…] molecular nanotechnology not specifically developed for manufacturing could be implemented as non autonomous replicating systems that have many layers of security controls and designed-in physical limitations. This class of system could potentially be used under controlled circumstances for nanomedicine, environmental monitoring, and specialized security applications. There are good reasons to believe that when designed and operated by responsible organizations with the appropriate quality control, these non autonomous systems could be made arbitrarily safe to operate.

with which we agree, but further advocate the addition of such applications as climate restoration, environmental remediation, agriculture, and water purification, to name a few important cases with ecological, humanitarian, and geopolitical significance, and which themselves relate to real rather than hypothetical crises. Depending on application, distinction according to whether the fabrication/assembly/replication subsystems are motile or sessile affects risk potential and the range of available incident responses.

1.4 Introduction to Reliability Engineering in Organizational Context

When searching for risk management strategies, the focus is often on technical solutions, due to the way risks and failures are traditionally analyzed. However, the actual root of failures in critical engineering systems is often organizational error. Organizational factors can be brought into the analysis by examining linkages between the probability of component failures and relevant features of an organization, allowing crude estimates of the benefits of specific organizational improvements.

One example of an accumulation of organizational problems was the Challenger accident: miscommunication of technical uncertainties, failure to use information from past near-misses and the error in weighing the tradeoffs of safety and maintaining schedule all contributed to the error in judgment contributing to the technical failure of the O-ring in low temperatures [21]. While studies of this story and other major failures, such as Chernobyl, Three Mile Island, the Exxon Valdez accident, and the British Petroleum leakage in the Gulf of Mexico, are instructive, they only provide a narrow slice of potential failures. A systematic analysis of these spectacular failures, combined with partial failures and near-misses, is necessary to begin to classify a probability risk analysis focused on the risk of organizational error. For new emerging technologies such as self-replication and AI, where failure could be compoundingly catastrophic, it is especially imperative to take note of management and organizational errors in other fields to develop the safest possible management of these technologies.

Traditional probability risk analysis models focus on technological failure, specifically the probabilities of initiating events (accidents and overloads), human errors, and the failures of components from a technological standpoint. More recent risk analyses attempt to quantify the effect of management and organizational errors. Examples of organizational factors contributing to management errors include excessive time pressures, failure to monitor hazard signals, and temptation to not provide downtime to properly inspect and refurbish technology. Some poor decisions may be simple human errors, but more often they are caused by the rules and goals set by the corporation. Five particular management problems seem to be at the root of judgment errors: (1) time pressures; (2) observation of warnings of deterioration and signals of malfunctions; (3) design of an incentive system to handle properly the tradeoffs between productivity and safety; (4) learning in a changing environment where there are few incentives to disclose mistakes; and (5) communication and processing of uncertainties [22]. We note that the majority of these management problems involve balancing profitability with safety risks, and that with technologies such as self-replication with long-term safety implications, the organizational emphasis must lie strongly with safety.

Coda:

There is a certain analogy between the failure-modes of organizations at fulfilling their stated aims in general and maximizing safety in particular, and the halting problem; here we refer to this as the organizational halting problem. For present purposes, we note that high transparency, accountability, deconfliction of practical incentives, redundancy and performance checks integrated into ordinary operations can minimize risks from these sources. These must override all other organizational imperatives of any organization which would have any direct or indirect role in the design, construction, operation or use of these technologies, or safety guarantees are undermined. Similarly it is proposed that at least any individual or entity given access to control of the technology, the means to independently bootstrap like technology or to exert power or coercion over any of the former be subject to a regime of radical transparency via sousveillance, including at any pertinent human-machine interfaces, with exceptions only to preserve cryptographic functions. While the broad-ranging implications of these points are acknowledged, the consequences of sub-optimal safety regimes could be broader, and so this is justified.

2 Finite State Machines and Subsystems

The complexity (in bits) of a finite-state machine with s states, v transition vectors between them possibly responding to i possible collective input states may for purposes of the present analysis be bounded as:

which is the complexity which must be elaborated in analysis of such a subsystem. (In reality, the complexity we must address is that of the machine and its environment). When states of a subsystem S can be classed into k functional categories relevant to the overall system, the complexity of that subsystem’s functional state-space in higher-level system analysis is

and the complexity (in bits) of a system comprising j uncorrelated subsystems is

Degrees of freedom are then taken to be

for E environmental states. Thus D is the pertinent scope of analysis. This means that under controlled conditions (such as a factory setting), C dominates, but in environmental or climate restoration applications, for example, the second term is of critical import.

Figure 1 depicts an exemplary state machine (state diagram on right) controlling a convergent fabrication subsystem (the topology of which is shown on the left), the states of which in general may include control variables controlling positions of manipulators, activation of pumps, states of relays, signals from sensors, etc. Programmability occurs through transition rules, i.e. rules for the succession of numerical states according to stored values for each transition vector, which, for example, may determine the translation of a manipulator. Stored values may be effectively hard-wired in the form of digital logic, or may be stored in a memory which may either be read-only or rewritable but would in the latter case only be writable with inputs from external control via a secure channel (authenticated and encrypted), not under autonomous control. Conditional branches are minimized.

As programming occurs through the informational content of transition vectors, and systems are not autonomous, a few different control paradigms are possible. These involve communication of instructions or information to effector systems. One simple case is for systems to have only a limited queue of stored transition vectors, and limitation of replication is effected by not communicating further instructions. (This is an extension of the broadcast architecture; see [23] and Sect. 16.3.2(a) of [9].) However, especially for remote deployments it may be preferable to instead communicate only what is necessary to enable a specific operational sequence segment. In this case, systems contain programs or sets of programs in encrypted form and have only limited memory into which decrypted information may be written. A decryption device generates a synchronizing keystream (using a time-synchronized cryptographic random number generator having a state known to operators), which generates nonce values used in reception of communications and decryption of stored instructions: a key is transmitted in encrypted form, decrypted using a first nonce as a key, and passed to a function (which may be as simple as an XOR) to yield the key to decrypt an operational sequence segment (instruction sequence or transition vector values). The ultimate decryption key is only stored in a register until decryption of the respective cyphertext is complete and then overwritten, and cannot otherwise be read. Thus, instructions do not ever necessarily need to be data-in-flight, control communication bandwidth may be minimized, keys and keystreams are never available outside of the system or operational control sites, replay or other cryptanalytic attacks are precluded, and the systems themselves only have limited cleartext instructions at any given time. This case may be termed the cryptographically restricted program access control mode.
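The key-unwrapping sequence described above can be sketched in a few lines. This is an illustrative toy under explicit assumptions, not the scheme's actual construction: the keystream is assumed to be HMAC-SHA-256 over a shared tick counter, the combining function is assumed to be an XOR with the next keystream value, the stream cipher is a deliberately simple stand-in that is not suitable for real use, and all function names are hypothetical. Message authentication, which a real deployment would need, is omitted.

```python
import hashlib
import hmac


def nonce_at(shared_seed: bytes, tick: int) -> bytes:
    """Time-synchronized keystream value; both ends derive it from the tick."""
    return hmac.new(shared_seed, tick.to_bytes(8, "big"), hashlib.sha256).digest()


def xor(a: bytes, b: bytes) -> bytes:
    """Bytewise XOR, truncated to the shorter input."""
    return bytes(x ^ y for x, y in zip(a, b))


def toy_stream_cipher(key: bytes, data: bytes) -> bytes:
    """Toy XOR cipher (SHA-256 in counter mode); stand-in only, NOT secure."""
    out = bytearray()
    for offset in range(0, len(data), 32):
        pad = hashlib.sha256(key + offset.to_bytes(8, "big")).digest()
        out += xor(data[offset:offset + 32], pad)
    return bytes(out)


def operator_wrap(shared_seed: bytes, tick: int, segment_key: bytes) -> bytes:
    """Operator side: prepare the transmitted key for a given tick."""
    inner = xor(segment_key, nonce_at(shared_seed, tick + 1))  # undo the XOR step
    return xor(inner, nonce_at(shared_seed, tick))             # encrypt under first nonce


def device_unlock(shared_seed: bytes, tick: int, wrapped: bytes, stored: bytes) -> bytes:
    """Device side: recover one stored instruction segment, then wipe the key."""
    inner = xor(wrapped, nonce_at(shared_seed, tick))          # decrypt with first nonce
    key_register = bytearray(xor(inner, nonce_at(shared_seed, tick + 1)))
    cleartext = toy_stream_cipher(bytes(key_register), stored)
    for i in range(len(key_register)):                         # overwrite after use
        key_register[i] = 0
    return cleartext


seed = b"shared-generator-state"            # hypothetical shared state
segment_key = hashlib.sha256(b"segment-7").digest()
segment = b"ADVANCE; BOND; TRANSFER"
stored = toy_stream_cipher(segment_key, segment)  # ciphertext held on the device

wrapped = operator_wrap(seed, tick=41, segment_key=segment_key)
print(device_unlock(seed, 41, wrapped, stored) == segment)  # True
print(device_unlock(seed, 42, wrapped, stored) == segment)  # False: replay at a later tick
```

Only the wrapped key crosses the channel; the instruction segment itself stays on the device in encrypted form, and a wrapped key replayed at a different tick decrypts to unusable data in this model. Zeroing a `bytearray` in Python illustrates the overwrite step but does not guarantee that no copies remain in memory.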

We note that because advanced molecular nanotechnology is likely to increase the rate of progress towards realizing any viable quantum computing scheme, quantum cryptanalysis must be considered part of the threat model. Quantum-resistant authenticated encryption, or alternatively quantum-cryptographic authenticated encryption, would be appropriate to protect the integrity of control communications and telemetry and to preclude corresponding attack vectors.

An alternative method to analyze and quantitate the complexity of such control systems is that of cyclomatic complexity, in which retry cycles employed here each constitute a linearly independent cycle. This likewise reveals the simplicity of this machine control paradigm.
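As a rough illustration, McCabe's cyclomatic complexity M = E − N + 2P (edges, nodes, connected components) can be computed for a linear state diagram of the kind discussed here. The sketch below is an assumption-laden model rather than the diagram of Fig. 1: each operation state is given an advance edge, a retry self-loop and an edge to a shared failure state, and the formula is applied directly to the state diagram with the halt and failure states treated as ordinary nodes, which is a simplification.

```python
def linear_fsm_edges(n_ops: int) -> list[tuple[str, str]]:
    """Edges of a linear state diagram: advance, retry self-loop, fail per operation."""
    edges = []
    for k in range(n_ops):
        here = f"op{k}"
        following = f"op{k + 1}" if k + 1 < n_ops else "halt"
        edges += [(here, following), (here, here), (here, "fail")]
    return edges


def cyclomatic_complexity(edges: list[tuple[str, str]], components: int = 1) -> int:
    """McCabe's M = E - N + 2P for a graph given as an edge list."""
    nodes = {v for edge in edges for v in edge}
    return len(edges) - len(nodes) + 2 * components


def retry_cycles(edges: list[tuple[str, str]]) -> int:
    """Count self-loops, which are the only directed cycles in this model."""
    return sum(1 for a, b in edges if a == b)


edges = linear_fsm_edges(4)
print(cyclomatic_complexity(edges))  # 8
print(retry_cycles(edges))           # 4
```

In this model M equals twice the number of operations, so the measure grows only linearly with program length, and the retry self-loops are the only directed cycles present.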

The foregoing demonstrates that necessary control functionalities can be implemented in simple, completely enumerable state-spaces. While other modalities are certainly possible, the essential point is that simplicity is a critical design criterion for both comprehensibility and tractable analyzability, whereby subtle design errors are more easily avoided and open review is facilitated.

As an aside, we note that due to their simplicity, the choice of control by state-machines likely minimizes the quantity of material required to implement control functionality. Thus inappropriate design efforts would not gain reduced replication times by circumventing this aspect of control architecture.

3 Connexion with Autopoiesis

In contrast to autopoiesis, despite their ability to gather their own resources, harvest energy and self-replicate, the programmable systems discussed here are cold, dead clockworks which are the product of engineered design; they do not arise or continue to operate spontaneously, they lack informatic self-sufficiency by design, lack complete defenses by design (despite inherent materials strength), have no knowledge, intentionality or motivation, no inherent survival instinct, fear or even drive to reproduce, or any drive at all beyond what is in their instruction queues; although by design they require little maintenance, they do not maintain themselves unless instructed (i.e. lack involuntary homeostasis); they can pause indefinitely without the kinds of complications of arresting and resuming metabolism faced by biological life. The machine-states which would represent sensory-input data are not a basis for any higher-level cognition but at most initiate, enable or prevent state machine transitions—although cybernetic loops may be utilized for certain functions, usually responses to sensor inputs are little more than reflexes. Further, designs preclude mutation and hence evolution is neither their origin nor directly involved in any refinements or adaptations (evolutionary techniques might be used in simulations as a design methodology, but what is learned thereby is abstracted and applied explicitly by designers, only once fully analyzed and understood in the subsequent design of physical systems, which never themselves undergo any physical evolution), so these aspects of autopoiesis are likewise absent. Here, self-replication itself involves no emergence.

(It is noted that in various applications, self-replicating systems discussed here may have subsystems, e.g. for raw materials collection and transport, or for remote sensing, etc., which may depart from various aspects of the foregoing characterizations, but this does not change the fact that the self-replication of these systems or their operation departs significantly from the thrust and spirit of what is usually described as autopoiesis).

These systems are, however, of interest to the study of autopoiesis because they represent a minimal core of functional requisites for autopoiesis: the minimal viability case, information excluded, that physical artificial autopoiesis must accomplish but would also have to go beyond. Natural autopoiesis had to reach that point from preexisting concentration and energy gradients through thermodynamics, statistical mechanics, and self-organizing chemistry culminating in the molecular evolution that afforded the metabolically supported replication of molecularly encoded information ultimately sufficient to specify all required complex components including the ensemble of catalysts which produce them and organize their structure, and including the translation apparatus for that information; it was also necessary that the complex structures operated in their intracellular or external environments to support the functions and behaviors of the organism which enable reproduction. (For an example of early aspects of chemical evolution involving catalysis in confined chambers occurring in mica yielding increased effective local concentrations and orienting effects, see [24]; while remarkable, these represent only the very beginnings of what is required.) Organized behavior, even for simple unicellular life, requires regulated structures effecting sense-response loops (e.g. bacterial operons) in order to be flexible yet efficient.

We note the distinction made by Sipper [5] between self-replication and reproduction:

Replication is an ontogenetic, that is, developmental process, involving no genetic operators, resulting in an exact duplicate of the parent organism. Reproduction, on the other hand, is a phylogenetic, that is, evolutionary process, involving genetic operators such as crossover and mutation, thereby giving rise to variety and ultimately to evolution.

If subsets of the foregoing distinctions were dropped, the present self-replicating systems could be regarded as the edge-case between allopoietic and autopoietic systems: they are no different from allopoietic systems but are incidentally capable of fabricating and assembling copies of themselves. While this property is critical to enabling their full power and utility, it is made possible through simplicity of design rather than complexity or emergence. It is interesting to note that alternation-of-generation, an evolutionary strategy adopted by various life-forms, in a certain sense likewise fits the category represented by this edge case, and similarly, the production of nanoscale self-replicating systems is facilitated by utilizing two or more materials [10] even though this superficially would seem to multiply the development efforts required.

4 Organisational Context for Safe Control Paradigm

Large-scale application of technology in the pursuit of goals by definition aims at effecting changes through actions, coordinated sequences of which are usually required on a routine basis under multiple practical constraints set by the goals of an organization. Safety procedures, by contrast, are the procedures and constraints directed towards making adverse events rare; the more effective these are, the more remote the respective risks begin to seem.

Application of self-replicating manufacturing capability to large scale problems superlatively augments the capacity to reach large-scale goals, but similarly increases the potential scale of risks if safety procedures fail. These facts entail that some of the economic resource savings from supply, labor, capital equipment, physical manufacturing and deployment be allocated to the organizational aspects of operational safety.

Organizational resources are allocated according to the scale of potential risks to enable redundancy of observation. Beyond reducing the likelihood of erroneous action due to failures to observe hazards, this excess capacity enables integration of observation quality assessment (as well as training) by mixing simulated hazard events into workloads on a routine basis while still maintaining redundant observation of actual events. This type of intermediately frequent challenge will likely promote attentiveness psychologically, since it contrasts with watching for something improbable. Simulated hazards in data streams can be produced by the generator networks of generative adversarial networks (GANs) [25,26,27].
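The assessment scheme described above can be sketched in a few lines. This is a minimal illustration under stated assumptions, not a proposed implementation: the function names, the `"simulated_hazard"` placeholder payload and the fixed injection rate are all hypothetical, and a real system would draw simulated events from a generative model rather than a constant.

```python
import random


def mix_simulated_hazards(real_events, injection_rate, rng):
    """Interleave simulated hazard events into a stream of real events.

    Each item is a (payload, is_simulated) pair. The flag is hidden from
    the operator and is used only for scoring afterwards.
    """
    stream = []
    for event in real_events:
        if rng.random() < injection_rate:
            stream.append(("simulated_hazard", True))
        stream.append((event, False))
    return stream


def detection_rate(stream, operator_flags):
    """Fraction of simulated hazards that the operator flagged.

    `operator_flags[i]` is True if the operator flagged item i of the stream.
    Returns None if the stream contains no simulated hazards.
    """
    simulated = [i for i, (_payload, is_sim) in enumerate(stream) if is_sim]
    if not simulated:
        return None
    caught = sum(1 for i in simulated if operator_flags[i])
    return caught / len(simulated)
```

A seeded generator such as `random.Random(0)` makes a trial repeatable for audit. Because real events pass through unchanged, redundant observation of actual events is unaffected by the injected challenges.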

For purposes of analysis, the physical system to be controlled safely is understood through a construction of system as machine + environment, and the enterprise system is understood through a construction of system as machine + organization + environment. Higher order effects may be possible because these systems can (and sometimes are intended to) modify their environment (which itself may be a nonlinear system), and since this is a reiterative process, the potential for emergent dynamics which are difficult to predict cannot be excluded. Especially for this reason, monitoring must at least reliably detect whether operating assumptions are violated in the process. Narrow-AI may in fact be well suited to augmenting human control in the following way: since recognition of stereotyped patterns is a well-defined task at which at least some artificial neural-networks perform well (e.g. pattern recognition [28]), patterns not recognized by a well-trained ANN can be highlighted for human operators.
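The highlighting of unrecognized patterns can be sketched as follows. As a loudly labeled simplification, a nearest-centroid distance check stands in here for a trained ANN; the pattern labels, feature vectors and the `max_distance` threshold are hypothetical. The principle is the same in either case: anything the recognizer cannot match to a known pattern is passed to a human operator rather than silently classified.

```python
import math


def nearest_known_pattern(sample, known_patterns):
    """Return (label, distance) for the closest known pattern centroid."""
    best_label, best_distance = None, math.inf
    for label, centroid in known_patterns.items():
        distance = math.dist(sample, centroid)
        if distance < best_distance:
            best_label, best_distance = label, distance
    return best_label, best_distance


def highlight_unrecognized(samples, known_patterns, max_distance):
    """Return the samples that match no known pattern closely enough."""
    return [
        sample
        for sample in samples
        if nearest_known_pattern(sample, known_patterns)[1] > max_distance
    ]
```

For example, with known centroids at `(0.0, 0.0)` and `(10.0, 10.0)` and a threshold of `1.0`, a reading of `(5.0, 5.0)` would be highlighted while readings close to either centroid would not.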

Discriminator networks from GANs may also be used to highlight hazards. Nonetheless, where supervision at all relies on the foregoing or other modes of augmentation, human operators must utilize interfaces and procedures cognitively engineered to prevent them from becoming inured to simply relying on what automated analysis brings to their attention—this would erode any actual safety enhancement the inclusion of human operators is intended to provide.

Human oversight of critical decisions is itself insufficient; to be of much use, it must be adequately informed and vigilantly critical. If, for instance, inadequate monitoring of system surroundings causes operators to be oblivious to pertinent local circumstances, avoidable harm to bystanders, wildlife or the environment could occur through the operation, growth or replication of systems.

Competing organizational imperatives or external pressures applied to them can undermine adherence to safety procedures. A classic example is the Challenger disaster: despite a well-developed safety culture, political motivations led the Reagan Administration to pressure NASA and in turn a subcontractor to adhere to a launch schedule that required well-founded safety objections to be overridden [29,30,31], with well-known consequences. If organizations responsible for operating self-replicating systems that carry any large-scale risk potential if mismanaged are susceptible to such pressure and lack means of contemporaneous redress, safety and confidence in safety may be undermined. At least in open societies, transparency is one countervailing factor that may mitigate such organizational risks.

Beyond generic organizational dysfunction, related pitfalls include other authority-derived dictates. For example, a regime determined to avail itself of offensive weapon systems based on these technologies will cycle through researchers who are unwilling or unable to deliver what is required until presented with results that appear to satisfy its mandates, whether or not those who deliver are actually capable of producing systems that are not inherently unsafe (even to that regime) and that have any probability of functioning as desired in the unfortunate event of actual use. Rather, there is a selective advantage for those who do not appreciate the daunting complexities which would be involved but are eager to pursue whatever inducements or respond to whatever fears (such as coercion) may be applied to motivate them, producing ostensibly satisfactory results which are more likely epistemically opaque, though not obviously so to the uncritical. This is akin to the gambler’s ruin problem, where even in cases with favorable odds, the structure of the game entails a random walk which can still find its way off a cliff. But the consequences there extend beyond the fortunes of the gambler, and so there is a general interest in avoiding such scenarios to begin with.
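The gambler's ruin analogy can be made concrete with the classical closed-form result for a simple random walk between two absorbing barriers. The sketch below assumes unit bets and a fixed per-round win probability; the function name and parameters are illustrative only. It shows that the probability of ruin stays above zero even when each round is favorable, and that for a fair game it approaches certainty as the target grows.

```python
def ruin_probability(p_win, stake, target):
    """Probability of reaching 0 before `target`, starting from `stake`.

    Each round moves the stake up by 1 with probability `p_win`,
    otherwise down by 1 (classical gambler's ruin).
    """
    if not 0 < p_win < 1:
        raise ValueError("p_win must lie strictly between 0 and 1")
    if not 0 < stake < target:
        raise ValueError("stake must lie strictly between 0 and target")
    if p_win == 0.5:
        # Fair game: ruin probability falls off linearly with the stake.
        return 1 - stake / target
    ratio = (1 - p_win) / p_win  # q / p
    return (ratio**stake - ratio**target) / (1 - ratio**target)
```

With `p_win=0.6`, `stake=10` and a distant target, the result is small but strictly positive, close to `(0.4 / 0.6) ** 10`. With `p_win=0.5` the result tends to 1 as `target` increases, which is the sense in which continued play without a stopping point leads to ruin.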

5 Simulation, Boxing and Abstraction

Before any physical systems are used or deployed at scale, extensive simulations of functionality and operation, including participation of human operators, are appropriate, necessary and advantageous for multiple reasons. Simulations permit many approaches which would be inappropriate in real-world application to be studied so that useful results can be abstracted and applied in appropriate form in real systems. Prime examples of these include: evolutionary design methodologies which may yield useful solutions to challenging problems which can be translated for implementation in non-evolving systems; and involvement of ANI or AGI in simulated operational problem-solving, with results informing design or programming of non-intelligent systems. In these cases, there is a strict decoupling of anything capable of emergence or analytically refractory complexity from actual physical self-replicating systems. Rather than empowering inscrutable processes with control of self-replicating systems, this approach passes abstracted results through human design processes, which in the present paradigm would further be peer reviewed.

A similar approach can be applied to AI containment, also known as the AI-boxing problem (see, for example, [32]). Earlier proposals exist to confine potentially dangerous AI to simulations or virtual environments, although assertions have been made that this is an insoluble problem.

While a more comprehensive treatment of the topic is beyond the scope of this paper, we briefly list a few techniques which may be brought to bear on the boxing problem. The motivation for this is that if AGI (rather than NAI/AIS) were ever to be used in such applications (and the purpose here is not to advocate this but to list items pertinent to analysis of this possibility), even if only in simulation, it could not be kept ignorant of these technologies, so escape would be a particular matter for concern (so in fact the groundwork for such research would best be done in a simulation with modified physics and misleading training data for understanding of the technology, and assessment of facility for learning different [also counterfeit] physical principles would be pertinent). These include: multiple confinement layers or moats with simulated escape environments enabling study of escape behavior; combinatorial mazes along more available escape routes, especially with execution traps; avoiding hardware vulnerabilities, especially with emulated but not reconfigurable hardware, but detecting attempts to exploit emulated bugs; organizing simulation trials similar to metadynamics, especially parameterized with differential subject-matter training set content, including umbrella dynamics to study what promotes escape efforts; pause, clone and probe analyses of modules or subspaces (instrumentation of the AI system, in analogy to electrocorticography, evoked potentials mapping, cortical stimulation mapping experiments, etc.); adversarial construction: captive and jailer AIs.

6 Discussion

6.1 Lessons Past and Forward Formulation

Because of the sweeping nature of the changes these technologies enable, it is appropriate to take care to not recapitulate past errors in the course of technological evolution or of human affairs in the development, implementation and use of these powerful technologies.

The model of economic parsimony—highly justifiable in the regime of material scarcity—favors incremental additions to what exists, and so new technologies frequently are adapted to the proximal constraints imposed by what came before them. Considerations such as first mover advantage (e.g. Facebook’s motto, “move fast and break things”) and marginal profitability encourage cutting of corners—and such tactics are not foreign to the course of successful business. However, cybersecurity as an afterthought and infrastructure riven with vulnerabilities have resulted (thus existing infrastructure would be wholly inappropriate for replication-control). While the significance of risks which now obtain due to this legacy is increasingly appreciated, it would be of another order entirely for such risks and practices to be carried forward into the realm of self-amplifying technologies.

Self-replicating manufacturing efficiently utilizing abundant raw materials can break the foregoing premise of scarcity, enabling a regime of material proficiency wherein enhanced capabilities are realized at better efficiencies and economies. This changes fundamental assumptions underlying the structure and behavior of contemporary organizations and society.

Much of our world has been shaped by competition, in various physical, historical and contemporary forms, and much of this competition has been competition for or related to material resources. While it would plainly be an error to consider all conflict to be reducible to conflict over resources, resource competition and population pressure on resources are recurring contexts for many conflicts (including both wars and genocides). This history has created both cultural enmities which have not yet been transcended as well as fears predicated on them, and the latter can themselves be sufficient potentiating factors for hostilities. Military conflict has long been a motive force in the development of technology (in extreme form, this idea was enunciated by Virilio [33]) and technology has often been decisive in military outcomes. Military observers and participants among others have recognized the potential significance of nanotechnology and self-replication [34,35,36]. With the advent of self-replicating productive capabilities having the safety considerations outlined here, should this class of technology be offensively weaponized, this symbiotic progression could become untenably fraught, as follows:

- Question: How do you defeat a militarily superior adversary?

- Answer: Through unpredictability.

Should any actors ever pursue that course of action—which in particular would favor transgressing controls such as those set forth here—all predictions become inherently questionable. In essence, this becomes a theoretical game in which whatever would be ruled out of bounds becomes precisely advantageous, and notably, shifts in balance of forces effected through aggressive replication rates could be decisive. Similarly, one way of increasing replication rates is to minimize system extent, such as through the omission of components or subsystems necessitated only for safety. A desperate belligerent might resort to availing rather than preventing evolution of autonomous self-replicating offensive weapon systems under control of AGI, which would defy predictability in multiple dimensions. Further, control of military operations in such an environment would require rapid decisions and action in response to data streams exceeding what humans could ever be meaningfully capable of apprehending, necessitating automation, whether conventionally programmed or through machine learning or artificial intelligence. The complexities entailed again preclude analytic predictability, and since empirical testing necessitates incomplete surveys of assumed parameter spaces (presumably adversary strategies would likely aim to create unanticipated scenarios), there can be no certainty that any such system will perform as required in all or even most cases.

The clearest and most constructive path to avoiding the nebulous risks associated with direct conflicts between nanotechnological powers (risks which would be more likely than accidental runaway replication scenarios but potentially at least as catastrophic) is to rigorously focus development of these technologies on instead ameliorating those issues which were the context for so much historical conflict in the first place: promoting material well-being (freedom from want) and freedom from fear [37]. The technological foundations enabling these may soon be at hand. Even where material factors are not primary motivators for conflict, when populations widely enjoy well-being, there is less fertile soil for leaders who would endanger that with avoidable conflict.

- Alternative answer: Through subversion.

At least in recent years we note (without supporting any particular conclusion as to the legitimacy of any particular purportedly freely elected government or the fairness of any particular election) that there have been recurrent questions as to the integrity of numerous elections which are less implausible than would be preferred; here we use the U.S. as an example of this in an ostensibly well-developed democracy, but the phenomenon is more broad. According to U.S. intelligence analysis, in addition to intrusions into state and local elections information technology infrastructure, influence and disinformation campaigns mediated by social and other media and involving bots appear to have been conducted by state actors [38]. Private parties have engaged in efforts similar to the latter, including campaigns researching the cost and effectiveness of such methods [39, 40]. The increasing sophistication of DeepFakes portends an intensification of the challenges such tactics may pose.

This is pertinent here on three levels: First, an adversary may attempt to gain competitive advantage by retarding the development of these critical technologies by competing entities by interfering in political processes to, for example, promote bans, particularly through alarmism, to which the background of cultural knowledge noted above readily lends itself. Second, promoting unnecessarily restrictive regulations on development or implementation could serve a similar purpose, particularly if they are national; this is another reason that, to have any actual usefulness, appropriate regulations must be universally effective. Third, interference with organizational components of the present safety paradigm, for example disinformation at the level of monitoring of the technology, particularly deep-fake misrepresentation of any part of the process to either create hysteria or manipulate or degrade the effectiveness of the stated purpose of safety (restricting the associated infrastructure to this single purpose, and to only the functions of surveillance, control and primary audit data storage, is essential to minimize attack surface, and protocols for verifiable independent monitoring are likewise essential), or, for example, interference with organizational components or contexts themselves via traditional espionage operations.

Beyond that, subversion (by organizational, informational or physical means) of physical defenses such as those proposed in [41] could transform these defenses into weapons aimed at their original masters. Note that [41] do acknowledge these threat vectors, and reach similar conclusions to those here as to the importance of transparency reducing opportunity for misuse.

6.2 Additional Comments

It is also important to note that while the hypothetical risk-space is vast, appropriate use could normally be restricted, in the case of systems with self-replicating capacity, to systems having vulnerabilities by design as a final safety measure. Of course, this is not an effective answer to issues of misuse or abuse, which are separate matters to be addressed elsewhere. The specific nature of designed vulnerabilities should enable arrest, deactivation or destruction of errant systems by operators or authorities, but should resist denial-of-service attacks or exploits.

6.3 Payoff Matrix

While the consequences of the technology, what is done with it and how it is regulated involve many different possible combinations and gradations, stark extremes are to be found among the more likely combinations of implementation versus modes of regulation and priorities, simplified in the following ansatz payoff matrix:

| | Appropriate Universal Regulation, Aligned Imperatives | Irregular and Variable Regulation, Status Quo Imperatives |
| Safe Design & Organizational Structure | Highly & Widely Beneficial, Fair Predictability or better | Variable Benefit, Uncertain but Possibly High Risks |
| Offensive Weaponization | High Risk/Variable Predictability/Questionable Rewards | Unpredictable/Unstable/High Risk/Inequitable Rewards, if any |

While the challenges involved in achieving a universal, effective but just regulatory regime are not to be underestimated, the diffusion of profound benefits made available and the extreme-to-existential risks (both those posed by inappropriate use or implementation of technologies considered here and those which these technologies may render tractable) to be avoided argue the effort will be worthwhile. As the extant preconditions for these technologies cannot be eliminated, and bans would be problematic along multiple dimensions and carry profound opportunity costs, the question is not whether these technologies will be developed but under what circumstances and to what ends. Desirable outcomes may depend on appropriate implementations at the technical, organizational and regulatory levels at least, and efforts are more likely to prevail if begun while vested interests are few.

References

1. A simplified form of some of the arguments discussed here is advanced by one of the authors [EMR]. http://radicalnanotechnology.com/selRepSafety.html. Accessed 1 Mar 2019

2. Lackner, K.S., Wendt, C.H.: Exponential growth of large self-reproducing machine systems. Math. Comput. Model. 21(10), 55–81 (1995)

3. von Neumann, J., Burks, A.W.: Theory of Self-Reproducing Automata. University of Illinois Press (1966)

4. Pesavento, U.: An implementation of von Neumann’s self-reproducing machine. Artif. Life 2(4), 337–354 (1995)

5. Sipper, M.: Fifty years of research on self-replication: an overview. Artif. Life 4, 237–257 (1998)

6. Feynman, R.P.: There’s plenty of room at the bottom: an invitation to enter a new field of physics. In: American Physical Society Meeting Lecture, Caltech, 29 December 1959

7. Drexler, K.E.: Molecular engineering: an approach to the development of general capabilities for molecular manipulation. Proc. Natl. Acad. Sci. 78(9), 5275–5278 (1981)

8. Drexler, K.E.: Molecular machinery and manufacturing with applications to computation. Doctoral dissertation, Massachusetts Institute of Technology (1991). http://e-drexler.com/d/09/00/Drexler_MIT_dissertation.pdf. Accessed 1 Mar 2019

9. Drexler, K.E.: Nanosystems: Molecular Machinery, Manufacturing, and Computation. Wiley, New York (1992)

10. Moses, M.: A physical prototype of a self-replicating universal constructor. Master’s thesis, University of New Mexico (1999–2001). https://web.archive.org/web/20031130003228/http://home.earthlink.net/~mmoses152/SelfRep.doc. Accessed 1 Mar 2019

11. Zykov, V., et al.: Self-reproducing machines. Nature 435, 163–164 (2005)

12. Moses, M., Yamaguchi, H., Chirikjian, G.S.: Towards cyclic fabrication systems for modular robotics and rapid manufacturing. In: Robotics: Science and Systems 2009, Seattle, WA, USA, 28 June–1 July 2009 (2009)

13. Rabani, E.M.: U.S. Patent 10,106,401 (2018)

14. Merkle, R.C.: A proposed ‘metabolism’ for a hydrocarbon assembler. Nanotechnology 8, 149–162 (1997). http://www.zyvex.com/nanotech/hydroCarbonMetabolism.html. Accessed 1 Mar 2019

15. Freitas Jr., R.A., Merkle, R.C.: A minimal toolset for positional diamond mechanosynthesis. J. Comput. Theor. Nanosci. 5, 760–861 (2008). http://www.MolecularAssembler.com/Papers/MinToolset.pdf. Accessed 1 Mar 2019

16. Freitas Jr., R.A.: http://www.molecularassembler.com/Nanofactory/AnnBibDMS.htm. Accessed 1 Mar 2019

17. Rabani, E.M.: WO 97/06468 (1997)

18. Merkle, R.C.: Convergent assembly. Nanotechnology 8(1), 18–22 (1997)

19. Freitas Jr., R.A.: Some Limits to Global Ecophagy by Biovorous Nanoreplicators, with Public Policy Recommendations (2000). https://foresight.org/nano/Ecophagy.php. Accessed 1 Mar 2019

20. Jacobstein, N.: Foresight Guidelines for Responsible Nanotechnology Development, Draft version 6 (2006). https://foresight.org/guidelines/current.php. Accessed 1 Mar 2019

21. Vaughan, D.: Autonomy, interdependence, and social control: NASA and the space shuttle challenger. Adm. Sci. Q. 35(2), 225–257 (1990)

22. Paté-Cornell, M.E.: Organizational aspects of engineering system safety: the case of offshore platforms. Science 250, 1210–1217 (1990)

23. The Broadcast Architecture for Control. Kinematic Self-Replicating Machines. Landes Bioscience, Georgetown, TX (2004)

24. Hansma, H.G.: Possible origin of life between mica sheets: does life imitate mica? J. Biomol. Struct. Dyn. 31(8), 888–895 (2013)

25. Schmidhuber, J.: Making the world differentiable: on using fully recurrent self-supervised neural networks for dynamic reinforcement learning and planning in non-stationary environments. TR FKI-126-90. Tech. Univ. Munich. (1990). http://people.idsia.ch/~juergen/FKI-126-90_(revised)bw_ocr.pdf. Accessed 1 Mar 2019

26. Schmidhuber, J.: Unsupervised Neural Networks Fight in a Minimax Game (2018). http://people.idsia.ch/~juergen/unsupervised-neural-nets-fight-minimax-game.html. Accessed 1 Mar 2019

27. Goodfellow, I., et al.: Generative adversarial nets. In: Advances in Neural Information Processing Systems 27 (NIPS 2014) (2014). https://papers.nips.cc/paper/5423-generative-adversarial-nets. Accessed 1 Mar 2019

28. Dong, Z., et al.: RRAM-based convolutional neural networks for high accuracy pattern recognition tasks. Published by Workshop on VLSI Symposia, Oral Presentation in Kyoto, Japan, 4 June 2017, pp. 145–146 (2017)

29. Berkes, H.: Remembering Roger Boisjoly: He Tried To Stop Shuttle Challenger Launch (2012). https://www.npr.org/sections/thetwo-way/2012/02/06/146490064/remembering-roger-boisjoly-he-tried-to-stop-shuttle-challenger-launch. Accessed 1 Mar 2019

30. Berkes, H.: 30 Years After Explosion, Challenger Engineer Still Blames Himself (2016). https://www.npr.org/sections/thetwo-way/2016/01/28/464744781/30-years-after-disaster-challenger-engineer-still-blames-himself. Accessed 1 Mar 2019

31. Berkes, H.: Challenger engineer who warned of shuttle disaster dies (2016). https://www.scpr.org/news/2016/03/22/58794/challenger-engineer-who-warned-of-shuttle-disaster/. Accessed 1 Mar 2019

32. Yampolskiy, R.V.: Leakproofing Singularity - Artificial Intelligence Confinement Problem. Journal of Consciousness Studies (JCS). Special Issue on the Singularity, Part 1. Volume 19(1–2) 194–214 (2012). http://cecs.louisville.edu/ry/LeakproofingtheSingularity.pdf. Accessed 1 Mar 2019

33. Virilio, P., Lotringer, S.: Pure War. Semiotext(e), New York (1983)

34. Jeremiah, D.: Nanotechnology and global security. In: Fourth Foresight Conference on Molecular Nanotechnology, 9 November 1995. http://www.zyvex.com/nanotech/nano4/jeremiahPaper.html. Accessed 1 Mar 2019

35. The Stealth Threat: An Interview with K. Eric Drexler. Bull. Atomic Sci. 63(1), 55–58 (2007). https://doi.org/10.2968/063001018. Accessed 1 Mar 2019

36. Henley, L.D.: The RMA After Next. Parameters, Winter 1999–2000, pp. 46–57 (1999). https://ssi.armywarcollege.edu/pubs/parameters/articles/99winter/henley.htm. Accessed 1 Mar 2019

37. Roosevelt, F.D.: State of the Union address, 6 January 1941 [known as the Four Freedoms speech] (1941)

38. Office of the Director of National Intelligence, U.S.: “Background to ‘Assessing Russian Activities and Intentions in Recent US Elections’: The Analytic Process and Cyber Incident Attribution”, 6 January 2017

39. Shane, S., Blinder, A.: Secret Experiment in Alabama Senate Race Imitated Russian Tactics. The New York Times, 19 December 2018. https://www.nytimes.com/2018/12/19/us/alabama-senate-roy-jones-russia.html. Accessed 1 Mar 2019

40. Osborne, M.: Roy Moore and the Politics of Alcohol in Alabama (2018). https://www.linkedin.com/pulse/roy-moore-politics-alcohol-alabama-matt-osborne/. Accessed 1 Mar 2019

41. Vassar, M., et al.: Lifeboat Foundation NanoShield Version 0.90.2.13. https://lifeboat.com/ex/nanoshield. Accessed 1 Mar 2019

Acknowledgments

EMR and LAP thank Sirius19 for discussion, criticism and generously defraying costs associated with attending HCII2019.

Ethics declarations

EMR is founder and Chief Executive Officer of NanoCybernetics Corporation, which is developing molecular nanofabrication technologies and programmable self-replicating systems for commercial, biomedical, agricultural, geotechnical, climate restoration and other uses.

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Rabani, E.M., Perg, L.A. (2019). Demonstrably Safe Self-replicating Manufacturing Systems. In: Schmorrow, D., Fidopiastis, C. (eds) Augmented Cognition. HCII 2019. Lecture Notes in Computer Science(), vol 11580. Springer, Cham. https://doi.org/10.1007/978-3-030-22419-6_22

DOI: https://doi.org/10.1007/978-3-030-22419-6_22

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-22418-9

Online ISBN: 978-3-030-22419-6

eBook Packages: Computer Science, Computer Science (R0)