Abstract

SoilGrids maps soil properties for the entire globe at medium spatial resolution (250 m cell side) using state-of-the-art machine learning methods. The expanding pool of input data and the increasing computational demands of predictive models required a prediction framework that could deal with large data. This article describes the mechanisms set in place for a geo-spatially parallelised prediction system for soil properties. The features provided by GRASS GIS – mapset and region – are used to limit predictions to a specific geographic area, enabling parallelisation. The Slurm job scheduler is used to deploy predictions in a high-performance computing cluster. The framework presented can be seamlessly applied to most other geo-spatial process requiring parallelisation. This framework can also be employed with a different job scheduler, GRASS GIS being the main requirement and engine.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

Soil is key in the realisation of a number of UN Sustainable Development Goals by providing a variety of goods and services. Soil information is fundamental for a large range of global applications, including assessments of soil and land degradation, sustainable land management and environmental conservation. It is important to provide free, consistent, easily accessible, quality-controlled and standardised soil information. Spatial soil information is often available as maps of soil properties, e.g. pH, carbon content, texture information. Soil is a 3D body and the maps should describe the landscape (i.e. horizontal) variability as well as the vertical (i.e. along the soil depth) variability. Often, such maps are produced using a Digital Soil Mapping (DSM) approach [7], creating a statistical model between the properties measured at known locations and environmental covariates describing the soil forming factors [9]. In recent years machine learning methods [4] were used to develop such models. Once the model is calibrated and evaluated, it is used to predict soil properties at non visited locations.

SoilGrids is based on a DSM system that produces geo-spatial soil information fulfilling two main goals: (1) be a source of consistent soil information to support global modelling, and (2) provide complementary information to support regional and national soil information products in data deprived areas. The main target user groups are policy makers and land management services in countries that lack the means to produce such information, as well as scientists developing other models and tools requiring soil data as input.

SoilGrids is of a series of maps for different soil properties following the specifications of the GlobalSoilMap project [1]. The production of a soil property map consists of different steps: the fitting of a model, the evaluation of that model and finally the prediction for each raster cell at the global scale with a spatial resolution of 250 m. Six depth intervals are currently considered and prediction uncertainty is quantified. The total computation time required for a single soil property exceeds 1 500 CPU-hours. Parallelisation is therefore fundamental, requiring up to hundreds of CPUs.

This article outlines the computational infrastructure (hardware and software) employed to compute the latest SoilGrids products in a high-performance computing (HPC) cluster, focusing in particular on the parallelisation and the resource management for global DSM modelling.

2 General Framework

SoilGrids requires an intensive computational workflow, including different steps and integrating different software. SoilGrids is entirely based on open source software. The inputs to SoilGrids currently comprise observations from 250 000 soil profiles and a selection from over 400 global raster characterising soil forming factors: morphology (e.g. elevation, landform), vegetation information (e.g. NDVI and other indices), climate (e.g. precipitation, land surface temperature) and human factors (e.g. land use/cover).

Gathering and harmonising soil profile observations is one of the core work-streams performed by ISRIC, with the World Soil Information System (WoSIS) [2] being its most visible product. These observations are maintained in a relational database hosted by PostgreSQL [10], from which they are directly sourced for modelling. Environmental covariates are ingested into GRASS GIS [6, 11], thus being automatically normalised to a unique raster cell matrix (of 250 m cells). Previous SoilGrids releases were computed on the millenary Marinus of Tyre map projection [16], a popular projection in environmental modelling. However, this projection expands the surface area of the globe by about 60%. With the increasing size of inputs and outputs in SoilGrids, this overhead urged the switch to an equal-area map projection. The Homolosine projection [5] was selected, since among those projections supported by open source software, is the one that best preserves the shapes of lands masses [17]. The size of each output raster was thus reduced in over \(10^{12}\) cells.

The general framework of SoilGrids is described in Fig. 1. The main steps are:

-

1.

creation of a regression matrix that overlays soil profile data with environmental covariates.

-

2.

fitting of the regression model. Currently this step is based on state-of-the-art machine learning methods, able to produce a measure of the uncertainty of the predictions, namely Quantile Regression Forests (QRF) [8].

-

3.

prediction with the model at global scale.

3 HPC Infrastructure

SoilGrids is currently computed on Anunna, an HPC cluster managed by Wageningen University and Research (WUR). Anunna provides more than 2 000 CPUs in an heterogeneous set of compute nodes. The majority of compute nodes provide 12 GB of RAM per CPU. Storage is managed by the Lustre distributed file system [15].

The Slurm Workload Manager [20] is used to manage the cluster workload. Slurm offers a large range of flexibility. The user may restrict computation to a specific type of node, require an exact number of CPUs to use in parallel, set the number of CPUs required by each process, set a computation time limit, request a specific amount of memory and declare the exact software packages to load at computation time. These settings are defined in a configuration file (the Slurm file), where the user sets the programmes to run and their parameters.

4 Parallelisation of Global Scale Geo-Spatial Computations

SoilGrids requires the integration of a number of software. The two key components are GRASS GIS [6, 11] and R [13]. GRASS GIS is used to store the input data and as the engine to control the parallelisation of the predictions. R is used for the statistical modelling, i.e. to calibrate, fit and compute the predictions using the QRF model.

The GRASS GIS mapset and region features are used to set up parallelisation. Location is a directory which contains GRASS GIS mapsets, which are its sub-directories. All data in one location must refer to the same spatial reference system (SRS). Mapsets contain the actual data, they are a tool for organising maps in a transparent way and provide isolation between different tasks to prevent data loss. GRASS GIS is always connected to one particular mapset. Mapsets are used to store maps related to a project, a specific task, issue or sub-region. Besides the geo-spatial data, a mapset holds the resolution and extent of the current computational region. In this version of SoilGrids, the SRS used for the GRASS location is the Homolosine projection applied to the WGS84 datum.

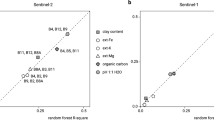

Prior to prediction, a global tessellation is created dynamically using the GRASS module r.tile, dividing land masses into square tiles of a given side (Fig. 2). Predictions are then executed independently, and in parallel, within each of these tiles.

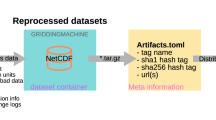

A Slurm file is used to start up each individual prediction process. The prediction process receives as argument the identifier of one of the tiles in the tessellation. The process then creates a temporary mapset, setting its region to the extent of the tile it is tasked to process. The temporary mapset works as a geo-spatial sand box for the prediction process. The prediction process loads data from GRASS within the extent of the tile, as set by the GRASS region. The prediction process is controlled by R software linked to GRASS with the rgrass7 package [3]. The result is saved to disk as a GeoTIFF file and the temporary mapset deleted (Fig. 3).

5 Resources Management with (sub-)tiling

Prediction models developed with the R language may require a large amount of RAM, i.e. tens of GBs of RAM to execute predictions for a single tile under 1 MB in size. The obvious strategy to tackle memory constraints would be to reduce the size of the tiles used to set up the temporary GRASS mapsets. However, this can soon result in tens of thousands of GeoTIFF files. Such large numbers can be excessive for some file systems, and complicate the functioning of command line tools [19].

In the case of SoilGrids, a different strategy was applied to address resource constraints, i.e. sub-tiling. The prediction process sub-divides the tile in equal-size sub-tiles. Within the temporary GRASS mapset, the prediction process successively sets the region to each of these sub-tiles and invokes the general prediction model. The output computed within each sub-tile is finally saved into a folder named after the prediction tile. The end result is a collection of folders, one per tile, each containing as many GeoTIFF files as the number of sub-tiles contained in the tile.

6 Assemblage of Prediction Files

The sub-tilling strategy can result in a very large number of GeoTIFF files. For example, a three-by-three sub-tiling matrix applied to tiles of 200 \(\times \) 200 km results in over 50 000 GeoTIFF files covering the globe’s land masses. All these files must then be aggregated to produce a single asset, easily manageable by end users. The Virtual Raster Tiles (VRT) format [12] introduced by the Geospatial Data Abstraction Library (GDAL) is a suitable solution, as it provides a simple and lightweight way to mosaic geo-spatial data. A VRT is in essence a XML file that patches together various contiguous rasters in the same SRS into a mosaic. As the GDAL library is used as I/O driver by most GIS programmes this format is widely supported.

GDAL provides a specific tool for the creation of VRT mosaics: gdalbuildvrt. It can take as input a file with the list of rasters to include in the output VRT. Another GDAL tool, gdaladdo, can afterwards be employed to create overviews for the VRT. These overviews are stored in a companion file to the original VRT. The end user needs only to point a GIS programme to the VRT file to load the full raster mosaic. With the overviews created, access is fast and fluid at different map scales.

The VRT format is also useful to simplify the re-projection of large rasters. Another GDAL tool – gdalwarp – can be applied directly to a VRT file, creating a second VRT file encoding the parameters of the specified output SRS. No transformations are conducted on the rasters themselves, thus a swift operation.

7 Reproducibility and Portability

The parallelisation scheme described in this article is directly portable to any system using Slurm where GRASS GIS and R can be installed. It is also applicable to any other system relying on a similar scheduling mechanism, such as Son of Grid Engine or Mesos [14]. In essence, any system able to spawn processes passing a tile identifier as parameter can be used to reproduce this set up. This solution can also be easily adapted to run on single machines with a large number of CPUs and managed by the GNU parallel tool [18].

While GRASS GIS is a key component in the approach described wherewith, alternative software can be used to similar ends. Certain raster formats, like GeoTIFF, store rasters in blocks of constant size. It is therefore possible to parallelise spatial computation on the basis of such blocks. GDAL in particular provides an API that facilitates the retrieval of these blocks. However, some pre-processing might be necessary to guarantee that all rasters stack up, using blocks of equal size and spatial extent. The mapset and region features in GRASS are unique among open source software and perform this stacking of input rasters in a seamless way.

The parallelisation approach reported in this article can be applied to other types of modelling where results can be easily parallelised in space. The tiling mechanism is fully dynamic and independent of the underlying SRS. The size of both the main map tiles and its sub-tiles are parameters to the tiling routine, and expressed in number of raster cells, not map units. It is therefore straightforward to apply with different SRSs and computation extents. The main limitation of this approach is with problems that can not be easily parallelised in space, such as hydrological processes requiring continuity or interactions between catchments.

References

Arrouays, D., et al.: GlobalSoilMap: toward a fine-resolution global grid of soil properties. In: Advances in Agronomy, vol. 125, pp. 93–134. Academic Press (2014). https://doi.org/10.1016/B978-0-12-800137-0.00003-0

Batjes, N.H., Ribeiro, E., van Oostrum, A.: Standardised soil profile data to support global mapping and modelling (wosis snapshot 2019). Earth Syst. Sci. Data Discussions 2019, 1–46 (2019). https://doi.org/10.5194/essd-2019-164. https://www.earth-syst-sci-data-discuss.net/essd-2019-164/

Bivand, R.: rgrass7: Interface between GRASS 7 geographical information system and R (2018). https://CRAN.R-project.org/package=rgrass7. r package version 0.1-12

Breiman, L.: Random forests. Mach. Learn. 45(1), 5–32 (2001)

Goode, J.P.: The homolosine projection: a new device for portraying the earth’s surface entire. Ann. Assoc. Am. Geogr. 15(3), 119–125 (1925)

GRASS Development Team: Geographic Resources Analysis Support System (GRASS GIS) software, version 7.6.0 (2019). http://www.grass.osgeo.org

McBratney, A., Santos, M., Minasny, B.: On digital soil mapping. Geoderma 117, 3–52 (2003). https://doi.org/10.1016/S0016-7061(03)00223-4

Meinshausen, N.: Quantile regression forests. J. Mach. Learn. Res. 7(Jun), 983–999 (2006)

Minasny, B., McBratney, A.: Digital soil mapping: a brief history and some lessons. Geoderma 264(Part B), 301–311 (2016). Soil mapping, classification, and modelling: history and future directions

Momjian, B.: PostgreSQL: Introduction and Concepts. Addison-Wesley, New York (2001)

Neteler, M., Mitasova, H.: Open Source GIS: A GRASS GIS Approach, vol. 689. Springer, Berlin (2013)

OSGeo Foundation: VRT - GDAL virtual format. https://gdal.org/drivers/raster/vrt.html (2019). Accessed 2019 Oct 09

R Core Team: R: A language and environment for statistical computing. R Foundation for Statistical Computing, Vienna, Austria (2019). http://www.R-project.org/. ISBN 3-900051-07-0

Reuther, A., et al.: Scheduler technologies in support of high performance data analysis. In: 2016 IEEE High Performance Extreme Computing Conference (HPEC), pp. 1–6. IEEE (2016)

Schwan, P., et al.: Lustre: building a file system for 1000-node clusters. In: Proceedings of the 2003 Linux Symposium, vol. 2003, pp. 380–386 (2003)

Snyder, J.P.: Flattening the Earth: Two Thousand Years of Map Projections. University of Chicago Press, Chicago (1997)

de Sousa, L.M., Poggio, L., Kempen, B.: Comparison of FOSS4G supported equal-area projections using discrete distortion indicatrices. ISPRS Int. J. Geo-Inf. 8(8), 351 (2019)

Tange, O.: GNU Parallel 2018. https://doi.org/10.5281/zenodo.1146014

Thomas Koenig: sysconf manual page, in Linux Programmer’s manual (2019). http://man7.org/linux/man-pages/man3/sysconf.3.html. Accessed 2019 Oct 15

Yoo, A.B., Jette, M.A., Grondona, M.: SLURM: simple linux utility for resource management. In: Feitelson, D., Rudolph, L., Schwiegelshohn, U. (eds.) JSSPP 2003. LNCS, vol. 2862, pp. 44–60. Springer, Heidelberg (2003). https://doi.org/10.1007/10968987_3

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2020 IFIP International Federation for Information Processing

About this paper

Cite this paper

de Sousa, L.M., Poggio, L., Dawes, G., Kempen, B., van den Bosch, R. (2020). Computational Infrastructure of SoilGrids 2.0. In: Athanasiadis, I., Frysinger, S., Schimak, G., Knibbe, W. (eds) Environmental Software Systems. Data Science in Action. ISESS 2020. IFIP Advances in Information and Communication Technology, vol 554. Springer, Cham. https://doi.org/10.1007/978-3-030-39815-6_3

Download citation

DOI: https://doi.org/10.1007/978-3-030-39815-6_3

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-39814-9

Online ISBN: 978-3-030-39815-6

eBook Packages: Computer ScienceComputer Science (R0)