Abstract

Recommending Chemical Compounds of interest to a particular researcher is a poorly explored field. The few existing datasets with information about the preferences of the researchers use implicit feedback. The lack of Recommender Systems in this particular field presents a challenge for the development of new recommendation models. In this work, we propose a Hybrid recommender model for recommending Chemical Compounds. The model integrates collaborative-filtering algorithms for implicit feedback (Alternating Least Squares (ALS) and Bayesian Personalized Ranking (BPR)) and semantic similarity between the Chemical Compounds in the ChEBI ontology (ONTO). We evaluated the model on an implicit-feedback dataset of Chemical Compounds, CheRM. The Hybrid model improved the results of state-of-the-art collaborative-filtering algorithms, especially for Mean Reciprocal Rank, with an increase of 6.7% when comparing the collaborative-filtering ALS and the Hybrid ALS_ONTO.

This work was supported by the Fundação para a Ciência e Tecnologia (FCT), under LASIGE Strategic Project - UID/CEC/00408/2019, UIDB/00408/2020, CENTRA Strategic Project UID/FIS/00099/2019, FCT funded project PTDC/CCI-BIO/28685/2017 and PhD Scholarship SFRH/BD/128840/2017.

1 Introduction

The recommendation of Chemical Compounds of interest for scientific researchers has not been widely explored [9, 23]. However, Recommender Systems (RSs) may help in the discovery of compounds, for example, by suggesting items not yet studied by the researchers. One challenge in this field is the lack of available datasets with the preferences of the researchers about the Chemical Compounds for testing the RSs. More recently, alternatives have emerged with the development of datasets consisting of data collected from implicit feedback. Unlike other datasets, for example, MovieLens [6], these datasets do not contain the explicitly stated interests of the researchers. Instead, this information is extracted from the activities of the researchers, for example, through scientific literature [3, 15].

Datasets of explicit or implicit feedback require different recommender algorithms, especially because implicit feedback has some significant drawbacks, such as the lack of negative feedback and an unbalanced ratio of positive vs unobserved ratings [11, 18]. When dealing with implicit feedback datasets, the solution involves applying learning to rank (LtR) approaches. LtR consists of, given a set of items, identifying the order in which they should be recommended [17].

The main approaches in RSs are Collaborative-Filtering (CF) and Content-Based (CB) [20]. CF uses the similarity between the ratings of the users, and CB uses the similarity between the features of the items. CF approaches cannot deal with new items or new users in the system, i.e., items and users without ratings (cold start problem). CB does not have this problem for new items, and that is the main reason Hybrid RSs (CF + CB) exist. One of the tools used by CB is ontologies [27], which are structured vocabularies of related terms and definitions for a specific field of study [2, 28]. Some examples of well-known ontologies are the Chemical Entities of Biological Interest (ChEBI) [7], the Gene Ontology (GO) [4], and the Disease Ontology (DO) [21].

In this paper, we propose a Hybrid recommender model for recommending Chemical Compounds, consisting of a CF module and a CB module. In the CF module we tested two algorithms for implicit feedback datasets, Alternating Least Squares (ALS) [8] and Bayesian Personalized Ranking (BPR) [18], separately. In the CB module we explored the semantic similarity between the compounds in the ChEBI ontology (ONTO algorithm). The Hybrid model combines ALS + ONTO, and BPR + ONTO. The framework developed for this work is available at https://github.com/lasigeBioTM/ChemRecSys.

2 Related Work

There are a few studies using RSs for recommending Chemical Compounds. [9] describes the use of CF methods for creating a Free-Wilson-like fragment recommender system. [23] uses RS techniques for the discovery of new inorganic compounds, by applying machine learning to find the similarity between the proposed and the existing compounds.

Next, we describe studies using ontologies for improving the performance of CF algorithms. [12] created an RS for recommending English collections of books in a library. The authors developed PORE, a personal ontology Recommender System, which builds a personal ontology for each user and then applies a CF method. They used a standard normalized cosine similarity for finding the similarity between the users. [26] also used an ontology for creating users' profiles for the domain of books. They calculated the similarity, not between the ratings of the users, but based on the interest scores derived from the ontology. The CF method used was k-nearest neighbours. [24] developed a Trust–Semantic Fusion approach, tested on movies and Yahoo! datasets. Their approach adds semantic knowledge from ontologies to the items' primary information. They used the user-based Constrained Pearson Correlation and the user-based Jaccard similarity.

[16] presented a solution for the top@k recommendations specifically for implicit feedback data. The authors developed SPrank (semantic path-based ranking). They extracted path-based features of the items from DBpedia and used LtR algorithms to get the rank of the most relevant items. They tested the method on music and movies domains. [1] developed a new semantic similarity measure, the Inferential Ontology-based Semantic Similarity. The new measure improved the results of a user-based CF approach, using Pearson Correlation for calculating the similarity between the users. The authors tested the approach on the tourism domain. Most recently, [14] developed a Hybrid RS tested on the movies domain. The method used Singular Value Decomposition for dimensionality reduction for the item and user-based CF, and ontologies for item-based semantic similarity, improving the CF results. They do not deal with implicit data.

To the best of our knowledge, our study is the first to use semantic similarity for recommending Chemical Compounds, dealing with implicit data by using state-of-the-art methods (ALS and BPR) and improving the results for the top@k in several evaluation metrics.

3 The Proposed Model

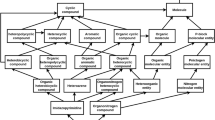

The proposed model has two modules: CF and CB. Figure 1 shows the general workflow of the model. The input data used in this model has the format <user,item,rating>. The unrated set represents the items we want to rank, so that the best recommendations appear in the first positions for a user. The rated set contains the items the users have already rated. Since we will split the data into train and test, we call the rated set the train set and the unrated set the test set. Both train and test sets are the input for the CF and CB modules. Using CF algorithms for implicit feedback datasets, the CF module gives a score for each item in the test set. The CB module uses semantic similarity to provide a score for the items in the test set. In the last step, the scores from the CF and CB modules are combined and sorted in descending order.

For the CF module, we selected state-of-the-art CF recommender algorithms for implicit data, ALS [8] and BPR [18]. ALS is a latent factor algorithm that models the confidence of each user-item pair rating. BPR is also a latent factor algorithm, but it is more appropriate for ranking a list of items. BPR does not simply treat the unobserved user-item pairs as zeros; instead, it takes into consideration the preference of a user between an observed and an unobserved rating.
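The difference between the two objectives can be illustrated with a minimal sketch, assuming the standard formulations from [8] and [18]: a binary preference with a confidence that grows linearly with the rating for ALS, and a pairwise log-sigmoid criterion for BPR. The function names, the value of `alpha`, and the toy vectors are illustrative assumptions and are not taken from our framework.

```python
import math

def als_preference_confidence(rating, alpha=40.0):
    """Implicit-feedback view of one user-item cell, following [8].

    Returns (preference, confidence). The preference is 1 for any observed
    interaction; the confidence grows linearly with the interaction count.
    alpha=40.0 is an illustrative value, not the one used in this work.
    """
    preference = 1.0 if rating > 0 else 0.0
    confidence = 1.0 + alpha * rating
    return preference, confidence

def dot(u, v):
    """Dot product of two equally sized latent-factor vectors."""
    return sum(a * b for a, b in zip(u, v))

def bpr_pair_loss(user_vec, pos_item_vec, neg_item_vec):
    """BPR loss for one (user, observed item, unobserved item) triple [18].

    The loss is -ln(sigmoid(x_ui - x_uj)): it is small when the observed
    item is scored above the unobserved one.
    """
    diff = dot(user_vec, pos_item_vec) - dot(user_vec, neg_item_vec)
    return -math.log(1.0 / (1.0 + math.exp(-diff)))
```

In this reading, ALS fits every cell of the matrix but trusts observed cells more, whereas BPR only looks at the relative order of an observed and an unobserved item for the same user.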

The CB module (ONTO algorithm) is based on the ChEBI ontology. This module assigns a score S to each item in the test set by calculating the semantic similarity between each item in the train and the test sets, as shown in Fig. 2. For calculating the similarity, we used DiShIn [5], a tool for calculating semantic similarities between the entities represented by an ontology. Semantic similarity measures how close two entities are in a semantic base. When using ontologies, the semantic similarity may be measured, for example, by calculating the shortest path connecting the nodes of two entities. DiShIn supports three similarity metrics: Resnik [19], Lin [13], and Jiang and Conrath [10]. For this work, we used the Lin metric. We intend to test the other metrics in the future.
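To make the Lin metric concrete, the following is a minimal sketch on a toy hierarchy. It assumes the textbook definition, twice the information content (IC) of the most informative common ancestor divided by the sum of the ICs of the two terms, together with an intrinsic IC derived from the number of descendants. It does not reproduce the disjunctive shared information computed by DiShIn, and the term names are invented rather than real ChEBI entries.

```python
import math

# Toy is-a hierarchy (child -> parents). Names are illustrative only.
PARENTS = {
    "root": [],
    "acid": ["root"],
    "alcohol": ["root"],
    "acetic acid": ["acid"],
    "formic acid": ["acid"],
    "ethanol": ["alcohol"],
}

def ancestors(term):
    """Return the term itself plus all of its ancestors."""
    seen = {term}
    stack = [term]
    while stack:
        for parent in PARENTS[stack.pop()]:
            if parent not in seen:
                seen.add(parent)
                stack.append(parent)
    return seen

def information_content(term):
    """Intrinsic IC: -log of the fraction of terms subsumed by `term`."""
    covered = sum(1 for t in PARENTS if term in ancestors(t))
    return -math.log(covered / len(PARENTS))

def lin_similarity(a, b):
    """Lin similarity: 2 * IC(most informative common ancestor) / (IC(a) + IC(b))."""
    common = ancestors(a) & ancestors(b)
    ic_mica = max(information_content(t) for t in common)
    denom = information_content(a) + information_content(b)
    # Two terms with zero IC (e.g. the root with itself) are treated as identical.
    return 2.0 * ic_mica / denom if denom > 0 else 1.0
```

On this toy hierarchy, two acids share the informative ancestor "acid" and obtain a similarity above zero, while an acid and an alcohol only share the root, whose IC is zero.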

Whereas the CF module uses all the ratings from the train set to train the model, the CB module only takes into account the ratings of each user individually. Using DiShIn, we calculate the similarity between each item in the train set and the items in the test set.

Let I1 be the item in the test set, and I2, I3, ..., In the items in the train set, of size m, for a user U. The score S for I1 (SI1) is calculated according to Eq. 1. The ONTO algorithm does not use any real rating of the test items when calculating the score for each item in the test set, so it does not introduce bias in the results.
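The per-user scoring step can be sketched as follows. Since Eq. 1 is not reproduced in this text, the sketch assumes that the score is the plain mean of the similarities between the test item and the m train items of the user; this reading, the function name `onto_score`, and the toy similarity table are assumptions made for illustration.

```python
def onto_score(test_item, user_train_items, similarity):
    """Average similarity between one test item and a user's train items.

    Assumption: Eq. 1 is read as a plain mean over the m train items.
    `similarity` is any callable returning a value for a pair of items.
    """
    m = len(user_train_items)
    if m == 0:
        return 0.0  # a user without train items gives no evidence
    total = sum(similarity(test_item, item) for item in user_train_items)
    return total / m

# Toy symmetric similarity table; the values are invented.
TOY_SIM = {("I1", "I2"): 0.8, ("I1", "I3"): 0.4}

def toy_similarity(a, b):
    return TOY_SIM.get((a, b), TOY_SIM.get((b, a), 0.0))

score_i1 = onto_score("I1", ["I2", "I3"], toy_similarity)
```

Note that only the identities of the train items of the user are used; no rating of the test item enters the computation.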

To obtain a final score (FS) for each item in the test set, we combine the scores from the CF module (SCF) and the CB module (SCB) into a Hybrid recommendation approach, according to Eq. 2. Our goal is to show that combining both modules improves on the results of each module separately.
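The last step of the workflow, combining and sorting, can be sketched as below. Because Eq. 2 is not reproduced in this text, the sketch assumes that the final score is the product of the two module scores; other combinations (for example a weighted sum) would fit the same skeleton. The function name and the toy scores are hypothetical.

```python
def hybrid_ranking(cf_scores, cb_scores):
    """Combine per-item scores and return (item, final score) pairs, best first.

    Assumption: the final score is the product of the CF and CB scores.
    Items missing from the CB scores are given a CB score of 0.0.
    """
    final = {
        item: cf_scores[item] * cb_scores.get(item, 0.0)
        for item in cf_scores
    }
    return sorted(final.items(), key=lambda pair: pair[1], reverse=True)

# Toy scores for three test items of one user (invented values).
cf = {"a": 0.9, "b": 0.8, "c": 0.1}
cb = {"a": 0.2, "b": 0.9, "c": 0.5}
ranking = hybrid_ranking(cf, cb)
```

In the toy example, item "a" has the highest CF score but a low semantic similarity to the history of the user, so item "b" moves to the first position.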

4 Experiments and Results

Experiments. The data used in this work is a subset of a dataset of Chemical Compounds, CheRM, with the format <user,item,rating> [3]. The users are authors of research articles, the items are Chemical Compounds present in ChEBI, and the ratings (implicit) are the number of articles the author wrote about the item. The subset has 102 Chemical Compounds, 1184 authors, 5401 ratings, and a sparsity level of 95.5%. We used a subset because the full CheRM dataset has more than 22,000 items, and calculating the similarity between all the items in real time is a bottleneck.
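The reported sparsity and the size of the similarity bottleneck follow from simple arithmetic, shown here as a short sketch. The helper names are hypothetical, and 22,000 is used as the lower bound stated above for the number of items in the full dataset.

```python
def sparsity(n_users, n_items, n_ratings):
    """Fraction of empty cells in the user-item matrix."""
    return 1.0 - n_ratings / (n_users * n_items)

def pair_count(n_items):
    """Number of unordered item pairs needing a similarity value."""
    return n_items * (n_items - 1) // 2

subset_sparsity = sparsity(n_users=1184, n_items=102, n_ratings=5401)
subset_pairs = pair_count(102)
full_pairs_lower_bound = pair_count(22000)

print(f"sparsity of the subset: {subset_sparsity:.1%}")
print(f"item pairs in the subset: {subset_pairs}")
print(f"item pairs with 22,000 items: {full_pairs_lower_bound}")
```

The subset requires a few thousand pairwise similarities, whereas the full set of items would require hundreds of millions, which is why precomputing or caching the similarities would be needed before scaling up.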

The algorithms tested were ALS, BPR, ONTO, and the hybrids ALS_ONTO and BPR_ONTO. For ALS and BPR we tested different numbers of latent factors, achieving the best results for this data with 150 factors. We used offline methods [25] for evaluating the performance of the algorithms for the top@k, with k varying between 0 and 20, with steps of 1. From the vast range of metrics for evaluating recommender algorithms, we selected Classification Accuracy Metrics (CAMet) and Rank Accuracy Metrics (RAMet). CAMet measure the relevant and irrelevant items recommended in a ranked list. Examples of CAMet are Precision, Recall, and F-Measure. RAMet measure the ability of an algorithm to recommend the items in the correct order. Some well-known RAMet are Mean Reciprocal Rank (MRR), Normalized Discounted Cumulative Gain (nDCG), and Limited Area Under the Curve (lAUC), a variation of AUC [22]. All the selected metrics range between 0 and 1, and values closer to 1 are better. For the segmentation of the dataset, we used a cross-validation approach, splitting users and items into 5 folds. Each iteration had 1/5 of the users and the items as test and 4/5 as train data. All the positive ratings in the test set are considered relevant items. We considered the unrated items as negative ratings, i.e., not relevant for the users.
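For reference, the per-user versions of some of these metrics can be sketched as follows, assuming binary relevance and the common textbook definitions. This is an illustrative sketch rather than the evaluation code of our framework; MRR is obtained by averaging `reciprocal_rank` over all users, and returning 0.0 for k = 0 is a choice made here to avoid a division by zero.

```python
import math

def precision_at_k(ranked, relevant, k):
    """Fraction of the top-k recommended items that are relevant."""
    if k <= 0:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / k

def recall_at_k(ranked, relevant, k):
    """Fraction of the relevant items that appear in the top-k."""
    if k <= 0 or not relevant:
        return 0.0
    hits = sum(1 for item in ranked[:k] if item in relevant)
    return hits / len(relevant)

def reciprocal_rank(ranked, relevant, k):
    """1 / position of the first relevant item in the top-k, or 0.0 if none."""
    for position, item in enumerate(ranked[:k], start=1):
        if item in relevant:
            return 1.0 / position
    return 0.0

def ndcg_at_k(ranked, relevant, k):
    """Binary-relevance nDCG: DCG of the list divided by the ideal DCG."""
    dcg = sum(
        1.0 / math.log2(position + 1)
        for position, item in enumerate(ranked[:k], start=1)
        if item in relevant
    )
    ideal_hits = min(k, len(relevant))
    ideal = sum(1.0 / math.log2(position + 1) for position in range(1, ideal_hits + 1))
    return dcg / ideal if ideal > 0 else 0.0

# Toy ranked list for one user; "b" and "d" are the relevant test items.
ranked_items = ["a", "b", "c", "d"]
relevant_items = {"b", "d"}
```

For the toy list with k = 3, only "b" is retrieved, at the second position, so the reciprocal rank is 0.5 and the recall is 0.5.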

Results. We present the results of this study in Fig. 3, for all the algorithms and all the metrics described previously. As Fig. 3 shows, the ONTO algorithm alone has the lowest results in all metrics. Nevertheless, in metrics such as Precision, Recall and F-measure, it follows the trend of the other algorithms, and when measuring these metrics for the top@20, the results are similar. ONTO has the advantage of being a CB algorithm; therefore, it does not have the problem of cold start for new items. ALS and BPR cannot be used if the item in the test set does not appear in the train set at least once (i.e., at least one author in the train set wrote about this Chemical Compound).

Between ALS and BPR, ALS achieved the best results. Since BPR is an algorithm designed for ranking, we expected it to obtain better results. We believe this is due to the fact that the dataset has a large number of ratings equal to one, so many items have the same relevance and are difficult to rank.

The approach with the best results in most of the metrics is the Hybrid ALS_ONTO. The use of ALS and ONTO algorithms together has a particularly positive effect on the metrics measuring the ranking accuracy (MRR, nDCG and lAUC), especially for MRR, with an increase of 6.7% when comparing the ALS algorithm and the Hybrid ALS_ONTO. This means that ONTO reorders the ALS scores in such a way that the first results in the top@k are more relevant.

These are preliminary results. The study needs to be replicated with the full CheRM dataset, and we need to perform more studies to assess the real impact on the cold start problem. Nevertheless, the results seem promising, on the one hand for improving the relevant recommendations provided (CAMet), and on the other hand for enhancing the position of the most relevant items in a ranked list (RAMet). Our Hybrid algorithm may be applied to other areas, for example, genes, phenotypes, and diseases, provided that an ontology exists for these items.

5 Conclusion

In this work, we presented a Hybrid recommendation model for recommending Chemical Compounds, based on CF algorithms for implicit data and a CB algorithm based on the semantic similarity of the Chemical Compounds using the ChEBI ontology. The obtained results support our hypothesis that using the semantic similarity between the Chemical Compounds can improve the results of state-of-the-art CF algorithms. For future work we intend to increase the size of the dataset, to test other similarity metrics, and to test other alternatives for calculating the final score of the Hybrid algorithm.

References

1. Al-Hassan, M., Lu, H., Lu, J.: A semantic enhanced hybrid recommendation approach: a case study of e-government tourism service recommendation system. Decis. Support Syst. 72, 97–109 (2015)

2. Barros, M., Couto, F.M.: Knowledge representation and management: a linked data perspective. Yearb. Med. Inform. 25(01), 178–183 (2016)

3. Barros, M., Moitinho, A., Couto, F.M.: Using research literature to generate datasets of implicit feedback for recommending scientific items. IEEE Access 7, 176668–176680 (2019)

4. Consortium, G.O.: The gene ontology resource: 20 years and still going strong. Nucleic Acids Res. 47(D1), D330–D338 (2018)

5. Couto, F., Lamurias, A.: Semantic similarity definition. In: Encyclopedia of Bioinformatics and Computational Biology, vol. 1 (2019)

6. Harper, F.M., Konstan, J.A.: The MovieLens datasets: history and context. ACM Trans. Interact. Intell. Syst. (TIIS) 5(4), 1–19 (2015)

7. Hastings, J., et al.: ChEBI in 2016: improved services and an expanding collection of metabolites. Nucleic Acids Res. 44(D1), D1214–D1219 (2015)

8. Hu, Y., Koren, Y., Volinsky, C.: Collaborative filtering for implicit feedback datasets. In: 2008 Eighth IEEE International Conference on Data Mining, pp. 263–272. IEEE (2008)

9. Ishihara, T., Koga, Y., Iwatsuki, Y., Hirayama, F.: Identification of potent orally active factor Xa inhibitors based on conjugation strategy and application of predictable fragment recommender system. Bioorg. Med. Chem. 23(2), 277–289 (2015)

10. Jiang, J.J., Conrath, D.W.: Semantic similarity based on corpus statistics and lexical taxonomy. arXiv preprint cmp-lg/9709008 (1997)

11. Khawar, F., Zhang, N.L.: Conformative filtering for implicit feedback data. In: Azzopardi, L., Stein, B., Fuhr, N., Mayr, P., Hauff, C., Hiemstra, D. (eds.) ECIR 2019. LNCS, vol. 11437, pp. 164–178. Springer, Cham (2019). https://doi.org/10.1007/978-3-030-15712-8_11

12. Liao, I.E., Hsu, W.C., Cheng, M.S., Chen, L.P.: A library recommender system based on a personal ontology model and collaborative filtering technique for English collections. Electron. Libr. 28(3), 386–400 (2010)

13. Lin, D., et al.: An information-theoretic definition of similarity. In: ICML, vol. 98, pp. 296–304. Citeseer (1998)

14. Nilashi, M., Ibrahim, O., Bagherifard, K.: A recommender system based on collaborative filtering using ontology and dimensionality reduction techniques. Expert Syst. Appl. 92, 507–520 (2018)

15. Ortega, F., Bobadilla, J., Gutiérrez, A., Hurtado, R., Li, X.: Artificial intelligence scientific documentation dataset for recommender systems. IEEE Access 6, 48543–48555 (2018)

16. Ostuni, V.C., Di Noia, T., Di Sciascio, E., Mirizzi, R.: Top-N recommendations from implicit feedback leveraging linked open data. In: Proceedings of the 7th ACM Conference on Recommender Systems, pp. 85–92. ACM (2013)

17. Rendle, S., Balby Marinho, L., Nanopoulos, A., Schmidt-Thieme, L.: Learning optimal ranking with tensor factorization for tag recommendation. In: Proceedings of the 15th ACM SIGKDD International Conference on Knowledge Discovery and Data Mining, pp. 727–736. ACM (2009)

18. Rendle, S., Freudenthaler, C., Gantner, Z., Schmidt-Thieme, L.: BPR: Bayesian personalized ranking from implicit feedback. In: Proceedings of the Twenty-Fifth Conference on Uncertainty in Artificial Intelligence, pp. 452–461. AUAI Press (2009)

19. Resnik, P.: Using information content to evaluate semantic similarity in a taxonomy. arXiv preprint cmp-lg/9511007 (1995)

20. Ricci, F., Rokach, L., Shapira, B.: Recommender systems: introduction and challenges. In: Ricci, F., Rokach, L., Shapira, B. (eds.) Recommender Systems Handbook, pp. 1–34. Springer, Boston (2015). https://doi.org/10.1007/978-1-4899-7637-6_1

21. Schriml, L.M., et al.: Human disease ontology 2018 update: classification, content and workflow expansion. Nucleic Acids Res. 47(D1), D955–D962 (2018)

22. Schröder, G., Thiele, M., Lehner, W.: Setting goals and choosing metrics for recommender system evaluations. In: UCERSTI2 Workshop at the 5th ACM Conference on Recommender Systems, Chicago, USA, vol. 23, p. 53 (2011)

23. Seko, A., Hayashi, H., Tanaka, I.: Compositional descriptor-based recommender system for the materials discovery. J. Chem. Phys. 148(24), 241719 (2018)

24. Shambour, Q., Lu, J.: A trust-semantic fusion-based recommendation approach for e-business applications. Decis. Support Syst. 54(1), 768–780 (2012)

25. Shani, G., Gunawardana, A.: Evaluating recommendation systems. In: Ricci, F., Rokach, L., Shapira, B., Kantor, P.B. (eds.) Recommender Systems Handbook, pp. 257–297. Springer, Boston (2011). https://doi.org/10.1007/978-0-387-85820-3_8

26. Sieg, A., Mobasher, B., Burke, R.: Improving the effectiveness of collaborative recommendation with ontology-based user profiles. In: Proceedings of the 1st International Workshop on Information Heterogeneity and Fusion in Recommender Systems, pp. 39–46. ACM (2010)

27. Tarus, J.K., Niu, Z., Mustafa, G.: Knowledge-based recommendation: a review of ontology-based recommender systems for e-learning. Artif. Intell. Rev. 50(1), 21–48 (2017). https://doi.org/10.1007/s10462-017-9539-5

28. Uschold, M., Gruninger, M.: Ontologies: principles, methods and applications. Knowl. Eng. Rev. 11(2), 93–136 (1996)

Copyright information

© 2020 Springer Nature Switzerland AG

Cite this paper

Barros, M., Moitinho, A., Couto, F.M. (2020). Hybrid Semantic Recommender System for Chemical Compounds. In: Jose, J., et al. Advances in Information Retrieval. ECIR 2020. Lecture Notes in Computer Science(), vol 12036. Springer, Cham. https://doi.org/10.1007/978-3-030-45442-5_12

DOI: https://doi.org/10.1007/978-3-030-45442-5_12

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-45441-8

Online ISBN: 978-3-030-45442-5

eBook Packages: Computer Science, Computer Science (R0)