Abstract

In this paper, we present an approach for the specification and execution of complex scientific workflows in cloud-like environments. The approach strives to support scientists during the modeling, deployment and monitoring of their workflows. This work takes advantage of Petri nets, more specifically the reference net formalism, which provides robust modeling and implementation techniques. We also present the implementation of a new tool named RenewGrass, which allows the modeling as well as the execution of image processing workflows from the remote sensing domain. In terms of usability, we provide an easy way to support unskilled researchers during the specification of their workflows. We use the Enhanced Vegetation Index (EVI) workflow as a showcase of the implementation. Finally, we introduce our methodology for moving the current implementation to the Cloud.


1 Introduction

Several applications require the completion of multiple interdependent tasks; the description of a complex activity involving such a set of tasks is known as a workflow. The Workflow Management Coalition (WfMC) defined a workflow as “the automation of business process, in whole or part, during which documents, information or tasks are passed from one participant to another for action, according to a set of procedural rules” [6]. Traditional workflow management systems (WfMS) were conceived for the business domain. They were later adopted for scientific applications in various fields such as astronomy, bioinformatics and meteorology. Ludäscher et al. [9] define scientific workflows as “networks of analytical steps that may involve, e.g., database access and querying steps, data analysis and mining steps, and many other steps including computationally intensive jobs on high performance cluster computers”.

Yet high-throughput and long-running workflows are often composed of tasks that need to be mapped to distributed resources in order to access, manage and process large amounts of data. These resources are often limited in supply and fail to meet the increasing demands of scientific applications. Cloud computing is an emerging technology for the execution of resource-intensive workflows that integrates large-scale, distributed and heterogeneous computing and storage resources. Cloud computing is therefore of high interest for scientific workflows with high compute or storage requirements. Furthermore, workflow concepts are critical features of a successful Cloud strategy. These concepts cover many steps of the deployment process, for instance graphical workflow specification, data/task/user management and workflow monitoring. However, existing workflow architectures are not directly adapted to Cloud computing, and workflow management systems are not integrated with Cloud systems [10]. More support is needed at both the conceptual and the technical level.

At the conceptual level, we provide powerful modeling techniques based on Petri nets. We have implemented RenewGrass, a user-friendly tool for building scientific workflows. RenewGrass integrates features of the Geographic Resources Analysis Support System (GRASS GIS, Grass for short). Its purpose is to support scientists in (i) specifying their workflows and (ii) controlling their execution, by providing appropriate modeling patterns that address specific functions. These functions can be provided by services hosted on-premise or in the Cloud. With reference to the workflow life-cycle, the tool supports both the modeling and the execution steps. The application field is the Geographical Information System (GIS) domain, especially the processing of satellite images.

Furthermore, we discuss issues and migration patterns for moving applications and application data to the Cloud. Based on these patterns, we propose an agent-based architecture to integrate the current implementation in Cloud-like environments, i.e., the components making up the workflow management system are mapped to services provided by specific agents. In general, agent concepts are employed to improve Cloud service discovery, composition and Service Level Agreement (SLA) management. The proposed architecture is based on the Mulan/Capa framework and follows the Paose approach. The objective is that agents perform the Cloud management functionalities such as brokering, workflow submission and instance control. Technically, the OpenStack framework was adopted for the creation and management of the Cloud instances.

The remainder of this paper is organized as follows: Sect. 2 gives an overview of the concepts, tools and techniques addressed in this paper as well as related work. Section 3 introduces the RenewGrass tool and its architecture, and presents the EVI workflow for Landsat Thematic Mapper (TM) imagery as a showcase of the implementation. Section 4 introduces our approach for moving the execution of the workflow tasks to the Cloud. Finally, Sect. 5 gives a summary as well as a plan for future work.

2 Background

This section gives a brief overview of the concepts, tools and technologies addressed in this paper, namely the reference net formalism and the modeling/simulation tool Renew. Related work is also discussed.

2.1 Reference Nets

At the modeling level, we use a special kind of Petri nets called reference nets [7]. Petri nets have proven effective for modeling complex and distributed systems. The main advantage of reference nets lies in the use of Java inscriptions on transitions, which considerably narrows the gap between specification and implementation. Reference nets are object-oriented high-level Petri nets based on the nets-within-nets formalism introduced by [12], which allows tokens to be nets themselves. They extend Petri nets with dynamic net instances, net references, and dynamic transition synchronization through synchronous channels. Reference nets consist of places, transitions and arcs. Input and output arcs behave as in ordinary Petri nets. Tokens can be of any type of the Java programming language. In contrast to P/T nets, reference nets provide supplementary net elements that increase the modeling power: virtual places, declarations and arc types. Places are typed, and transitions can hold expressions, actions, guards, etc. Firing a transition can also create a new instance of a subnet, similar to the creation of object instances in object-oriented programming. This allows a hierarchical nesting of nets, which is helpful for building complex systems.
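To make the firing rule concrete, the following Python sketch models a net instance whose places hold arbitrary objects, and a transition with a guard and an action, mirroring the role Java inscriptions play in reference nets. It is a simplified illustration under our own assumptions, not Renew's API: each transition has exactly one input and one output arc, synchronous channels are not modeled, and the class names NetInstance and Transition are hypothetical. The usage part shows a transition whose action creates a new subnet instance that then becomes a token of the outer net (nets-within-nets).

```python
from dataclasses import dataclass, field
from typing import Any, Callable, Dict, List, Optional


@dataclass
class NetInstance:
    """A net instance: named places, each holding a list of arbitrary objects (tokens)."""
    name: str
    places: Dict[str, List[Any]] = field(default_factory=dict)

    def put(self, place: str, token: Any) -> None:
        self.places.setdefault(place, []).append(token)


@dataclass
class Transition:
    """A transition with one input arc, one output arc, a guard and an action."""
    name: str
    inp: str
    out: str
    guard: Callable[[Any], bool] = lambda tok: True
    action: Callable[[Any], Any] = lambda tok: tok

    def enabled_token(self, net: NetInstance) -> Optional[Any]:
        # The transition is enabled if some token in the input place satisfies the guard.
        for tok in net.places.get(self.inp, []):
            if self.guard(tok):
                return tok
        return None

    def fire(self, net: NetInstance) -> bool:
        # Consume the token, apply the action and put the result into the output place.
        tok = self.enabled_token(net)
        if tok is None:
            return False
        net.places[self.inp].remove(tok)
        net.put(self.out, self.action(tok))
        return True


# Usage: the action creates a new subnet instance, which becomes a token of the outer net.
outer = NetInstance("outer")
outer.put("p_in", "scene_01")
create = Transition("create", "p_in", "p_out",
                    guard=lambda t: isinstance(t, str),
                    action=lambda t: NetInstance("sub_" + t, {"start": [t]}))
create.fire(outer)
```

Keeping the guard and action as plain callables is what lets the same transition structure carry arbitrary behavior, which is the point of inscriptions.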

2.2 Renew

The REference NEts Workshop (Renew; Footnote 1) is a graphical tool for creating, editing and simulating reference nets. It combines the nets-within-nets paradigm with the implementation power of Java. With Renew it is possible to draw and simulate both Petri nets and reference nets. When a simulation starts, a net instance is created and can be viewed in a separate window while its active transitions fire, so that users can follow the firing sequences. The simulation can run in single-step mode, in which users advance one transition firing at a time. Renew also offers the possibility to set breakpoints on places and transitions to halt the simulation. By changing the compiler, Renew can also simulate P/T nets, timed Petri nets, workflow nets, etc.
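Single-step simulation and transition breakpoints can be illustrated with a plain P/T net. The sketch below is our own simplification, not how Renew is implemented: Renew chooses among enabled transitions nondeterministically, whereas this code deterministically fires the alphabetically first enabled transition, and only breakpoints on transitions are modeled. The transition names import, evi and export are hypothetical.

```python
from typing import Dict, List, Set, Tuple

# A P/T net: transition name -> (input places, output places); every arc has weight 1.
Net = Dict[str, Tuple[List[str], List[str]]]


def enabled(net: Net, marking: Dict[str, int]) -> List[str]:
    """Transitions whose input places all hold a token, sorted for a deterministic choice."""
    return sorted(t for t, (ins, _) in net.items()
                  if all(marking.get(p, 0) >= 1 for p in ins))


def fire(net: Net, marking: Dict[str, int], t: str) -> None:
    """Consume one token per input arc and produce one token per output arc."""
    ins, outs = net[t]
    for p in ins:
        marking[p] -= 1
    for p in outs:
        marking[p] = marking.get(p, 0) + 1


def run(net: Net, marking: Dict[str, int], breakpoints: Set[str],
        max_steps: int = 100) -> List[str]:
    """Fire one transition per step; stop on deadlock or after a breakpoint transition fires."""
    trace: List[str] = []
    for _ in range(max_steps):
        candidates = enabled(net, marking)
        if not candidates:
            break
        t = candidates[0]
        fire(net, marking, t)
        trace.append(t)
        if t in breakpoints:
            break  # halt here; calling run() again resumes from the current marking
    return trace


net: Net = {"import": (["raw"], ["imported"]),
            "evi": (["imported"], ["result"]),
            "export": (["result"], ["done"])}
marking = {"raw": 1}
trace = run(net, marking, breakpoints={"evi"})  # trace == ['import', 'evi']
```

Because the marking is kept outside the simulator, resuming after a breakpoint is simply another call to run with the same marking.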

2.3 Related Work

Before the emergence of Cloud technology, significant research projects dealt with the development of distributed and scientific workflow systems based on the grid paradigm. A workflow enactment service can be built on top of low-level grid middleware (e.g., Globus Toolkit (Footnote 2), UNICORE (Footnote 3) and Alchemi (Footnote 4)), through which the workflow management system invokes services provided by grid resources [13]. At both build time and run time, the state of the resources and applications can be retrieved from grid information services. Many grid workflow management systems are in use, such as the representative projects ASKALON (Footnote 5), Pegasus (Footnote 6), Taverna (Footnote 7), Kepler (Footnote 8), Triana (Footnote 9) and Swift [14]. Most of these projects have been investigating the adaptation of their architectures to include Cloud technology. For instance, the Elastic Compute Cloud (EC2) module has been implemented to make Kepler support Amazon Cloud services. Launched in 2012, Amazon Simple Workflow (SWF) (Footnote 10) is an orchestration service for building scalable applications. It maintains the execution state of the workflow in a consistent and reliable way. It allows the various processing steps of an application running on one or more systems to be structured as a set of tasks. These systems can be Cloud-based, on-premise, or both.

3 RenewGrass: A Tool for Building Geoprocessing Workflows

In this section, we introduce a new tool called RenewGrass. The objective is to extend Renew with geoprocessing capabilities. Since version 1.7, Renew has been built on a highly sophisticated plug-in architecture, which allows additional functionality to be integrated through the interfaces of Renew components without changing the core architecture.

To date, Renew serves as a modeling/simulation tool in different teaching projects; however, its application domain mostly concerns business workflows. Through the integration of RenewGrass in Renew, we aim to:

-

extend Renew to be adapted for scientific workflow modeling and execution

-

provide inexperienced users with modeling patterns that focus on image processing

-

exploit and integrate the power of the Grass GIS, so that a variety of image processing workflows can be implemented

-

reduce the gap between the specification of the workflow and its implementation

-

integrate Cloud services for mapping time-consuming and data-intensive workflows

3.1 Integration of RenewGrass in Renew

In this section, we present RenewGrass, which has been successfully integrated in Renew. The main functionality of RenewGrass is to support the modeling and execution of image processing workflows. Figure 1 shows a simplified view of the position of the new tool in Renew. The Workflow and WFNet plug-ins are additional plug-ins, which are required when workflow management functionalities such as log-in or task management are needed. As shown in Fig. 1, RenewGrass is built on top of JGrasstools (Footnote 11), which was adapted for Renew. This allows Grass core commands to be invoked directly from Petri net transitions (see Fig. 2). The current implementation of the tool supports local use only, i.e., Renew, Grass GIS and the data are all hosted locally.
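The idea of invoking Grass commands from transition inscriptions can be sketched as a thin wrapper that turns a module name and its parameters into a command line. The following Python sketch is an assumption for illustration only: RenewGrass itself builds on the Java library JGrasstools, and the helper names grass_command and transition_action, as well as the raster names nir, red and blue, are hypothetical. The command is only constructed, not executed, so the sketch runs without a Grass installation; inside a Grass session, the list could be passed to subprocess.run.

```python
import shlex
from typing import Dict, List


def grass_command(module: str, params: Dict[str, str], flags: str = "",
                  overwrite: bool = False) -> List[str]:
    """Build the argument list for a Grass module call, e.g. r.mapcalc expression=..."""
    argv = [module]
    if flags:
        argv.append("-" + flags)          # single-letter module flags, e.g. -g
    argv += [f"{key}={value}" for key, value in params.items()]
    if overwrite:
        argv.append("--overwrite")        # needed to replace existing output maps
    return argv


def transition_action(expression: str) -> List[str]:
    """Hypothetical helper a transition inscription could call for a map-algebra step."""
    return grass_command("r.mapcalc", {"expression": expression}, overwrite=True)


cmd = transition_action("evi = 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)")
print(shlex.join(cmd))  # shell-quoted form, e.g. for logging
```

Returning an argument list rather than a single string avoids shell quoting problems with map-algebra expressions that contain spaces and operators.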

3.2 Use Case

As a proof of concept, RenewGrass was used to implement the Enhanced Vegetation Index (EVI) for a satellite image taken from LANDSAT-TM7. The EVI is a numerical indicator computed from the red, near-infrared and blue bands. It is an optimized index designed to enhance the vegetation signal with improved sensitivity in high-biomass regions. In the expression below, nirchan, redchan and bluechan denote the near-infrared, red and blue channels:

2.5 * (nirchan - redchan) / (nirchan + 6.0 * redchan - 7.5 * bluechan + 1.0)

Figure 2 shows the Petri net model corresponding to the EVI workflow. For clarity, several steps of the original workflow have been omitted. Other vegetation indexes or image processing workflows can be implemented following the same procedure.
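To check the EVI expression numerically, the following Python sketch evaluates it pixel by pixel on plain lists standing in for the three bands. It assumes reflectance values already scaled to [0, 1] and returns NaN where the denominator is zero; this null handling is our own choice, not necessarily what the Grass-based workflow does.

```python
from typing import List


def evi(nir: float, red: float, blue: float) -> float:
    """EVI = 2.5 * (NIR - Red) / (NIR + 6.0 * Red - 7.5 * Blue + 1.0)."""
    denom = nir + 6.0 * red - 7.5 * blue + 1.0
    if denom == 0.0:
        return float("nan")  # undefined pixel (our convention, comparable to a null cell)
    return 2.5 * (nir - red) / denom


def evi_band(nir: List[float], red: List[float], blue: List[float]) -> List[float]:
    """Apply the formula pixel by pixel to three co-registered bands of equal length."""
    return [evi(n, r, b) for n, r, b in zip(nir, red, blue)]


print(round(evi(0.5, 0.1, 0.05), 4))  # 2.5 * 0.4 / 1.725, prints 0.5797
```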

4 Cloud-RenewGrass: A Cloud Migration Approach

RenewGrass has been successfully tested in a local environment, where all required components (Renew, Grass GIS and the data) were hosted on-premise. However, as soon as the number of tasks increases and the data become large, we face computing and storage issues due to insufficient resources at the local site. This section presents our vision for deploying the current implementation of the RenewGrass tool onto Cloud services. First, different possibilities for Cloud-enabling an application in general are briefly illustrated. These possibilities are formulated as patterns. The illustration is based on the work of [1, 5], which strives to answer questions such as: Where to enact the processes? Where to execute the activities? Where to store the data? The entities taken into account are thus (1) the process engine (responsible for executing and monitoring the activities), (2) the activities to be executed by the workflow and (3) the data. Next, we propose an architecture that introduces a new pattern, together with an appropriate methodology to enable remote execution in the Cloud.

4.1 Migration Patterns

Moving an existing application to the Cloud should be based on a solid strategy. Hosting the business process management system (or WfMS), or a part of it, in the Cloud raises a series of concerns about data security and system performance. For example, Cloud users could lose control over their own data in a fully Cloud-based solution. Activities that are not compute-intensive can be executed on-premise rather than moved to the Cloud, since such a transfer can be time- and cost-consuming because of the pay-per-use model and the nature of the workflow tasks.

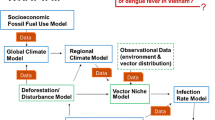

In the following, the patterns from [1, 5] are briefly introduced. The first pattern describes the traditional scenario where all components of the workflow system are hosted at the user side (on-premise). The second pattern represents the case where users already have a workflow engine but the application contains compute- or data-intensive activities, which are moved to the Cloud to acquire more capacity and better performance. The third pattern describes a situation where end users do not have a workflow engine, so they use a Cloud-based workflow engine provided on demand. In that case, workflow designers can specify transfer requirements for activity execution and data storage; for example, sensitive data and non-compute-intensive activities can be hosted on-premise, while compute-intensive activities and non-sensitive data can be moved to the Cloud [5]. The last pattern presents a situation where all components are hosted in the Cloud and typically accessed through a Web interface. The advantage is that users do not need to install and configure any software on their side. By analogy with our approach, Renew is the process engine and the activities are the geoprocessing tasks (performed by the Grass services) operating on the satellite images (data). The data and the Grass GIS can be either on-premise or hosted in the Cloud. Based on these elements and the illustration above, Fig. 3 gives an overview of the various approaches (patterns) for designing our workflow system based on Cloud technology.

Fig. 3. Patterns for Cloud-based workflow systems (adapted from [5])

We have noticed that [1, 5] do not address all possible situations. For instance, the following situation has not been addressed: the process engine is available on the user side, but due to circumstances such as an internal failure or insufficient compute or storage resources, remote process engines need to be integrated and invoked. Figure 4 sketches this scenario. Our solution consists of transferring the data and executing the process with another process engine. This is discussed in the following sections.
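The placement rules behind these patterns can be summarized in a few lines of Python. This is a sketch under stated assumptions: the Site and Activity types are hypothetical, activity placement follows the rule cited from [5] (compute-intensive activities to the Cloud, the others on-premise), data placement follows its sensitivity, and choose_engine captures the additional pattern in which a remote engine takes over when the local one cannot run the workflow. Conflicts, such as a compute-intensive activity that needs sensitive data, are deliberately left out.

```python
from dataclasses import dataclass
from enum import Enum


class Site(Enum):
    ON_PREMISE = "on-premise"
    CLOUD = "cloud"


@dataclass(frozen=True)
class Activity:
    name: str
    compute_intensive: bool
    sensitive_data: bool


def place_activity(a: Activity) -> Site:
    """Compute-intensive activities go to the Cloud; the others stay on-premise."""
    return Site.CLOUD if a.compute_intensive else Site.ON_PREMISE


def place_data(a: Activity) -> Site:
    """Sensitive data stays on-premise; non-sensitive data may be moved to the Cloud."""
    return Site.ON_PREMISE if a.sensitive_data else Site.CLOUD


def choose_engine(local_ok: bool) -> Site:
    """Additional pattern: use a remote engine when the local one fails or lacks resources."""
    return Site.ON_PREMISE if local_ok else Site.CLOUD


# Hypothetical activities of a geoprocessing workflow.
tasks = [Activity("evi", compute_intensive=True, sensitive_data=False),
         Activity("export_report", compute_intensive=False, sensitive_data=True)]
plan = {a.name: (place_activity(a), place_data(a)) for a in tasks}
```

In this encoding, the four patterns of [1, 5] correspond to different fixed answers for the engine, activities and data, while the additional pattern makes the engine location itself depend on the local situation.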

4.2 Architecture

Figure 5 shows the architecture for integrating the current implementation into a Cloud system. Since RenewGrass is already implemented and successfully integrated in Renew (see Sect. 3), our current efforts are dedicated to moving the execution of the geoprocessing tasks to the Cloud and to providing an interface for invoking these services directly from workflow models.

In our work, we follow an agent-based approach, i.e., many component functionalities are performed by dedicated agents. The role of each agent used in the approach is summarized below.

-

1.

Workflow Holder Agent: The Workflow Holder is the entity that specifies the workflow and consequently holds the generated Petri net models. This entity can be either a human or a software component. The image processing workflow is specified using RenewGrass, which provides a modeling palette as well as ready-made modeling blocks.

-

2.

Cloud Portal Agent: provides the Workflow Holder Agent with a Web portal as the primary interface to the whole system. It contains two components: the Cloud Manager and the Workflow Submission Interface. The latter provides a Web interface through which workflow holders upload all files necessary to execute the workflow, i.e., the workflow specification (Renew formats, Footnote 12) and the input files (images). It also receives notifications from the Cloud Broker Agent about the status of the workflow or the availability of the Cloud provider. The role of the Cloud Manager is to control the Cloud instances (start, stop or suspend).

-

3.

Cloud Broker Agent: It is a critical component of the architecture, since it is responsible for (i) the evaluation and selection of the Cloud providers that fit the workflow’s requirements (e.g., data volume and computing intensity) and (ii) the mapping of the workflow tasks. Both activities require information about the Cloud providers, which is provided by the Cloud Repository Agent.

-

4.

Cloud Repository Agent: The Cloud repository registers information about the Cloud providers and the state of their services. This information is saved in a database and is constantly updated, since it is required by the Cloud Broker Agent. To avoid failure scenarios (repository down, loss of data), we use distributed databases, which provide high availability and fault-tolerant persistence.

-

5.

Cloud Provider Agent: The role of this agent is to control the instances and to manage the execution of the tasks. Cloud providers must regularly send status updates to the Cloud Repository Agent. The status concerns both the instances and the services (Grass services).

Regarding the Cloud Broker Agent, the evaluation and selection of Cloud providers are critical processes for the Workflow Holders. In Cloud computing, various factors impact the evaluation and selection of Cloud providers [4, 8], such as computational capacity, IT security and privacy, reliability and trustworthiness, customization degree and flexibility/scalability, manageability/usability and customer service, and the geolocation of Cloud infrastructures. In our work, the brokering factors are limited to computational capacity and customization degree.
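A broker restricted to these two factors could filter the repository records and rank the remaining providers as in the following Python sketch. The record fields (free vCPUs and storage as a proxy for computational capacity, installed software as a proxy for customization degree) and the ranking by spare vCPUs are our own assumptions for illustration, not the selection algorithm of the architecture; the provider names are hypothetical.

```python
from dataclasses import dataclass
from typing import FrozenSet, List


@dataclass
class ProviderStatus:
    """One Cloud repository record, refreshed by the corresponding Cloud Provider Agent."""
    name: str
    available: bool
    free_vcpus: int                 # proxy for computational capacity
    free_storage_gb: int
    software: FrozenSet[str]        # proxy for customization degree


@dataclass
class Requirements:
    vcpus: int
    storage_gb: int
    software: FrozenSet[str]


def broker_offers(repo: List[ProviderStatus], req: Requirements) -> List[str]:
    """Keep providers that are up and satisfy capacity and software needs; rank by spare vCPUs."""
    fits = [p for p in repo
            if p.available
            and p.free_vcpus >= req.vcpus
            and p.free_storage_gb >= req.storage_gb
            and req.software <= p.software]  # subset test on frozensets
    fits.sort(key=lambda p: p.free_vcpus, reverse=True)
    return [p.name for p in fits]


full = frozenset({"renew", "grass", "wps"})
repo = [ProviderStatus("provider-a", True, 8, 500, full),
        ProviderStatus("provider-b", True, 32, 100, frozenset({"renew", "grass"})),
        ProviderStatus("provider-c", False, 64, 1000, full),
        ProviderStatus("provider-d", True, 16, 800, full)]
req = Requirements(vcpus=4, storage_gb=200, software=frozenset({"renew", "grass"}))
offers = broker_offers(repo, req)  # ['provider-d', 'provider-a']
```

Here provider-b is dropped for lack of storage and provider-c because it reports itself unavailable, which is why up-to-date status records in the repository matter.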

4.3 Cloud Configuration

Concerning the customization degree, there are some requirements for a successful deployment onto the Cloud. In general, a common procedure to deploy applications onto Cloud services consists of these two main steps:

-

1.

Set up the Environment: this mainly consists of provisioning Cloud instances (Footnote 13) and properly configuring the required software. The environment variables are essential, since they differ from those of the local installation, for example the Java configuration of the Web server.

-

2.

Deploy the Application: this consists of customizing the Cloud instance with the appropriate software. For our work, Renew and the Grass GIS must be configured correctly, especially the database and the installation paths.
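Part of this set-up can be verified before the workflow is submitted. The Python sketch below checks that a set of environment variables is defined and points to existing directories. The variable list is an assumption: JAVA_HOME is a common Java convention and GISBASE is the variable Grass uses for its installation directory, while RENEW_HOME is a hypothetical name for the Renew installation path.

```python
import os
from typing import List, Mapping, Sequence

# Assumed variable names; RENEW_HOME in particular is hypothetical.
REQUIRED_VARS = ("JAVA_HOME", "GISBASE", "RENEW_HOME")


def missing_settings(env: Mapping[str, str] = os.environ,
                     required: Sequence[str] = REQUIRED_VARS) -> List[str]:
    """Return the required variables that are unset or do not point to an existing directory."""
    missing = []
    for var in required:
        path = env.get(var, "")
        if not path or not os.path.isdir(path):
            missing.append(var)
    return missing


problems = missing_settings()
if problems:
    print("instance not ready, check:", ", ".join(problems))
```

Passing the environment as a parameter keeps the check testable and lets it be run against the configuration of a remote instance, not only the local process.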

Furthermore, the Grass commands can be invoked in different ways: either through a wrapper, as in the original implementation of RenewGrass, or as Web services. For the latter, we follow a Web-based approach conforming to the Open Geospatial Consortium (OGC) Web Processing Service (WPS) interface specification, so that the Grass GIS functionalities are provided as Web services instead of a desktop application. To achieve this, we chose 52North (Footnote 14) as the WPS server together with the wps-grass-bridge (Footnote 15).
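For the Web service variant, a client could build a WPS 1.0.0 Execute request in key-value-pair (KVP) encoding, as in the following Python sketch. The endpoint URL, the process identifier r.mapcalc and its input name are hypothetical; the identifiers actually exposed by the wps-grass-bridge and the inputs each process expects should be taken from the server's DescribeProcess response. Only literal inputs are handled here.

```python
from typing import Dict
from urllib.parse import quote, urlencode

# Hypothetical endpoint; adapt host and path to the actual 52North deployment.
WPS_ENDPOINT = "http://wps.example.org/wps/WebProcessingService"


def execute_url(identifier: str, inputs: Dict[str, str],
                endpoint: str = WPS_ENDPOINT) -> str:
    """Build a WPS 1.0.0 Execute request in KVP encoding (literal inputs only)."""
    # DataInputs lists name=value pairs separated by semicolons.
    data_inputs = ";".join(f"{name}={value}" for name, value in inputs.items())
    query = urlencode({"service": "WPS",
                       "version": "1.0.0",
                       "request": "Execute",
                       "identifier": identifier,
                       "DataInputs": data_inputs},
                      quote_via=quote)  # percent-encode spaces, '=' and ';' inside values
    return f"{endpoint}?{query}"


# Hypothetical process identifier and input name.
url = execute_url("r.mapcalc",
                  {"expression": "evi = 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0)"})
```

For large raster inputs, the XML (POST) form of Execute with references to the image files is usually more practical than KVP.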

4.4 Execution Scenario

Considering the proposed architecture and the agent roles described above, a typical deployment scenario is broken into the following steps:

-

1.

Workflow holders specify their image processing workflows (data and control flow) using Petri nets, for example the EVI workflow (see Sect. 3).

-

2.

They send a request to the Cloud Broker via the Cloud Portal.

-

3.

The Cloud Broker checks for available Cloud providers, which provide geoprocessing tools (Grass GIS). This information is retrieved from the Cloud Repository.

-

4.

The Cloud Broker sends a list of offers to the Workflow Holder (through the Cloud Portal), who can accept or reject it.

-

5.

If the offer is accepted, the Workflow Holder submits the workflow specification (.rnw + .sns) to the selected Cloud Provider.

-

6.

The selected Cloud Provider launches a customized Cloud instance with Renew and Grass GIS running in the background.

-

7.

After the simulation/execution of the workflow, the results (in our prototype, the calculated NDVI value) are transmitted to the Workflow Holder through the Cloud Portal.
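The control flow of steps (3) to (7), including the repetition after a rejected offer, can be sketched as a loop over broker rounds. In this Python sketch the three callables are hypothetical stand-ins for the agents' interactions, and the upper bound on rounds is our own addition to keep the loop finite; the scenario itself only says that the process repeats until the Workflow Holder is satisfied.

```python
from typing import Callable, List, Optional


def run_scenario(get_offers: Callable[[], List[str]],
                 decide: Callable[[List[str]], Optional[str]],
                 execute: Callable[[str], str],
                 max_rounds: int = 5) -> Optional[str]:
    """Repeat steps (3)-(4) until an offer is accepted, then perform steps (5)-(7)."""
    for _ in range(max_rounds):
        offers = get_offers()        # step (3): broker consults the repository
        choice = decide(offers)      # step (4): provider name if accepted, None if rejected
        if choice is not None:
            return execute(choice)   # steps (5)-(7): submit, launch instance, return results
    return None                      # no acceptable offer within max_rounds


# Usage: the holder rejects the first list and accepts once provider-b is offered.
rounds = iter([["provider-a"], ["provider-a", "provider-b"]])
result = run_scenario(lambda: next(rounds),
                      lambda offers: "provider-b" if "provider-b" in offers else None,
                      lambda provider: f"results from {provider}")
```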

Rejecting an offer does not immediately end the execution process. Since the list transmitted by the Cloud Broker is constantly updated, new Cloud providers that fit the requirements may become available. Therefore, the process iterates from step (3) until the Workflow Holder is satisfied. Regarding steps (5) and (6), Renew supports starting a simulation from the command line using the command startsimulation (net system) (primary net) [-i]. The parameters of this command have the following meaning:

-

Net system: The .sns file.

-

Primary net: The name of the net, of which a net instance shall be opened when the simulation starts.

-

-i: If you set this optional flag, then the simulation is initialized only, that is, the primary net instance is opened, but the simulation is not started automatically.
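When steps (5) and (6) are automated on a Cloud instance, the command line can be assembled programmatically. The Python sketch below only builds the argument list following the syntax above; how the command reaches Renew (the launcher prefix, for instance a java -jar call on Renew's loader) depends on the installation and is left as a hypothetical parameter, and the file name evi.sns and net name evi are placeholders.

```python
import shlex
from typing import List, Sequence


def startsimulation_cmd(net_system: str, primary_net: str, init_only: bool = False,
                        launcher: Sequence[str] = ()) -> List[str]:
    """Build: [launcher ...] startsimulation <net system> <primary net> [-i]."""
    if not net_system.endswith(".sns"):
        raise ValueError("the net system is expected to be a .sns file")
    cmd = list(launcher) + ["startsimulation", net_system, primary_net]
    if init_only:
        cmd.append("-i")  # only open the primary net instance; do not start the simulation
    return cmd


print(shlex.join(startsimulation_cmd("evi.sns", "evi", init_only=True)))
# prints: startsimulation evi.sns evi -i
```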

In a future paper, we will give a first evaluation of the presented work and report on the progress of the implementation of the components presented in this section. Furthermore, we have implemented other image processing workflows such as the Normalized Difference Vegetation Index (NDVI).
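For comparison with the EVI expression in Sect. 3, the NDVI is conventionally defined as (NIR - Red) / (NIR + Red). A minimal Python sketch, assuming reflectances in [0, 1] and returning NaN for a zero denominator (our own convention):

```python
def ndvi(nir: float, red: float) -> float:
    """NDVI = (NIR - Red) / (NIR + Red); lies in [-1, 1] for non-negative reflectances."""
    total = nir + red
    if total == 0.0:
        return float("nan")  # undefined pixel (our own convention)
    return (nir - red) / total


print(round(ndvi(0.5, 0.1), 4))  # 0.4 / 0.6, prints 0.6667
```

Unlike the EVI, the NDVI needs no blue band and no coefficients, which makes it a simpler first workflow to port.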

5 Conclusions and Future Work

In this paper, we first presented the implementation of a geoprocessing tool named RenewGrass for Renew. This tool extends Renew to support another kind of workflow (scientific workflows) apart from business workflows. The application domain of RenewGrass is remote sensing, especially image processing. To this end, we provide scientists with a palette of processing functionalities based on the Grass GIS. Furthermore, we discussed the extension of the current work through the integration of Cloud technology. For this purpose, we introduced migration patterns and presented our architecture for the deployment of workflows onto Cloud providers.

Future work takes two directions. First, we will improve RenewGrass by providing more functionality, especially for inexperienced users. From our first experience with the implemented tool, we found that several geoprocessing workflows involve repetitive tasks that need to be automated. These repetitive tasks can be grouped into modeling blocks and reused when necessary. To enable this, we are exploiting the well-known Net Components introduced by [2], which provide predefined modeling structures. The modeling of image processing workflows will thus become more straightforward.

The next step is to investigate the possibilities of integrating RenewGrass within an agent-based approach using the Mulan/Capa framework [3, 11]. This framework allows building agent-based applications following the Paose approach. The idea is that the components described in Sect. 4 will be integrated into an agent-based application, which is currently being developed in the context of a teaching project. The first functionality we are investigating is Cloud brokering, which consists of selecting the best Cloud provider to perform the workflow tasks.

Notes


- 10.

aws.amazon.com/swf.


- 12.

Renew supports saving in various file formats (XML, .rnw, .sns, etc.).

- 13.

The Cloud instances must be customized a priori, i.e., they need to have the Grass GIS as back-end, the WPS server and Renew installed. We assume that the Cloud storage is a service configured by the Cloud provider itself.


References

[1] Anstett, T., Leymann, F., Mietzner, R., Strauch, S.: Towards BPEL in the cloud: exploiting different delivery models for the execution of business processes. In: 2009 World Conference on Services - I, pp. 670–677 (2009)

[2] Cabac, L.: Net components: concepts, tool, praxis. In: Moldt, D. (ed.) PNSE 2009, Proceedings, Technical reports Paris 13, pp. 17–33. Université Paris 13, 99, avenue Jean-Baptiste Clément, 93 430 Villetaneuse, June 2009

[3] Duvigneau, M., Moldt, D., Rölke, H.: Concurrent architecture for a multi-agent platform. In: Giunchiglia, F., Odell, J.J., Weiß, G. (eds.) Proceedings of the AOSE 2002, Bologna, pp. 147–159. ACM Press, July 2002

[4] Haddad, C.: Selecting a cloud platform: a platform as a service scorecard. Technical report, WSO2 (2011). http://wso2.com/download/wso2-whitepaper-selecting-a-cloud-platform.pdf. Accessed 2 December 2014

[5] Han, Y.-B., Sun, J.-Y., Wang, G.-L., Li, H.-F.: A cloud-based BPM architecture with user-end distribution of non-compute-intensive activities and sensitive data. J. Comput. Sci. Technol. 25(6), 1157–1167 (2010)

[6] Hollingsworth, D.: Workflow Management Coalition - the workflow reference model. Technical report, Workflow Management Coalition, January 1995. http://www.wfmc.org/standards/model.htm

[7] Kummer, O.: Referenznetze. Logos Verlag, Berlin (2002)

[8] Patt, R., Badger, L., Grance, T., Voas, C.J.: Recommendations of the National Institute of Standards and Technology. Technical report, NIST (2011). http://csrc.nist.gov/publications/nistpubs/800-146/sp800-146.pdf

[9] Ludäscher, B., Altintas, I., Berkley, C., Higgins, D., Jaeger, E., Jones, M., Lee, E.A., Tao, J., Zhao, Y.: Scientific workflow management and the Kepler system: research articles. Concurrency Comput. Pract. Experience 18(10), 1039–1065 (2006)

[10] Pandey, S., Karunamoorthy, D., Buyya, R.: Workflow Engine for Clouds. Wiley, Hoboken (2011)

[11] Rölke, H.: Modellierung von Agenten und Multiagentensystemen - Grundlagen und Anwendungen. Agent Technology - Theory and Applications, vol. 2. Logos Verlag, Berlin (2004)

[12] Valk, R.: Petri nets as token objects - an introduction to elementary object nets. In: Desel, J., Silva, M. (eds.) ICATPN 1998. LNCS, vol. 1420, pp. 1–25. Springer, Berlin (1998)

[13] Yu, J., Buyya, R.: A taxonomy of workflow management systems for grid computing. J. Grid Comput. 3(3–4), 171–200 (2005)

[14] Zhao, Y., Hategan, M., Clifford, B., Foster, I., von Laszewski, G., Nefedova, V., Raicu, I., Stef-Praun, T., Wilde, M.: Swift: fast, reliable, loosely coupled parallel computation. In: 2007 IEEE Congress on Services, pp. 199–206, July 2007


Copyright information

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Bendoukha, S., Moldt, D., Bendoukha, H. (2015). Building Cloud-Based Scientific Workflows Made Easy: A Remote Sensing Application. In: Marcus, A. (eds) Design, User Experience, and Usability: Users and Interactions. DUXU 2015. Lecture Notes in Computer Science, vol 9187. Springer, Cham. https://doi.org/10.1007/978-3-319-20898-5_27


DOI: https://doi.org/10.1007/978-3-319-20898-5_27


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-20897-8

Online ISBN: 978-3-319-20898-5

eBook Packages: Computer Science, Computer Science (R0)