Abstract

In recent years, finger vein authentication has gained increasing attention, since it has shown the potential to provide high accuracy as well as robustness to spoofing attacks. In this paper we present a new finger vein verification approach that does not need precise segmentation of regions of interest (ROIs), as it exploits a co-registration process between two vessel structures. We tested the verification performance on the MMCBNU_6000 finger vein dataset, showing that this approach outperforms state-of-the-art techniques in terms of Equal Error Rate.

1 Introduction

In the last decade, finger vein authentication has emerged as one of the most promising biometric technologies. Finger blood vessels are subcutaneous structures that provide a vein pattern which is unique and distinctive for each person, so it can be considered an effective biometric trait. Since blood vessels can be captured only in a living finger, the vein pattern shows two main desirable properties: (a) it is harder to copy or forge than other biometric traits such as fingerprint and face; (b) it is very stable, not being affected by environmental and skin conditions (humidity, skin disease, dirtiness, etc.). Many further aspects make the finger vein pattern a good candidate for a new generation of biometric systems. First of all, each individual has unique finger vein patterns that remain unchanged despite ageing. Just as with fingerprints, ten different vein patterns are associated with a person, one for each finger, so that if one finger is accidentally injured, other fingers can be used for personal authentication. Unlike fingerprints, however, finger vein patterns can be acquired without contact, which makes them preferable, given that the two traits are comparable in terms of distinctiveness. Moreover, small capturing devices are available on the market at a low price, which makes this biometric very convenient for a wide range of security applications (e.g. physical and remote access control, ATM login, etc.).

As for many other biometrics, there are still open issues affecting the authentication accuracy, which hinder a wide diffusion of this technology outside academic laboratories. Indeed, finger vein patterns are mainly affected by uneven illumination and finger posture changes, which produce irregular shading and deformation of the vein patterns in the acquired image. Research in this field is mainly devoted to coping with these two major problems by designing either ad hoc preprocessing algorithms or robust feature extraction/matching techniques. Generally, most of the approaches in the recent literature act on one of the four main stages of finger vein authentication, namely: (i) image acquisition, (ii) preprocessing, (iii) vein feature extraction and (iv) feature matching.

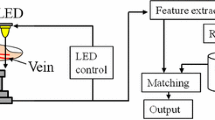

The capturing device projects infrared light onto the finger and scans the inner surface of the skin to read the vein pattern by means of an infrared camera. Many imaging sensors are available on the market, which may differ in both the quality and the resolution of the acquired finger image. In general, they provide an 8-bit grayscale finger image, whose resolution may range from 240 × 180 pixels to 640 × 480 pixels.

The image preprocessing stage can be further subdivided into three main steps: enhancement, normalization, and segmentation. Image enhancement techniques aim to suppress noisy pixels and to make the vein structure more prominent and easier to distinguish from the background. Problems related to uneven illumination and low local contrast are also addressed in this stage. Some image enhancement methods are based on multi-threshold combination [1], directional information [2], Gabor filters [3], the Curvelet transform [4], and restoration algorithms [5].

Image enhancement is generally followed by a normalization stage, which aims to detect a ROI containing most of the distinctive vein features. The detection of the ROI is implemented by means of edge operators [6,7,8], while taking into account the finger structure (knuckles and phalanxes) and some basic optical knowledge. After the ROI has been detected and separated from the rest of the finger image, it undergoes a segmentation stage, whose purpose is to extract the finger veins (foreground) from a complicated background. Finger vein extraction is a crucial point, as it is fundamental for vein pattern-based authentication methods. This is demonstrated by the large number of techniques that have been proposed in the literature to address this problem; line-tracking [9], threshold-based methods [10], mean curvature [11] and region growth-based features [12] are just some examples.

The last stage of the finger vein recognition pipeline is represented by feature extraction and classification. All the approaches related to this stage can be grouped into three main categories: (i) methods characterizing the geometric shape and topological structure of vein patterns [9, 13,14,15,16], (ii) approaches based on dimensionality reduction techniques and learning processes [17,18,19,20], (iii) methods exploiting local operators to generate histograms of short binary codes [21,22,23,24].

In this paper we propose a new technique for finger vein verification, namely partial matching of finger vein patterns based on point sets alignment and directional information (PM-FVP). This method introduces some novelties. First of all, the same directional information is used to both detect and match vessel structures. This directional information has previously been tested on retinal blood vessel segmentation [25], but it had not yet been applied to finger veins. We propose a new enhancing operator that increases local contrast by exploiting grey level information in the neighborhood of each pixel. We also introduce a vessel structure co-registration stage in the processing pipeline, thus avoiding the need to segment ROIs. We define a new local directional feature vector that outperforms existing ones such as Local Binary Pattern (LBP) [26] and Local Directional Code (LDC) [23], which can be considered as the state of the art. The proposed approach has been tested on finger vein images provided in the MMCBNU_6000 dataset [28], which includes both complete finger vein images and their associated ROIs. Experimental results show that PM-FVP outperforms other approaches in terms of verification accuracy.

2 The Proposed Approach

In this paper, we introduce a new finger vein verification technique (PM-FVP) that aims to solve the problem of partial matching between finger vein patterns without the need to detect specific ROIs. Our approach implements four main steps: (i) image enhancement, (ii) image segmentation, (iii) finger vein pattern co-registration, and (iv) feature extraction and matching. By exploiting a rigid co-registration process, this method is able to align partial finger vein patterns and to perform matching according to local directional information extracted from the blood vessel structure.

Image Enhancement.

Finger vein images are generally characterized by a very low contrast of blood vessels, which often jeopardizes the results obtained by image segmentation techniques. However, separating the vein structure (foreground) from the rest of the image (background) is a crucial point in finger vein authentication systems, since the better the extracted foreground, the higher the accuracy of the authentication process. In order to mitigate the effects of noise and uneven illumination, PM-FVP implements a preprocessing stage with the purpose of enhancing local contrast before applying the segmentation algorithm. The input images in MMCBNU_6000 have a resolution of 640 × 480 pixels. We resize the input image to a fixed resolution of 128 × 96 pixels, for reasons that are explained in the description of the segmentation stage. From now on, we will consider as input images the ones obtained after size reduction. Subsequently, PM-FVP applies a contrast enhancement filter. The enhancing filter is a pixel-wise local operator. For each pixel p of the image, it considers two local windows \( W_{1} \) and \( W_{2} \), both centered on p, but with different sizes \( n_{1} \times n_{1} \) and \( n_{2} \times n_{2} \), respectively (\( n_{1} \) and \( n_{2} \) depend on the image resolution; in our case they have been set to 3 and 11, respectively). The maximum grey value \( max_{1} \) (\( max_{2} \)) in \( W_{1} \) (\( W_{2} \)) is computed. The new grey value p′ assigned to p is computed according to the following equation:

Clearly \( max_{2} \ge max_{1} \) always holds, as \( W_{1} \) is included in \( W_{2} \), so that the exponent in (1) is always lower than one. Since the value of p′ may be larger than 255, all grey values of the filtered image are mapped to the range [0, 255] by means of the min-max rule:

\[ p'' = 255 \cdot \frac{p' - m_{1}}{m_{2} - m_{1}} \]

where \( m_{1} \) and \( m_{2} \) are the minimum and maximum grey values of the filtered image, respectively.
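As an illustration, the two window maxima and the min-max rule can be sketched in plain Python. This is only a minimal sketch: it deliberately leaves out the enhancement formula (1), and the function names `local_max` and `min_max_rescale`, as well as the clipping of windows at the image border, are assumptions made for the example.

```python
def local_max(img, r, c, size):
    """Maximum grey value in the size x size window centred on (r, c).

    Assumption: windows are clipped at the image border.
    """
    h = size // 2
    rows = range(max(0, r - h), min(len(img), r + h + 1))
    cols = range(max(0, c - h), min(len(img[0]), c + h + 1))
    return max(img[i][j] for i in rows for j in cols)


def min_max_rescale(img, lo=0, hi=255):
    """Map all grey values linearly so that the minimum goes to lo and the maximum to hi."""
    m1 = min(min(row) for row in img)
    m2 = max(max(row) for row in img)
    if m2 == m1:  # flat image: nothing to stretch
        return [[float(lo) for _ in row] for row in img]
    return [[lo + (v - m1) * (hi - lo) / (m2 - m1) for v in row] for row in img]


# Toy 5 x 5 image whose grey value grows with the pixel index.
toy = [[5 * i + j for j in range(5)] for i in range(5)]
max1 = local_max(toy, 2, 2, 3)    # small window, n1 = 3
max2 = local_max(toy, 2, 2, 11)   # large window, n2 = 11
rescaled = min_max_rescale([[50.0, 100.0], [200.0, 350.0]])
```

Because the small window is contained in the large one, `max1` can never exceed `max2`, which is the property used in the text.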

Image Segmentation.

The segmentation process exploits a technique presented in [25], where the directional information associated with each pixel of the image is used to separate the foreground from the background. It is based on the assumption that blood vessels are generally darker than the background, and that they are thin, elongated structures with different directions. PM-FVP builds a direction map DM, where each pixel p of the image is assigned a discrete direction \( d_{j} \) according to a directional mask centered on p. The number of different discrete directions \( d_{j} \) depends on the size of the directional mask. In particular, for a mask of n × n pixels, 2 × (n − 1) discrete directions are possible. In turn, the value of n should be selected by taking into account the thickness of the veins and of the background regions (valleys) separating them. If the mask is too small and is centered on the innermost pixels of a vein (valley), no significant grey-level changes can be detected along any direction, so that any direction may be assigned to those pixels. Conversely, if the mask is too large, it can include pixels of different veins, causing an incorrect direction assignment.

For MMCBNU_6000 images, a reasonable compromise between segmentation quality and computational cost is to reduce the image size to 128 × 96 pixels and to use a directional mask m of size n = 7, generating twelve different directions represented by twelve templates \( t_{j} \) (j = 1, …, 12). A graphical representation of all discrete directions generated by a 7 × 7 mask is given in Fig. 1.

Let p be a pixel of the image. For any direction \( d_{j} \) (j = 1, 2, …, 12), the neighboring pixels that fall into the template \( t_{j} \) centered on p are partitioned into two sets, \( S_{1} \) and \( S_{2} \). The set \( S_{1} \) consists of the 7 pixels aligned along the direction \( d_{j} \), while \( S_{2} \) is formed by the 42 remaining pixels. Let \( A_{d_{j}}(p) \) and \( NA_{d_{j}}(p) \) be the average grey values computed over \( S_{1} \) and \( S_{2} \), respectively. In the direction map DM, the pixel p is assigned the direction \( d_{j} \) for which the score \( s_{j}(p) = NA_{d_{j}}(p) - A_{d_{j}}(p) \) is maximized. In this way, a score map SM is built, where \( SM(p) = \max_{j} s_{j}(p) \). Starting from DM and SM, a preliminary segmentation is performed, which then undergoes some refinement steps to obtain the final foreground. Details of these steps can be found in [25]. Fig. 2 shows, from left to right, a running example with its enhancement and final segmentation.
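The direction assignment can be illustrated with a short plain-Python sketch. The exact twelve templates are those of Fig. 1 and are not reproduced here, so the sketch assumes that each direction is the discrete 7-pixel line through the mask centre joining two opposite border pixels, which yields 2 × (n − 1) = 12 lines for n = 7. The names `direction_templates` and `best_direction`, and the ordering of the directions, are hypothetical.

```python
def direction_templates(n=7):
    """Return 2*(n-1) discrete lines through the centre of an n x n mask.

    Assumption: each line joins two opposite border pixels; offsets are (dr, dc).
    """
    h = n // 2
    # One endpoint per direction: the whole top edge plus the interior of the right edge.
    endpoints = [(-h, dc) for dc in range(-h, h + 1)]
    endpoints += [(dr, h) for dr in range(-h + 1, h)]
    return [[(round(t * er / h), round(t * ec / h)) for t in range(-h, h + 1)]
            for er, ec in endpoints]


def best_direction(img, r, c, n=7):
    """Return (j, score) maximising s_j(p) = NA_dj(p) - A_dj(p) at the interior pixel p = (r, c)."""
    h = n // 2
    window = {(dr, dc): img[r + dr][c + dc]
              for dr in range(-h, h + 1) for dc in range(-h, h + 1)}
    total = sum(window.values())
    best = None
    for j, line in enumerate(direction_templates(n), start=1):
        s1 = sum(window[offset] for offset in line)       # grey values over S1
        a = s1 / len(line)                                # average over the n aligned pixels
        na = (total - s1) / (len(window) - len(line))     # average over the remaining pixels
        score = na - a
        if best is None or score > best[1]:
            best = (j, score)
    return best


# Toy 7 x 7 patch: bright background (200) crossed by a dark vertical vein (50).
patch = [[50 if col == 3 else 200 for col in range(7)] for _ in range(7)]
j, score = best_direction(patch, 3, 3)
```

On this toy patch the vertical line collects all the dark pixels, so it obtains the largest difference between the average of the non-aligned pixels and the average of the aligned ones.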

The Co-registration of Finger Vein Patterns.

We formulate the problem of co-registering two finger vein patterns as a point set registration process, whose aim is to assign correspondences between two sets of points and to derive a transformation mapping one point set onto the other. It is a challenging task due to many factors, such as the dimensionality of the point sets and the presence of noise and outliers. To ensure that features extracted from different vessel structures are correctly matched, finger vein patterns must first be aligned, so as to guarantee a proper correspondence among homologous regions. To this aim, PM-FVP implements a co-registration process in which the foreground pixels of the vessel structures extracted from two different finger vein images are considered as two different point sets. Since the dimensionality of the point sets heavily affects the computational cost of point set alignment methods, a skeletonization operation is applied to the foreground extracted from a finger vein image. The skeletons of the two vessel structures are then considered as two point sets \( P_{1} \) and \( P_{2} \), which are given as input to the co-registration algorithm.

Co-registration between point sets is performed by means of the Coherent Point Drift (CPD) algorithm presented in [27]. The CPD technique reformulates the point set registration problem as a probability density estimation. The first point set \( P_{1} \) represents the centroids of a Gaussian Mixture Model (GMM) that must be fitted to the second point set \( P_{2} \) by maximizing a likelihood. During the fitting process, the topological structure is preserved by forcing the centroids to move coherently as a group. CPD has been designed to cope with both rigid and non-rigid transformations, but in our specific case we only search for a rigid affine transform T mapping \( P_{1} \) onto \( P_{2} \). After the co-registration process, the directional features are extracted from both aligned vessel structures according to a local descriptor that is detailed in the next section. To show that our method is able to perform a partial matching, in Fig. 3 we provide the co-registration result (right) obtained by aligning the vessel structures extracted from the whole finger (left) and its ROI (middle).
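To make the EM structure of rigid CPD concrete, the following plain-Python sketch estimates a 2-D rotation and translation between two small point sets. It is not the implementation used in the experiments: it assumes unit scale, replaces the SVD step of the general algorithm with the closed-form rotation angle available in 2-D, and uses a hypothetical outlier weight `w = 0.1`. The function names `apply_rigid` and `cpd_rigid_2d` are also assumptions.

```python
import math


def apply_rigid(points, theta, tx, ty):
    """Rotate 2-D points by theta (radians) and translate them by (tx, ty)."""
    c, s = math.cos(theta), math.sin(theta)
    return [(c * x - s * y + tx, s * x + c * y + ty) for x, y in points]


def cpd_rigid_2d(moving, fixed, w=0.1, max_iter=200, tol=1e-10):
    """Estimate (theta, tx, ty) aligning `moving` (GMM centroids) with `fixed` (data)."""
    M, N, D = len(moving), len(fixed), 2
    theta, tx, ty = 0.0, 0.0, 0.0
    # Initial variance: mean squared distance over all point pairs.
    sigma2 = sum((fx - mx) ** 2 + (fy - my) ** 2
                 for fx, fy in fixed for mx, my in moving) / (D * M * N)
    for _ in range(max_iter):
        if sigma2 < tol:
            break
        moved = apply_rigid(moving, theta, tx, ty)
        # E-step: P[m][n] is the posterior that centroid m generated fixed point n.
        outlier = 2 * math.pi * sigma2 * (w / (1 - w)) * M / N
        P = [[math.exp(-((fx - mx) ** 2 + (fy - my) ** 2) / (2 * sigma2))
              for fx, fy in fixed] for mx, my in moved]
        for n in range(N):
            denom = max(sum(P[m][n] for m in range(M)) + outlier, 1e-300)
            for m in range(M):
                P[m][n] /= denom
        row = [sum(p) for p in P]                                  # weight of each centroid
        col = [sum(P[m][n] for m in range(M)) for n in range(N)]   # weight of each data point
        Np = sum(row)
        if Np < 1e-12:
            break
        # M-step: weighted centroids of both sets.
        fcx = sum(wn * x for wn, (x, _) in zip(col, fixed)) / Np
        fcy = sum(wn * y for wn, (_, y) in zip(col, fixed)) / Np
        mcx = sum(wm * x for wm, (x, _) in zip(row, moving)) / Np
        mcy = sum(wm * y for wm, (_, y) in zip(row, moving)) / Np
        # Closed-form 2-D rotation from the weighted cross-covariance A.
        a_sum = a_diff = 0.0
        for m, (yx, yy) in enumerate(moving):
            vx, vy = yx - mcx, yy - mcy
            for n, (xx, xy) in enumerate(fixed):
                ux, uy = xx - fcx, xy - fcy
                a_sum += P[m][n] * (ux * vx + uy * vy)     # A00 + A11
                a_diff += P[m][n] * (uy * vx - ux * vy)    # A10 - A01
        theta = math.atan2(a_diff, a_sum)
        c, s = math.cos(theta), math.sin(theta)
        tx = fcx - (c * mcx - s * mcy)
        ty = fcy - (s * mcx + c * mcy)
        # Variance update: posterior-weighted mean squared residual.
        moved = apply_rigid(moving, theta, tx, ty)
        sigma2 = sum(P[m][n] * ((fixed[n][0] - moved[m][0]) ** 2
                                + (fixed[n][1] - moved[m][1]) ** 2)
                     for m in range(M) for n in range(N)) / (Np * D)
    return theta, tx, ty
```

In this sketch the `moving` set plays the role of the GMM centroids and the `fixed` set the role of the data, mirroring the roles of the two skeleton point sets in the text. The outlier term is what lets some points remain unmatched, which is relevant when only part of one pattern is present in the other.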

Feature Extraction and Matching.

Most of the existing approaches designed for finger vein matching work on ROIs, which are extracted from finger vein images to guarantee that corresponding vessel structures properly overlap. In contrast, PM-FVP does not work on ROIs, but directly aligns vessel structures before extracting directional features. Before applying the feature extraction process, PM-FVP computes the overlapping region between the two aligned vessel structures and limits the next processing steps to this area only. The feature extraction process exploits the directional information that has been calculated to segment the vessels, and that is provided by the corresponding direction maps \( DM_{1} \) and \( DM_{2} \), and score maps \( SM_{1} \) and \( SM_{2} \). In other words, PM-FVP just crops \( DM_{1} \), \( DM_{2} \), \( SM_{1} \) and \( SM_{2} \) to obtain \( DMc_{1} \), \( DMc_{2} \), \( SMc_{1} \) and \( SMc_{2} \), corresponding to the overlapping region. PM-FVP partitions \( DMc_{k} \) and \( SMc_{k} \) (k = 1, 2) into non-overlapping sub-windows \( W_{i} \) with fixed dimensions r × r, where r is proportional to the dimension n of the directional mask m that has been adopted to compute the direction/score maps (in our case r = 16). For each sub-window \( W_{i} \), PM-FVP computes a 12-bin histogram H, where each bin \( b_{j} \) corresponds to a discrete direction \( d_{j} \), while the value of each bin is computed by averaging the scores SM(p) of all pixels p in \( W_{i} \) whose assigned direction is \( d_{j} \). The feature vector \( V_{k} \) is then built by concatenating all histograms into a global feature vector \( V_{k} = H_{1} \oplus H_{2} \oplus \dots \oplus H_{n} \). After the feature extraction stage, the two vessel structures are assigned the corresponding feature vectors \( V_{1} \) and \( V_{2} \), which have the same length. Thus, matching between \( V_{1} \) and \( V_{2} \) can be easily performed by applying a distance measure. In our experiments we tested the cosine distance.
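The histogram construction and the matching step can be sketched as follows in plain Python. The sketch assumes that direction indices run from 1 to 12, that partial sub-windows at the border are discarded, that empty bins are set to zero, and that the cosine distance is defined as one minus the cosine similarity; none of these details is specified above. The function names are hypothetical.

```python
import math


def directional_features(dm, sm, r=16, n_dirs=12):
    """Concatenate one n_dirs-bin histogram per non-overlapping r x r sub-window.

    dm[i][j] is a direction index in 1..n_dirs, sm[i][j] is the score of that pixel.
    Each bin holds the average score of the pixels assigned to that direction.
    """
    rows, cols = len(dm), len(dm[0])
    features = []
    for r0 in range(0, rows - r + 1, r):
        for c0 in range(0, cols - r + 1, r):
            sums = [0.0] * n_dirs
            counts = [0] * n_dirs
            for i in range(r0, r0 + r):
                for j in range(c0, c0 + r):
                    d = dm[i][j] - 1
                    sums[d] += sm[i][j]
                    counts[d] += 1
            # Assumption: a direction that never occurs in the window gets a zero bin.
            features += [s / k if k else 0.0 for s, k in zip(sums, counts)]
    return features


def cosine_distance(u, v):
    """One minus the cosine similarity of two equally long vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm_u = math.sqrt(sum(a * a for a in u))
    norm_v = math.sqrt(sum(b * b for b in v))
    if norm_u == 0 or norm_v == 0:
        return 1.0  # assumption: an all-zero vector matches nothing
    return 1.0 - dot / (norm_u * norm_v)


# Toy example: a single 2 x 2 sub-window with three possible directions.
toy_dm = [[1, 1], [2, 3]]
toy_sm = [[2.0, 4.0], [5.0, 7.0]]
toy_features = directional_features(toy_dm, toy_sm, r=2, n_dirs=3)
```

Since both cropped maps cover the same overlapping region, the two feature vectors produced this way have the same length and can be compared directly.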

3 Experiments and Results

All the experiments have been performed on the MMCBNU_6000 finger vein dataset [28], using MATLAB (R2010a) on an Intel Core2 Duo U7300 @ 1.30 GHz with 4 GB of RAM. The MMCBNU_6000 dataset consists of 6000 finger vein grayscale images captured from 100 persons. It also provides 6000 segmented ROIs with a resolution of 64 × 128 pixels. For the sake of simplicity we will indicate the set of images of whole fingers as A and the set of corresponding ROIs as B.

The experiments consist of two different tests: (a) evaluating the verification accuracy on set A (Experiment I), (b) computing the verification accuracy while comparing set B to set A (Experiment II). We measured the performance in terms of Equal Error Rate (EER), Feature Dimensionality (FD) and Processing Time (PT). In both experiments, five finger vein images from one subject have been considered as the gallery set, while the remaining five images have been used as the probe set. This led to 3000 genuine matches and 1797000 impostor matches. For Experiment I, the performance of PM-FVP has been compared with that of some of the most commonly used state-of-the-art techniques. Performance results are reported in Table 1 and show the effectiveness of the proposed method.
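The match counts and the EER can be checked with a small plain-Python sketch. The counts are consistent with 600 finger classes (6000 images at ten images per class), each of the 3000 probe images being scored once against every class; this protocol is an inference from the numbers and is not stated explicitly. The function `equal_error_rate` is a hypothetical helper that sweeps a threshold over distance scores and returns the error rate where false acceptance and false rejection are closest.

```python
def equal_error_rate(genuine, impostor):
    """Approximate EER for distance scores (lower means more similar).

    A comparison is accepted when its distance is <= threshold.
    """
    best_gap, eer = None, None
    for t in sorted(set(genuine) | set(impostor)):
        frr = sum(g > t for g in genuine) / len(genuine)      # genuine pairs rejected
        far = sum(i <= t for i in impostor) / len(impostor)   # impostor pairs accepted
        gap = abs(far - frr)
        if best_gap is None or gap < best_gap:
            best_gap, eer = gap, (far + frr) / 2
    return eer


# Match counts under the assumed protocol.
classes = 6000 // 10            # ten images per finger class
probes_per_class = 5            # the other five images form the gallery
genuine_matches = classes * probes_per_class
impostor_matches = genuine_matches * (classes - 1)

# Toy distance scores, for illustration only (not experimental data).
toy_eer = equal_error_rate([0.1, 0.2, 0.3, 0.6], [0.4, 0.7, 0.8, 0.9])
```

With the toy scores, a threshold of 0.4 rejects one genuine pair out of four and accepts one impostor pair out of four, so both error rates coincide at 0.25.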

4 Conclusion

Finger vein authentication is emerging as one of the most promising biometric technologies, as it overcomes many of the limitations of existing biometrics such as fingerprints, while offering comparable performance in terms of verification accuracy. In this paper we presented a new approach, namely PM-FVP, whose main contributions are: (i) designing a new local contrast enhancement filter, (ii) using new local directional features for both segmenting and matching finger vein patterns, (iii) co-registering vessel structures without needing precise segmentation of ROIs. Experimental results show that PM-FVP outperforms state-of-the-art techniques in terms of verification accuracy. In future work we aim to combine rigid and non-rigid transformations to further improve the quality of the co-registration results, as we expect that this will further increase the verification performance.

References

1. Yu, C.-B., Zhang, D.-M., Li, H.-B.: Finger vein image enhancement based on multi-threshold fuzzy algorithm. In: 2nd International Congress on Image and Signal Processing, pp. 1–3. IEEE Press (2009)

2. Park, Y.H., Park, K.R.: Image quality enhancement using the direction and thickness of vein lines for finger-vein recognition. Int. J. Adv. Robot. Syst. 9, 1–10 (2012)

3. Yang, J.F., Yang, J.L.: Multi-channel Gabor filter design for finger vein image enhancement. In: 5th International Conference on Image and Graphics, pp. 87–91. IEEE Press (2009)

4. Zhang, Z., Ma, S., Han, X.: Multiscale feature extraction of finger-vein patterns based on curvelets and local interconnection structure neural network. In: 18th International Conference on Pattern Recognition, pp. 145–148. IEEE Press (2006)

5. Yang, J., Shi, Y.: Towards finger-vein image restoration and enhancement for finger-vein recognition. Inf. Sci. 268, 33–52 (2014)

6. Yang, J., Li, X.: Efficient finger vein localization and recognition. In: 20th International Conference on Pattern Recognition, pp. 1148–1151. IEEE Press (2010)

7. Yang, J.F., Shi, Y.H.: Finger-vein ROI localization and vein ridge enhancement. Pattern Recogn. Lett. 33, 1569–1579 (2012)

8. Lu, Y., Xie, S.J., Yoon, S., Yang, J.C., Park, D.S.: Robust finger vein ROI localization based on flexible segmentation. Sensors 13(11), 14339–14366 (2013)

9. Miura, N., Nagasaka, A., Miyatake, T.: Feature extraction of finger-vein patterns based on repeated line tracking and its application to personal identification. Mach. Vis. Appl. 15(4), 194–203 (2004)

10. Bakhtiar, A.R., Chai, W.S., Shahrel, A.S.: Finger vein recognition using local line binary pattern. Sensors 11, 11357–11371 (2012)

11. Song, W., Kim, T., Kim, H.C., Choi, J.H., Kong, H.J., Lee, S.R.: A finger-vein verification system using mean curvature. Pattern Recogn. Lett. 32(11), 1541–1547 (2011)

12. Huafeng, Q., Lan, Q., Chengbo, Y.: Region growth-based feature extraction method for finger vein recognition. Opt. Eng. 50(2), 281–307 (2011)

13. Miura, N., Nagasaka, A., Miyatake, T.: Extraction of finger vein patterns using maximum curvature points in image profiles. IEICE Trans. Inf. Syst. E90D(8), 1185–1194 (2007)

14. Kumar, A., Zhou, Y.B.: Human identification using finger images. IEEE Trans. Image Process. 21(4), 2228–2244 (2012)

15. Qin, H.F., Yu, C.B., Qin, L.: Region growth-based feature extraction method for fingervein recognition. Opt. Eng. 50(5), 057208 (2011)

16. Liu, T., Xie, J.B., Yan, W., Li, P.Q., Lu, H.Z.: An algorithm for finger-vein segmentation based on modified repeated line tracking. Imaging Sci. J. 61(6), 491–502 (2013)

17. Wu, J.D., Liu, C.T.: Finger-vein pattern identification using principal component analysis and the neural network technique. Expert Syst. Appl. 38(5), 5423–5427 (2011)

18. Wu, J.D., Liu, C.T.: Finger-vein pattern identification using SVM and neural network technique. Expert Syst. Appl. 38(11), 14284–14289 (2011)

19. Yang, G.P., Xi, X.M., Yin, Y.L.: Finger vein recognition based on (2D)2 PCA and metric learning. J. Biomed. Biotechnol. 2012, 1–9 (2012)

20. Liu, Z., Yin, Y.L., Wang, H., Song, S., Li, Q.: Finger vein recognition with manifold learning. J. Netw. Comput. Appl. 33(3), 275–282 (2010)

21. Lee, E.C., Jung, H., Kim, D.: New finger biometric method using near infrared imaging. Sensors 11(3), 2319–2333 (2011)

22. Rosdi, B.A., Shing, C.W., Suandi, S.A.: Finger vein recognition using local line binary pattern. Sensors 11(12), 11357–11371 (2011)

23. Meng, X.J., Yang, G.P., Yin, Y.L., Xiao, R.Y.: Finger vein recognition based on local directional code. Sensors 12(11), 14937–14952 (2012)

24. Dai, Y.G., Huang, B.N., Li, W.X., Xu, Z.Q.: A method for capturing the finger-vein image using nonuniform intensity infrared light. In: Proceedings of Congress on Image and Signal Processing, pp. 501–505 (2008)

25. Frucci, M., Riccio, D., di Baja, G.S., Serino, L.: SEVERE: SEgmenting VEssels in REtina images. Pattern Recogn. Lett. 82, 162–169 (2016). doi:10.1016/j.patrec.2015.07.002

26. Lee, E.C., Lee, H.C., Park, K.R.: Finger vein recognition using minutia-based alignment and local binary pattern-based feature extraction. Int. J. Imaging Syst. Technol. 19(3), 179–186 (2009)

27. Myronenko, A., Song, X.: Point set registration: coherent point drift. IEEE Trans. Pattern Anal. Mach. Intell. 32(12), 2262–2275 (2010)

28. Lu, Y., Xie, S.J., Yoon, S., Wang, Z.H., Park, D.S.: An available database for the research of finger vein recognition. In: Proceedings of the 6th International Congress on Image and Signal Processing, pp. 410–415 (2013)

© 2017 Springer International Publishing AG

Cite this paper

Frucci, M., Riccio, D., Sanniti di Baja, G., Serino, L. (2017). Partial Matching of Finger Vein Patterns Based on Point Sets Alignment and Directional Information. In: Beltrán-Castañón, C., Nyström, I., Famili, F. (eds) Progress in Pattern Recognition, Image Analysis, Computer Vision, and Applications. CIARP 2016. Lecture Notes in Computer Science(), vol 10125. Springer, Cham. https://doi.org/10.1007/978-3-319-52277-7_3

DOI: https://doi.org/10.1007/978-3-319-52277-7_3

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-52276-0

Online ISBN: 978-3-319-52277-7

eBook Packages: Computer Science (R0)