Abstract

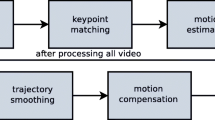

Video stabilization is a technique used to compensate for the user's hand shake. It prevents unintentional motion from being captured in a video sequence, where it would cause unpleasant effects for the final user. In this paper we present a very simple but effective low-power solution, suitable for cheap and small video cameras, which is robust to common difficult conditions such as noise perturbations, illumination changes, motion blur and rolling shutter distortions.

1 Introduction

Different image stabilization techniques, both block-based and feature-based, have been proposed in the literature. Most of these techniques are computationally expensive and thus not suitable for real-time applications. We work under the assumption of limited misalignments (i.e., a few pixels) between adjacent frames. Moreover, we consider only vertical and horizontal shifts, because rotation is less disturbing for optical systems with a wide field of view, and rotation compensation is computationally expensive.

The proposed technique is primarily inspired by the use of characteristic curves and aims to overcome some limitations that often occur in practical situations, especially in low-cost cameras. This is achieved through an accurate analysis of the characteristic curves that filters out deceptive information.

This paper is structured as follows: Sect. 2 reviews the prior art and Sect. 3 describes the proposed method. The experimental results are presented in Sect. 4, followed by conclusions and future work in Sect. 5.

2 Prior Art

Prior art digital video stabilization techniques can be grouped into two main categories: block-based and feature-based. Block-based techniques split the image into blocks, and the blocks of the current frame are compared with blocks of the previous frame to calculate the related motion. The matching between blocks of the current and the previous frame relies on a block matching criterion: the smaller the value of the criterion, the better the match between the blocks. Different matching metrics have been proposed to retrieve the motion vector of each block, such as Partial Distortion Elimination (PDE) [1], Mean Absolute Difference (MAD) [2] and the Universal Image Quality Index (UIQI) [3]. A compounding algorithm is then applied to determine the global motion vector. These techniques are usually robust but very slow, because the whole image must be processed block by block.
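To make the block-based approach concrete, here is a minimal full-search block matching sketch in Python with NumPy, using MAD as the matching criterion. The function names, block size, search range, sign convention and the component-wise median used as the compounding step are all hypothetical choices of this sketch; the prior-art methods cited above may differ in every one of them.

```python
import numpy as np


def block_motion_mad(prev, curr, top, left, size, search):
    """Full-search block matching with the Mean Absolute Difference (MAD).

    The block of `prev` at (top, left) is compared with every block of `curr`
    displaced by (dy, dx), |dy|, |dx| <= search; the displacement with the
    smallest MAD (best match) is returned as the block's motion vector.
    """
    prev = np.asarray(prev, dtype=np.float64)
    curr = np.asarray(curr, dtype=np.float64)
    block = prev[top:top + size, left:left + size]
    best, best_mad = (0, 0), np.inf
    for dy in range(-search, search + 1):
        for dx in range(-search, search + 1):
            y, x = top + dy, left + dx
            # Skip candidate blocks that fall outside the current frame
            if y < 0 or x < 0 or y + size > curr.shape[0] or x + size > curr.shape[1]:
                continue
            mad = np.mean(np.abs(block - curr[y:y + size, x:x + size]))
            if mad < best_mad:
                best, best_mad = (dy, dx), mad
    return best


def global_motion(prev, curr, size=16, search=4):
    """Hypothetical compounding step: component-wise median of all block vectors."""
    prev = np.asarray(prev, dtype=np.float64)
    curr = np.asarray(curr, dtype=np.float64)
    h, w = prev.shape
    vectors = [
        block_motion_mad(prev, curr, top, left, size, search)
        for top in range(search, h - size - search + 1, size)
        for left in range(search, w - size - search + 1, size)
    ]
    dy, dx = np.median(np.array(vectors), axis=0)
    return int(round(dy)), int(round(dx))
```

Even this small example evaluates (2·search+1)² candidate blocks per block, which illustrates why processing the whole frame block by block is slow.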

Feature-based techniques, on the contrary, retrieve the global motion vector directly through the analysis of particular features. Recent papers have mainly adopted Speeded Up Robust Features (SURF) [4], the Scale Invariant Feature Transform (SIFT) [5] and Kanade-Lucas-Tomasi (KLT) techniques [6]. Although, unlike block-based algorithms, they do not need to process the whole image, the accuracy of feature-based methods depends strictly on the feature point extraction step [7]. Moreover, even though these algorithms are robust enough, they are too expensive to be used in low-cost cameras. Their complexity is due not only to the expensive feature computation but especially to the subsequent feature matching.

Feature-based techniques usually perform an optical flow analysis [8,9,10]. The optical flow is a useful representation of the scene, consisting of the set of motion vectors calculated between the previous and the current frame. The main problem is to distinguish foreground motion vectors, caused by moving objects, which should not be considered for video stabilization, from background motion vectors, which must be used to determine the correct stabilization. Random Sample Consensus (RANSAC) [11] is usually used to solve this problem. Since RANSAC is a non-deterministic iterative method, the worst case could require too many iterations to converge, excessively slowing down the whole processing. Some optimizations of the RANSAC algorithm have been proposed in recent years [12], but they are not significant enough to drastically increase performance.
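The foreground/background separation described above can be sketched with RANSAC on a set of motion vectors. This is a minimal sketch assuming the simplest possible model, a pure global translation, so a single vector is a minimal sample; the function names, threshold and iteration count are hypothetical. The second helper computes the standard estimate of how many iterations are needed to draw at least one outlier-free sample with probability p, showing how the cost grows with the model's sample size.

```python
import math

import numpy as np


def ransac_translation(vectors, threshold=1.0, iterations=50, seed=0):
    """Separate background from foreground motion vectors with RANSAC.

    Assumed model: a pure global translation, so one vector is a minimal
    sample. Vectors closer than `threshold` pixels to the hypothesis are
    inliers (background); the estimate is the mean of the largest inlier set.
    """
    v = np.asarray(vectors, dtype=np.float64)
    rng = np.random.default_rng(seed)
    best = np.zeros(len(v), dtype=bool)
    for _ in range(iterations):
        hypothesis = v[rng.integers(len(v))]
        inliers = np.linalg.norm(v - hypothesis, axis=1) < threshold
        if inliers.sum() > best.sum():
            best = inliers
    return v[best].mean(axis=0), best


def iterations_needed(p, inlier_ratio, sample_size):
    """Iterations needed to draw at least one all-inlier sample with probability p."""
    return math.ceil(math.log(1 - p) / math.log(1 - inlier_ratio ** sample_size))
```

With half of the vectors on moving objects and 99% confidence, `iterations_needed(0.99, 0.5, 1)` gives 7 while `iterations_needed(0.99, 0.5, 4)` gives 72, so richer motion models quickly become expensive.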

Given the complexity of the aforementioned methods, we have chosen to start from a simpler technique, based on motion estimation through integral projection curves [13, 14]. The technique works as follows. For simplicity, let us assume we have two temporally adjacent gray-scale frames, where M and N are the horizontal and vertical dimensions and $p_{ij}$ is the pixel value at position (i, j). The characteristic curves along the horizontal and vertical dimensions are defined, respectively, as:

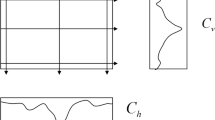
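A minimal NumPy sketch of the two characteristic curves follows. Since the defining equations are available here only as an image, it assumes the usual integral-projection form, i.e. column and row averages, $C_h(j) = \frac{1}{N}\sum_{i=1}^{N} p_{ij}$ and $C_v(i) = \frac{1}{M}\sum_{j=1}^{M} p_{ij}$, with i indexing rows and j indexing columns. The normalization factors are an assumption; they scale both frames' curves equally, so they do not change where the matching minimum falls. The function name is hypothetical.

```python
import numpy as np


def characteristic_curves(frame):
    """Return (C_h, C_v) for a gray-scale frame of shape (N, M) = (rows, columns).

    C_h[j] is the mean of column j (length M, horizontal curve);
    C_v[i] is the mean of row i (length N, vertical curve).
    """
    f = np.asarray(frame, dtype=np.float64)
    c_h = f.mean(axis=0)  # average over rows i -> one value per column j
    c_v = f.mean(axis=1)  # average over columns j -> one value per row i
    return c_h, c_v
```

A horizontal camera shift moves the content along j and therefore shifts $C_h$ along its axis, while $C_v$ stays largely unchanged; this is why each curve recovers one component of the displacement.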

The meaning of the curves can be easily understood by referring to Fig. 1, where two schematic characteristic curves are shown. Figure 2 shows the $C_h$ curves of two successive frames $F_1$ and $F_2$. A shift of the curves along the x-axis corresponds to an equal shift of the frames in the horizontal dimension (similarly, a shift of the $C_v$ curves corresponds to a vertical shift of the frames). In Fig. 2 the horizontal shift is particularly evident. Hence, to evaluate the motion between consecutive frames, the shifts $(\mathit{off}_h, \mathit{off}_v)$ along the two axes are computed as the displacements, within a search window of size $s$, that best match the $C_h$ curves and the $C_v$ curves of the two frames, respectively.

The term s is the search window size and represents the maximum retrievable displacement.
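One way to compute the shift between two characteristic curves is to test every displacement k in [−s, s] and keep the one with the smallest mean absolute difference over the overlapping samples. The sketch below assumes this cost (normalized by the overlap length, so that large shifts with short overlaps are not favored) and the sign convention stated in its docstring; the paper's exact cost function is not reproduced here, and the function name is hypothetical.

```python
import numpy as np


def match_offset(c1, c2, s):
    """Return the displacement k in [-s, s] that best aligns curve c2 with c1.

    Sign convention (assumed): if c2[j] == c1[j - d] for some d, the result is d.
    Cost (assumed): mean absolute difference over the overlapping samples.
    """
    c1 = np.asarray(c1, dtype=np.float64)
    c2 = np.asarray(c2, dtype=np.float64)
    n = len(c1)
    if len(c2) != n or not 0 <= s < n:
        raise ValueError("curves must have equal length and 0 <= s < len")
    best_k, best_cost = 0, np.inf
    for k in range(-s, s + 1):
        # Compare c1[j] with c2[j + k] for every j where both samples exist
        lo, hi = max(0, -k), min(n, n - k)
        cost = np.mean(np.abs(c1[lo:hi] - c2[lo + k:hi + k]))
        if cost < best_cost:
            best_k, best_cost = k, cost
    return best_k
```

Applied to the $C_h$ curves of two frames this yields $\mathit{off}_h$; applied to the $C_v$ curves it yields $\mathit{off}_v$. Each call costs about 2s+1 passes over a curve of length M or N, far less than block matching over the whole frame.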

The described method is low-cost and robust to noise, but it performs poorly in the presence of illumination changes and motion blur. The effect of illumination changes on the calculated characteristic curves is shown in the following example. Figure 3 shows the result of the ideal matching (−29), that is, one curve must be shifted by 29 pixels to match the other one. Note that the shapes of the curves are similar, but their values differ slightly because of the change in scene illumination. Due to these differences, the integral projection method does not find the correct match, as shown in Fig. 4. This effect is most evident near the edge (a strong edge is visible around sample 1600).

3 Proposed Filtering

The effect of the illumination change on the curve is essentially a shift (an offset of the curve values), as shown in Fig. 3. Removing this offset eliminates the matching problem. This can be done by removing the DC component of the integral projection signal, i.e., by pre-filtering the characteristic curve with a High Pass Filter (HPF), which also enhances the curve peaks. The HPF is obtained as follows.

A very simple Low Pass Filter (LPF) with 16 ones (i.e., [1 1 1 1 1 1 1 1 1 1 1 1 1 1 1 1]/16) yields a simple HPF by subtracting the filtered data from the original data, with good results and low extra cost. The filter response is shown in Fig. 5. In the proposed example, this simple filter yields the perfect matching (−29), as shown in Fig. 6.
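The HPF described above, i.e. the curve minus its 16-tap moving average, can be sketched with NumPy as follows. Replicating the edge samples (so the output keeps the length of the input) and centering the averaging window are assumptions of this sketch, and the function name is hypothetical. Because the moving average of a constant is that same constant, any offset added to the whole curve cancels exactly, which is the property that makes the matching insensitive to a global illumination shift.

```python
import numpy as np


def hpf_curve(c, taps=16):
    """High-pass a characteristic curve as c - LPF(c), with LPF = ones(taps)/taps.

    Edge samples are replicated (an assumption of this sketch), so the output
    has the same length as the input and a constant offset is removed exactly.
    """
    c = np.asarray(c, dtype=np.float64)
    kernel = np.ones(taps) / taps  # the 16-ones moving-average LPF
    left = taps // 2
    padded = np.pad(c, (left, taps - 1 - left), mode="edge")
    low = np.convolve(padded, kernel, mode="valid")  # same length as c
    return c - low
```

The cost is one running sum and one subtraction per sample, which is consistent with the "low extra cost" noted in the text.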

Although this filter works better than the prior art approach in the case of scene illumination changes, this is not the case when motion blur is present. To better understand this problem, consider an example. In Fig. 7 the unfiltered characteristic curves are plotted. The matching is not perfect (+11 instead of +13 pixels). Using the HPF described above, the matching is even worse (+7), as shown in Fig. 8. The mismatch is mainly due to the highest frequencies, which behave as noise. By removing the highest frequencies, i.e., by applying a Low Pass Filter, the problem can be reduced.

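Combining the two conditions, illumination changes (which call for removing the lowest frequencies) and motion blur (which calls for removing the highest ones), a Band Pass Filter (BPF) appears to be the solution, so a Butterworth IIR BPF has been chosen. The general form of the IIR filter transfer function is

$$H(z) = \frac{\sum_{l=0}^{L} b_l z^{-l}}{\sum_{m=0}^{K} a_m z^{-m}}, \qquad a_0 = 1,$$

where $L$ and $K$ are the orders of the numerator and of the denominator.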

The design problem consists of determining the coefficients $b_l$ and $a_m$ so that $H(z)$ satisfies the given specifications. A second-order IIR filter has been chosen as a good trade-off between implementation cost and results. The cutoff frequencies of the filter were set to w1 = 0.01 Hz and w2 = 0.20 Hz, values chosen after several simulations to improve the matching. The magnitude response of this filter is shown in Fig. 9. With this filter, a good matching is obtained under both illumination changes and motion blur, as shown in Fig. 10 (related to the example in Fig. 4) and Fig. 11 (related to the example in Fig. 7).
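A sketch of the band-pass design using SciPy's `scipy.signal.butter` follows. Two points are assumptions: the cutoffs w1 = 0.01 and w2 = 0.20 are interpreted as normalized frequencies (1.0 = Nyquist, SciPy's default when `fs` is not given), since the curves are indexed by pixel rather than by time; and the "second-order" filter is read as a first-order Butterworth prototype, which SciPy turns into a band-pass transfer function of order two. The function name `bpf_curve` is hypothetical, and the paper's actual coefficients may differ.

```python
import numpy as np
from scipy import signal

# Assumptions: normalized cutoffs (1.0 = Nyquist) and prototype order N = 1,
# which gives a band-pass H(z) with two poles and zeros at z = 1 and z = -1.
W1, W2 = 0.01, 0.20
b, a = signal.butter(1, [W1, W2], btype="bandpass")


def bpf_curve(c):
    """Causally filter a characteristic curve with the band-pass IIR filter."""
    return signal.lfilter(b, a, np.asarray(c, dtype=np.float64))


if __name__ == "__main__":
    w, h = signal.freqz(b, a, worN=4096)  # magnitude response, cf. Fig. 9
    print("b =", b)
    print("a =", a)
    print("peak gain:", np.abs(h).max())
```

The zero at z = 1 removes the DC component, as the HPF does, while the upper cutoff attenuates the highest frequencies that hurt the matching under motion blur.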

This IIR BPF is a very good solution, even though it requires several floating point multiplications. It should not be overlooked that IIR filters are often prone to coefficient quantization errors. The pole-zero plot of the IIR filter, shown in Fig. 12, reveals that one pole is quite close to the unit circle, so this IIR filter is susceptible to finite precision effects; hence any optimization of this filter should be carefully chosen and tested.
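The sensitivity to coefficient quantization can be checked numerically by rounding the denominator coefficients to a fixed-point grid and recomputing the poles. This sketch assumes a Butterworth band-pass with normalized cutoffs 0.01 and 0.20 and an order-1 prototype; the fixed-point formats (16, 8 and 4 fractional bits, plain rounding) and the helper names are hypothetical choices for illustration. Under these assumptions, with 4 fractional bits the denominator becomes z² − 1.5z + 0.5 = (z − 1)(z − 0.5), so the pole closest to the unit circle is pushed onto it, while 16 fractional bits leave the poles practically unchanged.

```python
import numpy as np
from scipy import signal


def quantize(coeffs, frac_bits):
    """Round coefficients to a fixed-point grid with `frac_bits` fractional bits."""
    step = 2.0 ** -frac_bits
    return np.round(np.asarray(coeffs, dtype=np.float64) / step) * step


def max_pole_radius(a):
    """Largest pole magnitude of a filter with denominator coefficients `a`."""
    return float(np.max(np.abs(np.roots(a))))


# Assumed design: Butterworth band-pass, normalized cutoffs, order-1 prototype.
b, a = signal.butter(1, [0.01, 0.20], btype="bandpass")

if __name__ == "__main__":
    print("unquantized:", max_pole_radius(a))
    for bits in (16, 8, 4):
        print(f"{bits:2d} fractional bits:", max_pole_radius(quantize(a, bits)))
```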

4 Experimental Results

About one hundred video sequences have been used to evaluate the quality of the results both objectively and subjectively. Tables 1, 2 and 3 show the results obtained with the prior art method (Classical IP) and with the proposed FIR (HPF) and IIR (BPF) methods, respectively. In these tables, for each video sequence, the minimum (MinX and MinY), the maximum (MaxX and MaxY) and the standard deviation (StdX and StdY) of the error between the real and the estimated motion vectors (in pixels) were computed for each axis (X and Y). The standard deviation of the error is reduced by about 11% with the FIR and by about 16% with the IIR. In particular, the largest improvement of the IIR over the prior art method and the FIR is obtained where these methods are weakest, namely in the presence of overall motion blur (video 3) and of rolling shutter artifacts (video 6).
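The per-axis statistics used in the tables and the relative reduction of the standard deviation can be computed as below. Defining the error as estimated minus real motion and using the population standard deviation (NumPy's default `ddof=0`) are assumptions of this sketch; the function names are hypothetical, and any arrays passed to them in an example are illustrative, not the paper's data.

```python
import numpy as np


def error_stats(real, estimated):
    """Per-axis Min, Max and Std of (estimated - real) motion vectors, in pixels.

    `real` and `estimated` have shape (frames, 2), columns ordered as (x, y).
    """
    err = np.asarray(estimated, dtype=np.float64) - np.asarray(real, dtype=np.float64)
    return {
        "MinX": err[:, 0].min(), "MaxX": err[:, 0].max(), "StdX": err[:, 0].std(),
        "MinY": err[:, 1].min(), "MaxY": err[:, 1].max(), "StdY": err[:, 1].std(),
    }


def std_reduction_percent(std_reference, std_proposed):
    """Relative reduction of the error standard deviation, in percent."""
    return 100.0 * (std_reference - std_proposed) / std_reference
```

For example, `std_reduction_percent(2.0, 1.68)` returns approximately 16, i.e. a reduction of about 16% means the proposed standard deviation is about 0.84 times the reference one.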

5 Conclusion and Future Work

A very low-cost algorithm for video stabilization, suitable for real-time processing, has been developed. Compared with state-of-the-art algorithms of similar complexity, it achieves significant improvements both subjectively and objectively, reaching an improvement of about 16% in the standard deviation of the error. Moreover, besides being robust to noise like the prior art methods, it is also robust to illumination changes, motion blur and rolling shutter distortions. Future work will investigate a better BPF approximating the chosen IIR filter, so as to work fully in finite precision, and the extension of the proposed technique to roto-translation.

References

[1] Jagtap, A.P., Baviskar, P.V.: Review of block based video stabilization. Int. Adv. Res. J. Sci. Eng. Technol. (2015)

[2] Bhujbal, D., Pawar, B.V.: Review of video stabilization techniques using block based motion vectors. Int. Adv. Res. J. Sci. Eng. Technol. 3(3) (2016)

[3] Kovačević, V., Pantic, Z., Beric, A., Jakovljevic, R.: Block-matching correlation motion estimation for frame-rate up-conversion. J. Sign. Process. Syst. 84(2), 283–292 (2016)

[4] Salunkhe, A., Jagtap, S.: Robust feature-based digital video stabilization. Int. J. Adv. Res. Electron. Commun. Eng. (IJARECE) (2015)

[5] Patel, M., Parmar, N., Nilesh, M.: Comparative analysis on feature descriptor algorithms to aid video stabilization and smooth boundary reconstruction using in-painting and interpolation. Int. J. Comput. Appl. 140(4) (2016)

[6] Spampinato, G., Bruna, A., Guarneri, I., Tomaselli, V.: Advanced feature based digital video stabilization. In: 6th International Conference on Consumer Electronics, ICCE Berlin (2016)

[7] Rawat, P., Singhai, J.: Review of motion estimation and video stabilization techniques for hand held mobile video. Sign. Image Process. Int. J. (SIPIJ) 2(2) (2011)

[8] Liu, S., Yuan, L., Tan, P., Sun, J.: SteadyFlow: spatially smooth optical flow for video stabilization. In: IEEE Conference on Computer Vision and Pattern Recognition (CVPR) (2014)

[9] Goldstein, A., Fattal, R.: Video stabilization using epipolar geometry. ACM Trans. Graph. 32(5), 126 (2012)

[10] Wang, Y.S., Liu, F., Hsu, P.S., Lee, T.Y.: Spatially and temporally optimized video stabilization. IEEE Trans. Vis. Comput. Graph. 19(8), 1354–1361 (2013)

[11] Fischler, M.A., Bolles, R.C.: Random sample consensus: a paradigm for model fitting with applications to image analysis and automated cartography. Commun. ACM 24(6), 381–395 (1981)

[12] Lebeda, K., Matas, J., Chum, O.: Fixing the locally optimized RANSAC. In: British Machine Vision Conference, Guildford, United Kingdom (2012)

[13] Kim, M., Kim, E., Shim, D., Jang, S., Kim, G., Kim, W.: An efficient global motion characterization method for image processing applications. IEEE Trans. Consum. Electron. 43(4), 1010–1018 (1997)

[14] Koo, Y., Kim, W.: An image resolution enhancing technique using adaptive sub-pixel interpolation for digital still camera system. IEEE Trans. Consum. Electron. 45(1), 118–123 (2005)

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Spampinato, G., Bruna, A., Naccari, F., Tomaselli, V. (2017). Adaptive Low Cost Algorithm for Video Stabilization. In: Battiato, S., Gallo, G., Schettini, R., Stanco, F. (eds) Image Analysis and Processing - ICIAP 2017. ICIAP 2017. Lecture Notes in Computer Science, vol 10485. Springer, Cham. https://doi.org/10.1007/978-3-319-68548-9_34

DOI: https://doi.org/10.1007/978-3-319-68548-9_34

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-68547-2

Online ISBN: 978-3-319-68548-9

eBook Packages: Computer Science, Computer Science (R0)