Abstract

This article proposes a microstructure model for stock prices in which parameters are modulated by a Markov chain determining the market behaviour. In this approach, called the switching microstructure model (SMM), the stock price is the result of the balance between the supply and the demand for shares. The arrivals of bid and ask orders are represented by two mutually- and self-excited processes. The intensities of these processes converge to a mean reversion level that depends upon the regime of the Markov chain. The first part of this work studies the mathematical properties of the SMM. The second part focuses on the econometric estimation of parameters. For this purpose, we combine a particle filter with a Markov chain Monte Carlo algorithm. Finally, we calibrate the SMM with two and three regimes to daily returns of the S&P 500 and compare them with a non-switching model.

Notes

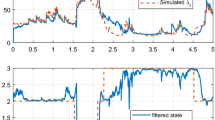

Chosen parameters are in the same range of values as the real estimates reported in Sect. 4.2. To visualize regime changes clearly, the gap between the mean reversion levels of the regimes is increased. For the same reason, we have also modified the transition probabilities so that a sufficient number of regime changes is observed during the simulation.
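To illustrate the kind of simulation this note refers to, here is a minimal Python sketch of two mutually and self-excited intensities whose mean reversion levels switch with a two-state Markov chain. Everything numerical in it is a hypothetical choice made for illustration: the Euler time step, the parameter values, the exponentially distributed order sizes and the function name `simulate_smm_intensities` are assumptions, not the settings or the scheme used by the authors.

```python
import random

def simulate_smm_intensities(n_steps=2000, dt=0.01, seed=0):
    """Euler-type sketch of two regime-switching, mutually exciting intensities."""
    rng = random.Random(seed)
    kappa = (2.0, 2.5)                     # speeds of mean reversion (hypothetical)
    levels = ((1.0, 1.2), (4.0, 4.5))      # levels[regime][i], gap deliberately wide
    delta = ((0.5, 0.3), (0.2, 0.6))       # delta[i][j]: impact of orders j on intensity i
    mean_size = (1.0, 1.0)                 # mean order sizes (hypothetical)
    switch_rate = (0.5, 1.0)               # rate of leaving regime 0 and regime 1
    regime, lam = 0, [1.0, 1.2]
    path = []
    for _ in range(n_steps):
        # Regime change with probability (rate * dt) over one time step.
        if rng.random() < switch_rate[regime] * dt:
            regime = 1 - regime
        # An order of type j arrives with probability lam[j] * dt; its size is exponential.
        jumps = [
            rng.expovariate(1.0 / mean_size[j])
            if rng.random() < min(1.0, lam[j] * dt) else 0.0
            for j in range(2)
        ]
        # Mean reversion toward the current regime level plus excitation by new orders.
        lam = [
            lam[i] + kappa[i] * (levels[regime][i] - lam[i]) * dt
            + delta[i][0] * jumps[0] + delta[i][1] * jumps[1]
            for i in range(2)
        ]
        path.append((regime, lam[0], lam[1]))
    return path
```

Calling `simulate_smm_intensities(seed=1)` returns a list of `(regime, lambda_1, lambda_2)` tuples. With `kappa[i] * dt < 1` and positive levels, the discretized intensities stay positive, which mirrors the positivity property of the continuous-time model.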

References

Ait-Sahalia, Y., Cacho-Diaz, J., Laeven, R.J.A.: Modeling financial contagion using mutually exciting jump processes. J. Financ. Econ. 117(3), 586–606 (2015)

Al-Anaswah, N., Wilfling, B.: Identification of speculative bubbles using state-space models with Markov-switching. J. Bank. Finance 35(5), 1073–1086 (2011)

Bacry, E., Delattre, S., Hoffmann, M., Muzy, J.F.: Modelling microstructure noise with mutually exciting point processes. Quant. Finance 13(1), 65–77 (2013)

Bacry, E., Delattre, S., Hoffmann, M., Muzy, J.F.: Scaling limits for Hawkes processes and application to financial statistics. Stoch. Process. Appl. 123(7), 2475–2499 (2013)

Bacry, E., Muzy, J.F.: Hawkes model for price and trades high-frequency dynamics. Quant. Finance 14(7), 1147–1166 (2014)

Bacry, E., Mastromatteo, I., Muzy, J.F.: Hawkes processes in finance. Mark. Microstruct. Liq. 1(1), 1–59 (2015)

Bacry, E., Muzy, J.F.: Second order statistics characterization of Hawkes processes and non-parametric estimation. IEEE Trans. Inf. Theory 62(4), 2184–2202 (2016)

Bormetti, G., Calcagnile, L.M., Treccani, M., Corsi, F., Marmi, S., Lillo, F.: Modelling systemic price cojumps with Hawkes factor models. Quant. Finance 15(7), 1137–1156 (2015)

Bouchaud, J.P.: Price impact. In: Cont, R. (ed.) Encyclopedia of Quantitative Finance. Wiley, Hoboken (2010)

Bouchaud, J.P., Farmer, J.D., Lillo, F.: How markets slowly digest changes in supply and demand. In: Hens, T., Schenk-Hoppé, K.R. (eds.) Handbook of Financial Markets. Elsevier, New York (2009)

Bowsher, C.G.: Modelling security markets in continuous time: intensity based, multivariate point process models. Economics Discussion Paper No. 2002-W22, Nuffield College, Oxford (2002)

Branger, N., Kraft, H., Meinerding, C.: Partial information about contagion risk, self-exciting processes and portfolio optimization. J. Econ. Dyn. Control 39, 18–36 (2014)

Chavez-Demoulin, V., McGill, J.A.: High-frequency financial data modeling using Hawkes processes. J. Bank. Finance 36, 3415–3426 (2012)

Cont, R., Kukanov, A., Stoikov, S.: The price impact of order book events. J. Financ. Econ. 12(1), 47–88 (2013)

Da Fonseca, J., Zaatour, R.: Hawkes process: fast calibration, application to trade clustering, and diffusive limit. J. Futures Mark. 34(6), 548–579 (2014)

Doucet, A., Godsill, S., Andrieu, C.: On sequential Monte Carlo sampling methods for Bayesian filtering. Stat. Comput. 10, 197–208 (2000)

Errais, E., Giesecke, K., Goldberg, L.: Affine point processes and portfolio credit risk. SIAM J. Financ. Math. 1, 642–665 (2010)

Filimonov, V., Sornette, D.: Apparent criticality and calibration issues in the Hawkes self-excited point process model: application to high-frequency financial data. Quant. Finance 15(8), 1293–1314 (2015)

Gatumel, M., Ielpo, F.: The number of regimes across asset returns: identification and economic value. Int. J. Theor. Appl. Finance 17(06), 25 (2014)

Guidolin, M., Timmermann, A.: Economic implications of bull and bear regimes in UK stock and bond returns. Econ. J. 115, 11–143 (2005)

Guidolin, M., Timmermann, A.: International asset allocation under regime switching, skew, and kurtosis preferences. Rev. Financ. Stud. 21(2), 889–935 (2008)

Hainaut, D.: A model for interest rates with clustering effects. Quant. Finance 16(8), 1203–1218 (2016)

Hainaut, D.: A bivariate Hawkes process for interest rate modeling. Econ. Model. 57, 180–196 (2016)

Hainaut, D.: Clustered Lévy processes and their financial applications. J. Comput. Appl. Math. 319, 117–140 (2017)

Hainaut, D., MacGilchrist, R.: Strategic asset allocation with switching dependence. Ann. Finance 8(1), 75–96 (2012)

Hardiman, S.J., Bouchaud, J.P.: Branching ratio approximation for the self-exciting Hawkes process. Phys. Rev. E 90(6), 628071–628076 (2014)

Hautsch, N.: Modelling Irregularly Spaced Financial Data. Springer, Berlin (2004)

Hawkes, A.: Point spectra of some mutually exciting point processes. J. R. Stat. Soc. Ser. B 33, 438–443 (1971)

Hawkes, A.: Spectra of some self-exciting and mutually exciting point processes. Biometrika 58, 83–90 (1971)

Hawkes, A., Oakes, D.: A cluster representation of a self-exciting process. J. Appl. Probab. 11, 493–503 (1974)

Horst, U., Paulsen, M.: A law of large numbers for limit order books. Math. Oper. Res. (2017). https://doi.org/10.1287/moor.2017.0848

Jaisson, T., Rosenbaum, M.: Limit theorems for nearly unstable Hawkes processes. Ann. Appl. Probab. 25(2), 600–631 (2015)

Kelly, F., Yudovina, E.: A Markov model of a limit order book: thresholds, recurrence, and trading strategies. Math. Oper. Res. (2017). https://doi.org/10.1287/moor.2017.0857

Kyle, A.S.: Continuous auction and insider trading. Econometrica 53, 1315–1335 (1985)

Large, J.: Measuring the resiliency of an electronic limit order book. Working Paper, All Souls College, University of Oxford (2005)

Lee, K., Seo, B.K.: Modeling microstructure price dynamics with symmetric Hawkes and diffusion model using ultra-high-frequency stock data. J. Econ. Dyn. Control 79, 154–183 (2017)

Protter, P.E.: Stochastic Integration and Differential Equations. Springer, Berlin (2004)

Wang, T., Bebbington, M., Harte, D.: Markov-modulated Hawkes process with stepwise decay. Ann. Inst. Stat. Math. 64, 521–544 (2012)

Acknowledgements

We thank the Chair “Data Analytics and Models for insurance” of BNP Paribas Cardif, hosted by ISFA (Université Claude Bernard, Lyon, France), for its support. We also thank the two anonymous referees and the editor, Ulrich Horst, for their recommendations.

Appendix

Proof of Lemma 2.1

To prove this relation, we differentiate the expression of \(\lambda _{t}^{i}\) to retrieve its dynamics:

To prove positivity, we first recall that \(\int _{0}^{t}\delta _{i,1}e^{\kappa _{i}(s-t)}dL_{s}^{1}\) and \(\int _{0}^{t}\delta _{i,2}e^{\kappa _{i}(s-t)}dL_{s}^{2}\) are positive by construction. According to Eq. (12), the process \(\lambda _{t}^{i}\) admits the following lower bound:

Given that \(\kappa _{i}\int _{0}^{t}e^{\kappa _{i}(s-t)}ds=\left( 1-e^{-\kappa _{i}t}\right) >0\), we conclude that

\(\square \)
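The displayed formulas of this proof are not reproduced here. As a hedged sketch only, assuming that the intensity has the mean-reverting form suggested by the two integrals quoted in the proof, with \(c_{i,s}\) the regime-dependent mean reversion level and with \(\lambda _{0}^{i}>0\) and \(c_{i,j}>0\) (assumptions made for this sketch, not statements copied from Eq. (12)), the argument can be summarized as:

```latex
% Assumed integrated form of the intensity:
% mean reversion plus exponentially damped order arrivals
\lambda_t^i = \lambda_0^i e^{-\kappa_i t}
  + \kappa_i \int_0^t c_{i,s}\, e^{\kappa_i (s-t)}\, ds
  + \int_0^t \delta_{i,1} e^{\kappa_i (s-t)}\, dL_s^1
  + \int_0^t \delta_{i,2} e^{\kappa_i (s-t)}\, dL_s^2 .

% Dropping the two positive integrals and bounding c_{i,s} by its smallest level,
% then using \kappa_i \int_0^t e^{\kappa_i (s-t)} ds = 1 - e^{-\kappa_i t}:
\lambda_t^i \ge \lambda_0^i e^{-\kappa_i t}
  + \Big( \min_{j=1,\dots,l} c_{i,j} \Big) \left( 1 - e^{-\kappa_i t} \right) > 0 .
```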

Proof of Proposition 3.1

As \(\mathcal {F}_{s}\subset \mathcal {F}_{s}\vee \mathcal {G}_{t}\), using nested expectations leads to the following expression for the expected intensity:

Recalling the expression (13) of the intensity, Fubini’s theorem leads to the following expression for the expectation of \(\lambda _{t}^{i}\), conditionally on the augmented filtration \(\mathcal {F}_{s}\vee \mathcal {G}_{t}\):

Using the same approach as in Errais et al. [17], \(dL_{u}^{i}\) is rewritten as follows:

The order size \(O_{i}\) being independent of all processes, and hence of \(\mathcal {F}_{s}\vee \mathcal {G}_{t}\), we infer that

Using nested expectations and conditioning with respect to the sample path of \(\lambda _{u}^{1}\) contained in the subfiltration \(\mathcal {H}_{u}\) of \(\mathcal {F}_{u}\) leads to the following equality for \(u\le t\):

Conditionally to the sample path of \(\lambda _{u}^{1}\), \(N_{u}^{1}\) is a non-homogeneous Poisson process,

therefore, we infer that

If we differentiate Eq. (47) with respect to time, we find that \(\mathbb {E}\left( \lambda _{t}^{i}|\mathcal {F}_{s}\vee \mathcal {G}_{t}\right) \) is the solution of an ordinary differential equation (ODE):

Using Eq. (47) allows us to rewrite these ODEs as follows:

Solving this system of equations requires determining the eigenvalues \(\gamma \) and eigenvectors \((v_{1},v_{2})\) of the matrix on the right-hand side of this system:

We know that the eigenvalues are the values for which the determinant of the following matrix vanishes:

and are solutions of the second order equation:

The roots of the last equation are \(\gamma _{1}\) and \(\gamma _{2}\), as defined by Eq. (14). One way to find an eigenvector is to note that it must be orthogonal to each row of the matrix:

then necessarily,

If we denote \(D:=\mathrm {diag}(\gamma _{1},\gamma _{2})\), the matrix on the right-hand side of Eq. (48) admits the decomposition:

where V is the matrix of eigenvectors, as defined in Eq. (16). Its determinant, \(\Upsilon \), and its inverse are respectively provided by Eqs. (18) and (17). If two new variables are defined as follows:

then the system (48) is decoupled into two independent ODEs:

Introducing the following notation

leads to the solutions for the system (49):

which allows us to infer the expression (15) for the moments of \(\lambda _{t}^{i}\). Notice that the determinant \(\Upsilon \) is always real if the mutual excitation parameters \(\delta _{1,2},\,\delta _{2,1}\) are positive. As \(\mu _{1},\mu _{2}>0\), the determinant is then also strictly positive and the matrix V is invertible. Finally, Eq. (15) states that, conditionally on the sample path of the Markov chain \(\theta _{t}\), the processes \(\lambda _{t}^{1}\) and \(\lambda _{t}^{2}\) are Markov, given that their \(\mathcal {F}_{s}\vee \mathcal {G}_{t}\)-expectations only depend on the pair \(\left( \lambda _{t}^{1},\lambda _{t}^{2}\right) \). \(\square \)
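As an illustration of the decoupling argument, here is a minimal Python sketch for a single regime, that is, with constant mean reversion levels. It assumes that the matrix of the system has entries \(\delta _{i,j}\mu _{j}\) off the diagonal and \(\delta _{i,i}\mu _{i}-\kappa _{i}\) on the diagonal, which is consistent with the quantities named in this proof but is not copied from Eq. (48). The function names and all numerical values are hypothetical.

```python
import math

def eigen_decomposition(a, b, c, d):
    """Eigenvalues and eigenvector matrix V of M = [[a, b], [c, d]], assuming b*c > 0."""
    root = math.sqrt((a - d) ** 2 + 4.0 * b * c)   # discriminant > 0, so real roots
    g1, g2 = 0.5 * (a + d + root), 0.5 * (a + d - root)
    # Each column (b, g - a) is orthogonal to both rows of M - g*I.
    V = [[b, b], [g1 - a, g2 - a]]
    return (g1, g2), V

def expected_intensities(t, lam0, kappa, c, delta, mu):
    """E[lambda_t] in one regime, from the decoupled ODEs U' = D U + V^{-1} k."""
    a = delta[0][0] * mu[0] - kappa[0]
    b = delta[0][1] * mu[1]
    cc = delta[1][0] * mu[0]
    d = delta[1][1] * mu[1] - kappa[1]
    (g1, g2), V = eigen_decomposition(a, b, cc, d)
    det = V[0][0] * V[1][1] - V[0][1] * V[1][0]
    Vinv = [[V[1][1] / det, -V[0][1] / det], [-V[1][0] / det, V[0][0] / det]]
    k = (kappa[0] * c[0], kappa[1] * c[1])         # constant forcing term
    u0 = [Vinv[j][0] * lam0[0] + Vinv[j][1] * lam0[1] for j in range(2)]
    w = [Vinv[j][0] * k[0] + Vinv[j][1] * k[1] for j in range(2)]
    # Each decoupled ODE u' = g*u + w has solution e^{g t} u0 + w (e^{g t} - 1) / g (g != 0).
    u = [
        math.exp(g * t) * u0[j] + w[j] * (math.exp(g * t) - 1.0) / g
        for j, g in enumerate((g1, g2))
    ]
    return [V[i][0] * u[0] + V[i][1] * u[1] for i in range(2)]
```

For instance, `expected_intensities(1.0, (1.5, 0.8), (2.0, 2.5), (1.0, 1.2), ((0.5, 0.3), (0.2, 0.6)), (1.0, 1.0))` returns the pair of expected intensities after one unit of time. The sketch requires both eigenvalues to be non-zero, and they are negative for these values, so the expected intensities converge to a finite limit.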

Proof of Proposition 3.2

From the previous proposition, we infer that the unconditional expectations of OAI are the solutions of the following system

Given that \(\theta _{t}\) is a finite state Markov chain with generator \(Q_{0}\), and recalling that \(c_{i}=\left( \begin{array}{c} c_{i,1}\\ \vdots \\ c_{i,l} \end{array}\right) \) for \(i=1,2\) are l-vectors, the expected level of mean reversion at time u is equal to:

The expectation of the intensities, conditionally on \(\mathcal {F}_{s}\), is then:

If we replace \(V^{-1}\) by its definition (17), we obtain that

The integrand in Eq. (51) then becomes:

and we can conclude by direct integration that the expected values of \(\lambda _{t}^{i}\) are given by Eq. (19). This result also states that the processes \(\lambda _{t}^{1}\) and \(\lambda _{t}^{2}\) are Markov, given that their \(\mathcal {F}_{s}\)-expectations only depend on the information available at time s: \(\left( \lambda _{s}^{1},\lambda _{s}^{2},\theta _{s}^{1},\theta _{s}^{2}\right) \). \(\square \)
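The expected level of mean reversion used in this proof can be sketched numerically. The Python code below assumes the usual convention in which each row of the generator sums to zero, so that the transition matrix over a horizon \(u-s\) is the matrix exponential of the generator times \(u-s\), and the expected level is the corresponding row of that matrix applied to the vector of levels. The helper names (`mat_mul`, `mat_exp`, `expected_level`) and the numerical values are hypothetical, and the matrix exponential is a plain scaling-and-squaring Taylor approximation chosen to keep the example dependency-free.

```python
def mat_mul(A, B):
    """Product of two square matrices stored as lists of rows."""
    n = len(A)
    return [[sum(A[i][k] * B[k][j] for k in range(n)) for j in range(n)] for i in range(n)]

def mat_exp(A, squarings=10, terms=12):
    """Matrix exponential by scaling and squaring with a truncated Taylor series."""
    n = len(A)
    scale = 2.0 ** squarings
    small = [[a / scale for a in row] for row in A]
    result = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
    term = [row[:] for row in result]
    for k in range(1, terms + 1):
        # term holds small^k / k! after this update.
        term = [[x / k for x in row] for row in mat_mul(term, small)]
        result = [[result[i][j] + term[i][j] for j in range(n)] for i in range(n)]
    for _ in range(squarings):
        result = mat_mul(result, result)
    return result

def expected_level(regime, Q, levels, horizon):
    """E[c_u | regime at time s], with horizon = u - s and Q a row-sum-zero generator."""
    P = mat_exp([[q * horizon for q in row] for row in Q])
    return sum(P[regime][j] * levels[j] for j in range(len(levels)))
```

With a two-state generator such as `Q = [[-1.0, 1.0], [3.0, -3.0]]` and levels `[1.0, 2.0]`, `expected_level(0, Q, [1.0, 2.0], 0.5)` interpolates between the current level and the stationary average of the two levels as the horizon grows.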

Proof of Corollary 3.3

To prove this statement, it is sufficient to show that the conditional expectation of these processes with respect to \(\mathcal {F}_{s}\) depends exclusively upon the information available at time s. Using the tower property of conditional expectation, the expected number of supply orders conditionally on \(\mathcal {F}_{s}\) is then equal to the following expression:

By construction, the compensator of the process \(N_{t}^{1}\) is an \(\mathcal {H}_{t}\)-adapted process \(\int _{0}^{t}\lambda _{u}^{1}du\) such that the compensated process \(M_{t}^{1}=N_{t}^{1}-\int _{0}^{t}\lambda _{u}^{1}du\) is a martingale. Given that \(\mathbb {E}\left( M_{t}^{1}|\mathcal {F}_{s}\vee \mathcal {H}_{t}\right) =M_{s}^{1}\), we deduce that \(\mathbb {E}\left( N_{t}^{1}|\mathcal {F}_{s}\vee \mathcal {H}_{t}\right) =N_{s}^{1}+\int _{s}^{t}\lambda _{u}^{1}du\). Using Fubini’s theorem, we infer that

According to Proposition 3.2, \(\mathbb {E}\left( \lambda _{u}^{1}|\mathcal {F}_{s}\right) \) depends only upon \(\lambda _{s}^{1}\), \(\lambda _{s}^{2}\) and \(\theta _{s}\). From Eq. (52), we immediately deduce that \(\mathbb {E}\left( N_{t}^{1}|\mathcal {F}_{s}\right) \) is exclusively a function of (\(\lambda _{s}^{1}\), \(\lambda _{s}^{2}\), \(\theta _{s}\), \(N_{s}^{1}\)). The same holds for \(N_{t}^{2}\). By definition, \(L_{t}^{1}\) is a sum of independent random variables:

As \(\mathbb {E}\left( N_{t}^{1}|\mathcal {F}_{s}\right) \) is a function of (\(\lambda _{s}^{1}\), \(\lambda _{s}^{2}\), \(\theta _{s}\), \(N_{s}^{1}\)), the same conclusion holds for \(\mathbb {E}\left( L_{t}^{1}|\mathcal {F}_{s}\right) \). A similar reasoning for \(L_{t}^{2}\), together with Proposition 3.2, completes the proof. \(\square \)
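As a worked summary of the steps of this proof, the tower property and Fubini’s theorem can be combined as follows. This is a sketch under stated assumptions: the symbol \(\mu _{1}\) is taken here to denote the mean order size \(\mathbb {E}(O_{1})\), and each order size is assumed to be independent of the past, so that \(L_{t}^{1}-\mu _{1}\int _{0}^{t}\lambda _{u}^{1}du\) is a martingale.

```latex
% Tower property, then the compensator of N^1, then Fubini's theorem
\mathbb{E}\left( N_t^1 \,|\, \mathcal{F}_s \right)
  = \mathbb{E}\left( \mathbb{E}\left( N_t^1 \,|\, \mathcal{F}_s \vee \mathcal{H}_t \right) \,|\, \mathcal{F}_s \right)
  = N_s^1 + \int_s^t \mathbb{E}\left( \lambda_u^1 \,|\, \mathcal{F}_s \right) du .

% Same argument for the cumulated order sizes, with mean size \mu_1 (assumed notation)
\mathbb{E}\left( L_t^1 \,|\, \mathcal{F}_s \right)
  = L_s^1 + \mu_1 \int_s^t \mathbb{E}\left( \lambda_u^1 \,|\, \mathcal{F}_s \right) du .
```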

Proof of Proposition 3.4

Recalling Eq. (48), we infer that the expectations of \(c_{j,t}\lambda _{t}^{i}\) for \(i,j=1,2\) are solutions of ordinary differential equations (ODEs):

We summarize this system of ODEs as follows:

If we denote \(U(t)=W^{-1}E(t)\), this last system can be rewritten as:

which admits the following solution:

and we can conclude. \(\square \)

Proof of Proposition 3.5

Recalling Eq. (27), we can develop it as follows:

and its expectation is given by

Integrating this last equation allows us to conclude. \(\square \)

Proof of Proposition 3.6

Recalling the expression (24) of the infinitesimal generator, we have

Given that \(\frac{\partial }{\partial t}g=\mathbb {E}\left( \mathcal {A}g\,|\,\mathcal {F}_{0}\right) \), we can conclude. \(\square \)

Proof of Proposition 3.9

Let us assume that \(\theta _{t}=e_{i}\). If we denote \(g(\lambda _{t}^{1},J_{t}^{1},\lambda _{t}^{2},J_{t}^{2},\theta _{t})=\mathbb {E}\left( \omega ^{N_{T}^{k}}\,|\,\mathcal {F}_{t}\right) \), then g is a solution of the following Itô equation for semimartingales:

Next, we assume that g is an exponential affine function of \(\lambda _{t}^{1}\), \(\lambda _{t}^{2}\) and \(N_{t}^{i}\):

where \(A(t,T,e_{i})\) for \(i=1\) to l, \(B_{1}(t,T)\), \(B_{2}(t,T)\) and C(t, T) are time-dependent functions. The partial derivatives of g are then given by:

The integrands in Eq. (53) are rewritten, with the notations \(A:=A(t,T,e_{i}),\,B_{1}:=B_{1}(t,T),\) \(B_{2}:=B_{2}(t,T)\) and \(C:=C(t,T)\), as follows:

As the transition rates in each row of the generator sum to zero, \(q_{ii}=-\sum _{j\ne i}^{l}q_{i,j}\), we have that

Eq. (53) then becomes:

from which we guess that \(C(t,T)=\ln \omega \). Regrouping terms allows us to infer that

Given that \(\lambda _{t}^{1}\) and \(\lambda _{t}^{2}\) are random quantities, this equation is satisfied if and only if

If we define \(\tilde{A}(t,T)=\left( e^{A(t,T,e_{1})},\ldots ,e^{A(t,T,e_{l})}\right) \), the last equations can finally be put in matrix form as:

\(\square \)

Proof of Proposition 3.11

From previous results, we know that \(B_{k}(t,T)\) is a solution of the following ODE

with terminal condition \(B_{k}(T,T)=\omega _{k+1}\). If we set \(B_{k}(t,T)=D_{k}(T-t)\) and \(\tau =T-t\), then

Thus we obtain

The left-hand side is then denoted \(h_{k}(D_{1},D_{2})\). Due to the convexity of \(\psi _{k}\), there is only one point \(\left( u_{1}^{*},u_{2}^{*}\right) \) such that \(h_{k}(u)=0\) for \(k=1,2\). These equations are indeed equivalent to

We rewrite Eq. (55) as follows:

As \(D_{k}(0)=\omega _{k+1}\) for \(k\in \{1,2\}\), direct integration gives

with \(D_{k}\in [\omega _{k+1},u_{k}^{*})\) or \(D_{k}\in [u_{k}^{*},\omega _{k+1})\).

Note that if \(\left( D_{1},D_{2}\right) =\left( u_{1}^{*},u_{2}^{*}\right) \) then \(\tau =+\infty \) as the numerator converges to zero. If we define the functions \(F_{\omega _{1}}^{1}(x,y)\) and \(F_{\omega _{1}}^{2}(x,y)\) from \(\mathbb {R}^{2}\) to \(\mathbb {R}^{+}\) by Eq. (36), \(D_{1}\) and \(D_{2}\) are such that \(F_{\omega _{1}}^{k}(D_{1},D_{2})=\tau \). If \(\left( F_{\omega _{1}}^{1}\right) ^{-1}(\tau \,|\,y)\) and \(\left( F_{\omega _{1}}^{2}\right) ^{-1}(\tau \,|\,x)\) are respectively the inverse functions of \(F_{\omega _{1}}^{1}(.,y)\) and \(F_{\omega _{1}}^{2}(x,.)\), then \(D_{1}\) and \(D_{2}\) satisfy the following system

or \(B_{1}(t,T)=\left( F_{\omega _{1}}^{1}\right) ^{-1}(T-t\,|\,B_{2}(t,T))\) and \(B_{2}(t,T)=\left( F_{\omega _{1}}^{2}\right) ^{-1}(T-t\,|\,B_{1}(t,T))\). \(\square \)

Cite this article

Hainaut, D., Goutte, S. A switching microstructure model for stock prices. Math Finan Econ 13, 459–490 (2019). https://doi.org/10.1007/s11579-018-00234-6

DOI: https://doi.org/10.1007/s11579-018-00234-6