Abstract

We introduce an unsupervised feature learning approach that embeds 3D shape information into a single-view image representation. The main idea is a self-supervised training objective that, given only a single 2D image, requires all unseen views of the object to be predictable from learned features. We implement this idea as an encoder-decoder convolutional neural network. The network maps an input image of an unknown category and unknown viewpoint to a latent space, from which a deconvolutional decoder can best “lift” the image to its complete viewgrid showing the object from all viewing angles. Our class-agnostic training procedure encourages the representation to capture fundamental shape primitives and semantic regularities in a data-driven manner—without manual semantic labels. Our results on two widely-used shape datasets show (1) our approach successfully learns to perform “mental rotation” even for objects unseen during training, and (2) the learned latent space is a powerful representation for object recognition, outperforming several existing unsupervised feature learning methods.

1 Introduction

The field has made tremendous progress on object recognition by learning image features from supervised image datasets labeled by object categories [19, 22, 36]. Methods tackling today’s challenging recognition benchmarks like ImageNet or COCO [10, 41] capitalize on the 2D regularity of Web photos to discover useful appearance patterns and textures that distinguish many object categories. However, there are limits to this formula: manual supervision is notoriously expensive, not all objects are well-defined by their texture, and (implicitly) learning viewpoint-specific category models is cumbersome if not unscalable.

Fig. 1. Learning ShapeCodes by lifting views to “viewgrids”. Given one 2D view of an unseen object (possibly from an unseen category), our deep network learns to produce the remaining views in the viewgrid. This self-supervised learning induces a feature space for recognition. It embeds valuable cues about 3D shape regularities that transcend object category boundaries.

Restricting learned representations to a 2D domain presents a fundamental handicap. While visual perception relies largely on 2D observations, objects in the world are inherently three-dimensional entities. In fact, cognitive psychologists find strong evidence that inferring 3D geometry from 2D views is a useful skill in human perception. For example, in their seminal “mental rotation” work, Shepard and colleagues observed that people attempting to determine whether two views portray the same abstract 3D shape spend time that is linearly proportional to the 3D angular rotation between those views [53]. Such findings suggest that humans may explicitly construct mental representations of 3D shape from individual 2D views and, further, that the act of mentally rotating such representations is integral to registering object views and, by extension, to object recognition.

Inspired by this premise, we propose an unsupervised approach to image feature learning that aims to “lift” individual 2D views to their 3D shapes. More concretely, we pose the feature learning task as one-shot viewgrid prediction from a single input view. A viewgrid—an array of views indexed by viewpoints—serves as an implicit image-based model of 3D shape. We implement our idea as an encoder-decoder deep convolutional neural network (CNN). Given one 2D view of any object from an arbitrary viewpoint, the proposed training objective learns a latent space from which images of the object after arbitrary rotations are predictable. See Fig. 1. Our approach extracts this latent “ShapeCode” encoding to generate image features for recognition. Importantly, our approach is class-agnostic: it learns a single model to accommodate all objects seen during training, thereby encouraging the representation to capture basic shape primitives, semantic regularities, and shading cues. Furthermore, the approach is self-supervised: it aims to learn a representation generically useful to object perception, but without manual semantic labels.

We envision the proposed training phase as if an embodied visual agent were learning visual perception from scratch by inspecting a large number of objects. It can move its camera to arbitrary viewpoints around each object to acquire its own supervision. At test time, it must be able to observe only one view and hallucinate the effects of all camera displacements from that view. In doing so, it secures a representation of objects that, in a departure from purely 2D view-based recognition, is inherently shape-aware. We discuss the advantages of viewgrids over explicit voxels/point clouds in Sect. 3.1.

Our work relates to a growing body of work on self-supervised representation learning [1, 12, 17, 26, 28, 39, 45, 46, 58, 66], where “pretext” tasks requiring no manual labels are posed to the feature learner to instill visual concepts useful for recognition. We pursue a new dimension in this area—instilling 3D reasoning about shape. The idea of inferring novel viewpoints also relates to work in CNN-based view synthesis and 3D reconstruction [7, 13, 18, 35, 50, 57, 60, 64, 67]. However, our intent and assumptions are quite different. Unlike our approach, prior work targets reconstruction as the end task itself (not recognition), builds category-specific models (e.g., one model for chairs, another for cars), and relies on networks pretrained with heavy manual supervision.

Our experiments on two widely used object/shape datasets validate that (1) our approach successfully learns class-agnostic image-based shape reconstruction, generalizing even to categories that were not seen during training and (2) the representations learned in the process transfer well to object recognition, outperforming several popular unsupervised feature learning approaches. Our results establish the promise of explicitly targeting 3D understanding as a means to learn useful image representations.

2 Related Work

Unsupervised representation learning. While supervised “pretraining” of CNNs with large labeled datasets is useful [19], it comes at a high supervision cost and there are limits to its transferability to tasks unlike the original labeled categories. As an increasingly competitive alternative, researchers investigate unsupervised feature learning [1, 4, 8, 12, 17, 26, 28, 39, 45, 46, 58, 59, 66]. An emerging theme is to identify “pretext” tasks, where the network targets an objective for which supervision is inherently free. In particular, features tasked with being predictive of contextual layout [12, 45, 46], camera egomotion [1, 26, 47], stereo disparities [16], colorization [39], or temporal slowness [17, 28, 58] simultaneously embed basic visual concepts useful for recognition. Our approach shares this self-supervised spirit and can be seen as a new way to force the visual learner to pick up on fundamental cues. In particular, our method expands this family of methods to multi-view 3D data, addressing the question: does learning to infer 3D from 2D help perform object recognition? While prior work considers 3D egomotion [1, 26], it is restricted to impoverished glimpses of the 3D world through “unattached” neighboring view pairs from video sequences. Instead, our approach leverages viewgrid representations of full 3D object shape. Our experiments comparing to egomotion self-supervision show our method’s advantage.

Recognition of 3D objects. Though 2D object models have dominated recognition in recent years (e.g., as evidenced in challenges like PASCAL, COCO, ImageNet), there is growing interest in grounding object models in their 3D structures and shapes. Recent contributions in large-scale data collections are fostering such progress [5, 62], and researchers are developing models to integrate volumetric and multiview approaches effectively [48, 56], as well as new ideas for relating 3D properties (pose, occlusion boundaries) to 2D recognition schemes [63]. Active recognition methods reason about the information value of unseen views of an object [27, 29, 33, 49, 51, 62].

Geometric view synthesis. For many years, new view synthesis was solved with geometry. In image-based rendering, rather than explicitly constructing a 3D model, new views are generated directly from multiple 2D views [34], with methods that establish correspondence and warp pixels according to projective or multi-view geometry [2, 52]. Image-based models for object shape (implicitly) intersect silhouette images to carve a visual hull [38, 44].

Learning 2D-3D relationships. More recently, there is interest in instead learning the connection between a view and its underlying 3D shape. The problem is tackled on two main fronts: image-based and volumetric. Image-based methods infer the new view as a function of a specified viewpoint. Given two 2D views, they learn to predict intermediate views [11, 15, 23, 32]. Given only a single view, they learn to render the observed object from new camera poses, e.g., via disentanglement with deep inverse graphic networks [37], tensor completion [6], recurrent encoder-decoder nets [30, 65], appearance flow [67], or converting partial RGBD to panoramas [55]. Access to synthetic object models is especially valuable to train a generative CNN [13]. Volumetric approaches instead map a view(s) directly to a 3D representation of the object, such as a voxel occupancy grid or a point cloud, e.g., with 3D recurrent networks [7], direct prediction of 3D points [14], or generative embeddings [18]. While most efforts study synthetic 3D object models (e.g., CAD datasets), recent work also ventures into real-world natural imagery [35]. Beyond voxels, inferring depth maps [57] or keypoint skeletons [60] offer valuable representations of 3D structure.

Our work builds on such advances in learning 2D-3D ties, and our particular convolutional autoencoder (CAE)-based pipeline (Sect. 3.2) echoes the de facto standard architecture for pixel-output tasks [37, 43, 57, 64, 66]. However, our goal differs from any of the above. Whereas existing methods develop category-specific models (e.g., chairs, cars, faces) and seek high-quality images/voxels as the end product, we train a class-agnostic model and seek a transferable image representation for recognition.

3 Approach

Our goal is to learn a representation that lifts a single image from an arbitrary (unknown) viewpoint and arbitrary class to a space where the object’s 3D shape is predictable—its ShapeCode. This task of “mentally rotating” an object from its observed viewpoint to arbitrary relative poses requires 3D understanding from single 2D views, which is valuable for recognition. By training on a one-shot shape reconstruction task, our approach aims to learn an image representation that embeds this 3D understanding and applies the resulting embedding for single-view recognition tasks.

3.1 Task Setup: One-Shot Viewgrid Prediction

During training, we first evenly sample views from the viewing sphere around each object. To do this, we begin by selecting a set \(S_{az}\) of M camera azimuths \(S_{az}=\{360^{\circ }/M, 720^{\circ }/M, \dots , 360^{\circ }\}\) centered around the object. Then we select a set \(S_{el}\) of N camera elevations \(S_{el}=\{0^{\circ }, \pm 180^{\circ }/(N-1), \pm 360^{\circ }/(N-1),\dots , \pm 90^{\circ } \}\). We now sample all \(M\times N\) views of every object corresponding to the Cartesian product \(S=S_{az}\times S_{el}\) of azimuth and elevation positions: \(\{\varvec{y}(\varvec{\theta _i}): \varvec{\theta _i}\in S\}\). Note that each \(\varvec{\theta _i}\) is an elevation-azimuth pair, and represents one position in the viewing grid S.
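The sampling scheme above can be sketched in a few lines of Python. This is an illustrative sketch only: the function names are hypothetical, and the values M = 12 and N = 7 are taken from the 7\(\times \)12 viewgrid mentioned later in the text. The sketch assumes N is odd so that \(0^{\circ }\) and \(\pm 90^{\circ }\) both fall on the grid.

```python
from itertools import product


def azimuths(m):
    """S_az = {360/M, 720/M, ..., 360}, in degrees."""
    return [360.0 * k / m for k in range(1, m + 1)]


def elevations(n):
    """S_el = {0, +-180/(N-1), ..., +-90}, in degrees (assumes odd N >= 3)."""
    if n < 3 or n % 2 == 0:
        raise ValueError("this sketch assumes an odd N >= 3")
    step = 180.0 / (n - 1)
    half = (n - 1) // 2
    return [step * k for k in range(-half, half + 1)]


def viewing_grid(m, n):
    """Cartesian product S = S_az x S_el as (azimuth, elevation) tuples."""
    return list(product(azimuths(m), elevations(n)))


grid = viewing_grid(12, 7)  # 12 azimuths x 7 elevations = 84 camera positions
```

With these values the azimuths are spaced \(30^{\circ }\) apart and the elevations run from \(-90^{\circ }\) to \(90^{\circ }\) in \(30^{\circ }\) steps.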

Now, with these evenly sampled views, the one-shot viewgrid prediction task can be formulated as follows. Suppose the observed view is at an unknown camera position \(\varvec{\theta }\) sampled from our viewing grid set S of camera positions. The system must learn to predict the views \(\varvec{y}(\varvec{\theta '})\) at position \(\varvec{\theta '}=\varvec{\theta }+\varvec{\delta _i}\) for all \(\varvec{\delta _i}\in S\). Because of the even sampling over the full viewing sphere, \(\varvec{\theta }'\) is itself also in our original viewpoint set S, so we have already acquired supervision for all views that our system must learn to predict.
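The closure property used here (a shifted viewpoint lands back on the sampled grid) is easy to check for the azimuth component, where the wrap-around is unambiguous. The sketch below covers azimuth only and makes no claim about how elevation offsets are handled; the helper name `shift_azimuth` and the choice M = 12 are assumptions for illustration.

```python
def shift_azimuth(theta_az, delta_az):
    """Add two azimuths (degrees) and wrap the result into (0, 360]."""
    wrapped = (theta_az + delta_az) % 360.0
    return 360.0 if wrapped == 0.0 else wrapped


M = 12  # assumed number of azimuth samples
s_az = [360.0 * k / M for k in range(1, M + 1)]

# Every observed azimuth plus every offset is again a sampled azimuth.
closed = all(shift_azimuth(t, d) in s_az for t in s_az for d in s_az)
```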

Why viewgrids? The viewgrid representation has advantages over other more explicit 3D representations such as point clouds [14] and voxel grids [7]. First, viewgrid images can be directly acquired by an embodied agent through object manipulation or inspection, whereas voxel grids and point clouds require noisy 3D inference from large image collections. While our experiments leverage realistic 3D object CAD models to render viewpoints on demand (Sect. 4), it is actually less dependent on CAD data than prior work requiring voxel supervision. Ultimately we envision training taking place in a physical scenario, where an embodied agent builds up its visual representation by examining various objects. By moving to arbitrary viewpoints around an object, it acquires self-supervision to understand the 3D shape. Finally, viewgrids facilitate the representation of missing data—if some ground truth views are unavailable for a particular object, the only change required in our training loss (below in Eq. 1) would be to drop the terms corresponding to unseen views.

3.2 Network Architecture and Training

To tackle the one-shot viewgrid prediction task, we employ a deep feed-forward neural network. Our network architecture naturally splits into four modular sub-networks with different functions: elevation sensor, image sensor, fusion, and finally, a decoder. Together, the elevation sensor, image sensor, and fusion modules process the observation and proprioceptive camera elevation information to produce a single feature vector that encodes the full object model. That vector space constitutes the learned ShapeCode representation. During training only, the decoder module processes this code through a series of learned deconvolutions to produce the desired image-based viewgrid reconstruction at its output.

Encoder: First, the image sensor module embeds the observed view through a series of convolutions and a fully connected layer into a vector. In parallel, the camera elevation angle is processed through the elevation sensor module. Note that the object pose is not fully known—while camera elevation can be determined from gravity cues, there is no way to determine the azimuth.

The outputs of the image and elevation sensor modules are concatenated and passed through a fusion module which jointly processes their information to produce a \(D=256\)-dimensional output “code” vector, which embeds knowledge of object shape. In short, the function of the encoder is to lift a 2D view to a single vector representation of the full 3D object shape.

Decoder: To learn a representation with this property, the output of the encoder is processed through another fully connected layer to increase its dimensionality before reshaping into a sequence of small \(4\times 4\) feature maps. These maps are then iteratively upsampled through a series of learned deconvolutional layers. The final output of the decoder module is a sequence of MN output maps \(\{\varvec{\hat{y}_i}: i=1,\dots M\times N\}\) of same height and width as the input image. Together these MN maps represent the system’s output viewgrid on which the training loss is computed.
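As a rough check of the shape arithmetic, the sketch below tracks the spatial size of the feature maps through the decoder. The layer count, kernel size, stride, and padding are assumptions chosen so that \(4\times 4\) maps reach the \(32\times 32\) input resolution; they are not stated in this section, and the actual specification is the one shown in Fig. 2. The size formula is the standard one for a transposed convolution without dilation or output padding.

```python
def deconv_out_size(size, kernel=4, stride=2, padding=1):
    """Output side length of a transposed convolution (no dilation)."""
    return (size - 1) * stride - 2 * padding + kernel


def decoder_sizes(start, num_layers, **layer_kwargs):
    """Side lengths after each of num_layers identical upsampling layers."""
    sizes = [start]
    for _ in range(num_layers):
        sizes.append(deconv_out_size(sizes[-1], **layer_kwargs))
    return sizes


# Assumed configuration: three stride-2 layers, starting from 4x4 maps.
sizes = decoder_sizes(start=4, num_layers=3)

# The last layer emits one map per viewgrid position (M = 12, N = 7 assumed).
num_output_maps = 12 * 7
```

Under these assumptions each layer doubles the resolution, so three layers suffice to go from 4 to 32 pixels per side.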

Fig. 2. Architecture of our system. A single view of an object (top left) and the corresponding camera elevation (bottom left) are processed independently in “image sensor” and “elevation sensor” neural net modules, before fusion to produce the ShapeCode representation of the input, which embeds the 3D object shape aligned to the observed view. This is now processed in a deconvolutional decoder. During training, the output is a sequence of images representing systematically shifted viewpoints relative to the observed view. During testing, novel 2D images are lifted into the ShapeCode representation to perform recognition.

The complete architecture, together with more detailed specifications, is visualized in Fig. 2. Our convolutional encoder-decoder [43] neural network architecture is similar to [30, 57, 64, 67]. As discussed above, however, the primary focus of our work is very different. We consider one-shot reconstruction as a path to useful image representations that lift 2D views to 3D, whereas existing work addresses the image/voxel generation task itself, and accordingly builds category-specific models.

By design, our approach must not exploit artificial knowledge about the absolute orientation of an object it inspects, either during training or testing. Thus, there is an important issue to deal with—what is the correspondence between the individual views in the target viewgrid and the system’s output maps? Recall that at test time, the system will be presented with a single view of a novel object, from an unknown viewpoint (elevation known, azimuth unknown). How then can it know the correct viewpoint coordinates for the viewgrid it must produce? It instead produces viewgrids aligned with the observed viewpoint at the azimuthal coordinate origin, similar to [30]. Azimuthal rotations of a given viewgrid all form an equivalence class. In other words, circularly shifting the 7\(\times \)12 viewgrid in Fig. 2 by one column will produce a different, but entirely valid viewgrid representation of the same airplane object.

To optimize the entire pipeline, we regress to the target viewgrid \(\varvec{y}\), which is available for each training object. Since our output viewgrids are aligned by the observed view, we must accordingly shift the target viewgrid before performing regression. This leads to the following minimization objective:

$$\mathcal {L}=\sum _{i=1}^{M\times N} \left( \varvec{\hat{y}_i} - \varvec{y}(\varvec{\theta }+\varvec{\delta _i})\right) ^2, \qquad (1)$$

where we omit the summation over the training set to keep the notation simple. Each output map \(\varvec{\hat{y}_i}\) is thus penalized for deviation from a specific relative circular shift \(\varvec{\delta _i}\) from the observed viewpoint \(\varvec{\theta }\). This one-shot reconstruction task enforces that the encoder must capture the full 3D object shape from observing just one 2D view. A similar reconstruction loss was proposed in [30] in a different context; they learned exploratory action policies by training them to select a sequence of views best suited to reconstruct entire objects and scenes.
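The shifted regression target and the squared-error objective described above can be sketched as follows. This is a minimal illustration, not the authors' implementation: viewgrids are stored as nested lists indexed `[elevation][azimuth]`, each view is a flat list of pixel values, and the sketch assumes that only the azimuth axis is shifted (elevation is known, so rows stay in place). The names `align_target` and `viewgrid_loss` are hypothetical.

```python
def align_target(viewgrid, observed_col):
    """Circularly shift azimuth columns so the observed view sits at column 0."""
    return [row[observed_col:] + row[:observed_col] for row in viewgrid]


def viewgrid_loss(pred, target):
    """Sum of squared pixel differences over all views in the viewgrid."""
    total = 0.0
    for pred_row, target_row in zip(pred, target):
        for pred_view, target_view in zip(pred_row, target_row):
            total += sum((p - t) ** 2 for p, t in zip(pred_view, target_view))
    return total


# Toy viewgrid: 2 elevations x 3 azimuths, 2 "pixels" per view.
canonical = [[[float(e), float(a)] for a in range(3)] for e in range(2)]

# The object was observed at azimuth column 1 (unknown to the network).
target = align_target(canonical, observed_col=1)

perfect = viewgrid_loss(target, target)        # prediction matches the target
misaligned = viewgrid_loss(canonical, target)  # prediction ignores the shift
```

In this toy case a prediction in canonical alignment is penalized even though it contains the right views, which is why the target has to be shifted to match the observed viewpoint before regression.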

Recent work targeting image synthesis has benefited from using adversarial (GAN) losses [20]. GAN losses help achieve correct low-level statistics in image patches, improving photorealism [25]. Rather than targeting realistic image synthesis as an end in itself, we target shape reconstruction for feature learning, so we use the standard \(\ell _2\) loss. During feature transfer, we discard the decoder entirely (see Sect. 3.3). See Supp. for optimization details.

3.3 ShapeCode Features for Object Recognition

During training, the objective is to minimize viewgrid error, to learn the latent space from which unseen views are predictable. Then, to apply our network to novel examples, the representation of interest is that same latent space output by the fusion module of the encoder—the ShapeCode. In the spirit of self-supervised representation learning, we hypothesize that features trained in this manner will facilitate high-level visual recognition tasks. This is motivated by the fact that in order to solve the reconstruction task effectively, the network must implicitly learn to lift 2D views of objects to inferred 3D shapes. A full 3D shape representation has many attractive properties for generic visual tasks. For instance, pose invariance is desirable for recognition; while difficult in 2D views, it becomes trivial in a 3D representation, since different poses correspond to simple transformations in the 3D space. Furthermore, the ShapeCode provides a representation that is equivariant to egomotion transformations, which is known to benefit recognition and emerges naturally in supervised networks [9, 23, 31, 40].

Suppose that the visual agent has learned a model as above for viewgrid prediction by inspecting 3D shapes. Now it is presented with a new recognition task, as encapsulated by a dataset of class-labeled training images from a disjoint set of object categories. We aim to transfer the 3D knowledge acquired in the one-view reconstruction task to this new task. Specifically, for each new class-labeled image, we directly represent it in the feature space represented by an intermediate fusion layer in the network trained for reconstruction. These features are then input to a generic machine learning pipeline that is trained for the categorization task.

Recall that the output of the fusion module in Fig. 2, which is the fc3 feature vector, is trained to encode 3D shape. In our experiments, we test the usefulness of features from fc3 and its two immediate preceding layers, fc2, and fc1, for solving object classification and retrieval tasks.
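To make the transfer step concrete, the following sketch classifies a feature vector with a k-nearest-neighbor vote, the classifier used in Sect. 4.3 with \(k=5\). The feature vectors here are toy 2D points standing in for fc1/fc2/fc3 activations, and the use of Euclidean distance is an assumption, since the distance metric is not specified in the text.

```python
from collections import Counter


def squared_distance(a, b):
    """Squared Euclidean distance between two equal-length feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def knn_predict(query, train_features, train_labels, k=5):
    """Return the majority label among the k training features nearest to query."""
    order = sorted(
        range(len(train_features)),
        key=lambda i: squared_distance(query, train_features[i]),
    )
    votes = Counter(train_labels[i] for i in order[:k])
    return votes.most_common(1)[0][0]


# Toy stand-ins for encoder features of labeled views.
train_features = [
    [0.0, 0.0], [0.1, 0.2], [0.3, 0.1],  # "chair" views
    [5.0, 5.0], [5.2, 4.9], [4.7, 5.1],  # "lamp" views
]
train_labels = ["chair", "chair", "chair", "lamp", "lamp", "lamp"]

label_a = knn_predict([0.2, 0.1], train_features, train_labels)
label_b = knn_predict([4.8, 5.1], train_features, train_labels)
```

A classifier with no trainable parameters, such as this one, keeps the comparison focused on the quality of the features rather than on the classifier.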

4 Experiments

First, we quantify performance for class-agnostic viewgrid completion (Sect. 4.2). Second, we evaluate the learned features for object recognition (Sect. 4.3).

4.1 Datasets

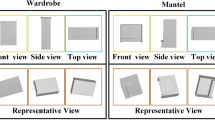

In principle, our self-supervised learning approach can leverage viewpoint-calibrated viewgrids acquired by an agent systematically inspecting objects in its environment. In our experiments, we generate such viewgrids from datasets of synthetic object shapes. We test our method on two such publicly available datasets: ModelNet [62] and ShapeNet [5]. Both of these datasets provide a large number of manually generated 3D models, with class labels. For each object model, we render 32\(\times \)32 grayscale views from a grid of viewpoints that is evenly sampled over the viewing sphere centered on the object.

ModelNet [62] has 3D CAD models downloaded from the Web, and then manually aligned and categorized. ModelNet comes with two standard subsets: ModelNet-10 and ModelNet-40, with 10 and 40 object classes respectively. The 40 classes in ModelNet-40 include the 10 classes in ModelNet-10. We use the 10 ModelNet-10 classes as our unseen classes, and the other 30 ModelNet-40 classes as seen classes. We use the standard train-test split, and set aside 20% of seen-class test set models as validation data. ModelNet is the most widely used dataset in recent 3D object categorization work [27, 29, 33, 48, 56, 61, 62].

ShapeNet [5] contains a large number of models organized into semantic categories under the WordNet taxonomy. All models are consistently aligned to fixed canonical viewpoints. We use the standard ShapeNetCore-v2 subset which contains 55 diverse categories. Of these, we select the 30 largest categories as seen categories, and the remaining 25 are unseen. We use the standard train-test split. Further, since different categories have highly varying numbers of object instances, we limit each category in our seen-class training set to 500 models, to prevent training from being dominated by models of a few very common categories. Table 1 (left) shows more details for both datasets.

4.2 Class-Agnostic One-Shot Viewgrid Prediction

First, we train and test our method for viewgrid prediction. For both datasets, the system is trained on the seen-classes training set. The trained model is subsequently tested on both seen and unseen class test sets.

The evaluation metric is the per-pixel mean squared deviation of the inferred viewgrid vs. the ground truth viewgrid. We compare to several baselines:

- Avg view: This baseline simply predicts, at each viewpoint in the viewgrid, the average of all views observed in the training set over all viewpoints.

- Avg viewgrid: Both ModelNet and ShapeNet have consistently aligned models, so there are significant biases that can be exploited by a method that has access to this canonical alignment information. This baseline aims to exploit this bias by predicting, at each viewpoint in the viewgrid, the average of all views observed in the training set at that viewpoint. Note that our system does not have access to this alignment information, so it cannot exploit this bias.

- GT class avg view: This baseline represents a model with perfect object classification. Given an arbitrary object from some ground truth category, this baseline predicts, at each viewpoint, the average of all views observed in the training set for that category.

- GT class avg viewgrid: This baseline is the same as GT class avg view, but also has knowledge of canonical alignments, so it predicts, at each viewpoint, the average of the views observed at that viewpoint over all training-set models in that category.

- Ours w. CA: This baseline is our approach but trained with the (unrealistic) addition of knowledge about canonical alignment (“CA”) of viewgrids. It replaces Eq. (1) with the loss \(\mathcal {L}=\sum _{i=1}^{M\times N} (\varvec{\hat{y}_i} - \varvec{y}(\varvec{\delta _i}))^2\), so that each output map \(\varvec{\hat{y}_i}\) of the system is now assigned to a specific coordinate in the canonical viewgrid axes.
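The difference between the “Avg view” and “Avg viewgrid” baselines, and the per-pixel MSE metric used to score them, can be illustrated with a toy example. In this sketch a viewgrid is a flat list of views in a fixed canonical order and each view is a flat list of pixel values; the function names and the toy data are hypothetical.

```python
def mean_views(views):
    """Pixel-wise mean of a list of equally sized views."""
    n = len(views)
    return [sum(pixels) / n for pixels in zip(*views)]


def avg_view_baseline(train_viewgrids):
    """One image: the mean over all training objects and all viewpoints."""
    all_views = [view for grid in train_viewgrids for view in grid]
    return mean_views(all_views)


def avg_viewgrid_baseline(train_viewgrids):
    """One image per viewpoint: the mean over objects at that viewpoint."""
    return [mean_views(views_here) for views_here in zip(*train_viewgrids)]


def viewgrid_mse(pred_grid, true_grid):
    """Per-pixel mean squared deviation between two viewgrids."""
    sq = [
        (p - t) ** 2
        for pred_view, true_view in zip(pred_grid, true_grid)
        for p, t in zip(pred_view, true_view)
    ]
    return sum(sq) / len(sq)


# Two training objects, two viewpoints, one pixel per view.
train = [[[0.0], [1.0]], [[0.5], [1.0]]]
test_grid = [[0.25], [1.0]]

avg_view = avg_view_baseline(train)
avg_grid = avg_viewgrid_baseline(train)

mse_avg_view = viewgrid_mse([avg_view] * len(test_grid), test_grid)
mse_avg_grid = viewgrid_mse(avg_grid, test_grid)
```

When the training objects share a canonical alignment, the per-viewpoint average retains structure that the single global average washes out, which is the bias the “Avg viewgrid” baseline is designed to exploit.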

A key question in these experiments is whether the class-agnostic model we train can generalize to predict unseen views of objects from classes never seen during training.

Fig. 3. Shape reconstructions from a single view (rightmost example from ShapeNet, other two from ModelNet). In each panel, ground truth viewgrids are shown at the top, the observed view is marked with a red box, and our method’s reconstructions are shown at the bottom. (Best seen in pdf at high resolution.) See text in Sect. 4.2 for description of these examples. (Color figure online)

Table 1 (right) shows the results. “Avg viewgrid” and “GT class avg viewgrid” improve by large margins over “Avg view” and “GT class avg view”, respectively. This shows that viewgrid alignment biases can be useful for reconstruction in ModelNet and ShapeNet. Recall however that while these baselines can exploit the (unrealistic) bias, our approach cannot; it knows only the elevation as sensed from gravity. Our approach is trained to produce views at various relative displacements from the observed view, i.e., its target is a viewgrid that has the current view at its origin. So it cannot learn to memorize and align an average viewgrid.

Despite this, our approach outperforms the baselines by large margins. It even outperforms its variant “Ours w. CA” that uses alignment biases in the data. Why is “Ours w. CA” weaker? It is trained to produce viewgrids with canonical alignments (CAs). CAs are loosely defined, manually assigned, and usually class-specific conventions in the dataset (e.g., 0\(^{\circ }\) azimuth and elevation for all cars might be “head on” views). “Ours w. CA” naturally has no notion of CAs for unseen categories, where it performs particularly poorly. On seen classes with strong alignment biases, CAs make it easier to capture category-wide information (e.g., if the category is recognizable, produce its corresponding average aligned training viewgrid as output). However, it is harder to capture instance-specific details, since the network must not only mentally rotate the input view but also infer its canonical pose correctly. “Ours” does better by aligning outputs to the observed viewpoint.

Figure 3 shows example viewgrids generated by our method. In the leftmost panel, it reconstructs an object shape from a challenging viewpoint, effectively exploiting the semantic structure in ModelNet. In the center panel, the system observes an ambiguous viewpoint that could be any one of four different views at the same azimuth. In response to this ambiguity, it attempts to play it safe to minimize MSE loss by averaging over possible outcomes, producing blurry views. In the rightmost panel, our method shows the ability to infer shape from shading cues for simple objects.

Figure 4 examines which views are informative for one-shot viewgrid prediction. For each of the three classes shown, the heatmap of MSE is overlaid on the average viewgrid for that class. The yellowish (high error) horizontal and vertical stripes correspond to angles that only reveal a small number of faces of the object. Top and bottom views are consistently uninformative, since very different shapes can have very similar overhead projections. The middle row (0\(^{\circ }\) elev.) stands out as particularly bad for “airplane” because of the narrow linear projection, which presents very little information. See Supp. for more. These trends agree with intuitive notions of which views are most informative for 3D understanding, and serve as evidence that our method learns meaningful cues to infer unseen views.

Overall, the reconstruction results demonstrate that our approach successfully learns one single unified category-agnostic viewgrid reconstruction model that handles not only objects from the large number of generic categories that are represented in its training set, but also objects from unseen categories.

Fig. 4. ModelNet reconstruction MSE for three classes, conditioned on observed view (best viewed in pdf at high resolution). Yellower colors correspond to high MSE (bad) and bluer colors correspond to low MSE (good). See text in Sect. 4.2. (Color figure online)

4.3 ShapeCode Features for Object Recognition

We now validate our key claim: the lifted features—though learned without manual labels—are a useful visual representation for recognition.

First, as described in Sect. 3.3, we extract features from various layers in the network (fc1, fc2, fc3 in Fig. 2) and use them as inputs to a classifier trained for categorization of individual object views. Though any classifier is possible, following [17, 31, 58], we employ a simple k-nearest neighbor classifier, which makes the power of the underlying representation most transparent. We run this experiment on both the seen and unseen class subsets on both ModelNet and ShapeNet. In each case, we use 1000 samples per class in the training set, and set \(k=5\). We compare our features against a variety of baselines:

- DrLIM [21]: A commonly used unsupervised feature learning approach. During training, DrLIM learns an invariant feature space by mapping features of views of the same training object close to each other, and pushing features of views of different training objects far apart from one another.

- Autoencoder [3, 24, 43]: A network is trained to observe an input view from an arbitrary viewpoint and produce exactly that same view as its output (compared to our method which produces the full viewgrid, including views from other viewpoints too). For this method, we use an architecture identical to ours except at the very last deconvolutional layer, where, rather than producing \(N\times M\) output maps, it predicts just one map corresponding to the observed view itself.

- Context [46]: Representative of the popular paradigm of exploiting spatial context to learn features [12, 45, 46]. The network is trained to “inpaint” randomly removed masks of arbitrary shape covering up to \(\frac{1}{4}\) of the \(32\times 32\) object views, thus learning spatial configurations of object parts. We adapt the public code of [46].

- Egomotion [1]: Like our method, this baseline also exploits camera motion to learn unsupervised representations. While our method is trained to predict all rotated views given a starting view, [1] trains to predict the camera rotation between a given pair of images. We train the model to predict 8 classes of rotations, i.e., the immediately adjacent viewpoints in the viewgrid for a given view (\(3\times 3\) neighborhood).

- PointSetNet [14]: This method reconstructs object shape point clouds from a single image, plus the ground truth segmentation mask. We extract features from their provided encoder network trained on ShapeNet. Since segmentation masks are unavailable in the feature evaluation setting, we set them to the whole image.

- 3D-R2N2 [7]: This method constructs a voxel grid from a single view. We extract features from their provided encoder network trained on ShapeNet.

- VGG [54]: While our focus is unsupervised feature learning, this baseline represents current standard supervised features, trained on millions of manually labeled images. We use the VGG-16 architecture [54] trained on ImageNet, and extract fc6 features from \(224\times 224\) images.

- Shape classifier: To provide a supervised baseline trained on in-domain data, we train a network for single-view 3D shape categorization using 1k labeled images per seen class. The architecture is kept identical to the encoder of our method, and features are extracted from the same layers.

- Pixels: For this baseline the \(32\times 32\) image is vectorized and used directly as a feature vector.

- Random weights: A network with identical architecture to ours and initialized with the same scheme is used to extract features with no training.
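To make the label space of the “Egomotion” baseline concrete, the following is a minimal sketch of how the 8 rotation classes of a \(3\times 3\) neighborhood could be enumerated. The class ordering, the function name `neighbor_pairs`, and the assumption that azimuth wraps around while elevation does not are illustrative choices, not details taken from [1] or from our implementation.

```python
from itertools import product

# The 8 non-zero (d_elev, d_azim) offsets of a 3x3 neighborhood.
# The ordering of the classes is an arbitrary choice for this sketch.
OFFSETS = [(de, da) for de, da in product((-1, 0, 1), repeat=2)
           if (de, da) != (0, 0)]
OFFSET_TO_CLASS = {offset: idx for idx, offset in enumerate(OFFSETS)}


def neighbor_pairs(num_elev, num_azim):
    """Yield (source_view, target_view, rotation_class) for adjacent views.

    Views are indexed as (elevation_index, azimuth_index). Assumption:
    azimuth wraps around the object, elevation does not.
    """
    for e, a in product(range(num_elev), range(num_azim)):
        for (de, da), label in OFFSET_TO_CLASS.items():
            e2 = e + de
            if 0 <= e2 < num_elev:  # skip offsets that leave the grid
                yield (e, a), (e2, (a + da) % num_azim), label
```

Each yielded triple corresponds to one training example for an 8-way rotation classifier: the two views are the network inputs and the class index is the target.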

The “Random weights”, “DrLIM”, “Egomotion”, and “Autoencoder” methods use identical architectures to ours until fc3 (see Supp). For “Context”, we stay close to the authors’ architecture and retrain on our 3D datasets. For “VGG”, “PointSetNet”, and “3D-R2N2”, we use author-provided model weights.
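As a reminder of the objective behind the “DrLIM” baseline, the sketch below writes out the standard pairwise contrastive loss of [21]: views of the same object are pulled together, and views of different objects are pushed apart up to a margin. The function name, the margin value, and the use of plain Python lists as features are assumptions made for illustration only.

```python
import math


def contrastive_loss(feat_a, feat_b, same_object, margin=1.0):
    """Pairwise contrastive loss in the style of DrLIM [21].

    feat_a, feat_b: feature vectors of two views (sequences of floats).
    same_object:    True if both views come from the same training object.
    margin:         hypothetical margin; dissimilar pairs farther apart
                    than this contribute no loss.
    """
    dist = math.dist(feat_a, feat_b)  # Euclidean distance
    if same_object:
        # Similar pair: penalize any distance between the features.
        return 0.5 * dist ** 2
    # Dissimilar pair: penalize only if closer than the margin.
    return 0.5 * max(0.0, margin - dist) ** 2
```

Summing this quantity over sampled view pairs gives a training objective of the kind described above; how pairs are sampled is left open here.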

Recall that our model is trained to observe camera elevations together with views, as shown in Fig. 2. While this is plausible in a real world setting where an agent may know its camera elevation angle from gravity cues, for fair comparison with our baselines, we omit proprioception inputs when evaluating our unsupervised features. Instead, we feed in camera elevation 0\(^{\circ }\) for all views.

Table 2 shows the results for both datasets. Since trends across fc1, fc2, and fc3 were all very similar, for each method, we report the accuracy from its best performing layer (see Supp for per-layer results). “Ours” strongly outperforms all prior approaches. Critically, our advantage holds whether or not the objects to be recognized are seen during training of the viewgrid prediction network.
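The evaluation protocol above (k-nearest neighbor classification with \(k=5\), reporting the best-performing layer per method) can be sketched as follows. This is a minimal pure-Python illustration under stated assumptions: the helper names, the Euclidean distance, and the tie-breaking rule are hypothetical and not taken from our implementation.

```python
import math
from collections import Counter


def knn_predict(train_feats, train_labels, query, k=5):
    """Predict a label by majority vote over the k nearest training features."""
    order = sorted(range(len(train_feats)),
                   key=lambda i: math.dist(train_feats[i], query))
    votes = Counter(train_labels[i] for i in order[:k])
    # On a tie, the label seen first among the nearest neighbors wins.
    return votes.most_common(1)[0][0]


def knn_accuracy(train_feats, train_labels, test_feats, test_labels, k=5):
    """Fraction of test features whose k-NN prediction matches the label."""
    correct = sum(knn_predict(train_feats, train_labels, q, k) == y
                  for q, y in zip(test_feats, test_labels))
    return correct / len(test_labels)


def best_layer(features_by_layer, train_labels, test_labels, k=5):
    """Return (layer_name, accuracy) for the best-performing layer.

    features_by_layer maps a layer name (e.g. "fc1") to a pair
    (train_feats, test_feats) extracted from that layer.
    """
    scores = {name: knn_accuracy(tr, train_labels, te, test_labels, k)
              for name, (tr, te) in features_by_layer.items()}
    name = max(scores, key=scores.get)
    return name, scores[name]
```

Because the classifier has no trainable parameters, differences in accuracy between methods can be attributed to the features themselves, which is the motivation for using k-NN given earlier.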

Among the baselines, all unsupervised learning methods outperform “Pixels” and “Random weights”, as expected. The two strongest unsupervised baselines are “Egomotion” [1] and “DrLIM” [21]. Recall that “Egomotion” is especially relevant to our approach as it also has access to relative camera motion information. However, while their method only sees neighboring view pairs sampled from the viewgrid at training time, our approach learns to infer full viewgrids for each instance, thus exploiting this information more effectively. “Autoencoder” features perform very poorly. “Context” features [46] are also poor, suggesting that spatial context within views, though successful for 2D object appearance [46], is a weak learning signal for 3D shapes.

The fact that our method outperforms PointSetNet [14] and 3D-R2N2 [7] suggests that it is preferable to train for generating implicit 3D viewgrids rather than explicit 3D voxels or point clouds. While we include them as baselines for this very purpose, we stress that the goal in those papers [7, 14] is reconstruction—not recognition—so this experiment expands those methods’ application beyond the authors’ original intent. We believe their poor performance is partly due to domain shift: ModelNet performance is weaker for both methods since they were trained on ShapeNet. Further, the authors train PointSetNet only on objects with specific poses (elevation 20\(^{\circ }\)) and it exploits ground truth segmentation masks which are unavailable in this setting. Both methods were also trained on object views rendered with small differences from ours (see Supp).

Finally, Table 2 shows that our self-supervised representation outperforms the ImageNet-pretrained supervised VGG features in most cases. Note that recognition tasks on synthetic shape datasets are commonly performed with ImageNet-pretrained neural networks [27, 29, 33, 56]. However, the domain gap between ImageNet and ModelNet/ShapeNet is not the sole reason for our advantage: our method also beats supervised features trained on in-domain data (“Shape classifier”). These results illustrate that our geometry-aware self-supervised pretraining has the potential to supersede traditional ImageNet pretraining, and even domain-specific supervised pretraining.

Figure 5 visualizes a t-SNE [42] embedding of unseen ModelNet-10 class images using ShapeCode features. As can be seen, categories tend to cluster, including those with diverse appearance like ‘chair’. ‘Chair’ and ‘toilet’ are close, as are ‘dresser’ and ‘night stand’, showing the emergence of high-level semantics.

In Supp, we test ShapeCode’s transferability to recognition tasks under many varying experimental conditions, including varying training dataset sizes for the k-NN classifier, and performing object category retrieval rather than categorization. A unified and consistent picture emerges: ShapeCode is a significantly better feature representation than the baselines for high-level recognition tasks.

5 Conclusions

We proposed ShapeCodes, self-supervised features trained for a mental rotation task so that they embed generic 3D shape priors useful to recognition. ShapeCodes outperform an array of state-of-the-art approaches for unsupervised feature learning, establishing the promise of explicitly targeting 3D understanding to learn useful image representations.

While we test our approach on synthetic object models, we are investigating whether features trained on synthetic objects could generalize to real images. Further, an embodied agent could in principle inspect physical objects to acquire viewgrids to allow training on real objects. Future work will explore extensions to permit sequential accumulation of observed views of real objects. We will also investigate reconstruction losses expressed at a more abstract level than pixels, e.g., in terms of a feature content loss.

References

Agrawal, P., Carreira, J., Malik, J.: Learning to see by moving. In: ICCV (2015)

Avidan, S., Shashua, A.: Novel view synthesis in tensor space. In: CVPR (1997)

Bengio, Y.: Learning deep architectures for AI. Foundations and Trends® in Machine Learning 2(1), 1–127 (2009)

Bojanowski, P., Joulin, A.: Unsupervised learning by predicting noise. In: ICML (2017)

Chang, A.X., et al.: ShapeNet: an information-rich 3d model repository. Technical report arXiv:1512.03012 [cs.GR], Stanford University—Princeton University—Toyota Technological Institute at Chicago (2015)

Chen, C.Y., Grauman, K.: Inferring unseen views of people. In: CVPR (2014)

Choy, C., Xu, D., Gwak, J., Chen, K., Savarese, S.: 3d–r2n2: a unified approach for single and multi-view 3d object reconstruction. In: ECCV (2016)

Coates, A., Ng, A., Lee, H.: An analysis of single-layer networks in unsupervised feature learning. In: Proceedings of the fourteenth international conference on artificial intelligence and statistics, pp. 215–223 (2011)

Cohen, T.S., Welling, M.: Transformation properties of learned visual representations. In: ICLR (2014)

Deng, J., Dong, W., Socher, R., Li, L.J., Li, K., Fei-Fei, L.: Imagenet: a large-scale hierarchical image database. In: CVPR (2009)

Ding, W., Taylor, G.: “Mental rotation” by optimizing transforming distance. In: NIPS Workshop (2014)

Doersch, C., Gupta, A., Efros, A.: Unsupervised visual representation learning by context prediction. In: ICCV (2015)

Dosovitskiy, A., Springenberg, J., Brox, T.: Learning to generate chairs with convolutional neural networks. In: CVPR (2015)

Fan, H., Su, H., Guibas, L.: A point set generation network for 3D object reconstruction from a single image. In: CVPR (2017)

Flynn, J., Neulander, I., Philbin, J., Snavely, N.: Deepstereo: learning to predict new views from the world’s imagery. In: CVPR (2015)

Gan, C., Gong, B., Liu, K., Su, H., Guibas, L.J.: Geometry guided convolutional neural networks for self-supervised video representation learning. In: CVPR (2018)

Gao, R., Jayaraman, D., Grauman, K.: Object-centric representation learning from unlabeled videos. In: Lai, S.-H., Lepetit, V., Nishino, K., Sato, Y. (eds.) ACCV 2016. LNCS, vol. 10115, pp. 248–263. Springer, Cham (2017). https://doi.org/10.1007/978-3-319-54193-8_16

Girdhar, R., Fouhey, D.F., Rodriguez, M., Gupta, A.: Learning a predictable and generative vector representation for objects. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9910, pp. 484–499. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46466-4_29

Girshick, R., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: CVPR (2014)

Goodfellow, I., et al.: Generative adversarial nets. In: NIPS (2014)

Hadsell, R., Chopra, S., LeCun, Y.: Dimensionality reduction by learning an invariant mapping. In: CVPR (2006)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: CVPR (2016)

Hinton, G.E., Krizhevsky, A., Wang, S.D.: Transforming auto-encoders. In: Honkela, T., Duch, W., Girolami, M., Kaski, S. (eds.) ICANN 2011. LNCS, vol. 6791, pp. 44–51. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-21735-7_6

Hinton, G.E., Salakhutdinov, R.R.: Reducing the dimensionality of data with neural networks. Science 313(5786), 504–507 (2006)

Isola, P., Zhu, J.Y., Zhou, T., Efros, A.A.: Image-to-image translation with conditional adversarial networks. In: CVPR (2017)

Jayaraman, D., Grauman, K.: Learning image representations tied to ego-motion. In: ICCV (2015)

Jayaraman, D., Grauman, K.: Look-ahead before you leap: end-to-end active recognition by forecasting the effect of motion. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9909, pp. 489–505. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46454-1_30

Jayaraman, D., Grauman, K.: Slow and steady feature analysis: higher order temporal coherence in video. In: CVPR (2016)

Jayaraman, D., Grauman, K.: End-to-end policy learning for active visual categorization. In: TPAMI (2018)

Jayaraman, D., Grauman, K.: Learning to look around: intelligently exploring unseen environments for unknown tasks. In: CVPR (2018)

Jayaraman, D., Grauman, K.: Learning image representations tied to egomotion from unlabeled video. Int. J. Comput. Vis. 125, 136–161 (2017)

Ji, D., Kwon, J., McFarland, M., Savarese, S.: Deep view morphing. In: CVPR (2017)

Johns, E., Leutenegger, S., Davison, A.: Pairwise decomposition of image sequences for active multi-view recognition. In: CVPR (2016)

Kang, S.B.: A survey of image-based rendering techniques. In: Videometrics SPIE International Symposium on Electronic Imaging: Science and Technology (1999)

Kar, A., Tulsiani, S., Carreira, J., Malik, J.: Category-specific object reconstruction from a single image. In: CVPR (2015)

Krizhevsky, A., Sutskever, I., Hinton, G.: Imagenet classification with deep convolutional neural networks. In: NIPS (2012)

Kulkarni, T., Whitney, W., Kohli, P., Tenenbaum, J.: Deep convolutional inverse graphics network. In: NIPS (2015)

Kutulakos, K., Seitz, S.: A theory of shape by space carving. In: IJCV (2000)

Larsson, G., Maire, M., Shakhnarovich, G.: Colorization as a proxy task for visual understanding. In: CVPR (2017)

Lenc, K., Vedaldi, A.: Understanding image representations by measuring their equivariance and equivalence. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 991–999 (2015)

Lin, T.Y., et al.: Microsoft COCO: common objects in context. In: Fleet, D., Pajdla, T., Schiele, B., Tuytelaars, T. (eds.) ECCV 2014. LNCS, vol. 8693, pp. 740–755. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-10602-1_48

van der Maaten, L., Hinton, G.: Visualizing data using t-SNE. JMLR 9, 2579–2625 (2008)

Masci, J., Meier, U., Cireşan, D., Schmidhuber, J.: Stacked convolutional auto-encoders for hierarchical feature extraction. In: Honkela, T., Duch, W., Girolami, M., Kaski, S. (eds.) ICANN 2011. LNCS, vol. 6791, pp. 52–59. Springer, Heidelberg (2011). https://doi.org/10.1007/978-3-642-21735-7_7

Matusik, W., Buehler, C., Raskar, R., Gortler, S., McMillan, L.: Image-based visual hulls. In: SIGGRAPH (2000)

Noroozi, M., Favaro, P.: Unsupervised learning of visual representations by solving jigsaw puzzles. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9910, pp. 69–84. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46466-4_5

Pathak, D., Krahenbuhl, P., Donahue, J., Darrell, T., Efros, A.A.: Context encoders: feature learning by inpainting. In: CVPR (2016)

Poier, G., Schinagl, D., Bischof, H.: Learning pose specific representations by predicting different views. In: CVPR (2018)

Qi, C.R., Su, H., Nießner, M., Dai, A., Yan, M., Guibas, L.J.: Volumetric and multi-view cnns for object classification on 3d data. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp. 5648–5656 (2016)

Ramanathan, V., Pinz, A.: Active object categorization on a humanoid robot. In: VISAPP (2011)

Rezende, D., Eslami, S., Mohamed, S., Battaglia, P., Jaderberg, M., Heess, N.: Unsupervised learning of 3d structure from images. In: NIPS (2016)

Schiele, B., Crowley, J.: Transinformation for active object recognition. In: ICCV (1998)

Seitz, S., Dyer, C.: View morphing. In: SIGGRAPH (1996)

Shepard, R.N., Metzler, J.: Mental rotation of three-dimensional objects. Science 171, 701–703 (1971)

Simonyan, K., Zisserman, A.: Very deep convolutional networks for large-scale image recognition. In: ICLR (2015)

Song, S., et al.: Im2pano3d: extrapolating 360 structure and semantics beyond the field of view. In: CVPR (2018)

Su, H., Maji, S., Kalogerakis, E., Learned-Miller, E.: Multiview convolutional neural networks for 3d shape recognition. In: ICCV (2015)

Tatarchenko, M., Dosovitskiy, A., Brox, T.: Multi-view 3d models from single images with a convolutional network. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9911, pp. 322–337. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46478-7_20

Wang, X., Gupta, A.: Unsupervised learning of visual representations using videos. In: ICCV (2015)

Wang, X., He, K., Gupta, A.: Transitive invariance for self-supervised visual representation learning. In: ICCV (2017)

Wu, J., et al.: Single image 3d interpreter network. In: ECCV (2016)

Wu, J., Zhang, C., Xue, T., Freeman, B., Tenenbaum, J.: Learning a probabilistic latent space of object shapes via 3d generative-adversarial modeling. In: NIPS (2016)

Wu, Z., et al.: 3d shapenets: A deep representation for volumetric shapes. In: CVPR (2015)

Xiang, Y., Choi, W., Lin, Y., Savarese, S.: Data-driven 3d voxel patterns for object category recognition. In: CVPR (2015)

Yan, X., Yang, J., Yumer, E., Guo, Y., Lee, H.: Perspective transformer nets: Learning single-view 3d object reconstruction without 3d supervision. In: NIPS (2016)

Yang, J., Reed, S., Yang, M.H., Lee, H.: Weakly supervised disentangling with recurrent transformations in 3d view synthesis. In: NIPS (2015)

Zhang, R., Isola, P., Efros, A.: Split-brain autoencoders: unsupervised learning by cross-channel prediction. In: CVPR (2017)

Zhou, T., Tulsiani, S., Sun, W., Malik, J., Efros, A.A.: View synthesis by appearance flow. In: Leibe, B., Matas, J., Sebe, N., Welling, M. (eds.) ECCV 2016. LNCS, vol. 9908, pp. 286–301. Springer, Cham (2016). https://doi.org/10.1007/978-3-319-46493-0_18

Acknowledgements

This research is supported in part by DARPA Lifelong Learning Machines, ONR PECASE N00014-15-1-2291, an IBM Open Collaborative Research Award, and Berkeley DeepDrive.

© 2018 Springer Nature Switzerland AG

About this paper

Cite this paper

Jayaraman, D., Gao, R., Grauman, K. (2018). ShapeCodes: Self-supervised Feature Learning by Lifting Views to Viewgrids. In: Ferrari, V., Hebert, M., Sminchisescu, C., Weiss, Y. (eds) Computer Vision – ECCV 2018. ECCV 2018. Lecture Notes in Computer Science(), vol 11220. Springer, Cham. https://doi.org/10.1007/978-3-030-01270-0_8

DOI: https://doi.org/10.1007/978-3-030-01270-0_8

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-01269-4

Online ISBN: 978-3-030-01270-0

eBook Packages: Computer Science, Computer Science (R0)