Abstract

The paper presents a new theory of invariants to Gaussian blur. Unlike earlier methods, the blur kernel may be arbitrary oriented, scaled and elongated. Such blurring is a semi-group action in the image space, where the orbits are classes of blur-equivalent images. We propose a non-linear projection operator which extracts blur-insensitive component of the image. The invariants are then formally defined as moments of this component but can be computed directly from the blurred image without an explicit construction of the projections. Image description by the new invariants does not require any prior knowledge of the particular blur kernel shape and does not include any deconvolution. Potential applications are in blur-invariant image recognition and in robust template matching.

This work was supported by the Czech Science Foundation (Grant No. GA18-07247S), by the Praemium Academiae, and by the Grant Agency of the Czech Technical University (Grant No. SGS18/188/OHK4/3T/14).

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

1 Introduction

One of the most common degradations in image processing is blur. Capturing an ideal scene f by an imaging device with the blurring point-spread function (PSF) h, the observed image g can be modeled as a convolution of both

This linear image formation model is a reasonably accurate approximation of many imaging devices and acquisition scenarios. In this paper, we concentrate our attention to the case when the PSF is a general anisotropic Gaussian function with unknown parameters.

Gaussian blur appears whenever the image has been acquired through a turbulent medium and the acquisition/exposure time is by far longer than the period of Brownian motion of the particles in the medium. Ground-based astronomical imaging through the atmosphere, taking pictures through a fog, underwater imaging, and fluorescence microscopy are typical examples of such situation (in some cases, the blur may be coupled with a contrast decrease). Gaussian blur is also introduced into the images as the sensor blur which is due to a finite size of the sampling pulse. Gaussian blur may be sometimes applied intentionally, for instance due to an on-chip denoising.

A complete recovering of f from Eq. (1) is an ill-posed inverse problem, whose solution, regardless of the particular method used, is ambiguous, unstable and time consuming [1, 2, 13, 14].

If our goal is an object classification, a complete knowledge of f is not necessary. Highly compressed information about the object, even if it has been extracted from a blurred image without any restoration, could be sufficient provided that the features used for object description are not much affected by blur. This idea was originally proposed by Flusser et al. [4,5,6, 12] who introduced so-called blur invariants with respect to non-parametric centrosymmetric and N-fold symmetric h. For Gaussian blur, first heuristically derived blur invariants were proposed by Liu and Zhang [10]. Later on, Zhang et al. [15] proposed a distance measure between two images which is independent of a circular Gaussian blur. Most recently, Flusser et al. [3] introduced a complete set of moment-based Gaussian blur invariants for the case that the Gaussian PSF has a diagonal covariance matrix.

In this paper, we substantially generalize the invariants proposed in [3]. While in [3] the Gaussian kernel must be in the axial position (which is constrained by the diagonal form of its covariance matrix), here we resolve the general case of an anisotropic arbitrary oriented Gaussian blurring kernel with a general covariance matrix. This allows to apply the invariants directly without testing the blur kernel orientation (which is in fact not feasible in practice).

2 Group-Theoretic Viewpoint

In this Section, we establish the necessary mathematical background which will be later used for designing the invariants. The new blur invariants are defined by means of nonlinear projection operators.

By image function (or just image for short) \(f(\mathbf{x})\) we understand any function from \(L_1(\mathbb {R}^2)\) space the integral of which is nonzero. 2D Gaussian \(G_{\varSigma }\) is defined as

where \( {\mathbf x} \equiv (x,y)^T \) and \(\varSigma \) is a \(2 \times 2\) regular covariance matrix which determines the shape of the Gaussian (the eigenvectors of \(\varSigma \) define the axes of the Gaussian and the ratio of the eigenvalues determines its elongation).

The set S of all Gaussian blurring kernels is

For the sake of generality, we consider unnormalized kernels to be able to model also the change of the image contrast and/or brightness. The basic properties of the set S, among which the closure properties play the most important role in deriving invariants, are listed below.

Proposition 1:

\(S \subset L_1\) since \(\int aG_{\varSigma } = a\). However, S is not a linear vector space because the sum of two different Gaussians is not a Gaussian.

Proposition 2:

Convolution closure. S is closed under convolution as

where \(a = a_1a_2\) and \(\varSigma = \varSigma _1 + \varSigma _2\).

Proposition 3:

Multiplication closure. S is closed under point-wise multiplication as

where

and \(\varSigma = (\varSigma _1^{-1} + \varSigma _2^{-1})^{-1}\).

Proposition 4:

Fourier transform closure. Fourier transform of a function from S always exists, lies in S and is given by

where

Proposition 2 says that \((S,*)\) is a commutative semi-group (when considering \(\delta \)-function to be an additional element of S). Hence, convolution with a function from S is a semi-group action on \(L_1\).

Orbits of this semi-group action are formed by Gaussian-blur equivalent images. We say that f and g are Gaussian blur equivalent (\(f \sim g\)), if and only if there exist \(h_1, h_2 \in S\) such that \(h_1*f = h_2*g\). The orbits (i.e. the blur-equivalent classes) can be described by their “origins” – the images, that are not blurred versions of any other images. We are going to show that each orbit contains only one such element. We are going to find these “origins” (we will call them primordial images) and describe them by means of properly chosen descriptors – invariants of the orbits. For instance, set S itself forms an orbit with \(\delta \) being its primordial image. The invariants should stay constant within the orbit while should distinguish any two images belonging to different orbits. Such invariance is in fact the invariance w.r.t. arbitrary Gaussian blur. The main trick, which makes this theory practically applicable, is that the invariants can be calculated from the given blurred image without explicitly constructing the primordial image.

In next Section, we define a projection operator that “projects” each image on S. The primordial images and, consequently, Gaussian blur invariants are constructed by means of this projection operator.

3 Projection Operators and Invariants

The main idea is the following one. We try to construct a proper image projection onto the set S, eliminate somehow the Gaussian component of the image and define the invariants in the complement. However, since S is not a vector space, such projection cannot be linear.

Let us define the projection operator P such that it projects image f onto the nearest unnormalized Gaussian, where the term “nearest” means the Gaussian having the same integral and covariance matrix as the image f itself. So, we define

where

and \(m_{pq}\) is a centralized image moment

with \((c_1,c_2)\) being the image centroid.

Clearly, P is well defined for all images of non-zero integral of regular C. Actually, \(Pf \in S\) for any such f. Although P is not linear, it can still be called projection operator, because it is idempotent \(P^2 = P\). In particular, \(P(aG_\varSigma ) = aG_\varSigma \). By means of P, any function f can be uniquely expressed as \(f = Pf + f_n\), where Pf is a Gaussian component and \(f_n\) can be considered a “non-Gaussian” component of f.

A key property of P, which will be later used for construction of the invariants, is that it commutes with a convolution with a Gaussian kernel. It holds, for any f and \(G_\varSigma \),

Now we can formulate the main Theorem of this paper.

Theorem 1

Let f be an image function. Then

is an invariant to Gaussian blur, i.e. \( I(f) = I(f*h) \) for any \(h \in S\).

The proof follows immediately from Eq. (6). Note that I(f) is well defined on all frequencies because the denominator is non-zero everywhere.

The following Theorem says that invariant I(f) is complete, which means the equality \(I{(f_1)} = I{(f_2)}\) occurs if and only if \(f_1\) and \(f_2\) belong to the same orbit.

Theorem 2

Let \(f_1\) and \(f_2\) be two image functions and I(f) be the invariant defined in Theorem 1. Then \(I{(f_1)} = I{(f_2)}\) if and only if there exist \(h_1, h_2 \in S\) such that \(h_1*f_1 = h_2*f_2\).

The proof is straightforward by setting \(h_1 = Pf_2\) and \(h_2 = Pf_1\). The completeness guarantees that I(f) discriminates between the images from different orbits, while stays constant inside an orbit due to the invariance property.

Invariant I(f) is a ratio of two Fourier transforms which may be interpreted as a deconvolution in frequency domain. Having an image f, we seemingly “deconvolve” it by the kernel P(f), which is in fact the Gaussian component of image f. This deconvolution always sends the Gaussian component to \(\delta \)-function. We call the result of this seeming deconvolution the primordial image

Hence, I(f) can be viewed as its Fourier transform, although \(f_r\) may not exist in \(L_1\) or may even not exist at all. Note that \(f_r\) is actually the “maximally possible” deconvolved non-Gaussian component of f plus \(\delta \)-function and creates the origin of the respective orbit. It can be viewed as a kind of normalization (or canonical form) of f w.r.t. arbitrary Gaussian blurring (see Fig. 1 for illustration).

Projection operator P divides each image into its Gaussian component Pf, which is projected onto S and a non-Gaussian component. The orbits are symbolically depicted as cones with the primordial image in the vertex. The primordial image represents the orbit, its non-Gaussian component provides discriminative description of the orbit.

4 The Invariants in the Image Domain

Although I(f) itself could serve as an image descriptor, its direct usage brings certain difficulties and disadvantages. On high frequencies, we divide by small numbers which may lead to precision loss. This effect is even more severe if f is noisy. This problem could be overcome by suppressing high frequencies by a low-pass filter, but such a procedure would introduce a user-defined parameter (the cut-off frequency) which should be set up with respect to the particular noise level. Another disadvantage is that we would have to actually construct \(\mathcal{F}(Pf)\) in order to calculate I(f). That is why we prefer to work directly in the image domain, where moment-based invariants equivalent to I(f) can be constructed and evaluated without an explicit calculation of Pf.

First of all, we recall that geometric moments of an image are Taylor coefficients (up to a constant factor) of its Fourier transform

(we use the multi-index notation). Theorem 1 can be rewritten as

All these three Fourier transforms can be expanded similarly to (7) into absolutely convergent Taylor series

Comparing the coefficients of the same powers of \(\mathbf {u}\) we obtain, for any \(\mathbf {p}\),

which can be read as

In 2D, Eq. (10) reads as

Since \(Pf = m_{00}^{(f)}G_C\), where C is given by the second-order moments of f, we can express its moments \(m_{mn}^{(Pf)}\) without actually constructing the projection Pf. Clearly, \(m_{mn}^{(Pf)} = 0\) for any odd \(m+n\) due to the centrosymmetry of \(G_C\). For any even \(m+n\), \(m_{mn}^{(Pf)}\) can be expressed in terms of the moments of f as

The above formula was obtained by substituting our particular C into the general formula for moments of a 2D Gaussian.

Now we can isolate \(M_{pq}\) on the left-hand side and obtain the recurrence

This recurrence formula defines Gaussian blur invariants in the image domain. Since I(f) has been proven to be invariant to Gaussian blur, all coefficients \(M_{pq}\) must also be blur invariants. The \(M_{pq}\)’s can be understood as the moments of the primordial image \(f_r\). The power of Eq. (13) lies in the fact that we can calculate them directly from the moments of f, without constructing the primordial image explicitly either in frequency or in the spatial domain and also without any prior knowledge of the blurring kernel shape and orientation.

Thanks to the uniqueness of Fourier transform, the set of all \(M_{pq}\)’s carries the same information about the function f as I(f) itself, so the cumulative discrimination power of all \(M_{pq}\)’s equals to that of I(f).

Some of the invariants (13) are always trivial. Regardless of f, we have \(M_{00} = 1\), \(M_{10} = M_{01} = 0\) because we work in centralized coordinates, and \(M_{20} = M_{11} = M_{02} = 0\) since these three moments had been already used for the definition of Pf.

Note that the joint null-space of all \(M_{pq}\)’s except \(M_{00}\) equals the set S, which is implied by the fact that \(P(aG_{\varSigma }) = aG_{\varSigma }\) and \(f_r = \delta \).

5 Experiments

5.1 Verification on Public Datasets

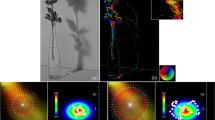

This basic experiment is a verification of the invariance of functionals \(M_{pq}\) from Eq. (13). We used two public-domain image databases, which contain series of Gaussian-blurred images (see Fig. 2 for examples).

We used 30 series (original and five blurred instances of various extent) from the CID:IQ dataset [11] and 23 series from the CSIQ dataset [9]. For each of them, we calculated the invariants up to the 9th order. The relative error of all invariants on each image series was always between \(10^{-4}\) and \(10^{-3}\), which illustrates a perfect invariance. The fluctuation within a single series is so small that in no way diminishes the ability to discriminate two different originals, as is illustrated in Fig. 3.

5.2 Influence of the Kernel Orientation

In this experiment, we used elongated Gaussian kernel in the axial position (the quotient of its eigenvalues was 2) and we rotated it gradually from 0 to \(\pi \) radians by one degree. We blurred standard Lena image by each rotated kernel, calculated the invariants from [3] and those given by Eq. (13) up to the 8th order, compared them to the same invariants calculated from the original sharp image, and plotted their mean relative errors (see Fig. 4). While the MRE of the new invariants only slightly oscillates around \(10^{-8}\) due to sampling errors (which means the MRE is sufficiently small and basically does not depend on the kernel orientation), the behavior of the invariants from [3] is different. Their MRE is around \(10^{-3}\) for most kernel orientations and exhibits narrow drops to \(10^{-8}\) for the axes orientation close to 0, \(\pi /2\) and \(\pi \). This is because for these axes orientations, both invariant sets are exactly equivalent. The most remarkable fact is that even for very small deviations from the “horizontal/vertical” orientation of the Gaussian, the MRE grows up quickly by several orders. This illustrates that the new invariants are actually a significant improvement and generalization of the method from [3].

Mean relative errors of the Gaussian blur invariants from [3] (red curve, top) and the same of the proposed ones (blue curve, bottom) as functions of the kernel orientation. (Color figure online)

5.3 Recognition of Blurred Faces

In the last experiment we show the performance of the proposed invariants in face recognition applied on blurred photographs. We compare our method with the approach proposed by Gopalan et al. [8], which is probably the most relevant competitor. Gopalan et al. derived invariants to image blurring and claimed they are suitable particularly for face recognition. They did not employ any parametric form of the blur kernel when constructing invariants (in that sense, their method is more general than ours which is restricted to Gaussian blur) but assumed the knowledge of its support size.

We used 38 distinct human faces from the Extended Yale Face Database B [7] (the same database was used in [8]). This database contains only sharp faces, so we created the blurred and noisy query images artificially (see Fig. 5 for some examples). In all tests, moment invariants up to the 9th order were used. The results are summarized in Table 1.

First, we tested the recognition rate with respect to the blur size. The blurred query image was always classified against the clear 38-image database. While moment invariants are 100% successful even for relatively large blurs, the Gopalan’s method does not reach any comparable results and the success rate drops very rapidly with the increasing blur size, even if we provided the correct blur size as the input parameter of the algorithm.

Then, we tested the noise robustness of both methods. We corrupted the probe images with AWGN of SNR from 50 to 5. The success rate of moment invariants remains 100%, but the Gopalan’s method appears to be very vulnerable. This can be explained by the fact that the moments, being integral features, average-out the noise while the Gopalan’s invariants do not have this property.

Finally, we compared the speed of both methods, again for various image size. The data in the table are related to a single query, they do not comprise pre-calculations on the database images. The moment method is much faster because the invariants up to the 9th order are a highly-compressed image representation (but still sufficient for recognition) while the Gopalan’s method works with a complete pixel-wise representation to construct invariants.

6 Conclusion

We proposed new invariants w.r.t. Gaussian blur. Unlike all earlier works, we do not assume the blurring kernel to be circularly symmetric or in axial position. Still, we found a non-linear projection operator by means of which the invariants are defined in the Fourier domain. Equivalently, the invariants can be calculated directly in the image domain, without an explicit construction of the projections. We proved by experiments superior recognition abilities, stability and robustness, at least on simulated data that follow the assumed degradation model.

References

Carasso, A.S.: The APEX method in image sharpening and the use of low exponent Lévy stable laws. SIAM J. Appl. Math. 63(2), 593–618 (2003)

Elder, J.H., Zucker, S.W.: Local scale control for edge detection and blur estimation. IEEE Trans. Pattern Anal. Mach. Intell 20(7), 699–716 (1998)

Flusser, J., Farokhi, S., Höschl IV, C., Suk, T., Zitová, B., Pedone, M.: Recognition of images degraded by Gaussian blur. IEEE Trans. Image Process. 25(2), 790–806 (2016)

Flusser, J., Suk, T.: Degraded image analysis: an invariant approach. IEEE Trans. Pattern Anal. Mach. Intell. 20(6), 590–603 (1998)

Flusser, J., Suk, T., Boldyš, J., Zitová, B.: Projection operators and moment invariants to image blurring. IEEE Trans. Pattern Anal. Mach. Intell. 37(4), 786–802 (2015)

Flusser, J., Suk, T., Saic, S.: Recognition of blurred images by the method of moments. IEEE Trans. Image Process. 5(3), 533–538 (1996)

Georghiades, A., Belhumeur, P., Kriegman, D.: From few to many: illumination cone models for face recognition under variable lighting and pose. IEEE Trans. Pattern Anal. Mach. Intell. 23(6), 643–660 (2001)

Gopalan, R., Turaga, P., Chellappa, R.: A blur-robust descriptor with applications to face recognition. IEEE Trans. Pattern Anal. Mach. Intell. 34(6), 1220–1226 (2012)

Larson, E.C., Chandler, D.M.: Most apparent distortion: full-reference image quality assessment and the role of strategy. J. Electron. Imaging 19(1), 011006 (2010)

Liu, J., Zhang, T.: Recognition of the blurred image by complex moment invariants. Pattern Recognit. Lett. 26(8), 1128–1138 (2005)

Liu, X., Pedersen, M., Hardeberg, J.Y.: CID:IQ – a new image quality database. In: Elmoataz, A., Lezoray, O., Nouboud, F., Mammass, D. (eds.) ICISP 2014. LNCS, vol. 8509, pp. 193–202. Springer, Cham (2014). https://doi.org/10.1007/978-3-319-07998-1_22

Pedone, M., Flusser, J., Heikkilä, J.: Blur invariant translational image registration for \(N\)-fold symmetric blurs. IEEE Trans. Image Process. 22(9), 3676–3689 (2013)

Xue, F., Blu, T.: A novel SURE-based criterion for parametric PSF estimation. IEEE Trans. Image Process. 24(2), 595–607 (2015)

Zhang, W., Cham, W.K.: Single-image refocusing and defocusing. IEEE Trans. Image Process. 21(2), 873–882 (2012)

Zhang, Z., Klassen, E., Srivastava, A.: Gaussian blurring-invariant comparison of signals and images. IEEE Trans. Image Process. 22(8), 3145–3157 (2013)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2019 Springer Nature Switzerland AG

About this paper

Cite this paper

Kostková, J., Flusser, J., Lébl, M., Pedone, M. (2019). Image Invariants to Anisotropic Gaussian Blur. In: Felsberg, M., Forssén, PE., Sintorn, IM., Unger, J. (eds) Image Analysis. SCIA 2019. Lecture Notes in Computer Science(), vol 11482. Springer, Cham. https://doi.org/10.1007/978-3-030-20205-7_12

Download citation

DOI: https://doi.org/10.1007/978-3-030-20205-7_12

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-030-20204-0

Online ISBN: 978-3-030-20205-7

eBook Packages: Computer ScienceComputer Science (R0)