Abstract

I briefly survey several fascinating topics in networks and nonlinearity. I highlight a few methods and ideas, including several of personal interest, that I anticipate to be especially important during the next several years. These topics include temporal networks (in which a network’s entities and/or their interactions change in time), stochastic and deterministic dynamical processes on networks, adaptive networks (in which a dynamical process on a network is coupled to dynamics of network structure), and network structure and dynamics that include “higher-order” interactions (which involve three or more entities in a network). I draw examples from a variety of scenarios, including contagion dynamics, opinion models, waves, and coupled oscillators.



6.1 Introduction

Network analysis is one of the most exciting areas of applied and industrial mathematics [1,2,3,4]. It is at the forefront of numerous and diverse applications throughout the sciences, engineering, technology, and the humanities. The study of networks combines tools from many areas of mathematics, including graph theory, linear algebra, probability, statistics, optimization, statistical mechanics, scientific computation, and nonlinear dynamics.

In this chapter, I give a short overview of popular and state-of-the-art topics in network science. My discussions of these topics, which I draw preferentially from ones that relate to nonlinear and complex systems, will be terse, but I will cite many review articles and highlight specific research papers for those who seek more details. This chapter is not a review or even a survey; instead, I give my perspective on the short-term and medium-term future of network analysis in applied mathematics for 2020 and beyond.

My presentation proceeds as follows. In Sect. 6.2, I review a few basic concepts from network analysis. In Sect. 6.3, I discuss the dynamics of networks in the form of time-dependent (“temporal”) networks. In Sect. 6.4, I discuss dynamical processes—both stochastic and deterministic—on networks. In Sect. 6.5, I discuss adaptive networks, in which there is coevolution of network structure and a dynamical process on that structure. In Sect. 6.6, I discuss higher-order structures (specifically, hypergraphs and simplicial complexes) that aim to go beyond the standard network paradigm of pairwise connections. I conclude with an outlook in Sect. 6.7.

6.2 Background on Networks

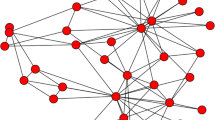

In its broadest form, a network encodes the connectivity patterns and connection strengths in a complex system of interacting entities [1]. The most traditional type of network is a graph \(G = (V,E)\) (see Fig. 6.1a), where V is a set of “nodes” (i.e. “vertices”) that encode entities and \(E \subseteq V \times V\) is a set of “edges” (i.e. “links” or “ties”) that encode the interactions between those entities. However, recent uses of the term “network” have focused increasingly on connectivity patterns that are more general than graphs [5]: a network’s nodes and/or edges (or their associated weights) can change in time [6, 7] (see Sect. 6.3), its nodes and edges can include annotations [8], a network can include multiple types of edges and/or multiple types of nodes [9, 10], a network can have associated dynamical processes [11] (see Sects. 6.3, 6.4, and 6.5), a network can include memory [12], a network’s connections can occur between an arbitrary number of entities [13, 14] (see Sect. 6.6), and so on.

Associated with a graph is an adjacency matrix \(\mathbf{A}\) with entries \(a_{ij}\). In the simplest scenario, edges either exist or they don’t. If edges have directions, \(a_{ij} = 1\) when there is an edge from entity j to entity i and \(a_{ij}=0\) when there is no such edge. When \(a_{ij}=1\), node i is “adjacent” to node j (because we can reach i directly from j), and the associated edge is “incident” from node j and to node i. The edge from j to i is an “out-edge” of j and an “in-edge” of i. The number of out-edges of a node is its “out-degree”, and the number of in-edges of a node is its “in-degree”. For an undirected network, \(a_{ij}=a_{ji}\), and the number of edges that are attached to a node is the node’s “degree”. One can assign weights to edges to represent connections with different strengths (e.g. stronger friendships or larger transportation capacity) by defining a function \(w: E \longrightarrow \mathbb {R}\). In many applications, the weights are nonnegative, although several applications [15] (such as in international relations) incorporate positive, negative, and zero weights. In some applications, nodes can also have self-edges (i.e. nodes can be adjacent to themselves) and multi-edges (i.e. adjacent nodes can have multiple edges between them). The spectral properties of adjacency (and other) matrices give important information about their associated graphs [1, 16]. For undirected networks, it is common to exploit the beneficent property that all eigenvalues of symmetric matrices are real.
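The indexing convention above (row i, column j for an edge from j to i) is easy to get backwards. The following minimal Python sketch builds \(\mathbf{A}\) from a directed edge list under that convention and reads off in-degrees as row sums and out-degrees as column sums. The helper names `adjacency_matrix`, `in_degrees`, `out_degrees`, and `is_symmetric` are illustrative and do not come from any library.

```python
def adjacency_matrix(n, edges):
    """Dense adjacency matrix with a_ij = 1 for an edge from node j to node i.

    edges: iterable of (source, target) pairs with 0-based node labels.
    """
    A = [[0] * n for _ in range(n)]
    for j, i in edges:  # the edge goes from j (source) to i (target)
        A[i][j] = 1     # so it is stored in row i, column j
    return A


def in_degrees(A):
    # In-edges of node i are the nonzero entries of row i.
    return [sum(row) for row in A]


def out_degrees(A):
    # Out-edges of node j are the nonzero entries of column j.
    n = len(A)
    return [sum(A[i][j] for i in range(n)) for j in range(n)]


def is_symmetric(A):
    # For an undirected network, a_ij = a_ji for all i and j.
    n = len(A)
    return all(A[i][j] == A[j][i] for i in range(n) for j in range(n))


A = adjacency_matrix(3, [(0, 1), (0, 2), (2, 1)])  # edges 0->1, 0->2, 2->1
print(A)                # [[0, 0, 0], [1, 0, 1], [1, 0, 0]]
print(in_degrees(A))    # [0, 2, 1]
print(out_degrees(A))   # [2, 0, 1]
print(is_symmetric(A))  # False
```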

Fig. 6.1 Several types of network structures: (a) a graph, (b) a temporal network, (c) a multilayer network, and (d) a simplicial complex. (I drew panels (a) and (c) using Tikz-network, which is by Jürgen Hackl and is available at https://github.com/hackl/tikz-network. Panel (b) is inspired by Fig. 1 of [6]. Panel (d), which is in the public domain, was drawn by Wikipedia user Cflm001 and is available at https://en.wikipedia.org/wiki/Simplicial_complex.)

6.3 Time-Dependent Networks

Traditional studies of networks consider time-independent structures, but most networks evolve in time. For example, social networks of people and animals change based on their interactions, roads are occasionally closed for repairs and new roads are built, and airline routes change with the seasons and over the years. To study such time-dependent structures, one can analyze “temporal networks”. See [6, 7] for reviews and [17, 18] for edited collections.

The key idea of a temporal network is that networks change in time, but there are many ways to model such changes, and the time scales of interactions and other changes play a crucial role in the modeling process. There are also other important modeling considerations. To illustrate potential complications, suppose that an edge in a temporal network represents close physical proximity between two people in a short time window (e.g. with a duration of two minutes). It is relevant to consider whether there is an underlying social network (e.g. the friendship network of mathematics Ph.D. students at UCLA) or if the people in the network do not in general have any other relationships with each other (e.g. two people who happen to be visiting a particular museum on the same day). In both scenarios, edges that represent close physical proximity still appear and disappear over time, but indirect connections (i.e. between people who are on the same connected component, but without an edge between them) in a time window may play different roles in the spread of information. Moreover, network structure itself is often influenced by a spreading process or other dynamics, as perhaps one arranges a meeting to discuss a topic (e.g. to give me comments on a draft of this chapter). See my discussion of adaptive networks in Sect. 6.5.

6.3.1 Discrete Time

For convenience, most work on temporal networks employs discrete time (see Fig. 6.1b). Discrete time can arise from the natural discreteness of a setting, discretization of continuous activity over different time windows, data measurement that occurs at discrete times, and so on.

6.3.1.1 Multilayer Representation of Temporal Networks

One way to represent a discrete-time (or discretized-time) temporal network is to use the formalism of “multilayer networks” [9, 10]. One can also use multilayer networks to study networks with multiple types of relations, networks with multiple subsystems, and other complicated networked structures.

A multilayer network \(M=(V_M,E_M,V,\mathtt {L})\) (see Fig. 6.1c) has a set V of nodes—these are sometimes called “physical nodes”, and each of them corresponds to an entity, such as a person—that have instantiations as “state nodes” (i.e. node-layer tuples, which are elements of the set \(V_M\)) on layers in \(\mathtt {L}\). One layer in the set \(\mathtt {L}\) is a combination, through the Cartesian product \(L_1 \times \dots \times L_d\), of elementary layers. The number d indicates the number of types of layering; these are called “aspects”. A temporal network with one type of relationship has one type of layering, a time-independent network with multiple types of social relationships also has one type of layering, a multirelational network that changes in time has two types of layering, and so on. The set of state nodes in M is \(V_M \subseteq V \times L_1 \times \dots \times L_d\), and the set of edges is \(E_M \subseteq V_M \times V_M\). The edge \(((i,\alpha ),(j,\beta )) \in E_M\) indicates that there is an edge from node j on layer \(\beta \) to node i on layer \(\alpha \) (and vice versa, if M is undirected). For example, in Fig. 6.1c, there is a directed intralayer edge from (A,1) to (B,1) and an undirected interlayer edge between (A,1) and (A,2). The multilayer network in Fig. 6.1c has three layers, \(|V| = 5\) physical nodes, \(d = 1\) aspect, \(|V_M| = 13\) state nodes, and \(|E_M| = 20\) edges. To consider weighted edges, one proceeds as in ordinary graphs by defining a function \(w: E_M \longrightarrow \mathbb {R}\). As in ordinary graphs, one can also incorporate self-edges and multi-edges.

Multilayer networks can include both intralayer edges (which have the same meaning as in graphs) and interlayer edges. The multilayer network in Fig. 6.1c has 4 directed intralayer edges, 10 undirected intralayer edges, and 6 undirected interlayer edges. In most studies that have employed multilayer representations of temporal networks, researchers have included interlayer edges only between state nodes in contiguous layers and only between state nodes that are associated with the same entity (see Fig. 6.1c). However, this restriction is not always desirable (see [19] for an example), and one can envision interlayer couplings that incorporate ideas like time horizons and interlayer edge weights that decay over time. For convenience, many researchers have used undirected interlayer edges in multilayer analyses of temporal networks, but it is often desirable for such edges to be directed to reflect the arrow of time [20]. The sequence of network layers, which constitute time layers, can represent a discrete-time temporal network at different time instances or a continuous-time network in which one bins (i.e. aggregates) the network’s edges to form a sequence of time windows with interactions in each window.

Each d-aspect multilayer network with the same number of nodes in each layer has an associated adjacency tensor \(\mathcal {A}\) of order \(2(d+1)\). For unweighted multilayer networks, each edge in \(E_M\) is associated with a 1 entry of \(\mathcal {A}\), and the other entries (the “missing” edges) are 0. If a multilayer network does not have the same number of nodes in each layer, one can add empty nodes so that it does, but the edges that are attached to such nodes are “forbidden”. There has been some research on tensorial properties of \(\mathcal {A}\) [21] (and it is worthwhile to undertake further studies of them), but the most common approach for computations is to flatten \(\mathcal {A}\) into a “supra-adjacency matrix” \(\mathbf{A}_M\) [9, 10], which is the adjacency matrix of the graph \(G_M\) that is associated with M. The entries of the diagonal blocks of \(\mathbf{A}_M\) correspond to intralayer edges, and the entries of its off-diagonal blocks correspond to interlayer edges.
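Flattening a temporal network into a supra-adjacency matrix takes only a few lines. The Python sketch below is minimal and rests on several assumptions: every time layer has the same N entities, interlayer edges join only the state nodes of the same entity in contiguous layers, and a single hypothetical coupling weight `omega` applies to all of them. The hypothetical `directed_interlayer` flag points those edges forward in time. State node (i, t) gets row and column index t·N + i, so the diagonal blocks hold the intralayer edges and the off-diagonal blocks hold the interlayer edges.

```python
def supra_adjacency(layers, omega=1.0, directed_interlayer=False):
    """Flatten a discrete-time temporal network into a supra-adjacency matrix.

    layers: list of T adjacency matrices (each N x N, nested lists) with
            a_ij = 1 for an edge from j to i.
    omega:  weight of each interlayer edge between copies of one entity in
            consecutive time layers (a hypothetical uniform coupling).
    """
    T, N = len(layers), len(layers[0])
    size = N * T
    M = [[0.0] * size for _ in range(size)]
    for t, A in enumerate(layers):
        off = t * N  # diagonal block t holds the intralayer edges of layer t
        for i in range(N):
            for j in range(N):
                M[off + i][off + j] = float(A[i][j])
    for t in range(T - 1):  # couple contiguous layers only
        for i in range(N):
            src, dst = t * N + i, (t + 1) * N + i
            M[dst][src] = omega      # edge from (i, t) to (i, t + 1)
            if not directed_interlayer:
                M[src][dst] = omega  # undirected: also from (i, t + 1) to (i, t)
    return M


layers = [
    [[0, 1], [1, 0]],  # time layer 1: entities 0 and 1 interact
    [[0, 0], [0, 0]],  # time layer 2: no interactions
]
M = supra_adjacency(layers, omega=0.5)
for row in M:
    print(row)
```

Setting `directed_interlayer=True` keeps only the forward-in-time interlayer entries, which is one way to encode the arrow of time mentioned above.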

6.3.1.2 Centrality, Clustering, and Large-Scale Network Structures

Following a long line of research in sociology [22], two important ingredients in the study of networks are examining (1) the importances (“centralities”) of nodes, edges, and other small network structures and the relationship of measures of importance to dynamical processes on networks and (2) the large-scale organization of networks [1, 23].

Studying central nodes in networks is useful for numerous applications, such as ranking Web pages, football teams, or physicists [24]. It can also help reveal the roles of nodes in networks, such as nodes that experience high traffic or help bridge different parts of a network [1, 23]. Mesoscale features can impact network function and dynamics in important ways. Small subgraphs called “motifs” may appear frequently in some networks [25], perhaps indicating fundamental structures such as feedback loops and other building blocks of global behavior [26]. Various types of larger-scale network structures, such as dense “communities” of nodes [27, 28] and core–periphery structures [29, 30], are also sometimes related to dynamical modules (e.g. a set of synchronized neurons) or functional modules (e.g. a set of proteins that are important for a certain regulatory process) [31]. A common way to study large-scale structures (Footnote 1) is inference using statistical models of random networks, such as stochastic block models [33]. Much recent research has generalized the study of large-scale network structure to temporal and multilayer networks [9, 18, 34].

Various types of centrality—including betweenness centrality [35, 36], Bonacich and Katz centrality [37, 38], communicability [39], PageRank [40, 41], and eigenvector centrality [42, 43]—have been generalized to temporal networks using a variety of approaches. Such generalizations make it possible to examine how node importances change over time as network structure evolves.

In recent work, my collaborators and I used multilayer representations of temporal networks to generalize eigenvector-based centralities to temporal networks [20, 44] (Footnote 2). One computes the eigenvector-based centralities of nodes for a time-independent network as the entries of the “dominant” eigenvector, which is associated with the largest positive eigenvalue (by the Perron–Frobenius theorem, the eigenvalue with the largest magnitude is guaranteed to be positive in these situations) of a centrality matrix \({C}(\mathbf {A})\). Examples include eigenvector centrality (by using \({C}(\mathbf {A}) = \mathbf {A}\)) [46], hub and authority scores (Footnote 3; by using \({C}(\mathbf {A}) = \mathbf {A}^T\mathbf {A}\) for hubs and \(\mathbf {A}\mathbf {A}^T\) for authorities, with \(a_{ij}\) encoding an edge from j to i as in Sect. 6.2) [47], and PageRank [24].
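Computing such a dominant eigenvector is a small power-iteration exercise. The following is a minimal Python sketch, assuming that the centrality matrix is nonnegative and irreducible (for example, the adjacency matrix of a connected undirected graph), so that the dominant eigenvector is unique up to scaling and has positive entries. The function name `dominant_eigenvector` is hypothetical. Iterating with \({C} + \mathbf{I}\) instead of \({C}\) is a design choice of this sketch: the shifted matrix has the same eigenvectors, and the shift prevents the oscillation that plain power iteration can show for bipartite graphs such as the path in the example.

```python
def dominant_eigenvector(C, tol=1e-12, max_iter=100_000):
    """Power iteration for the dominant (Perron) eigenvector of a nonnegative matrix C.

    Assumes C is irreducible. Iterates with C + I (same eigenvectors as C) and
    normalizes the entries to sum to 1.
    """
    n = len(C)
    v = [1.0 / n] * n
    for _ in range(max_iter):
        w = [sum(C[i][j] * v[j] for j in range(n)) + v[i] for i in range(n)]
        total = sum(w)  # all entries are positive, so this is a valid normalization
        w = [x / total for x in w]
        if max(abs(a - b) for a, b in zip(w, v)) < tol:
            return w
        v = w
    raise RuntimeError("power iteration did not converge")


# Eigenvector centrality, C(A) = A, on the undirected path 0 - 1 - 2.
A = [[0, 1, 0],
     [1, 0, 1],
     [0, 1, 0]]
print(dominant_eigenvector(A))  # the middle node has the largest score
```

For this path, the dominant eigenvalue is \(\sqrt{2}\) and the scores are proportional to \((1, \sqrt{2}, 1)\).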

Given a discrete-time temporal network in the form of a sequence of adjacency matrices \(\mathbf{A}^{(t)} \in \mathbb {R}^{N\times N}\) for \(t\in \{1,\dots ,T\}\), where \(a_{ij}^{(t)}\) denotes a directed edge from entity j to entity i in time layer t, we construct a “supracentrality matrix” \(\mathbb {C}(\omega )\), which couples the centrality matrices \({C}(\mathbf{A}^{(t)})\) of the individual time layers. We then compute the dominant eigenvector of \(\mathbb {C}(\omega )\), where \(\omega \) is an interlayer coupling strength (Footnote 4). In [20, 44], a key example was the ranking of doctoral programs in the mathematical sciences (using data from the Mathematics Genealogy Project [48]), where an edge from one institution to another arises when someone with a Ph.D. from the first institution supervises a Ph.D. student at the second institution. By calculating time-dependent centralities, one can study how the rankings of mathematical-sciences doctoral programs change over time and the dependence of such rankings on the value of \(\omega \). Larger values of \(\omega \) impose more ranking consistency across time, so centrality trajectories over time are less volatile for larger \(\omega \) [20, 44].
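The effect of \(\omega\) on volatility can be illustrated with a toy computation. This is a minimal Python sketch under stated assumptions, not necessarily the exact construction of [20, 44]: each diagonal block is \({C}(\mathbf{A}^{(t)}) = \mathbf{A}^{(t)}\) (eigenvector centrality), and the copies of each entity in consecutive layers are coupled by undirected interlayer edges of weight \(\omega\). The function names and the two toy layers (a star centered on node 0, followed by a star centered on node 2) are hypothetical.

```python
def supracentrality_matrix(layers, omega):
    """Diagonal block t is C(A^(t)) = A^(t); the copies of each entity in
    consecutive layers are coupled with weight omega (undirected coupling).
    State node (i, t) has index t * N + i."""
    T, N = len(layers), len(layers[0])
    S = [[0.0] * (N * T) for _ in range(N * T)]
    for t, A in enumerate(layers):
        for i in range(N):
            for j in range(N):
                S[t * N + i][t * N + j] = float(A[i][j])
    for t in range(T - 1):
        for i in range(N):
            a, b = t * N + i, (t + 1) * N + i
            S[a][b] = S[b][a] = omega
    return S


def dominant_eigenvector(C, tol=1e-12, max_iter=100_000):
    # Power iteration with the shift C + I (same eigenvectors, no oscillation);
    # assumes C is nonnegative and irreducible.
    n = len(C)
    v = [1.0 / n] * n
    for _ in range(max_iter):
        w = [sum(C[i][j] * v[j] for j in range(n)) + v[i] for i in range(n)]
        total = sum(w)
        w = [x / total for x in w]
        if max(abs(a - b) for a, b in zip(w, v)) < tol:
            return w
        v = w
    raise RuntimeError("power iteration did not converge")


def centrality_trajectories(layers, omega):
    """c[t][i] is the centrality of entity i in time layer t."""
    T, N = len(layers), len(layers[0])
    v = dominant_eigenvector(supracentrality_matrix(layers, omega))
    return [v[t * N:(t + 1) * N] for t in range(T)]


star_at_0 = [[0, 1, 1], [1, 0, 0], [1, 0, 0]]  # layer 1: node 0 is the hub
star_at_2 = [[0, 0, 1], [0, 0, 1], [1, 1, 0]]  # layer 2: node 2 is the hub
for omega in (0.1, 10.0):
    c = centrality_trajectories([star_at_0, star_at_2], omega)
    print(omega, abs(c[0][0] - c[1][0]))  # change in node 0's score between layers
```

In this toy example, node 0's score changes much less between the two layers when \(\omega = 10\) than when \(\omega = 0.1\), in line with the smoothing effect of strong interlayer coupling.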

Multilayer representations of temporal networks have been very insightful in the detection of communities and how they split, merge, and otherwise evolve over time. Numerous methods for community detection—including inference via stochastic block models [49], maximization of objective functions (especially “modularity”) [50], and methods based on random walks and bottlenecks to their traversal of a network [51, 52]—have been generalized from graphs to multilayer networks. They have yielded insights in a diverse variety of applications, including brain networks [53], granular materials [54], political voting networks [50, 55], the spread of infectious diseases [56], and ecology and animal behavior [57, 58]. To assist with such applications, there are efforts to develop and analyze multilayer random-network models that incorporate rich and flexible structures [59], such as diverse types of interlayer correlations.

6.3.1.3 Activity-Driven Models

Activity-driven (AD) models of temporal networks [60] are a popular family of generative models that encode instantaneous time-dependent descriptions of network dynamics through a function called an “activity potential”, which gives a mechanism to generate connections and characterizes the interactions between entities in a network. An activity potential encapsulates all of the information about the temporal network dynamics of an AD model, making it tractable to study dynamical processes (such as ones from Sect. 6.4) on networks that are generated by such a model. It is also common to compare the properties of networks that are generated by AD models to those of empirical temporal networks [18].

In the original AD model of Perra et al. [60], one considers a network with N entities, which we encode by the nodes. We suppose that node i has an activity rate \(a_i = \eta x_i\), which gives the probability per unit time to create new interactions with other nodes. The scaling factor \(\eta \) ensures that the mean number of active nodes per unit time is \(\eta \langle x\rangle N\), where \(\langle x \rangle = \frac{1}{N}\sum _{i = 1}^N x_i\). We define the activity rates such that \(x_i \in [\epsilon ,1]\), where \(\epsilon > 0\), and we assign each \(x_i\) from a probability distribution F(x) that can either take a desired functional form or be constructed from empirical data. The model uses the following generative process:

- At each discrete time step (of length \(\Delta t\)), start with a network \(G_t\) that consists of N isolated nodes.
- With a probability \(a_i\Delta t\) that is independent of other nodes, node i is active and generates m edges, each of which attaches to other nodes uniformly (i.e. with the same probability for each node) and independently at random (without replacement). Nodes that are not active can still receive edges from active nodes.
- At the next time step \(t + \Delta t\), we delete all edges from \(G_t\), so all interactions have a constant duration of \(\Delta t\). We then generate new interactions from scratch. This is convenient, as it allows one to apply techniques from Markov chains.

Because entities in time step t do not have any memory of previous time steps, F(x) encodes the network structure and dynamics.
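The generative process of the original AD model can be sketched in Python as follows. This is a minimal sketch with several labeled assumptions: the function name `activity_driven_step` is hypothetical, the distribution F(x) in the usage example is a made-up uniform distribution on \([\epsilon, 1]\), and edges are stored as an undirected set, so if two active nodes pick each other, the two edges collapse into one. The sketch requires \(a_i \Delta t \le 1\) and \(m \le N - 1\).

```python
import random


def activity_driven_step(activities, m, dt, rng):
    """Edges of one time step of the AD model of Perra et al.

    activities: activity rates a_i = eta * x_i (requires a_i * dt <= 1)
    m:          number of edges that each active node creates (m <= N - 1)
    Starts from N isolated nodes and returns a set of undirected edges (i, j)
    with i < j. Mutual picks collapse into one edge (a simplification).
    """
    n = len(activities)
    edges = set()
    for i, a in enumerate(activities):
        if rng.random() < a * dt:  # node i is active with probability a_i * dt
            others = [j for j in range(n) if j != i]
            for j in rng.sample(others, m):  # m distinct targets, uniformly at random
                edges.add((min(i, j), max(i, j)))
    return edges  # the next step starts again from N isolated nodes


rng = random.Random(42)
N, eta, eps, m, dt = 100, 1.0, 0.01, 2, 1.0
x = [rng.uniform(eps, 1.0) for _ in range(N)]  # hypothetical F(x): uniform on [eps, 1]
a = [eta * xi for xi in x]
snapshots = [activity_driven_step(a, m, dt, rng) for _ in range(50)]
print([len(s) for s in snapshots[:5]])  # number of edges in the first few snapshots
```

Because each snapshot is generated from scratch, the list `snapshots` is a sequence of independent draws given the activities, which is what makes the model convenient to analyze.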

The AD model of Perra et al. [60] is overly simplistic, but it is amenable to analysis and has provided a foundation for many more general AD models, including ones that incorporate memory [61]. In Sect. 6.6.4, I discuss a generalization of AD models to simplicial complexes [62] that allows one to study instantaneous interactions that involve three or more entities in a network.

6.3.2 Continuous Time

Many networked systems evolve continuously in time, but most investigations of time-dependent networks rely on discrete or discretized time. It is important to undertake more analysis of continuous-time temporal networks.

Researchers have examined continuous-time networks in a variety of scenarios. Examples include a compartmental model of biological contagions [63], a generalization of Katz centrality to continuous time [38], generalizations of AD models (see Sect. 6.3.1.3) to continuous time [64, 65], and rankings in competitive sports [66].

In a recent paper [67], my collaborators and I formulated a notion of “tie-decay networks” for studying networks that evolve in continuous time. We distinguished between interactions, which we modeled as discrete contacts, and ties, which encode relationships and their strengths as a function of time. For example, perhaps the strength of a tie decays exponentially after the most recent interaction. More realistically, perhaps the decay rate depends on the weight of a tie, with strong ties decaying more slowly than weak ones. One can also use point-process models like Hawkes processes [68] to examine similar ideas from a node-centric perspective.

Suppose that there are N interacting entities, and let \(\mathbf{B}(t)\) be the \(N \times N\) time-dependent, real, non-negative matrix with entries \(b_{ij}(t)\) that encode the tie strength from entity j to entity i at time t for each j and i. In [67], we made the following simplifying assumptions:

1. As in [69], ties decay exponentially when there are no interactions: \(\frac{{\mathrm d} b_{ij}}{{\mathrm d}t}=-\alpha b_{ij}\), where \(\alpha \ge 0\) is the decay rate.
2. If two entities interact at time \(t=\tau \), the strength of the tie between them grows instantaneously by 1.

See [70] for a comparison of various choices, including those in [67, 69], for tie evolution over time.

In practice (e.g. in data-driven applications), one obtains \(\mathbf{B}(t)\) by discretizing time, so let’s suppose that there is at most one interaction in each time step of length \(\Delta t\). This occurs, for example, in a Poisson process. Such time discretization is common in the simulation of stochastic dynamical systems, such as in Gillespie algorithms [11, 71, 72]. Consider an \(N \times N\) matrix \(\mathbf{A}(t)\) in which \(a_{ij}(t)=1\) if node j interacts with node i at time t and \(a_{ij}(t)=0\) otherwise. For a directed network, \(\mathbf{A}(t)\) has exactly one nonzero entry in each time step when there is an interaction and no nonzero entries when there isn’t one. For an undirected network, because of the symmetric nature of interactions, there are exactly two nonzero entries in time steps that include an interaction. We write

\[\mathbf{B}(t+\Delta t) = e^{-\alpha \Delta t}\,\mathbf{B}(t) + \mathbf{A}(t+\Delta t)\,.\]

Equivalently, if interactions between entities occur at times \(\tau ^{\left( \ell \right) }\) such that \(0\le \tau ^{\left( 0\right) }< \tau ^{\left( 1\right) }< \cdots < \tau ^{\left( T\right) }\), then at time \(t\ge \tau ^{\left( T\right) }\), we have

\[\mathbf{B}(t) = \sum_{\ell=0}^{T} e^{-\alpha\,(t-\tau^{(\ell)})}\,\mathbf{A}(\tau^{(\ell)})\,.\]

In [67], my coauthors and I generalized PageRank [24, 73] to tie-decay networks. One nice feature of our tie-decay PageRank is that it is applicable not just to data sets, but also to data streams, as one updates the PageRank values as new data arrives. By contrast, one problematic feature of many methods that rely on multilayer representations of temporal networks is that one needs to recompute everything for an entire data set upon acquiring new data, rather than updating prior results in a computationally efficient way.
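The discretized tie-decay dynamics lend themselves to a streaming implementation: one keeps only the current tie-strength matrix, multiplies it by \(e^{-\alpha\Delta t}\) in each time step (assumption 1), and adds 1 for each interaction (assumption 2). The minimal Python sketch below checks this step-by-step update against summing the exponentially decayed contributions of all past interactions. The function names, the interaction schedule, and the parameter values are hypothetical, and the sketch assumes that all ties are 0 before the first time step and that there is at most one directed interaction per step.

```python
import math


def decay_step(B, interaction, alpha, dt):
    """Advance tie strengths by one step: B <- exp(-alpha * dt) * B + A.

    interaction is None (no interaction in this step) or a pair (i, j) meaning
    that entity j interacts with entity i, so A has a single 1 at row i, column j.
    """
    decay = math.exp(-alpha * dt)
    B = [[decay * b for b in row] for row in B]
    if interaction is not None:
        i, j = interaction
        B[i][j] += 1.0
    return B


def tie_strengths(N, events, alpha, t):
    """Sum of exp(-alpha * (t - tau)) over past interactions (tau, i, j) with tau <= t."""
    B = [[0.0] * N for _ in range(N)]
    for tau, i, j in events:
        if tau <= t:
            B[i][j] += math.exp(-alpha * (t - tau))
    return B


N, alpha, dt = 3, 0.5, 1.0
schedule = {1: (0, 1), 3: (2, 0), 4: (0, 1)}  # hypothetical: step k -> interaction (i, j)
B = [[0.0] * N for _ in range(N)]  # assume no ties before the first step
for k in range(1, 7):  # process the data stream one step at a time
    B = decay_step(B, schedule.get(k), alpha, dt)
B_direct = tie_strengths(N, [(k * dt, i, j) for k, (i, j) in schedule.items()], alpha, 6 * dt)
print(B[0][1], B_direct[0][1])  # both approximately exp(-2.5) + exp(-1.0)
```

The streaming loop never revisits old interactions, which is why an arriving interaction costs one matrix update rather than a recomputation over the whole data set.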

6.4 Dynamical Processes on Networks

A dynamical process can be discrete, continuous, or some mixture of the two; it can also be either deterministic or stochastic. It can take the form of one or several coupled ordinary differential equations (ODEs), partial differential equations (PDEs), maps, stochastic differential equations, and so on.

A dynamical process requires a rule for updating the states of its dependent variables with respect to one or more independent variables (e.g. time), and one also has initial conditions and/or boundary conditions. To formalize a dynamical process on a network, one needs a rule for how to update the states of the network’s nodes and/or edges.

The nodes (of one or more types) of a network are connected to each other in nontrivial ways by one or more types of edges. This leads to a natural question: How does nontrivial connectivity between nodes affect dynamical processes on a network [11]? When studying a dynamical process on a network, the network structure encodes which entities (i.e. nodes) of a system interact with each other and which do not. If desired, one can ignore the network structure entirely and just write out a dynamical system. However, keeping track of network structure is often a very useful and insightful form of bookkeeping, which one can exploit to systematically explore how particular structures affect the dynamics of particular dynamical processes.

Prominent examples of dynamical processes on networks include coupled oscillators [74, 75], games [76], and the spread of diseases [77, 78] and opinions [79, 80]. There is also a large body of research on the control of dynamical processes on networks [81, 82].

Most studies of dynamics on networks have focused on extending familiar models—such as compartmental models of biological contagions [77] or Kuramoto phase oscillators [75]—by coupling entities using various types of network structures, but it is also important to formulate new dynamical processes from scratch, rather than only studying more complicated generalizations of our favorite models. When trying to illuminate the effects of network structure on a dynamical process, it is often insightful to provide a baseline comparison by examining the process on a convenient ensemble of random networks [11].

6.4.1 An Illustrative Example: A Threshold Model of a Social Contagion

A simple, but illustrative, dynamical process on a network is the Watts threshold model (WTM) of a social contagion [11, 79]. It provides a framework for elucidating how network structure can affect state changes, such as the adoption of a product or a behavior, and for exploring which scenarios lead to “virality” (in the form of state changes of a large number of nodes in a network).

The original WTM [83], a binary-state threshold model that resembles bootstrap percolation [84], has a deterministic update rule, so stochasticity can come only from other sources (see Sect. 6.4.2). In a binary-state model, each node is in one of two states; see [85] for a tabulation of well-known binary-state dynamics on networks. The WTM is a modification of Mark Granovetter’s threshold model for social influence in a fully-mixed population [86]. See [87, 88] for early work on threshold models on networks that developed independently from investigations of the WTM. Threshold contagion models have been developed for many scenarios, including contagions with multiple stages [89], models with adoption latency [90], models with synergistic interactions [91], and situations with hipsters (who may prefer to adopt a minority state) [92].

In a binary-state threshold model such as the WTM, each node i has a threshold \(R_i\) that one draws from some distribution. Suppose that \(R_i\) is constant in time, although one can generalize it to be time-dependent. At any time, each node can be in one of two states: 0 (which represents being inactive, not adopted, not infected, and so on) or 1 (active, adopted, infected, and so on). A binary-state model is a drastic oversimplification of reality, but the WTM is able to capture two crucial features of social systems [93]: interdependence (an entity’s behavior depends on the behavior of other entities) and heterogeneity (because nodes with different threshold values behave differently). One can assign a seed number or seed fraction of nodes to the active state, and one can choose the initially active nodes either deterministically or randomly.

The states of the nodes change in time according to an update rule, which can either be synchronous (such that it is a map) or asynchronous (e.g. as a discretization of continuous time) [11]. In the WTM, the update rule is deterministic, so the choice of synchronous versus asynchronous updating affects only how long it takes to reach a steady state; it does not affect the steady state itself. With a stochastic update rule, the synchronous and asynchronous versions of ostensibly the “same” model can behave in drastically different ways [94]. In the WTM on an undirected network, to update the state of a node, one compares its fraction \(s_i/k_i\) of active neighbors (where \(s_i\) is the number of active neighbors and \(k_i\) is the degree of node i) to the node’s threshold \(R_i\). An inactive node i becomes active (i.e. it switches from state 0 to state 1) if \(s_i/k_i \ge R_i\); otherwise, it stays inactive. The states of the nodes in the WTM are monotonic, in the sense that a node that becomes active remains active forever. This feature is convenient for deriving accurate approximations for the global behavior of the WTM using branching-process approximations [11, 85] and when analyzing the behavior of the WTM using tools such as persistent homology [95].
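The WTM update rule translates directly into a short simulation. The following is a minimal Python sketch with synchronous updating on an undirected network, where each node's neighbors are given as a list. The function name `watts_threshold_model` and the toy path network are illustrative. One assumption goes beyond the text: nodes with no neighbors stay inactive, because \(s_i/k_i\) is undefined for them.

```python
def watts_threshold_model(neighbors, thresholds, seeds):
    """Synchronous updating of the WTM on an undirected network.

    neighbors:  neighbors[i] lists the neighbors of node i (so k_i = len(neighbors[i]))
    thresholds: thresholds[i] is R_i
    seeds:      initially active nodes
    Returns the set of active nodes once no further node changes state.
    Isolated nodes stay inactive (an assumption; s_i / k_i is undefined for them).
    """
    active = set(seeds)
    while True:
        # Every inactive node compares s_i / k_i with R_i using the same old state.
        newly_active = {
            i
            for i, nbrs in enumerate(neighbors)
            if i not in active
            and nbrs
            and sum(j in active for j in nbrs) / len(nbrs) >= thresholds[i]
        }
        if not newly_active:  # steady state reached
            return active
        active |= newly_active  # monotone: active nodes never deactivate


# Path 0 - 1 - 2 - 3 - 4 with node 0 as the only seed.
path = [[1], [0, 2], [1, 3], [2, 4], [3]]
print(sorted(watts_threshold_model(path, [0.5] * 5, {0})))  # [0, 1, 2, 3, 4]
print(sorted(watts_threshold_model(path, [0.6] * 5, {0})))  # [0]
```

With thresholds of 0.5, the activation cascades along the whole path; with thresholds of 0.6, node 1 has only half of its neighbors active and the cascade never starts. Because the rule is deterministic and monotone, updating the nodes one at a time instead would reach the same final set after a different number of sweeps.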

6.4.2 Stochastic Processes

A dynamical process on a network can take the form of a stochastic process [1, 11]. There are several possible sources of stochasticity: (1) choice of initial condition, (2) choice of which nodes or edges to update (when considering asynchronous updating), (3) the rule for updating nodes or edges, (4) the values of parameters in an update rule, and (5) the selection of particular networks from a random-graph ensemble (i.e. a probability distribution on graphs). Some or all of these sources of randomness can be present when studying dynamical processes on networks. It is desirable to compare the sample mean of a stochastic process on a network to an ensemble average (i.e. to an expectation over a suitable probability distribution).

Prominent examples of stochastic processes on networks include percolation [96], random walks [97], compartmental models of biological contagions [77, 78], bounded-confidence models of the dynamics of continuous-valued opinions [98], and other opinion and voter models [11, 79, 80, 99].

6.4.2.1 Example: A Compartmental Model of a Biological Contagion

Compartmental models of biological contagions are a topic of intense interest in network science [1, 11, 77, 78]. A compartment represents a possible state of a node; examples include susceptible, infected, zombified, vaccinated, and recovered. An update rule determines how a node changes its state from one compartment to another. One can formulate models with as many compartments as desired [100], but investigations of how network structure affects dynamics typically have employed examples with only two or three compartments [77, 78]. The formulation and analysis of compartmental models on networks have played a crucial role—and have influenced both human behavior and public policy—during the current coronavirus disease 2019 (COVID-19) pandemic. For one nice example of such important work, see [101].

Researchers have studied various extensions of compartmental models, including contagions on multilayer and temporal networks [9, 34, 102], metapopulation models on networks for simultaneously studying network connectivity and subpopulations with different characteristics [103], non-Markovian contagions on networks for exploring memory effects [104], and explicit incorporation of individuals with essential societal roles (e.g. health-care workers) [105]. As I discuss in Sect. 6.4.4, one can also examine coupling between biological contagions and the spread of information (e.g. “awareness”) [106, 107]. One can also use compartmental models to study phenomena, such as the dissemination of ideas on social media [108] and forecasting of political elections [109], that are much different from the spread of diseases.

One of the most prominent examples of a compartmental model is a susceptible–infected–recovered (SIR) model, which has three compartments. Susceptible nodes are healthy and can become infected, and infected nodes can eventually recover. The steady state of the basic SIR model on a network is related to a type of bond percolation [110,111,112,113]. There are many variants of SIR models and other compartmental models on networks [77]. See [114] for an illustration using susceptible–infected–susceptible (SIS) models.

Suppose that an infection is transmitted from an infected node to a susceptible neighbor at a rate of \(\lambda \). The probability of a transmission event on one edge between an infected node and a susceptible node in an infinitesimal time interval \(\mathrm {d}t\) is \(\lambda \, \mathrm {d}t\). Assuming that all infection events are independent, the probability that a susceptible node with s infected neighbors becomes infected (i.e. that it transitions from the S compartment to the I compartment, which represents both being infected and being infective) in a time step of duration \(\mathrm {d}t\) is

\[ 1 - (1 - \lambda \, \mathrm {d}t)^{s}\,. \]

If an infected node recovers at a constant rate of \(\mu \), the probability that it switches from state I to state R (the recovered compartment) in an infinitesimal time interval \(\mathrm {d}t\) is \(\mu \,\mathrm {d}t\).
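These two update rules translate directly into a simulation. The sketch below assumes a synchronous discrete-time update with a small step \(\mathrm {d}t\) (so that \(\lambda \, \mathrm {d}t \le 1\) and \(\mu \, \mathrm {d}t \le 1\)); the helper names `infection_probability` and `sir_step` and the ring network are illustrative choices, not part of any particular study. For small \(\mathrm {d}t\), the infection probability \(1 - (1 - \lambda \, \mathrm {d}t)^{s}\) is approximately \(s \lambda \, \mathrm {d}t\).

```python
import random


def infection_probability(s, lam, dt):
    """Probability that a susceptible node with s infected neighbors is infected
    during a step of length dt, assuming independent transmissions along each
    S-I edge, each with probability lam * dt (requires lam * dt <= 1)."""
    return 1.0 - (1.0 - lam * dt) ** s


def sir_step(adj, state, lam, mu, dt, rng):
    """One synchronous update of a discrete-time approximation of the SIR model.

    adj: dict mapping each node to a list of its neighbors (undirected network).
    state: dict mapping each node to 'S', 'I', or 'R'.
    Returns a new state dict; the input dict is left unchanged.
    """
    new_state = dict(state)
    for node, x in state.items():
        if x == 'S':
            s = sum(1 for nbr in adj[node] if state[nbr] == 'I')
            if s > 0 and rng.random() < infection_probability(s, lam, dt):
                new_state[node] = 'I'
        elif x == 'I' and rng.random() < mu * dt:
            new_state[node] = 'R'  # recovery with probability mu * dt
    return new_state


# Usage: a ring of N nodes with a single initially infected node.
N = 20
adj = {i: [(i - 1) % N, (i + 1) % N] for i in range(N)}
state = {i: 'S' for i in range(N)}
state[0] = 'I'
rng = random.Random(1)
for _ in range(2000):
    state = sir_step(adj, state, lam=1.0, mu=0.5, dt=0.01, rng=rng)
counts = {c: sum(1 for x in state.values() if x == c) for c in 'SIR'}
print(counts)
```

Because all nodes are updated from the states at the start of a step, a node that is infected during a step can only transmit the infection in later steps.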

6.4.3 Deterministic Dynamical Systems

When there is no source of stochasticity, a dynamical process on a network is “deterministic”. A deterministic dynamical system can take the form of a system of coupled maps, ODEs, PDEs, or something else. As with stochastic systems, the network structure encodes which entities of a system interact with each other and which do not.

There are numerous interesting deterministic dynamical systems on networks—just incorporate nontrivial connectivity between entities into your favorite deterministic model—although it is worth noting that some stochastic aspects (e.g. choosing parameter values from a probability distribution or sampling choices of initial conditions) can arise in these models.

6.4.3.1 Example: Coupled Oscillators

For concreteness, let’s consider the popular setting of coupled oscillators. Each node in a network is associated with an oscillator, and we want to examine how network structure affects the collective behavior of the coupled oscillators.

It is common to investigate various forms of synchronization (a type of coherent behavior), such that the rhythms of the oscillators adjust to match each other (or to match a subset of the oscillators) because of their interactions [115]. A variety of methods, such as “master stability functions” [116], have been developed to study the local stability of synchronized states and their generalizations [11, 74], such as cluster synchrony [117]. Cluster synchrony, which is related to work on “coupled-cell networks” [26], uses ideas from computational group theory to find synchronized sets of oscillators that are not synchronized with other sets of synchronized oscillators. Many studies have also examined other types of states, such as “chimera states” [118], in which some oscillators behave coherently but others behave incoherently. (Analogous phenomena sometimes occur in mathematics departments.)

A ubiquitous example is coupled Kuramoto oscillators on a network [74, 75, 119], which is perhaps the most common setting for exploring and developing new methods for studying coupled oscillators. (In principle, one can then build on these insights in studies of other oscillatory systems, such as in applications in neuroscience [120].) Coupled Kuramoto oscillators have been used for modeling numerous phenomena, including jetlag [121] and singing in frogs [122]. Indeed, a “Snowbird” (SIAM) conference on applied dynamical systems would not be complete without at least several dozen talks on the Kuramoto model. In the Kuramoto model, each node i has an associated phase \(\theta _i(t) \in [0,2\pi )\). In the case of “diffusive” coupling between the nodes, the dynamics of the ith node is governed by the equation

\[ \frac{\mathrm {d}\theta _i}{\mathrm {d}t} = \omega _i + \sum _{j=1}^{N} b_{ij} a_{ij} f_{ij}(\theta _j - \theta _i)\,, \qquad i \in \{1, \ldots , N\}\,, \tag{6.4} \]

where one typically draws the natural frequency \(\omega _i\) of node i from some distribution \(g(\omega )\), the scalar \(a_{ij}\) is an adjacency-matrix entry of an unweighted network, \(b_{ij}\) is the coupling strength that is experienced by oscillator i from oscillator j (so \(b_{ij}a_{ij}\) is an element of an adjacency matrix \(\mathbf{W}\) of a weighted network), and \(f_{ij}(y) = \sin (y)\) is the coupling function, which depends only on the phase difference between oscillators i and j because of the diffusive nature of the coupling.

Once one knows the natural frequencies \(\omega _i\), the model (6.4) is a deterministic dynamical system, although there have been studies of coupled Kuramoto oscillators with additional stochastic terms [123]. Traditional studies of (6.4) and its generalizations draw the natural frequencies from some distribution (e.g. a Gaussian or a compactly supported distribution), but some studies of so-called “explosive synchronization” (in which there is an abrupt phase transition from incoherent oscillators to synchronized oscillators) have employed deterministic natural frequencies [119, 124]. The properties of the frequency distribution \(g(\omega )\) have a significant effect on the dynamics of (6.4). Important features of \(g(\omega )\) include whether it has compact support or not, whether it is symmetric or asymmetric, and whether it is unimodal or not [75, 125].
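As a minimal numerical illustration of (6.4), the sketch below integrates the model with forward Euler steps. The complete graph (\(a_{ij} = 1\) for \(i \ne j\)), the uniform coupling \(b_{ij} = K/N\), the Gaussian \(g(\omega )\), and the helper names are all assumptions made for illustration. It tracks the standard global order parameter \(r = \lvert N^{-1} \sum _j e^{i\theta _j} \rvert \), which is close to 1 when the oscillators are nearly phase-synchronized and small when they are incoherent.

```python
import numpy as np


def kuramoto_rhs(theta, omega, W):
    """Right-hand side of (6.4) with f_ij = sin and W[i, j] = b_ij * a_ij."""
    diff = theta[np.newaxis, :] - theta[:, np.newaxis]  # diff[i, j] = theta_j - theta_i
    return omega + np.sum(W * np.sin(diff), axis=1)


def order_parameter(theta):
    """Global order parameter r = |mean_j exp(i theta_j)|, which lies in [0, 1]."""
    return np.abs(np.mean(np.exp(1j * theta)))


def simulate(W, omega, theta0, dt=0.01, steps=5000):
    """Integrate (6.4) with forward Euler steps of size dt; returns phases in [0, 2 pi)."""
    theta = theta0.copy()
    for _ in range(steps):
        theta = theta + dt * kuramoto_rhs(theta, omega, W)
    return np.mod(theta, 2 * np.pi)


rng = np.random.default_rng(0)
N = 50
A = np.ones((N, N)) - np.eye(N)      # a_ij: complete graph without self-edges
K = 2.0
B = np.full((N, N), K / N)           # b_ij: uniform coupling strength K / N
W = B * A                            # weighted adjacency matrix with entries b_ij * a_ij
omega = rng.normal(0.0, 0.5, N)      # natural frequencies drawn from a Gaussian g(omega)
theta0 = rng.uniform(0.0, 2 * np.pi, N)
theta = simulate(W, omega, theta0)
print(order_parameter(theta0), order_parameter(theta))
```

With this coupling strength, which is well above the critical value for this frequency distribution in the large-N limit, r typically grows from a small initial value to near 1; decreasing K toward 0 leaves the oscillators incoherent.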

The model (6.4) has been generalized in numerous ways. For example, researchers have considered a large variety of coupling functions \(f_{ij}\) (including ones that are not diffusive) and have incorporated an inertia term \(\ddot{\theta }_i\) to yield a second-order Kuramoto oscillator at each node [75]. The latter generalization is important for studies of coupled oscillators and synchronized dynamics in electric power grids [126]. Another noteworthy direction is the analysis of the Kuramoto model on “graphons” (see, for example, [127]), an important type of structure that arises in a suitable limit of large networks.

6.4.4 Dynamical Processes on Multilayer Networks

An increasingly prominent topic in network analysis is the examination of how multilayer network structures—multiple system components, multiple types of edges, co-occurrence and coupling of multiple dynamical processes, and so on—affect qualitative and quantitative dynamics [9, 34, 102]. For example, perhaps certain types of multilayer structures can induce unexpected instabilities or phase transitions in certain types of dynamical processes?

There are two categories of dynamical processes on multilayer networks: (1) a single process can occur on a multilayer network; or (2) processes on different layers of a multilayer network can interact with each other [102]. An important example of the first category is a random walk, where the relative speeds and probabilities of steps within layers versus steps between layers affect the qualitative nature of the dynamics. This, in turn, affects methods (such as community detection [51, 52]) that are based on random walks, as well as anything else in which diffusion is relevant [128, 129]. Two other examples of the first category are the spread of information on social media (for which there are multiple communication channels, such as Facebook and Twitter) and multimodal transportation systems [130]. For instance, a multilayer network structure can induce congestion even when a system without coupling between layers is decongested in each layer independently [131]. Examples of the second category of dynamical process are interactions between multiple strains of a disease and interactions between the spread of an infectious disease and the spread of information [106, 107, 132]. Many other examples have been studied [34], including coupling between oscillator dynamics on one layer and a biased random walk on another layer (as a model for neuronal oscillations coupled to blood flow) [133].
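To make the first category concrete, here is a sketch of one simple random walk on a multiplex network (a multilayer network whose layers share the same nodes). The single parameter `p_switch` (a hypothetical name) controls the relative probability of interlayer versus intralayer steps; this is only one of several possible conventions for such walks. The code builds the transition matrix and solves for its stationary distribution, whose dependence on `p_switch` illustrates how interlayer coupling changes where a walker spends its time.

```python
import numpy as np


def multiplex_transition_matrix(layers, p_switch):
    """Transition matrix of a simple random walk on a multiplex network.

    layers: list of L adjacency matrices (N x N) on the same set of nodes.
    p_switch: probability of moving to the same node in another layer (chosen
    uniformly among the other layers); otherwise the walker steps to a uniformly
    random neighbor in its current layer. State (layer l, node i) has index l * N + i.
    Assumes L >= 2 and that every node has at least one neighbor in every layer.
    """
    L, N = len(layers), layers[0].shape[0]
    P = np.zeros((L * N, L * N))
    for l, A in enumerate(layers):
        deg = A.sum(axis=1)
        for i in range(N):
            row = l * N + i
            P[row, l * N:(l + 1) * N] = (1 - p_switch) * A[i] / deg[i]  # intralayer step
            for m in range(L):
                if m != l:
                    P[row, m * N + i] = p_switch / (L - 1)               # interlayer step
    return P


def stationary_distribution(P):
    """Solve pi P = pi with sum(pi) = 1 (unique if the chain is irreducible)."""
    n = P.shape[0]
    M = np.vstack([(P - np.eye(n)).T, np.ones(n)])
    b = np.zeros(n + 1)
    b[-1] = 1.0
    pi, *_ = np.linalg.lstsq(M, b, rcond=None)
    return pi


star = np.array([[0, 1, 1, 1],
                 [1, 0, 0, 0],
                 [1, 0, 0, 0],
                 [1, 0, 0, 0]], dtype=float)
cycle = np.array([[0, 1, 0, 1],
                  [1, 0, 1, 0],
                  [0, 1, 0, 1],
                  [1, 0, 1, 0]], dtype=float)
for p_switch in (0.05, 0.5, 0.95):
    P = multiplex_transition_matrix([star, cycle], p_switch)
    pi = stationary_distribution(P)
    # Occupation probability of each physical node, summed over both layers.
    print(p_switch, np.round(pi.reshape(2, 4).sum(axis=0), 3))
```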

Numerous interesting phenomena can occur when dynamical systems, such as spreading processes, are coupled to each other [106]. For example, the spreading of one disease can facilitate infection by another [134], and the spread of awareness about a disease can inhibit the spread of the disease itself (e.g. if people stay home when they are sick) [135]. Interacting spreading processes can also exhibit other fascinating dynamics, such as oscillations that are induced by multilayer network structures in a biological contagion with multiple modes of transmission [136] and novel types of phase transitions [102].

A major simplification in most work thus far on dynamical processes on multilayer networks is a tendency to focus on toy models. For example, a typical study of coupled spreading processes may consider a standard (e.g. SIR) model on each layer, and it may draw the connectivity pattern of each layer from the same standard random-graph model (e.g. an Erdős–Rényi model or a configuration model). However, when studying dynamics on multilayer networks, it is particularly important in future work to incorporate heterogeneity in network structure and/or dynamical processes. For instance, diseases spread offline but information spreads both offline and online, so investigations of coupled information and disease spread ought to consider fundamentally different types of network structures for the two processes.

6.4.5 Metric Graphs and Waves on Networks

Network structures also affect the dynamics of PDEs on networks [137,138,139,140,141]. Interesting examples include a study of a Burgers equation on graphs to investigate how network structure affects the propagation of shocks [138] and investigations of reaction–diffusion equations and Turing patterns on networks [140, 142]. The latter studies exploited the rich theory of Laplacian dynamics on graphs (and concomitant ideas from spectral graph theory) [16, 97] and examined the addition of nonlinear terms to Laplacians on various types of networks (including multilayer ones).

A mathematically oriented thread of research on PDEs on networks has built on ideas from so-called “quantum graphs” [137, 143] to study wave propagation on networks through the analysis of “metric graphs”. Metric graphs differ from the usual “combinatorial graphs”, which in other contexts are usually called simply “graphs”. As in a combinatorial graph, a metric graph has nodes and edges, but now each edge e also has an associated positive length \(l_e \in (0,\infty ]\). For many experimentally relevant scenarios (e.g. in models of circuits of quantum wires [144]), there is a natural embedding into space, but metric graphs that are not embedded in space are also appropriate for some applications.

As the nomenclature suggests, one can equip a metric graph with a natural metric. If a sequence \(\{e_j\}_{j=1}^m\) of edges forms a path, the length of the path is \(\sum _j l_{e_j}\). The distance \(\rho (v_1,v_2)\) between two nodes, \(v_1\) and \(v_2\), is the minimum path length between them. We place coordinates along each edge, so we can compute a distance between points \(x_1\) and \(x_2\) on a metric graph even when those points are not located at nodes. Traditionally, one assumes that the infinite ends (which one can construe as “leads” at infinity, as in scattering theory) of infinite edges have degree 1. It is also traditional to assume that there is always a positive distance between distinct nodes and that there are no finite-length paths with infinitely many edges. See [143] for further discussion.
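The definition of \(\rho \), and of distances between points that lie in the interiors of edges, reduces to a shortest-path computation. The sketch below assumes a metric graph with finitely many edges of finite length (so no infinite leads), represents a point as a pair (edge index, coordinate t measured from the edge's first endpoint), and uses Dijkstra's algorithm; the function names are hypothetical.

```python
import heapq
import math


def node_distances(n_nodes, edges, source):
    """Dijkstra distances rho(source, v) on a metric graph.

    edges: list of (u, v, length) with finite positive lengths (multi-edges allowed).
    """
    adj = [[] for _ in range(n_nodes)]
    for u, v, length in edges:
        adj[u].append((v, length))
        adj[v].append((u, length))
    dist = [math.inf] * n_nodes
    dist[source] = 0.0
    heap = [(0.0, source)]
    while heap:
        d, u = heapq.heappop(heap)
        if d > dist[u]:
            continue  # stale heap entry
        for v, length in adj[u]:
            if d + length < dist[v]:
                dist[v] = d + length
                heapq.heappush(heap, (dist[v], v))
    return dist


def point_distance(n_nodes, edges, p1, p2):
    """Distance between points p = (edge_index, t), with 0 <= t <= edge length."""
    (e1, t1), (e2, t2) = p1, p2
    u1, v1, l1 = edges[e1]
    u2, v2, l2 = edges[e2]
    best = abs(t1 - t2) if e1 == e2 else math.inf  # move directly along a shared edge
    # Otherwise, leave edge e1 through one endpoint and enter edge e2 through one endpoint.
    for end1, off1 in ((u1, t1), (v1, l1 - t1)):
        dist = node_distances(n_nodes, edges, end1)
        for end2, off2 in ((u2, t2), (v2, l2 - t2)):
            best = min(best, off1 + dist[end2] + off2)
    return best


edges = [(0, 1, 1.0), (1, 2, 2.0), (0, 2, 2.5)]  # a triangle with unequal edge lengths
print(node_distances(3, edges, 0))                 # [0.0, 1.0, 2.5]
print(point_distance(3, edges, (0, 0.25), (1, 1.0)))  # 1.75
```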

To study waves on metric graphs, one needs to define operators, such as the negative second derivative or more general Schrödinger operators. This exploits the fact that there are coordinates for all points on the edges—not only at the nodes themselves, as in combinatorial graphs. When studying waves on metric graphs, it is also necessary to impose boundary conditions at the nodes [143].

Many studies of wave propagation on metric graphs have considered generalizations of nonlinear wave equations, such as the cubic nonlinear Schrödinger (NLS) equation [145] and a nonlinear Dirac equation [146]. The overwhelming majority of studies of metric graphs (with both linear and nonlinear waves) have focused on networks with a very small number of nodes, as even small networks yield very interesting dynamics. For example, Marzuola and Pelinovsky [147] analyzed symmetry-breaking and symmetry-preserving bifurcations of standing waves of the cubic NLS equation on a dumbbell graph (with two rings attached to a central line segment and Kirchhoff boundary conditions at the nodes). Kairzhan et al. [148] studied the spectral stability of half-soliton standing waves of the cubic NLS equation on balanced star graphs. Sobirov et al. [149] studied scattering and transmission at nodes of sine–Gordon solitons on networks (e.g. on a star graph and a small tree).

A particularly interesting direction for future work is to study wave dynamics on large metric graphs. This will help extend to linear and nonlinear waves the kinds of investigations, already common for ODEs and maps, of how network structure affects dynamics on networks. One can readily formulate wave equations on large metric graphs by specifying relevant boundary conditions and rules at each junction. For example, Joly et al. [150] recently examined wave propagation of the standard linear wave equation on fractal trees. Because many natural real-life settings are spatially embedded (e.g. wave propagation in granular materials [54, 151] and traffic-flow patterns in cities), it will be particularly valuable to examine wave dynamics on (both synthetic and empirical) spatially embedded networks [152]. Therefore, I anticipate that it will be very insightful to undertake studies of wave dynamics on networks such as random geometric graphs, random neighborhood graphs, and other spatial structures. A key question in network analysis is how different types of network structure affect different types of dynamical processes [11], and the ability to take a limit as model synthetic networks become infinitely large (specifically, a thermodynamic limit) is crucial for obtaining many key theoretical insights.

6.5 Adaptive Networks

Dynamics of networks and dynamics on networks do not occur in isolation; instead, they are coupled to each other. Researchers have studied the coevolution of network structure and the states of nodes and/or edges in the context of “adaptive networks” (which are also known as “coevolving networks”) [153, 154]. Whether it is sensible to study a dynamical process on a time-independent network, a temporal network with frozen (or no) node or edge states, or an adaptive network depends on the relative time scales of the dynamics of a network’s structure and the dynamics of the states of the nodes and/or edges of the network. See [11] for a brief discussion.

Models in the form of adaptive networks provide a promising mechanistic approach to simultaneously explain both structural features (e.g. degree distributions) and temporal features (e.g. burstiness) of empirical data [155]. Incorporating adaptation into conventional models can produce extremely interesting and rich dynamics, such as the spontaneous development of extreme states in opinion models [156].

Most studies of adaptive networks that include some analysis (i.e. that go beyond numerical computations) have employed rather artificial adaptation rules for adding, removing, and rewiring edges. This is relevant for mathematical tractability, but it is important to go beyond these limitations by considering more realistic types of adaptation and coupling between network structure (including multilayer structures, as in [157]) and the states of nodes and edges.

6.5.1 Contagion Models

When people are sick, they stay home from work or school. People also form and remove social connections (both online and offline) based on observed opinions and behaviors. To study these ideas using adaptive networks, researchers have coupled models of biological and social contagions with time-dependent networks [11, 79].

An early example of an adaptive network of disease spread is the SIS model in Gross et al. [158]. In this model, susceptible nodes sometimes rewire their incident edges to “protect themselves”. Suppose that we have an N-node network with a constant number of undirected edges. Each node is either susceptible (i.e. in state S) or infected (i.e. in state I). At each time step, for each edge between nodes in different states (a so-called “discordant edge”), the susceptible node becomes infected with probability \(\lambda \). For each discordant edge, with some probability \(\kappa \), the incident susceptible node breaks the edge and rewires to some other susceptible node. (Multi-edges and self-edges are not allowed.) This is called a “rewire-to-same” mechanism in the language of some adaptive opinion models [159, 160]. In each time step, infected nodes can also recover to become susceptible again.
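A minimal sketch of one time step of this adaptive SIS model follows. The description above does not fix the order of the infection, rewiring, and recovery updates or the recovery probability, so the ordering used here (infection along discordant edges, then rewiring of edges that are still discordant, then recovery of nodes that were infected at the start of the step) and the parameter `r_recover` are assumptions. This is an illustration, not the implementation of Gross et al. [158].

```python
import random


def adaptive_sis_step(state, edges, lam, kappa, r_recover, rng):
    """One time step of a discrete-time adaptive SIS model with rewire-to-same.

    state: list of 'S'/'I' indexed by node; updated in place.
    edges: set of frozenset({u, v}) undirected edges; updated in place.
    The update order and the recovery probability r_recover are assumptions.
    """
    n = len(state)
    old = list(state)  # states at the start of the step
    # 1. Infection: each discordant edge transmits with probability lam.
    for e in [e for e in edges if len({old[x] for x in e}) == 2]:
        u, v = tuple(e)
        s_node = u if old[u] == 'S' else v
        if rng.random() < lam:
            state[s_node] = 'I'
    # 2. Rewire-to-same: a still-discordant edge is rewired with probability kappa.
    for e in [e for e in edges if len({state[x] for x in e}) == 2]:
        if rng.random() >= kappa:
            continue
        u, v = tuple(e)
        s_node = u if state[u] == 'S' else v
        candidates = [w for w in range(n)
                      if w != s_node and state[w] == 'S'
                      and frozenset((s_node, w)) not in edges]  # no self- or multi-edges
        if candidates:
            edges.remove(e)
            edges.add(frozenset((s_node, rng.choice(candidates))))
    # 3. Recovery: nodes infected at the start of the step recover with probability r_recover.
    for i in range(n):
        if old[i] == 'I' and rng.random() < r_recover:
            state[i] = 'S'


# Usage: a random network with N nodes and M edges and 10 initially infected nodes.
rng = random.Random(3)
N, M = 100, 300
edges = set()
while len(edges) < M:
    edges.add(frozenset(rng.sample(range(N), 2)))
state = ['I' if i < 10 else 'S' for i in range(N)]
for _ in range(200):
    adaptive_sis_step(state, edges, lam=0.05, kappa=0.3, r_recover=0.02, rng=rng)
print("prevalence:", state.count('I') / N)
```

Each rewiring removes one edge and adds one edge, so the number of edges stays constant, as the model requires.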

Gross et al. [158] studied how the rewiring probability affects the “basic reproduction number”, a scalar that measures how many infections occur, on average, from one infected node in a population in which all other nodes are susceptible to infection [77, 78, 100]. The basic reproduction number determines the size of a critical infection probability \(\lambda _*\) that is necessary to maintain a stable epidemic (as determined traditionally using linear stability analysis). A high rewiring rate can significantly increase \(\lambda _*\) and thereby significantly reduce the prevalence of a contagion. Although results like these are perhaps intuitively clear, other studies of contagions on adaptive networks have yielded potentially actionable (and arguably nonintuitive) insights. For example, Scarpino et al. [105] demonstrated using an adaptive compartmental model (along with some empirical evidence) that the spread of a disease can accelerate when individuals with essential societal roles (e.g. health-care workers) become ill and are replaced with healthy individuals.

6.5.2 Opinion Models

Opinion models are another type of model with many interesting adaptive variants [11, 80], especially in the form of generalizations of classical voter models [99].

Voter dynamics were first considered in the 1970s by Clifford and Sudbury [161] as a model for species competition, and the dynamical process that they introduced was dubbed “the voter model” shortly thereafter by Holley and Liggett [162]. Voter dynamics are fun and are popular to study [99], although it is questionable whether it is ever possible to genuinely construe a voter model as a model of voters [163].

Holme and Newman [164] undertook an early study of a rewire-to-same adaptive voter model. Inspired by their research, Durrett et al. [159] compared the dynamics from two different types of rewiring in an adaptive voter model. In each variant of their model, one considers an N-node network and supposes that each node is in one of two states. The network structure and the node states coevolve. Pick an edge uniformly at random. If this edge is discordant, then with probability \(1 - \kappa \), one of its incident nodes adopts the opinion state of the other. Otherwise, with complementary probability \(\kappa \), a rewiring action occurs: one removes the discordant edge, and one of the associated nodes attaches to a new node either through a rewire-to-same mechanism (choosing uniformly at random among the nodes with the same opinion state) or through a “rewire-to-random” mechanism (choosing uniformly at random among all nodes). As with the adaptive SIS model of [158], self-edges and multi-edges are not allowed.

The models in [159] evolve until there are no discordant edges. There are several key questions. Does the system reach a consensus (in which all nodes are in the same state)? If so, how long does it take to converge to consensus? If not, how many opinion clusters (each of which is a connected component, perhaps interpretable as an “echo chamber”, of the final network) are there at steady state? How long does it take to reach this state? The answers and analysis are subtle; they depend on the initial network topology, the initial conditions, and the specific choice of rewiring rule. As with other adaptive network models, researchers have developed some nonrigorous theory (e.g. using mean-field approximations and their generalizations) on adaptive voter models with simplistic rewiring schemes, but they have struggled to extend these ideas to models with more realistic rewiring schemes. There are very few mathematically rigorous results on adaptive voter models, although there do exist some, under various assumptions on initial network structure and edge density [165].
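The following sketch implements the update rule of the adaptive voter models described above, for both rewire-to-same and rewire-to-random, and runs until no discordant edges remain. Details that the description leaves open (each endpoint is equally likely to adopt an opinion or to rewire, the cap `max_steps`, and the random initial network) are assumptions, so treat this as an illustration rather than a reproduction of [159].

```python
import random


def adaptive_voter(n, edge_list, opinions, kappa, rule, rng, max_steps=200_000):
    """Adaptive voter model with rewire-to-same (rule="same") or rewire-to-random
    (rule="random") dynamics. Returns (opinions, edges, number of steps)."""
    edges = list(edge_list)                  # undirected edges stored as (u, v) tuples
    present = {frozenset(e) for e in edges}  # fast checks that forbid multi-edges
    ops = list(opinions)
    steps = 0
    while steps < max_steps and any(ops[u] != ops[v] for u, v in edges):
        steps += 1
        k = rng.randrange(len(edges))        # pick an edge uniformly at random
        u, v = edges[k]
        if ops[u] == ops[v]:
            continue                         # concordant edge: nothing happens
        if rng.random() < 1 - kappa:
            # Opinion update: a randomly chosen endpoint adopts the other's opinion.
            if rng.random() < 0.5:
                ops[u] = ops[v]
            else:
                ops[v] = ops[u]
        else:
            # Rewiring: a randomly chosen endpoint a drops the edge and attaches to w.
            a = u if rng.random() < 0.5 else v
            pool = [w for w in range(n)
                    if w != a and frozenset((a, w)) not in present
                    and (rule == "random" or ops[w] == ops[a])]
            if pool:
                w = rng.choice(pool)
                present.remove(frozenset((u, v)))
                present.add(frozenset((a, w)))
                edges[k] = (a, w)
    return ops, edges, steps


# Usage: a random network with n nodes, m edges, and random initial opinions.
rng = random.Random(42)
n, m = 50, 100
seen, edge_list = set(), []
while len(edge_list) < m:
    u, v = rng.sample(range(n), 2)
    if frozenset((u, v)) not in seen:
        seen.add(frozenset((u, v)))
        edge_list.append((u, v))
opinions = [rng.randrange(2) for _ in range(n)]
final_ops, final_edges, steps = adaptive_voter(n, edge_list, opinions, kappa=0.5,
                                               rule="same", rng=rng)
print(steps, "steps; fraction with opinion 1:", sum(final_ops) / n)
```

Counting the connected components of the final network (for example, with a union-find pass over `final_edges`) gives the number of opinion clusters discussed above.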

Researchers have generalized adaptive voter models to consider more than two opinion states [166] and more general types of rewiring schemes [167]. As with other adaptive networks, analyzing adaptive opinion models with increasingly diverse types of rewiring schemes (ideally with a move towards increasing realism) is particularly important. In [160], Yacoub Kureh and I studied a variant of a voter model with nonlinear rewiring (in our model, the probability that determines whether a node rewires or adopts a neighbor’s opinion is a function of how well it “fits in” with the nodes in its neighborhood), including a “rewire-to-none” scheme to model unfriending and unfollowing in online social networks. It is also important to study adaptive opinion models with more realistic types of opinion dynamics. Promising examples include adaptive generalizations of bounded-confidence models (see the introduction of [98] for a brief review of bounded-confidence models), which have continuous opinion states, with nodes interacting either with other nodes or with other entities (such as media [168]) whose opinions are sufficiently close to theirs. A recent numerical study examined an adaptive bounded-confidence model [169]; this is an important direction for future investigations.

6.5.3 Synchronization of Adaptive Oscillators

It is also interesting to examine how the adaptation of oscillators—including their intrinsic frequencies and/or the network structure that couples them to each other—affects the collective behavior (e.g. synchronization) of a network of oscillators [75]. Such ideas are useful for exploring mechanistic models of learning in the brain (e.g. through adaptation of coupling between oscillators to produce a desired limit cycle [170]).

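One nice study is a paper by Skardal et al. [171], who examined an adaptive model of coupled Kuramoto oscillators as a toy model of learning. First, we write the Kuramoto system as

\[ \frac{\mathrm {d}\theta _i}{\mathrm {d}t} = \omega _i + \sum _{j=1}^{N} f_{ij}(\theta _j - \theta _i)\,, \qquad i \in \{1, \ldots , N\}\,, \]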

where \(f_{ij}\) is a \(2\pi \)-periodic function of the phase difference between oscillators i and j. The function \(f_{ij}\) incorporates the matrix elements \(b_{ij}\) and \(a_{ij}\) from (6.4). One way to examine adaptation is to define an “order parameter” \(r_i\) (which, in its traditional form, quantifies the amount of coherence of the coupled Kuramoto oscillators [75]) for the ith oscillator by

and to consider the following dynamical system:

where \(\mathrm {Re}(\zeta )\) denotes the real part of a quantity \(\zeta \) and \(\mathrm {Im}(\zeta )\) denotes its imaginary part. In the model (6.6), \(\lambda _D\) denotes the largest positive eigenvalue of the adjacency matrix \(\mathbf{A}\), the variable \(z_i(t)\) is a time-delayed version of \(r_i\) with time parameter \(\tau \) (with \(\tau \rightarrow 0\) implying that \(z_i \rightarrow r_i\)), and \(z_i^*\) denotes the complex conjugate of \(z_i\). One draws the frequencies \(\omega _i\) from some distribution (e.g. a Lorentz distribution, as in [171]), and we recall that \(b_{ij}\) is the coupling strength that is experienced by oscillator i from oscillator j. The parameter T gives an adaptation time scale, and \(\alpha \in \mathbb {R}\) and \(\beta \in \mathbb {R}\) are parameters (which one can adjust to study bifurcations). Skardal et al. [171] interpreted scenarios with \(\beta > 0\) as “Hebbian” adaptation (see [172]) and scenarios with \(\beta < 0\) as anti-Hebbian adaptation, as they observed that oscillator synchrony is promoted when \(\beta > 0\) and inhibited when \(\beta < 0\).

6.6 Higher-Order Structures and Dynamics

Most studies of networks have focused on networks with pairwise connections, in which each edge (unless it is a self-edge, which connects a node to itself) connects exactly two nodes to each other. However, many interactions—such as playing games, coauthoring papers and other forms of collaboration, and horse races—often occur between three or more entities of a network. To examine such situations, researchers have increasingly studied “higher-order” structures in networks, as they can exert a major influence on dynamical processes.

6.6.1 Hypergraphs

Perhaps the simplest way to account for higher-order structures in networks is to generalize from graphs to “hypergraphs” [1]. Hypergraphs possess “hyperedges” that encode a connection between an arbitrary number of nodes, such as between all coauthors of a paper. This allows one to make important distinctions, such as between a k-clique (in which there are pairwise connections between each pair of nodes in a set of k nodes) and a hyperedge that connects all k of those nodes to each other, without the need for any pairwise connections.

One way to study a hypergraph is as a “bipartite network”, in which nodes of a given type can be adjacent only to nodes of another type. For example, a scientist can be adjacent to a paper that they have written [173] and a legislator can be adjacent to a committee on which they sit [174]. It is important to generalize ideas from graph theory to hypergraphs, such as by developing models of random hypergraphs [8, 175, 176].
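A small example makes the distinction between a 3-clique and a 3-node hyperedge concrete. In the sketch below (with hypothetical author labels and helper names), one three-author paper and three two-author papers have identical pairwise projections, but their bipartite node–hyperedge representations differ.

```python
from itertools import combinations


def bipartite_edges(hyperedges):
    """Edges (node, hyperedge index) of the bipartite node-hyperedge representation."""
    return {(v, k) for k, he in enumerate(hyperedges) for v in he}


def clique_expansion(hyperedges):
    """Pairwise projection: join every pair of nodes that share at least one hyperedge."""
    return {frozenset(pair) for he in hyperedges for pair in combinations(sorted(he), 2)}


# Hypothetical coauthorship data: each hyperedge is the author set of one paper.
h_triple = [{"A", "B", "C"}]                     # one three-author paper
h_pairs = [{"A", "B"}, {"B", "C"}, {"A", "C"}]   # three two-author papers

# Both projections are the same 3-clique, so the pairwise graph cannot tell them apart...
print(clique_expansion(h_triple) == clique_expansion(h_pairs))  # True
# ...but the bipartite representations differ (3 versus 6 node-paper edges).
print(len(bipartite_edges(h_triple)), len(bipartite_edges(h_pairs)))  # 3 6
```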

6.6.2 Simplicial Complexes

Another way to study higher-order structures in networks is to use “simplicial complexes” [13, 177, 178]. A simplicial complex is a space that is built from a union of points, edges, triangles, tetrahedra, and higher-dimensional polytopes (see Fig. 6.1d). Simplicial complexes are topological spaces that one can use to approximate other topological spaces and thereby capture some of their properties.

A p-dimensional simplex (i.e. a p-simplex) is a p-dimensional polytope that is the convex hull of its \(p+1\) vertices (i.e. nodes). Any \(\tilde{p}\)-dimensional subset (with \(\tilde{p} < p\)) of a p-simplex that is itself a simplex is a face of the p-simplex. A simplicial complex K is a set of simplices such that (1) every face of a simplex from K is also in K and (2) the intersection of any two simplices \(\sigma _1, \sigma _2 \in K\) is a face of both \(\sigma _1\) and \(\sigma _2\). An increasing sequence \(\emptyset = K_0 \subseteq K_1 \subseteq K_2\subseteq \cdots \subseteq K_l = K \) of simplicial complexes forms a filtered simplicial complex; each \(K_i\) is a subcomplex. As discussed in [13] and references therein, one can examine the homology of each subcomplex. In studying the homology of a topological space, one computes topological invariants that quantify features of different dimensionalities [178]. One studies the “persistent homology” of a filtered simplicial complex to quantify the topological structure of a data set (e.g. a point cloud) across multiple scales of such data. The goal of such “topological data analysis” is to measure the “shape” of data in the form of connected components, “holes” of various dimensionality, and so on [13]. From the perspective of network analysis, this yields insight into types of large-scale structure that complement traditional ones (such as community structure). See [179] for a friendly, nontechnical introduction to topological data analysis.
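The simplest topological invariants of a single complex are easy to compute for small examples. The sketch below builds a simplicial complex combinatorially as the set of all faces of a list of simplices (so condition (1) holds by construction) and computes its Betti numbers with real coefficients using \(\beta _p = n_p - \mathrm {rank}\, \partial _p - \mathrm {rank}\, \partial _{p+1}\), where \(n_p\) is the number of p-simplices and \(\partial _p\) is the boundary map. \(\beta _0\) counts connected components and \(\beta _1\) counts independent 1-dimensional holes. This computes the homology of one complex, not persistent homology; a persistence computation would track such features across the subcomplexes \(K_i\) of a filtration. The helper names are hypothetical.

```python
from itertools import combinations

import numpy as np


def closure(simplices):
    """Downward closure: every nonempty face of each simplex (tuples of vertex labels)."""
    K = set()
    for s in simplices:
        s = tuple(sorted(s))
        for k in range(1, len(s) + 1):
            K.update(combinations(s, k))
    return K


def boundary_matrix(K, p):
    """Real matrix of the boundary map from p-simplices to (p-1)-simplices."""
    rows = sorted(s for s in K if len(s) == p)      # (p-1)-simplices have p vertices
    cols = sorted(s for s in K if len(s) == p + 1)  # p-simplices have p+1 vertices
    index = {s: i for i, s in enumerate(rows)}
    D = np.zeros((len(rows), len(cols)))
    for j, s in enumerate(cols):
        for i in range(len(s)):
            D[index[s[:i] + s[i + 1:]], j] = (-1) ** i  # alternating-sign face sum
    return D


def betti_numbers(K):
    """Betti numbers beta_p = n_p - rank(d_p) - rank(d_{p+1}) over the reals."""
    dim = max(len(s) for s in K) - 1
    ranks = {p: int(np.linalg.matrix_rank(boundary_matrix(K, p))) for p in range(1, dim + 1)}
    betti = []
    for p in range(dim + 1):
        n_p = sum(1 for s in K if len(s) == p + 1)
        betti.append(n_p - ranks.get(p, 0) - ranks.get(p + 1, 0))
    return betti


hollow = closure([(0, 1), (1, 2), (0, 2)])  # boundary of a triangle: one 1-dimensional hole
filled = closure([(0, 1, 2)])               # the filled triangle (a 2-simplex) has no hole
print(betti_numbers(hollow), betti_numbers(filled))  # [1, 1] [1, 0, 0]
```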

A natural goal is to generalize ideas from network analysis to simplicial complexes. Important efforts include generalizing configuration models of random graphs [180] to random simplicial complexes [181, 182]; generalizing well-known network growth mechanisms, such as preferential attachment [183]; and developing geometric notions, like curvature, for networks [184]. An important modeling issue when studying higher-order network data is the question of when it is more appropriate (or convenient) to use the formalism of hypergraphs and when it is more appropriate to use that of simplicial complexes.

The computation of persistent homology has yielded insights into a diverse set of models and applications in network science and complex systems. Examples include granular materials [54, 185], functional brain networks [177, 186], quantification of “political islands” in voting data [187], percolation theory [188], contagion dynamics [95], swarming and collective behavior [189], chaotic flows in ODEs and PDEs [190], diurnal cycles in tropical cyclones [191], and mathematics education [192]. See the introduction to [13] for pointers to numerous other applications.

Most uses of simplicial complexes in network science and complex systems have been in the context of topological data analysis (especially the computation of persistent homology) and its applications [13, 14, 193]. In my discussion, however, I focus instead on a somewhat different (and increasingly popular) topic: the generalization of dynamical processes on and of networks to simplicial complexes to study the effects of higher-order interactions on network dynamics. Simplicial structures influence the collective behavior of the dynamics of coupled entities on networks (e.g. they can lead to novel bifurcations and phase transitions), and they provide a natural approach to analyze p-entity interaction terms, including for \(p \ge 3\), in dynamical systems. Existing work includes research on linear diffusion dynamics (in the form of Hodge Laplacians, such as in [194]) and generalizations of a variety of other popular types of dynamical processes on networks.
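As a small illustration of linear diffusion with a Hodge Laplacian, the sketch below uses the Hodge 1-Laplacian \(\mathbf{L}_1 = \mathbf{B}_1^{T} \mathbf{B}_1 + \mathbf{B}_2 \mathbf{B}_2^{T}\), where \(\mathbf{B}_1\) and \(\mathbf{B}_2\) are the node–edge and edge–triangle boundary matrices, and solves \(\dot{\mathbf{x}} = -\mathbf{L}_1 \mathbf{x}\) for flows on edges. The example complex (a square with one diagonal, in which only one of the two triangles is filled) is a hypothetical choice. The dimension of the kernel of \(\mathbf{L}_1\) equals the first Betti number, so a flow decays to its projection onto this kernel, which circulates around the unfilled hole.

```python
import numpy as np

edges = [(0, 1), (0, 2), (0, 3), (1, 2), (2, 3)]   # oriented from lower to higher label
triangles = [(0, 1, 2)]                            # only this triangle is filled
n_vertices = 4

B1 = np.zeros((n_vertices, len(edges)))
for j, (a, b) in enumerate(edges):
    B1[a, j], B1[b, j] = -1.0, 1.0                # boundary of [a, b] is [b] - [a]

edge_index = {e: j for j, e in enumerate(edges)}
B2 = np.zeros((len(edges), len(triangles)))
for k, (a, b, c) in enumerate(triangles):
    # boundary of [a, b, c] is [b, c] - [a, c] + [a, b]
    B2[edge_index[(b, c)], k] = 1.0
    B2[edge_index[(a, c)], k] = -1.0
    B2[edge_index[(a, b)], k] = 1.0

L1 = B1.T @ B1 + B2 @ B2.T                          # Hodge 1-Laplacian (symmetric)


def diffuse(x0, t):
    """Exact solution of dx/dt = -L1 x at time t, via the eigendecomposition of L1."""
    evals, evecs = np.linalg.eigh(L1)
    return evecs @ (np.exp(-evals * t) * (evecs.T @ x0))


evals = np.linalg.eigvalsh(L1)
n_harmonic = int(np.sum(np.abs(evals) < 1e-10))     # dim ker L1 = first Betti number
x0 = np.array([1.0, 0.0, 0.0, 0.0, 0.0])            # unit flow on edge (0, 1)
x_late = diffuse(x0, t=50.0)
print(n_harmonic)  # 1
print(x_late)      # approximately (1/8) * [1/3, 2/3, -1, 1/3, 1]
```

The surviving flow is divergence-free at every node and has zero circulation around the filled triangle, so it is the harmonic part of the initial flow.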

6.6.3 Coupled Phase Oscillators with p-Body Interactions with \(p \ge 3\)

Given the ubiquitous study of coupled Kuramoto oscillators [75], a sensible starting point for exploring the impact of simultaneous coupling of three or more oscillators on a system’s qualitative dynamics is to study a generalized Kuramoto model. For example, to include both two-entity (“two-body”) and three-entity interactions in a model of coupled oscillators on networks, we write [195]

\[ \dot{\mathbf{x}}_i = \boldsymbol{\omega }_i(\mathbf{x}_i) + \sum _{j=1}^{N} \sum _{k=1}^{N} \mathbf{f}_{ijk}(\mathbf{x}_i, \mathbf{x}_j, \mathbf{x}_k)\,, \]

where \({\varvec{\omega }}_i\) describes the intrinsic dynamics of oscillator i and the three-oscillator interaction term \(\mathbf{f}_{ijk}\) can also encompass two-oscillator interaction terms \(\mathbf{f}_{ij}(\mathbf{x}_i,\mathbf{x}_j)\).

An example of N coupled Kuramoto oscillators with three-body interactions is [195]

where we draw the coefficients \(a_{ij}\), \(b_{ij}\), \(c_{ijk}\), \(\alpha _{1ij}\), \(\alpha _{2ij}\), \(\alpha _{3ijk}\), and \(\alpha _{4ijk}\) from various probability distributions. Including three-body interactions leads to a large variety of intricate dynamics, and I anticipate that incorporating the formalism of simplicial complexes will be very helpful for categorizing the possible dynamics.

In the last few years, several other researchers have also studied Kuramoto models with three-body interactions [196,197,198]. A recent study [198], for example, discovered a continuum of abrupt desynchronization transitions with no counterpart in abrupt synchronization transitions. There have been mathematical studies of coupled oscillators with interactions of three or more entities using methods such as normal-form theory [199] and coupled-cell networks [26].

An important point, as one can see in the above discussion (which does not employ the mathematical formalism of simplicial complexes), is that one does not necessarily need to explicitly use the language of simplicial complexes to study interactions between three or more entities in dynamical systems. Nevertheless, I anticipate that explicitly incorporating the formalism of simplicial complexes will be useful both for studying coupled oscillators on networks and for other dynamical systems. In upcoming studies, it will be important to determine when this formalism helps illuminate the dynamics of multi-entity interactions in dynamical systems and when simpler approaches suffice.

6.6.4 Social Dynamics and Simplicial Complexes

Several recent papers have generalized models of social dynamics by incorporating higher-order interactions [62, 200,201,202]. For example, perhaps somebody’s opinion is influenced by a group discussion of three or more people, so it is relevant to consider opinion updates that are based on higher-order interactions. Some of these papers use some of the terminology of simplicial complexes, but it is mostly unclear (except perhaps for [201]) how the models in those papers take advantage of the associated mathematical formalism, so arguably it often may be unnecessary to use such language. Nevertheless, these models are very interesting and provide promising avenues for further research.

Petri and Barrat [62] generalized activity-driven models to simplicial complexes. Such a simplicial activity-driven (SAD) model generates time-dependent simplicial complexes, on which it is desirable to study dynamical processes (see Sect. 6.4), such as opinion dynamics, social contagions, and biological contagions.

The simplest version of the SAD model is defined as follows.

- Each node i has an activity rate \(a_i\) that we draw independently from a distribution F(x).
- At each discrete time step (of length \(\Delta t\)), we start with N isolated nodes. Each node i is active with a probability of \(a_i \Delta t\), independently of all other nodes. If it is active, it creates a \((p-1)\)-simplex (forming, in network terms, a clique of p nodes) with \(p - 1\) other nodes that we choose uniformly and independently at random (without replacement). One can either use a fixed value of p or draw p from some probability distribution.
- At the next time step, we delete all edges, so all interactions have a constant duration. We then generate new interactions from scratch.

This version of the SAD model is Markovian, and it is desirable to generalize it in various ways (e.g. by incorporating memory or community structure).
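The three rules above can be sketched in Python as follows. This is a minimal illustration under stated assumptions. The power-law choice of F(x) on \([\epsilon, 1]\) is a common choice for activity-driven models but is not prescribed in the text. The value of p is fixed, and the helper names `power_law_activities`, `sad_step`, and `clique_edges` are hypothetical.

```python
import random


def power_law_activities(n, gamma=2.5, eps=1e-3, rng=random):
    """Draw n activity rates from F(x) proportional to x**(-gamma) on [eps, 1] by
    inverse-transform sampling (an assumed choice of F; requires gamma != 1)."""
    lo = eps ** (1.0 - gamma)
    return [(lo + rng.random() * (1.0 - lo)) ** (1.0 / (1.0 - gamma)) for _ in range(n)]


def sad_step(activities, dt, p, rng=random):
    """One time step of the simplest SAD model. Nodes start isolated; returns a
    list of (p-1)-simplices, each a sorted tuple of p distinct node indices."""
    n = len(activities)
    simplices = []
    for i, a_i in enumerate(activities):
        if rng.random() < a_i * dt:  # node i is active with probability a_i * dt
            others = [j for j in range(n) if j != i]
            partners = rng.sample(others, p - 1)  # uniform, without replacement
            simplices.append(tuple(sorted([i] + partners)))
    return simplices


def clique_edges(simplices):
    """Project simplices onto pairwise edges (each (p-1)-simplex is a p-clique)."""
    edges = set()
    for s in simplices:
        for x in range(len(s)):
            for y in range(x + 1, len(s)):
                edges.add((s[x], s[y]))
    return edges


if __name__ == "__main__":
    rng = random.Random(42)
    acts = power_law_activities(100, rng=rng)
    for t in range(3):
        # Interactions last exactly one step: each snapshot is built from scratch.
        snapshot = sad_step(acts, dt=1.0, p=3, rng=rng)
        print(t, len(snapshot), len(clique_edges(snapshot)))
```

Because the activities are fixed and each snapshot is generated from scratch, consecutive snapshots are independent of one another. Memory or community structure would enter through how `sad_step` selects partners.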

Iacopini et al. [200] recently developed a simplicial contagion model that generalizes an SIS process on a graph. Consider a simplicial complex K with N nodes, and associate each node i with a state \(x_i(t) \in \{0,1\}\) at time t. If \(x_i(t) = 0\), node i is part of the susceptible compartment S; if \(x_i(t) = 1\), it is part of the infected compartment I. The density of infected nodes at time t is \(\rho (t) = \frac{1}{N}\sum _{i = 1}^N x_i(t)\). Suppose that there are D parameters \(\varpi _1, \ldots , \varpi _D\) (with \(D \in \{1, \ldots , N-1\}\)), where \(\varpi _d\) represents the probability per unit time that a susceptible node i that participates in a d-dimensional simplex \(\sigma \) is infected from each of the faces of \(\sigma \), under the condition that all of the other nodes of the face are infected. That is, \(\varpi _1\) is the probability per unit time that node i is infected by an adjacent infected node j via the edge (i, j). Similarly, \(\varpi _2\) is the probability per unit time that node i is infected via the 2-simplex (i, j, k) in which both j and k are infected, and so on. Recovery proceeds as in the SIR model that I discussed in Sect. 6.4.2 (an infected node recovers with a constant probability per unit time), except that a recovered node i becomes susceptible again instead of acquiring permanent immunity. One can envision numerous interesting generalizations of this model (e.g. ones that are inspired by ideas that have been investigated in contagion models on graphs).
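A discrete-time Python sketch of this model for D = 2 follows. It assumes synchronous updates and approximates each probability per unit time \(\varpi_d\) by a per-step probability \(\varpi_d \Delta t\). It also introduces a hypothetical recovery rate `mu`, and the names `simplicial_sis_step` and `density` are likewise hypothetical. Each edge with an infected neighbor and each 2-simplex whose two other nodes are both infected is treated as an independent infection channel.

```python
import random


def simplicial_sis_step(state, edges, triangles, w1, w2, mu, dt, rng=random):
    """One synchronous update of a simplicial SIS sketch with D = 2.

    state: list of 0 (susceptible) or 1 (infected) values.
    edges, triangles: 1-simplices and 2-simplices as tuples of node indices.
    w1, w2: infection rates (varpi_1, varpi_2); mu: hypothetical recovery rate.
    Each channel fires independently with probability rate * dt.
    """
    # Downward closure: every edge of a 2-simplex is also a 1-simplex.
    all_edges = {tuple(sorted(e)) for e in edges}
    for (a, b, c) in triangles:
        all_edges |= {tuple(sorted(e)) for e in ((a, b), (a, c), (b, c))}

    new_state = list(state)
    for i, x_i in enumerate(state):
        if x_i == 1:
            if rng.random() < mu * dt:
                new_state[i] = 0  # a recovered node is susceptible again
            continue
        # Channels of order 1: edges (i, j) whose other endpoint j is infected.
        n1 = sum(1 for (u, v) in all_edges
                 if i in (u, v) and state[v if u == i else u] == 1)
        # Channels of order 2: 2-simplices whose two other nodes are both infected.
        n2 = sum(1 for tri in triangles
                 if i in tri and all(state[j] == 1 for j in tri if j != i))
        # Node i escapes infection only if every channel fails.
        p_escape = (1 - w1 * dt) ** n1 * (1 - w2 * dt) ** n2
        if rng.random() >= p_escape:
            new_state[i] = 1
    return new_state


def density(state):
    """rho(t) = (1/N) * sum_i x_i(t)."""
    return sum(state) / len(state)


if __name__ == "__main__":
    rng = random.Random(7)
    edges = [(0, 1), (1, 2), (2, 3), (3, 0)]
    triangles = [(0, 1, 2), (0, 2, 3)]
    state = [1, 0, 0, 0]
    for t in range(5):
        state = simplicial_sis_step(state, edges, triangles, 0.3, 0.6, 0.2, 1.0, rng)
        print(t, density(state))
```

Setting `w2 = 0` recovers an ordinary SIS process on the graph formed by the 1-simplices. This makes it straightforward to compare the two processes on the same complex.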

6.7 Outlook

The study of networks is one of the most exciting and rapidly expanding areas of mathematics, and it touches on myriad other disciplines in both its methodology and its applications. Network analysis is increasingly prominent in numerous fields of scholarship (both theoretical and applied), it interacts very closely with data science, and it is important for a wealth of applications.

My focus in this chapter has been a forward-looking presentation of ideas in network analysis. My choices of which ideas to discuss reflect their connections to dynamics and nonlinearity, although I have also mentioned a few other burgeoning areas of network analysis in passing. Through its exciting combination of graph theory, dynamical systems, statistical mechanics, probability, linear algebra, scientific computation, data analysis, and many other subjects—and through a comparable diversity of applications across the sciences, engineering, and the humanities—the mathematics and science of networks has plenty to offer researchers for many years.

Notes

- 1.

There are recent theoretical advances on examining network structure amidst rich but noisy data [32], and it is important for research on both network structure and dynamics to explicitly consider such scenarios.

- 2.

- 3.

Nodes that are good authorities tend to have good hubs that point to them, and nodes that are good hubs tend to point to good authorities.

- 4.

A major open problem in multilayer network analysis is the measurement and/or inference of values of \(\omega \) (and generalizations of it in the form of coupling tensors) [10].

- 5.

In this case, linearization yields Laplacian dynamics, which is closely related to a random walk on a network [97].

- 6.

Combinatorial graphs, and more general combinatorial objects, are my main focus in this chapter. This subsection is an exception.

- 7.

References

M.E.J. Newman, Networks, 2nd edn. (Oxford University Press, Oxford, UK, 2018)

M.A. Porter, S.D. Howison, The role of network analysis in industrial and applied mathematics (2017), arXiv:1703.06843

M.A. Porter, G. Bianconi, Eur. J. Appl. Math. 27, 807 (2016)

F. Bullo, Lectures on Network Systems, 1.3 edn. (Kindle Direct Publishing, 2019), http://motion.me.ucsb.edu/book-lns

R. Lambiotte, M. Rosvall, I. Scholtes, Nat. Phys. 15, 313 (2019)

P. Holme, J. Saramäki, Phys. Rep. 519, 97 (2012)

P. Holme, Eur. Phys. J. B 88, 234 (2015)

P.S. Chodrow, A. Mellor, Appl. Netw. Sci. 5, 9 (2020)

M. Kivelä, A. Arenas, M. Barthelemy, J.P. Gleeson, Y. Moreno, M.A. Porter, J. Complex Netw. 2, 203 (2014)

M.A. Porter, Notices Am. Math. Soc. 65, 1419 (2018)

M.A. Porter, J.P. Gleeson, Dynamical Systems on Networks: A Tutorial, vol. 4 in Frontiers in Applied Dynamical Systems: Reviews and Tutorials (Springer, Cham, Switzerland, 2016)

M. Rosvall, A. Esquivel, A. Lancichinetti, J. West, R. Lambiotte, Nat. Commun. 5, 4630 (2014)

N. Otter, M.A. Porter, U. Tillmann, P. Grindrod, H.A. Harrington, Eur. Phys. J. Data Sci. 6, 17 (2017)

A. Patania, F. Vaccarino, G. Petri, Eur. Phys. J. Data Sci. 6, 7 (2017)

V.A. Traag, P. Doreian, A. Mrvar, in Advances in Network Clustering and Blockmodeling, ed. by P. Doreian, V. Batagelj, A. Ferligoj (Wiley, Hoboken, NJ, USA, 2020), p. 225

P. Van Mieghem, Graph Spectra for Complex Networks (Cambridge University Press, Cambridge, UK, 2013)

P. Holme, J. Saramäki (eds.), Temporal Networks (Springer, Heidelberg, Germany, 2013)

P. Holme, J. Saramäki (eds.), Temporal Network Theory (Springer, Cham, Switzerland, 2019)

E. Valdano, L. Ferreri, C. Poletto, V. Colizza, Phys. Rev. X 5, 021005 (2015)

D. Taylor, M.A. Porter, P.J. Mucha, in Temporal Network Theory, ed. by P. Holme, J. Saramäki (Springer, Cham, Switzerland, 2019), p. 325

M. De Domenico, A. Solé-Ribalta, E. Cozzo, M. Kivelä, Y. Moreno, M.A. Porter, S. Gómez, A. Arenas, Phys. Rev. X 3, 041022 (2013)

I. de Sola Pool, M. Kochen, Soc. Netw. 1, 5 (1978)

S. Wasserman, K. Faust, Social Network Analysis: Methods and Applications (Cambridge University Press, Cambridge, UK, 1994)

D.F. Gleich, SIAM Rev. 57, 321 (2015)

R. Milo, S. Shen-Orr, S. Itzkovitz, N. Kashtan, D. Chklovskii, U. Alon, Science 298, 824 (2002)

M. Golubitsky, I. Stewart, A. Török, SIAM J. Appl. Dyn. Syst. 4, 78 (2005)

M.A. Porter, J.-P. Onnela, P.J. Mucha, Notices Am. Math. Soc. 56, 1082 (2009)

S. Fortunato, D. Hric, Phys. Rep. 659, 1 (2016)

P. Csermely, A. London, L.Y. Wu, B. Uzzi, J. Complex Netw. 1, 93 (2013)

P. Rombach, M.A. Porter, J.H. Fowler, P.J. Mucha, SIAM Rev. 59, 619 (2017)

H. Simon, Proc. Am. Philos. Soc. 106, 467 (1962)

M.E.J. Newman, Nat. Phys. 14, 542 (2018)

T.P. Peixoto, in Advances in Network Clustering and Blockmodeling, ed. by P. Doreian, V. Batagelj, A. Ferligoj (Wiley, Hoboken, NJ, USA, 2020), p. 289

A. Aleta, Y. Moreno, Annu. Rev. Condens. Matter Phys. 10, 45 (2019)

J. Tang, M. Musolesi, C. Mascolo, V. Latora, V. Nicosia, in SNS ’10—Proceedings of the 3rd Workshop on Social Network Systems. Paris (Association for Computing Machinery, New York City, NY, USA, 2010), Article No. 3

H. Kim, J. Tang, R. Anderson, C. Mascolo, Comput. Netw. 56, 983 (2012)

K. Lerman, R. Ghosh, J.H. Kang, in MLG’10—Proceedings of the 8th Workshop on Mining and Learning with Graphs. Washington D.C. (Association for Computing Machinery, New York City, NY, USA, 2010), p. 70

P. Grindrod, D.J. Higham, Proc. R. Soc. A 470, 20130835 (2014)

P. Grindrod, D.J. Higham, SIAM Rev. 55, 118 (2013)

D. Walker, H. Xie, K.K. Yan, S. Maslov, J. Stat. Mech.: Theory Exp. 2007, P06010 (2007)

R.A. Rossi, D.F. Gleich, in WAW 2012—Proceedings of the 9th International Workshop on Algorithms and Models for the Web Graph. Halifax (Springer, Heidelberg, Germany, 2012), p. 126

S. Praprotnik, V. Batagelj, Ars Math. Contemp. 11, 11 (2015)

J. Flores, M. Romance, J. Comput. Appl. Math. 330, 1041 (2018)

D. Taylor, S.A. Myers, A. Clauset, M.A. Porter, P.J. Mucha, Multiscale Model. Simul. 15, 537 (2017)

D. Taylor, M.A. Porter, P.J. Mucha, Tunable eigenvector-based centralities for multiplex and temporal networks (2019), arXiv:1904.02059

P. Bonacich, J. Math. Sociol. 2, 113 (1972)

J. Kleinberg, J. ACM 46(5), 604 (1999)

The Mathematics Genealogy Project (2020), http://www.genealogy.ams.org. Accessed 27 Jan 2020

T.P. Peixoto, M. Rosvall, Nat. Commun. 8, 582 (2017)

P.J. Mucha, T. Richardson, K. Macon, M.A. Porter, J.-P. Onnela, Science 328(5980), 876 (2010)

M. De Domenico, A. Lancichinetti, A. Arenas, M. Rosvall, Phys. Rev. X 5, 011027 (2015)

L.G.S. Jeub, M.W. Mahoney, P.J. Mucha, M.A. Porter, Netw. Sci. 5, 144 (2017)

M. Vaiana, S. Muldoon, Resolution limits for detecting community changes in multilayer networks (2018), arXiv:1803.03597

L. Papadopoulos, M.A. Porter, K.E. Daniels, D.S. Bassett, J. Complex Netw. 6, 485 (2018)

J. Moody, P.J. Mucha, Netw. Sci. 1, 119 (2013)

M. Sarzynska, E.A. Leicht, G. Chowell, M.A. Porter, J. Complex Netw. 4, 363 (2016)

S. Pilosof, M.A. Porter, M. Pascual, S. Kéfi, Nat. Ecol. Evol. 1, 0101 (2017)

K.R. Finn, M.J. Silk, M.A. Porter, N. Pinter-Wollman, Anim. Behav. 149, 7 (2019)

M. Bazzi, L.G.S. Jeub, A. Arenas, S.D. Howison, M.A. Porter, Phys. Rev. Res. 2, 023100 (2020)

N. Perra, B. Gonçalves, R. Pastor-Satorras, A. Vespignani, Sci. Rep. 2, 469 (2012)

L. Zino, A. Rizzo, M. Porfiri, SIAM J. Appl. Dyn. Syst. 17, 2830 (2018)

G. Petri, A. Barrat, Phys. Rev. Lett. 121, 228301 (2018)

E. Valdano, M.R. Fiorentin, C. Poletto, V. Colizza, Phys. Rev. Lett. 120, 068302 (2018)

L. Zino, A. Rizzo, M. Porfiri, Phys. Rev. Lett. 117, 228302 (2016)

L. Zino, A. Rizzo, M. Porfiri, J. Complex Netw. 5, 924 (2017)

S. Motegi, N. Masuda, Sci. Rep. 2, 904 (2012)

W. Ahmad, M.A. Porter, M. Beguerisse-Díaz, Tie-decay temporal networks in continuous time and eigenvector-based centralities (2019), arXiv:1805.00193

P.J. Laub, T. Taimre, P.K. Pollett, Hawkes processes (2015), arXiv:1507.02822

E.M. Jin, M. Girvan, M.E.J. Newman, Phys. Rev. E 64, 046132 (2001)

X. Zuo, M.A. Porter, Models of continuous-time networks with tie decay, diffusion, and convection (2019), arXiv:1906.09394

R. Erban, S.J. Chapman, P.K. Maini, A practical guide to stochastic simulations of reaction-diffusion processes (2007), arXiv:0704.1908

C.L. Vestergaard, M. Génois, PLoS Comput. Biol. 11, e1004579 (2015)

S. Brin, L. Page, Comput. Netw. 30, 107 (1998)

A. Arenas, A. Díaz-Guilera, J. Kurths, Y. Moreno, C. Zhou, Phys. Rep. 469, 93 (2008)

F.A. Rodrigues, T.K.D.M. Peron, P. Ji, J. Kurths, Phys. Rep. 610, 1 (2016)

M.O. Jackson, Y. Zenou, in Handbook of Game Theory, vol. 4, ed. by P. Young, S. Zamir (Elsevier, Amsterdam, The Netherlands, 2014), p. 95

I.Z. Kiss, J.C. Miller, P.L. Simon, Mathematics of Epidemics on Networks: From Exact to Approximate Models (Springer, Cham, Switzerland, 2017)

R. Pastor-Satorras, C. Castellano, P. Van Mieghem, A. Vespignani, Rev. Mod. Phys. 87, 925 (2015)

S. Lehmann, Y.-Y. Ahn, Complex Spreading Phenomena in Social Systems: Influence and Contagion in Real-World Social Networks (Springer, Cham, Switzerland, 2018)

C. Castellano, S. Fortunato, V. Loreto, Rev. Mod. Phys. 81, 591 (2009)

Y.-Y. Liu, A.-L. Barabási, Rev. Mod. Phys. 88, 035006 (2016)

A. Motter, Chaos 25, 097501 (2015)

D.J. Watts, Proc. Natl. Acad. Sci. USA 99, 5766 (2002)

J. Chalupa, P.L. Leath, G.R. Reich, J. Phys. C 12, L31 (1979)

J.P. Gleeson, Phys. Rev. X 3, 021004 (2013)

M. Granovetter, Am. J. Sociol. 83, 1420 (1978)

T.W. Valente, Network Models of the Diffusion of Innovations (Hampton Press, New York City, NY, USA, 1995)

D. Kempe, J. Kleinberg, É. Tardos, in KDD ’03—Proceedings of the Ninth ACM SIGKDD International Conference on Knowledge Discovery and Data Mining. Washington D.C., August 2003 (Association for Computing Machinery, New York City, NY, USA, 2003), p. 137

S. Melnik, J.A. Ward, J.P. Gleeson, M.A. Porter, Chaos 23, 013124 (2013)

S.W. Oh, M.A. Porter, Chaos 28, 033101 (2018)

J.S. Juul, M.A. Porter, Chaos 28, 013115 (2018)

J.S. Juul, M.A. Porter, Phys. Rev. E 99, 022313 (2019)

P. Oliver, G. Marwell, R. Teixeira, Am. J. Sociol. 91, 522 (1985)

P.G. Fennell, S. Melnik, J.P. Gleeson, Phys. Rev. E 94, 052125 (2016)

D. Taylor, F. Klimm, H.A. Harrington, M. Kramár, K. Mischaikow, M.A. Porter, P.J. Mucha, Nat. Commun. 6, 7723 (2015)

A.A. Saberi, Phys. Rep. 578, 1 (2015)

N. Masuda, M.A. Porter, R. Lambiotte, Phys. Rep. 716–717, 1 (2017)

X.F. Meng, R.A. Van Gorder, M.A. Porter, Phys. Rev. E 97, 022312 (2018)

S. Redner, C. R. Phys. 20, 275 (2019)

F. Brauer, C. Castillo Chavez, Mathematical Models in Population Biology and Epidemiology, 2nd edn. (Springer, Heidelberg, Germany, 2012)

A. Arenas, W. Cota, J. Gomez-Gardenes, S. Gómez, C. Granell, J.T. Matamalas, D. Soriano-Panos, B. Steinegger, A mathematical model for the spatiotemporal epidemic spreading of COVID19, medRxiv (2020), https://doi.org/10.1101/2020.03.21.20040022

M. De Domenico, C. Granell, M.A. Porter, A. Arenas, Nat. Phys. 12, 901 (2016)

V. Colizza, R. Pastor-Satorras, A. Vespignani, Nat. Phys. 3, 276 (2007)

P. Van Mieghem, R. Van de Bovenkamp, Phys. Rev. Lett. 110, 108701 (2013)