Abstract

To address the high computational complexity of image fusion based on the traditional non-subsampled contourlet transform (NSCT), a novel NSCT fusion algorithm based on compressive sensing (CS) and dynamic weighted non-negative matrix factorization (DWNMF) is proposed. Firstly, NSCT is employed to decompose the source images. The band-pass directional sub-band coefficients, which carry most of the computational load, are fused using CS theory, while the low-pass sub-band coefficients are fused with a DWNMF-based rule. Secondly, the minimum total variation (min-TV) algorithm is adopted to reconstruct the fused band-pass directional sub-band coefficients from the CS domain, giving the fused band-pass sub-band coefficients in the NSCT domain. Finally, the inverse NSCT is applied to the fused low-pass and band-pass sub-images to obtain the final fused image. Simulation results show that the proposed approach not only improves the fusion quality but also reduces the computational complexity.

1 Introduction

Image fusion integrates two or more images of the same scene obtained by different sensors, in order to produce a new image that contains richer information and is better suited to visual perception [1]. Image fusion has spread to many fields, such as security monitoring, intelligent transportation and medical diagnostics. Currently, image fusion methods are mainly divided into pixel-level, feature-level and decision-level fusion [2]. In pixel-level fusion, multi-resolution analysis is widely employed. The wavelet transform is popular because of its good time-frequency localization, but it can only represent “point” singularities effectively; for images with “linear” features it is not an optimal approximation. In 2002, Do proposed the contourlet transform (CT) [3], which retains the time-frequency analysis property and also offers good directionality and anisotropy. However, CT decomposes the image with down-sampling, so it lacks translation invariance. In 2006, NSCT was proposed by A. L. da Cunha et al. [4]. NSCT has excellent directionality and anisotropy as well as translation invariance, and it effectively overcomes the “Gibbs” effect caused by traditional multi-resolution analysis.

However, the computational complexity of the NSCT algorithm, which is based on Nyquist sampling theory, is high, and it produces a large amount of redundant data. Is there, then, a compressive sampling technique that breaks through the traditional Nyquist sampling model, so that fewer samples are involved in image processing without losing information, while the original image can still be reconstructed accurately? In 2006, Donoho, Candes, Romberg and Tao proposed CS theory, breaking through the traditional signal processing model. It shows that if a signal is K-sparse (or compressible) in some transform domain, a measurement matrix (or observation matrix) that is incoherent with the orthogonal basis can be designed to measure the signal. The number of measurements is far smaller than the length of the original signal. The signal is then reconstructed by solving a convex optimization problem [5]. In 2008, Wan Tao first used CS for image fusion [6], effectively reducing the computational complexity, but the fused image shows some distortion and obvious stripes. Later, X. Li et al. improved Wan’s method [7], but the fusion quality is still not very satisfactory.

Hence, building on the NSCT algorithm, this paper applies CS theory to NSCT image fusion, which not only preserves the fusion quality but also alleviates the high redundancy of traditional NSCT. Firstly, NSCT is employed to decompose the source images into low-pass sub-band coefficients, which represent the overall features of the original image, and band-pass directional sub-band coefficients, which represent its detail information. The band-pass directional sub-band coefficients, whose fusion requires a large amount of computation, are fused with a CS-based method. For the low-pass sub-band coefficients, a weighted-average rule is usually employed, which does not take their feature information fully into account. To further improve the quality of the fused image, a DWNMF-based rule is employed to fuse the low-pass sub-band. The DWNMF algorithm was proposed by Liu Xiaopeng in 2010 and applied to image fusion [8]. Its weighted coefficients are adjusted dynamically according to the mutation degrees of pixels, and it optimally integrates the feature information from the source images, effectively improving the contrast of the fused result.

In summary, this paper puts forward a novel NSCT image fusion algorithm based on CS and DWNMF. Experimental results show that the proposed method not only keeps abundant details in the fused image, but also reduces the computational complexity of the traditional NSCT fusion algorithm.

2 NSCT

NSCT consists of the non-subsampled pyramid filter bank (NSPFB) and the non-subsampled directional filter bank (NSDFB) [9]. Firstly, NSPFB decomposes the original image into a low-pass sub-image and a band-pass sub-image, and the low-pass sub-image is decomposed again at the next scale. After k levels of NSPFB decomposition, we get k+1 sub-images: 1 low-pass sub-image and k band-pass sub-images. After the multi-scale decomposition is finished, each band-pass sub-image is decomposed at l levels by NSDFB, which yields \(2^{l}\) band-pass directional sub-images. Figure 1 is the schematic diagram of a two-level NSCT decomposition.
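To make the sub-band bookkeeping concrete, the following minimal Python sketch counts the outputs of an NSCT decomposition. The function name `nsct_subband_counts` is hypothetical, and the example levels `[2, 3]` are the directional levels used in the experiments of Sect. 6; no filtering is performed here.

```python
def nsct_subband_counts(direction_levels):
    """Count the sub-images produced by an NSCT decomposition of one image.

    direction_levels[i] is the number of NSDFB levels l applied to the
    band-pass image of pyramid scale i, which yields 2**l directional
    sub-bands at that scale.
    """
    k = len(direction_levels)                         # k NSPFB levels
    pyramid_outputs = k + 1                           # k band-pass + 1 low-pass
    directional = [2 ** l for l in direction_levels]  # sub-bands per scale
    return pyramid_outputs, directional, sum(directional)


print(nsct_subband_counts([2, 3]))  # prints (3, [4, 8], 12)
```

With two pyramid levels and directional levels {2, 3}, each source image therefore contributes one low-pass sub-band and twelve band-pass directional sub-bands to the fusion.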

NSPFB draws on the idea of the à trous algorithm and is designed as a dual-channel non-subsampled filter bank, as shown in Fig. 2(a), where \(H_0(z)\) and \(G_0(z)\) are respectively the low-pass decomposition and reconstruction filters of NSPFB, and \(H_1(z)\) and \(G_1(z)\) are the high-pass decomposition and reconstruction filters of NSPFB. The basic building block of NSDFB is also a dual-channel filter bank, as shown in Fig. 2(b), where \(U_0(z)\) and \(V_0(z)\) are respectively the low-pass decomposition and reconstruction filters of NSDFB, while \(U_1(z)\) and \(V_1(z)\) are the high-pass decomposition and reconstruction filters of NSDFB.

3 CS Theory

Consider a real-valued, finite-length, one-dimensional discrete-time signal \(x\in R^{N}\). According to signal theory, it can be represented as (1),

$$\begin{aligned} x=\varPsi \alpha \end{aligned}$$(1)

where \(\varPsi \) represents an \(N\times N\) orthonormal basis and \(\alpha \) is an \(N\times 1\) vector containing only K non-zero coefficients. If \(K\ll N\), we say that x is compressible, \(\varPsi \) is the sparse basis, and \(\alpha \) is the sparse representation of x in the \(\varPsi \) domain. We can then design an \(M\times N\) measurement matrix \(\varPhi \) (\(M\ll N\)) and multiply \(\varPhi \) by x to achieve compressive sampling of the signal x as in formula (2),

$$\begin{aligned} y=\varPhi x=\varPhi \varPsi \alpha =A^{CS}\alpha \end{aligned}$$(2)

where \(y\in R^{M}\) represents the measurement vector and \(A^{CS}=\varPhi \varPsi \). The process of CS measurement is shown in Fig. 3.
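The measurement step can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the sparse basis is taken to be the identity (so \(x=\alpha \)), the Gaussian entries are scaled by \(1/\sqrt{M}\) (a common convention, not something stated in this paper), and the helper names `gaussian_matrix`, `matvec` and `compressive_measure` are hypothetical.

```python
import random


def gaussian_matrix(rows, cols, rng):
    # i.i.d. Gaussian entries, scaled by 1/sqrt(rows) (assumed convention)
    scale = rows ** -0.5
    return [[rng.gauss(0.0, 1.0) * scale for _ in range(cols)]
            for _ in range(rows)]


def matvec(a, v):
    # plain matrix-vector product a @ v
    return [sum(a_ij * v_j for a_ij, v_j in zip(row, v)) for row in a]


def compressive_measure(x, m, seed=0):
    """Return (phi, y) where phi is m-by-len(x) Gaussian and y = phi @ x."""
    rng = random.Random(seed)
    phi = gaussian_matrix(m, len(x), rng)
    return phi, matvec(phi, x)


# K-sparse test signal; Psi is the identity here, so x equals alpha.
n, k, m = 64, 4, 32            # m / n = 0.5, the sampling rate used in Sect. 6
rng = random.Random(1)
alpha = [0.0] * n
for idx in rng.sample(range(n), k):
    alpha[idx] = rng.uniform(-1.0, 1.0)

phi, y = compressive_measure(alpha, m)
print(len(alpha), "samples ->", len(y), "measurements")
```

Because the same seed reproduces the same \(\varPhi \), two signals can be measured with an identical matrix, which is what a measurement-domain fusion rule requires.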

R. Baraniuk showed that an independent and identically distributed Gaussian random matrix can serve as a universal compressive measurement matrix, since it is incoherent with almost all sparse bases. A Gaussian random matrix is employed as the measurement matrix in this paper. Compressive reconstruction essentially amounts to solving (2); because M is far smaller than N, (2) is an underdetermined system. Numerous studies show that, for a compressible signal, if \(\varPhi \) and \(\varPsi \) satisfy the restricted isometry property (RIP) [10], we can first recover the sparse signal \(\alpha \) and then multiply \(\varPsi \) by \(\alpha \) to get the original signal x. Candes showed that the K-sparse signal x can be recovered exactly by solving the problem in formula (3) [11],

$$\begin{aligned} \min \Vert \alpha \Vert _{1} \quad s.t. \quad y=\varPhi \varPsi \alpha \end{aligned}$$(3)

Equation (3) is a convex optimization problem known as basis pursuit (BP). A widely used greedy alternative is the orthogonal matching pursuit (OMP) algorithm, which is easy to implement and fast. OMP is especially suitable for the recovery of one-dimensional signals. Candes then proposed the min-TV model for 2-D images, based on the observation that the image gradient is sparse [12]. Min-TV is more robust than OMP and can accurately accomplish compressive reconstruction of images. We adopt the min-TV algorithm to reconstruct images as in formula (4),

$$\begin{aligned} \min TV(x) \quad s.t. \quad \varPhi x=y \end{aligned}$$(4)

The objective function TV(x) is the sum of the magnitudes of the discrete gradient over the image, defined as (5),
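As an illustration of this objective, the sketch below evaluates the usual isotropic form \(TV(x)=\sum _{i,j}\sqrt{(x_{i+1,j}-x_{i,j})^{2}+(x_{i,j+1}-x_{i,j})^{2}}\). Two assumptions are made: that (5) takes this standard form, and that differences reaching past the last row or column are set to zero. The function name `total_variation` is hypothetical, and the code only evaluates TV; it does not solve the min-TV problem.

```python
import math


def total_variation(img):
    """Isotropic total variation of a 2-D list of numbers.

    Uses forward differences; a difference that would reach past the last
    row or column is taken as zero (assumed boundary handling).
    """
    rows, cols = len(img), len(img[0])
    tv = 0.0
    for i in range(rows):
        for j in range(cols):
            dv = img[i + 1][j] - img[i][j] if i + 1 < rows else 0.0
            dh = img[i][j + 1] - img[i][j] if j + 1 < cols else 0.0
            tv += math.hypot(dv, dh)   # gradient magnitude at (i, j)
    return tv


flat = [[1.0] * 4 for _ in range(4)]
step = [[0.0, 0.0, 1.0, 1.0] for _ in range(3)]
print(total_variation(flat))  # 0.0: a constant image has no gradient
print(total_variation(step))  # 3.0: one unit jump in each of the 3 rows
```

A piecewise-constant image has a small TV, which is why minimizing TV under the measurement constraint favours images with sparse gradients.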

4 WNMF

The standard NMF algorithm describes purely additive and sparse data; Daniel D. Lee et al. proved that it converges [13]. The calculation model is as follows:

$$\begin{aligned} V_{p\times q}\approx W_{p\times r}H_{r\times q} \end{aligned}$$(6)

where the columns of W are called the basis vectors, which are linearly independent and sparse and can therefore express the characteristic structure of the original data, and the columns of H are the corresponding weighted coefficients. The basic process of NMF can be described as looking for a \(p\times r\) non-negative matrix W and an \(r\times q\) non-negative matrix H that minimize the objective function F(V, WH), the cost function measuring how closely WH approximates V. To solve for W and H, the Euclidean distance between V and WH is used as the cost function F(V, WH), defined as (7),

The value of this function is 0 if and only if \(V=WH\). The corresponding iteration rules are:

where “\(\cdot \)” indicates element-wise multiplication of matrices. To further improve the algorithm’s ability to extract local features, Blondel proposed the WNMF algorithm [14] and used it for face recognition, introducing the weighted coefficient matrix T into formulas (8) and (9) to get the new iteration rules (10) and (11),
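The weighted iteration can be sketched as follows. The sketch assumes that (8), (9) are the usual multiplicative rules of Lee and Seung, \(H\leftarrow H\cdot (W^{T}V)/(W^{T}WH)\) and \(W\leftarrow W\cdot (VH^{T})/(WHH^{T})\), and that (10), (11) weight both V and WH element-wise by T, as in Blondel's formulation; the division is element-wise. All function names are hypothetical, and the random initialisation and the small `eps` guard are illustrative choices.

```python
import random


def matmul(a, b):
    cols_b = list(zip(*b))
    return [[sum(x * y for x, y in zip(row, col)) for col in cols_b]
            for row in a]


def transpose(a):
    return [list(col) for col in zip(*a)]


def hadamard(a, b):
    # element-wise product, the "dot multiplication" of the text
    return [[x * y for x, y in zip(ra, rb)] for ra, rb in zip(a, b)]


def ratio_update(m, num, den, eps=1e-12):
    # element-wise m * num / den; eps guards against division by zero
    return [[v * n / (d + eps) for v, n, d in zip(rm, rn, rd)]
            for rm, rn, rd in zip(m, num, den)]


def wnmf(v, t, r, iters=200, seed=0):
    """Weighted NMF: find non-negative W (p x r), H (r x q) with T.(WH) ~ T.V."""
    rng = random.Random(seed)
    p, q = len(v), len(v[0])
    w = [[rng.uniform(0.1, 1.0) for _ in range(r)] for _ in range(p)]
    h = [[rng.uniform(0.1, 1.0) for _ in range(q)] for _ in range(r)]
    tv = hadamard(t, v)
    for _ in range(iters):
        wt = transpose(w)
        h = ratio_update(h, matmul(wt, tv),
                         matmul(wt, hadamard(t, matmul(w, h))))
        ht = transpose(h)
        w = ratio_update(w, matmul(tv, ht),
                         matmul(hadamard(t, matmul(w, h)), ht))
    return w, h


def weighted_error(v, t, w, h):
    # weighted squared Euclidean distance between V and WH
    wh = matmul(w, h)
    return sum(t_ij * (v_ij - wh_ij) ** 2
               for rv, rt, rwh in zip(v, t, wh)
               for v_ij, t_ij, wh_ij in zip(rv, rt, rwh))


v = [[1.0, 2.0], [2.0, 4.0], [3.0, 6.0]]   # exactly rank 1
t = [[1.0, 1.0] for _ in v]                # uniform weights reduce to plain NMF
w, h = wnmf(v, t, r=1, iters=50)
print(weighted_error(v, t, w, h))
```

With uniform weights the updates coincide with standard NMF; non-uniform entries of T make the factorization fit some elements of V more closely than others, which is the mechanism the dynamic variant exploits.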

5 Fusion Method Based on CS and DWNMF

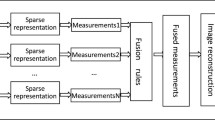

In this paper, an improved NSCT fusion method is proposed to address the high computational complexity of image fusion based on traditional NSCT. To obtain a better fusion result, different fusion rules are adopted for the low-frequency and high-frequency sub-band coefficients. The fusion scheme is shown in Fig. 4.

Main steps of the proposed method:

(1) Decompose images A and B by NSCT into low-pass sub-band coefficients {\(L^{A}\), \(L^{B}\)} and band-pass directional sub-band coefficients {\(H^{A}_{l,k}\), \(H^{B}_{l,k}\)}, where L represents the low-pass sub-band coefficients and \(H_{l,k}\) represents the band-pass directional sub-band coefficients at scale l and direction k;

(2) Fuse the low-pass sub-band coefficients with the fusion rule described in Sect. 5.1 to get the fused low-pass coefficients \(F^{low}\);

(3) Use a Gaussian random matrix to observe \(H^{A}_{l,k}\) and \(H^{B}_{l,k}\) to get the measurements \(X^{A}_{l,k}\) and \(Y^{B}_{l,k}\);

(4) Fuse \(X^{A}_{l,k}\) and \(Y^{B}_{l,k}\) according to the rule in Sect. 5.2 to obtain the fused measurement \(Z^{F}_{l,k}\) in the CS domain;

(5) Employ the min-TV algorithm according to (12) to reconstruct the fused band-pass sub-band \(F_{l,k}^{high}\) from \(Z_{l,k}^{F}\). The fused sparse band-pass sub-band \(F_{l,k}^{'high}\) is reconstructed first, and the inverse sparse transform is then applied to \(F_{l,k}^{'high}\) to obtain \(F_{l,k}^{high}\) in the NSCT domain;

$$\begin{aligned} \min TV(F^{'high}_{l,k}) \quad s.t. \quad \varPhi F^{'high}_{l,k}=Z^{F}_{l,k} \end{aligned}$$(12)

(6) Reconstruct \(F^{low}\) and \(F^{high}_{l,k}\) by the inverse NSCT to obtain the final fused image F.
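The six steps can be wired together as in the following Python skeleton. It only fixes the data flow: every stage (`decompose`, `fuse_low`, `measure`, `fuse_measurements`, `reconstruct`, `recompose`) is a hypothetical callable supplied by the caller, since no particular NSCT or min-TV implementation is assumed here.

```python
def fuse_images(img_a, img_b, *, decompose, fuse_low, measure,
                fuse_measurements, reconstruct, recompose):
    """Run steps (1)-(6) with caller-supplied stages.

    decompose(img)           -> (low_pass, [band_pass sub-bands])
    fuse_low(low_a, low_b)   -> fused low-pass sub-band
    measure(sub_band)        -> CS measurements (same matrix for A and B)
    fuse_measurements(x, y)  -> fused measurements
    reconstruct(z)           -> fused band-pass sub-band (e.g. via min-TV)
    recompose(low, highs)    -> fused image (inverse transform)
    """
    low_a, highs_a = decompose(img_a)              # step (1)
    low_b, highs_b = decompose(img_b)
    fused_low = fuse_low(low_a, low_b)             # step (2)
    fused_highs = []
    for h_a, h_b in zip(highs_a, highs_b):         # one pass per (scale, direction)
        x = measure(h_a)                           # step (3)
        y = measure(h_b)
        z = fuse_measurements(x, y)                # step (4)
        fused_highs.append(reconstruct(z))         # step (5)
    return recompose(fused_low, fused_highs)       # step (6)


# Toy stand-ins that only exercise the data flow (not a real transform).
toy = fuse_images(
    [1, 2, 3], [3, 4, 5],
    decompose=lambda img: (img[0], [img[1:]]),
    fuse_low=lambda a, b: (a + b) / 2,
    measure=lambda h: h,
    fuse_measurements=lambda x, y: [max(p, q) for p, q in zip(x, y)],
    reconstruct=lambda z: z,
    recompose=lambda low, highs: [low] + highs[0],
)
print(toy)  # prints [2.0, 4, 5]
```

Keeping the stages as parameters makes it explicit that only the band-pass branch passes through the measurement domain, while the low-pass branch is fused directly.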

5.1 Fusion Rule of Low-Pass Sub-band Based on DWNMF

The low-pass sub-band contains a large amount of basic information about the source image. To integrate the feature information better, a DWNMF-based rule is employed to fuse the low-pass sub-band. Firstly, the low-frequency coefficients \(L^{A}\) and \(L^{B}\) are rearranged into column vectors, which together form V in formulas (10) and (11). As the iteration progresses, the weighted coefficients are adjusted dynamically according to the latest iteration result. If a specific indicator of the iteration result reaches the target threshold, the adjustment of the corresponding weighted coefficient stops; otherwise, the weighted coefficient continues to be adjusted in the direction that improves the indicator. By selecting a particular index as the objective function and adjusting the weighted coefficients dynamically, that indicator can be improved in the fused image. Because human eyes are sensitive to image contrast, the mutation degree, which follows the same trend as the contrast, is selected as the objective function in order to improve the contrast and visual quality of the fused image. The mutation degree is defined as follows,

where x(m, n) represents the low-pass sub-band coefficient at (m, n) and u stands for the average value of the low-pass sub-band coefficients. The specific fusion steps for the low-pass sub-band coefficients are as follows:

Step 1: Calculate the pixel mutation degrees of the two low-frequency sub-bands \(L^{A}\) and \(L^{B}\) according to (13), denoted \(\tau _{A}\) and \(\tau _{B}\) respectively. The greater of the two is selected as the target mutation degree of the fused low-pass sub-band.

Step 2: Initialize the basis vector matrix W and the weighted vector matrix H, and initialize the weighted coefficient matrix T, of size \(n\times 2\), with all elements equal to 0.5.

Step 3: Iterate according to (10) and (11). If the number of iterations reaches the maximum M (set to 20), adjust the elements of W to the range of the original low-frequency coefficients and output the adjusted elements of W as the fused low-pass sub-band coefficients. Otherwise, go to Step 4.

Step 4: Calculate the mutation degree of W after the iteration according to (13). If it is greater than the target mutation degree, return to Step 3 and continue the iteration; otherwise, adjust the weighted matrix T according to formulas (14) and (15), that is, adjust \(T_{L^{A}}(m,n)\) and \(T_{L^{B}}(m,n)\) to \(T_{L^{A}}^{*}(m,n)\) and \(T_{L^{B}}^{*}(m,n)\), and return to Step 3.

where the subscripts \(L^{A}\) and \(L^{B}\) respectively denote the two low-pass sub-images. The weighted coefficient matrix T is adjusted in the direction of greater mutation degree, which effectively enhances the contrast of the fused image. The fused low-pass sub-band is recorded as \(F^{low}\).
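The control flow of Steps 1 to 4 can be sketched as below for the rank-1 case (V of size \(n\times 2\), W of size \(n\times 1\), H of size \(1\times 2\)). Several points are assumptions rather than the authors' specification: the mutation degree and the weight adjustment are passed in as stand-in callables `mutation` and `adjust_weights` in place of (13)-(15), W is initialised with the mean of the two inputs, the weighted update is the form assumed for (10), (11), and the final mapping of W to the source range is a simple min-max rescaling.

```python
def dwnmf_fuse(low_a, low_b, mutation, adjust_weights, max_iter=20, eps=1e-12):
    """Fuse two flattened, non-negative low-pass sub-bands (rank-1 sketch).

    mutation(values) -> float and adjust_weights(t, w) -> t are stand-ins
    for Eqs. (13)-(15) and must be supplied by the caller.
    """
    n = len(low_a)
    v = [[a, b] for a, b in zip(low_a, low_b)]        # V: n x 2
    target = max(mutation(low_a), mutation(low_b))    # Step 1
    t = [[0.5, 0.5] for _ in range(n)]                # Step 2: T all 0.5
    w = [(a + b) / 2.0 + eps for a, b in v]           # W: assumed initial guess
    h = [1.0, 1.0]                                    # H: 1 x 2
    for _ in range(max_iter):                         # Step 3: at most 20 passes
        for j in range(2):                            # update H with W fixed
            num = sum(w[i] * t[i][j] * v[i][j] for i in range(n))
            den = sum(w[i] * t[i][j] * w[i] * h[j] for i in range(n))
            h[j] *= num / (den + eps)
        for i in range(n):                            # update W with H fixed
            num = sum(t[i][j] * v[i][j] * h[j] for j in range(2))
            den = sum(t[i][j] * w[i] * h[j] * h[j] for j in range(2))
            w[i] *= num / (den + eps)
        if mutation(w) <= target:                     # Step 4: target not exceeded
            t = adjust_weights(t, w)
    # Map W back to the range of the source coefficients (assumed rescaling).
    lo, hi = min(low_a + low_b), max(low_a + low_b)
    w_lo, w_hi = min(w), max(w)
    span = (w_hi - w_lo) or 1.0
    return [lo + (x - w_lo) * (hi - lo) / span for x in w]


def mean_abs_dev(values):
    # illustrative stand-in only, NOT the paper's Eq. (13)
    u = sum(values) / len(values)
    return sum(abs(x - u) for x in values) / len(values)


fused = dwnmf_fuse([1.0, 2.0, 3.0, 4.0], [2.0, 2.0, 4.0, 4.0],
                   mutation=mean_abs_dev,
                   adjust_weights=lambda t, w: t)     # no-op adjustment
print(fused)
```

Passing the two rules as callables keeps the loop structure visible without committing to a particular form of the mutation degree or of the weight update.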

5.2 Fusion Rule of Band-Pass Sub-band Measurements

In fact, the CS-based fusion rule fuses measurements. Because the number of measurements is smaller than the length of the band-pass sub-band coefficients, the CS-based strategy reduces the computation time. It is well known that the sharper a region of the source image, the larger the variance of the corresponding band-pass directional sub-bands [15]. Thus, the variance of a band-pass directional sub-band can be regarded, to some degree, as an indirect measure of local high-frequency information. Moreover, the measurement process in CS theory is linear. We therefore propose a fusion method that processes the measurements according to the variance of the directional sub-band measurements. The local variance of the measurements is calculated first, the regional correlation of the measurements is then defined from these variance values, and finally the fused measurement coefficients are determined according to the correlation value [16]. The regional correlation function is defined as follows,

where \(sd_{X}(m,n)\) and \(sd_{Y}(m,n)\) respectively represent the local variance of \(X^{A}_{l,k}\) and \(Y^{B}_{l,k}\) in the \(3\times 3\) window centered at (m, n), and the subscripts X and Y denote the measurements of the band-pass directional sub-band coefficients. Let the threshold be T = 0.5. If \(corr_{X,Y}(m,n)\le T\), the corresponding information of the source images in that direction differs considerably, and the measurement with the larger variance is selected as the fused measurement of the directional sub-band coefficients. That is as follows:

Otherwise, if \(corr_{X,Y}(m,n)>T\), the correlation between the source images is high, that is, the corresponding information of the two source images in the sub-image is comparable. In this case a weighted strategy is employed as the fusion rule. It is as follows:

where \(\omega (m,n)=\frac{sd_{X}(m,n)}{sd_{X}(m,n)+sd_{Y}(m,n)}\).
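The selection and weighting rule can be sketched as follows for measurements arranged as 2-D arrays. The threshold 0.5 and the weight \(\omega \) follow the text; the similarity measure `2*sx*sy / (sx**2 + sy**2)` is only an assumed stand-in for the regional correlation function, the weighted branch is assumed to be \(\omega X+(1-\omega )Y\), and the border handling (window clipped at the edges) and the function names are illustrative.

```python
def local_variance(img, m, n):
    """Variance over the 3x3 window centred at (m, n), clipped at the borders."""
    vals = [img[i][j]
            for i in range(max(0, m - 1), min(len(img), m + 2))
            for j in range(max(0, n - 1), min(len(img[0]), n + 2))]
    mean = sum(vals) / len(vals)
    return sum((v - mean) ** 2 for v in vals) / len(vals)


def fuse_measurements(x, y, threshold=0.5, eps=1e-12):
    """Fuse two measurement arrays of equal shape, element by element."""
    fused = [[0.0] * len(x[0]) for _ in x]
    for m in range(len(x)):
        for n in range(len(x[0])):
            sx = local_variance(x, m, n)
            sy = local_variance(y, m, n)
            # ASSUMED similarity in [0, 1]; stands in for corr_{X,Y}(m, n)
            corr = 2.0 * sx * sy / (sx * sx + sy * sy + eps)
            if corr <= threshold:
                # dissimilar regions: keep the measurement with larger variance
                fused[m][n] = x[m][n] if sx >= sy else y[m][n]
            else:
                # similar regions: variance-weighted average
                omega = sx / (sx + sy + eps)
                fused[m][n] = omega * x[m][n] + (1.0 - omega) * y[m][n]
    return fused


x = [[0, 9, 0], [9, 0, 9], [0, 9, 0]]      # strongly varying measurements
y = [[1, 1, 1], [1, 1, 1], [1, 1, 1]]      # flat measurements
print(fuse_measurements(x, y) == x)         # True: x wins everywhere
```

When one input is locally flat, its variance is zero, the similarity is zero, and the rule reduces to picking the other input; when both inputs have equal local variance, the weight is 0.5 and the rule averages them.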

6 Experiment Results and Analysis

To verify the effectiveness of the proposed fusion algorithm, the “Users” night-vision visible-light and infrared images are selected as the first test set, and the multi-focus “Clocks” images are selected as the second test set. All images are 256×256 in size. The experiments are run on Windows 7 with Matlab R2010. The proposed method is compared with four other methods: (1) the fusion method proposed by Wan Tao, denoted CS-max; (2) the fusion method of X. Li, denoted CS-sd; (3) the NSCT fusion method, in which the weighted-average rule is used to fuse the low-pass sub-image and the maximum-absolute-value rule is used to fuse the band-pass sub-images; (4) the NSCT-SF-PCNN method proposed by Qu Xiaobo [17]. To make the comparison fair, the number of NSCT decomposition levels is set to 2 for all methods, with directional decomposition levels {2,3}. The “9-7” filter is employed as the scale decomposition filter and the “pkva” filter as the directional decomposition filter. The measurement matrix is a Gaussian random matrix in all cases, the sampling rate is 0.5 following reference [6], and min-TV is used as the compressive reconstruction algorithm.

The fused results are first evaluated subjectively by visual inspection, and objective evaluation criteria are then used to demonstrate the superiority of the proposed method. The selected criteria are SSIM, the criteria (Q, Qw, Qe) proposed in [18], which combine subjective and objective aspects, and Definition. SSIM measures the similarity between two images; a larger value means that the fused image contains more information from the source images, and the maximum value is 1. (Q, Qw, Qe) take human visual perception into account and measure how much salient information is preserved from the original images; the closer the value is to 1, the better the quality of the fused image. A larger Definition also means better quality.

6.1 “Users” Images Fusion Experiment

The “Users” images have been registered. The fusion results are shown in Fig. 5. Figure 5(a) and (b) are the night-vision visible-light image and the infrared image. Their characteristics differ: the visible-light image reflects spectral reflection information and has richer edges and textures, but it is not sensitive to heat targets, whereas the infrared image represents thermal radiation information and detects targets well. Fusing these two kinds of images greatly aids the understanding of the scene and the detectability of objects. The fused results based on CS-max, CS-sd, NSCT, NSCT-SF-PCNN and our method are shown in Fig. 5(c)–(g).

As Fig. 5 shows, all methods keep the important information from the source images: every fused result contains both the background information of the visible-light image and the target information of the infrared image. However, the result based on CS-max is clearly poor and its contrast is low; for example, the house, trees and ground are blurry and the person is not distinct. The result based on CS-sd is slightly better than that of CS-max. Subjectively, the fused results based on traditional NSCT and NSCT-SF-PCNN have better brightness and contrast, but their definition is still not satisfactory. In terms of contrast and definition, our method performs best: both the background and the target are represented accurately.

Objective criteria are listed in Table 1 to demonstrate the effectiveness of our method. According to Table 1, the NSCT method takes the longest time, 245.231 s, and the NSCT-SF-PCNN method is also slow. The SSIM of our method is 0.8590, which is larger than that of traditional NSCT, and its Definition is 6.7693, far larger than those of CS-max and CS-sd. The other criteria, Q, Qw and Qe, are also best for our method. In short, the proposed method effectively preserves details, and its execution time is only 18.6018 s.
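Using the two running times quoted above for the “Users” pair, the relative cost works out as follows (values rounded):

$$\begin{aligned} \frac{18.6018}{245.231}\approx 0.076, \qquad \frac{245.231}{18.6018}\approx 13.2 \end{aligned}$$

That is, on this image pair the proposed method needs roughly 7.6 % of the running time of the traditional NSCT method, a speed-up of about 13 times.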

6.2 “Clocks” Images Fusion Experiment

To further verify the robustness of our method, multi-focus images are tested and the fusion results are compared with those of the other methods. The fusion results are shown in Fig. 6. Figure 6(a) is the left-focus image of “Clocks”, in which the left clock looks clearer than the right one. Figure 6(b) is the right-focus image of “Clocks”, in which the right clock looks clearer than the left one. Fusing multi-focus images yields an all-in-focus image. Figure 6(c)–(g) are the fusion results based on CS-max, CS-sd, traditional NSCT, NSCT-SF-PCNN and our method, respectively.

In Fig. 6, (c) and (d) show obvious stripes, and their contrast and brightness are poor. Figure 6(e) and (f) have similar visual quality; they suppress the stripes effectively and retain the focused areas of the original images. Figure 6(g), the result of our method, has the best visual quality. The comparison of the fusion results of the different methods is given in Table 2. The objective evaluation criteria of our method are better than those of the others, and its running time is only about 10 % of that of traditional NSCT.

7 Conclusions

This paper takes advantage of CS and DWNMF to propose a novel NSCT image fusion algorithm that effectively reduces the high computational complexity. Firstly, the original images are decomposed by NSCT to obtain the low-pass and band-pass sub-bands. Then the CS fusion strategy is adopted to fuse the computationally expensive band-pass sub-bands, while the DWNMF algorithm is used to fuse the low-pass sub-band coefficients. Finally, the inverse NSCT is employed to obtain the fused image. Compared with the traditional NSCT fusion method, the proposed approach not only improves the quality and contrast of the fused image but also reduces the running time. Looking for better image approximation forms, such as the non-subsampled shearlet transform, to further improve the fusion quality is an important topic for future work.

References

1. Hang, Yang., et al.: Image fusion based on multiscale guided filters. J. Optoelectron. Laser 26(1), 170–176 (2015)

2. Hui, X., et al.: Detection probability of infrared and visible image fusion system. Opt. Precis. Eng. 21(12), 3206–3213 (2013)

3. Do, M.N., Vetterli, M.: Contourlets. In: Welland, G.V. (ed.) Beyond Wavelets. Academic Press (2003)

4. Da Cunha, A.L., Zhou, J., Do, M.N.: The nonsubsampled contourlet transform: theory, design, and application. IEEE Trans. Image Process. 15(10), 3089–3101 (2006)

5. Licheng, J., et al.: Development and prospect of compressive sensing. Acta Electronica Sinica 39(7), 1652–1658 (2011)

6. Wan, T., Canagarajah, N., et al.: Compressive image fusion. In: IEEE ICIP, pp. 1308–1311 (2008)

7. Li, X., Qin, S.Y.: Efficient fusion for infrared and visible images based on compressive sensing principle. IET Image Process. 5(2), 141–147 (2011)

8. Liu, S.P., et al.: Dynamic weighted non-negative matrix factorization and its using research in image fusion. Chin. J. Sens. Actuators 23(9), 1267–1271 (2010)

9. Yong, Y., et al.: Multi-focus image fusion based on human visual perception characteristic in non-subsampled contourlet transform domain. J. Image Graph. 19(3), 447–455 (2014)

10. Baraniuk, R.: A lecture on compressive sensing. IEEE Signal Process. Mag. 24(4), 118–121 (2007)

11. Donoho, D.L.: Compressed sensing. IEEE Trans. Inf. Theor. 52(4), 1289–1306 (2006)

12. Candes, E.J., Tao, T.: Near optimal signal recovery from random projections: universal encoding strategies. IEEE Trans. Inf. Theor. 52(12), 5406–5425 (2006)

13. Wang, J., Lai, S., et al.: Improved image fusion method based on NSCT and accelerated NMF. Sensors 12, 5872–5887 (2012)

14. Blondel, V.D., et al.: Weighted nonnegative matrix factorization and face feature extraction. Image Vision Comput. 1–17 (2007)

15. Chuanqi, Y., et al.: Fusion algorithm of infrared and visible light images based on NSCT transform. Syst. Eng. Electron. 30(4), 593–596 (2008)

16. Jiao, L., et al.: Intelligent SAR Image Processing and Interpretation. Science Press, Beijing (2008)

17. Xiaobo, Qu: Image fusion algorithm based on spatial frequency-motivated pulse coupled neural networks in nonsubsampled contourlet transform domain. Acta Automatica Sinica 34(12), 1508–1514 (2008)

18. Piella, G.: New quality measures for image fusion. In: Proceedings of the 7th International Conference on Information Fusion, Stockholm, Sweden, pp. 542–546 (2004)

Acknowledgment

This work is supported by the National Natural Science Foundation of China (No. 61170185), the Science and Technology Research Project of Liaoning Provincial Education Department (No. L2012052), and the Non PHD Youth Growth Fund of Shenyang Aerospace University (No. 201406Y). The authors thank http://www.quxiaobo.org/software/software_FusingImages.html and http://www.imagefusion.org for providing the source images.

© 2015 Springer International Publishing Switzerland

About this paper

Cite this paper

Wu, Y., Wang, Y., Wang, L., Shi, X. (2015). A Novel NSCT Fusion Algorithm for Images Based on Compressive Sensing and Dynamic WNMF. In: Zhang, YJ. (eds) Image and Graphics. ICIG 2015. Lecture Notes in Computer Science(), vol 9217. Springer, Cham. https://doi.org/10.1007/978-3-319-21978-3_32

DOI: https://doi.org/10.1007/978-3-319-21978-3_32

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-21977-6

Online ISBN: 978-3-319-21978-3

eBook Packages: Computer Science, Computer Science (R0)