Abstract

Multi-modal interactive maps can provide a useful aid to navigation for blind people. We have been experimenting with such maps that present information in a tactile and auditory (speech) form, but with the novel feature that the map’s orientation is tracked. This means that the map can be explored in a more ego-centric manner, as favoured by blind people. Results are encouraging, in that scores in an orientation task are better with the use of map rotation.


1 Introduction

When travelling to an unfamiliar location people may prepare in a number of ways. Sighted people will often rely on their vision, supported by visual cues such as signs, to navigate on arrival. They may also prepare in advance by consulting a map (increasingly, an online one). Maps designed for sighted users are overloaded with information, combining a range of visual encodings: geometrical, symbolic, textual, colour, etc. Users can cope with this complexity because of the power of the visual channel: they can, literally, focus on the information that is relevant to their current task and ignore the rest.

Blind people can also benefit from the use of (non-visual) maps. Indeed, they may have more need to do so, since they cannot use vision to navigate in situ. In creating maps for blind users one encounters what is known as the ‘bandwidth problem’: the non-visual senses simply do not have the capacity of vision to carry a large amount of information from which the user can filter what is needed. A common response is to use as many of the non-visual senses as possible together, in the form of multimodal interaction, with the objective that the whole may be greater than the sum of the parts. This has led to the concept of the multimodal map (e.g. [1, 6, 10]). This paper presents the results of experiments with multimodal maps that combine haptic interaction with auditory output, and that introduce the novel aspect of using the map’s orientation as an additional interactive element.

The primary objective of the research is to find out whether blind people can be better prepared to navigate in unfamiliar environments, given this form of multimodal map. A secondary aspiration is to find out more about the kinds of internal representations such people use.

2 Background

As argued above, maps can be very powerful tools. One role for (non-visual) maps is in planning: before arriving at a new location, a person may become familiar with the area by exploring a map of it. Increasingly, other technology (usually GPS-based) is used for guidance once the person is at the location, but there is still a role for the map as a means of learning the layout of a place when planning a visit.

It is relatively easy to manufacture tactile maps, using technology such as swell-paper [13], although designing such maps to be usable is quite difficult, given the limitations of the haptic senses. One approach to overcoming some of the limitations of tactile maps is to make them interactive and to provide other non-visual cues. For example, a tactile map can be mounted on a touch-sensitive pad, which can track the location of the user’s finger as well as haptic gestures such as pressing on the map. Appropriate auditory feedback, such as speech or non-speech sounds, can then be provided.

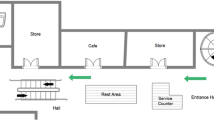

Previous audio-tactile map systems (for example [1, 6, 10]) used tactile maps as overlays and were designed for users to learn maps in a static orientation. A possible limitation of any conventional tactile map, which we have set out to explore, has to do with the style of interaction. As illustrated in Fig. 1, the user may be tracing a path in a forwards direction (Fig. 1.1 and 1.2). When they reach a corner where the path turns to the left (1.3), they keep the map in the same orientation and move their finger sideways (1.4).

Of course, this does not correspond to the way that they would behave in the real world, where, on reaching such a corner, a person would turn to the left and continue walking forwards. This is the basis of our experiments. If the orientation of the map can be detected, then (as illustrated in Fig. 2) when the map user reaches the corner (2.3) they can rotate the map and continue to trace in a forwards direction (2.4).

It seems likely that such a map will generate a more useful representation in the user’s mind. For instance, after the turn (2.4) the building represented by the grey rectangle in Figs. 1 and 2 will be to the left of the finger, and it will be to the user’s left if they walk that way in the real world. Moreover, there is evidence that this kind of approach is particularly appropriate for blind people, because they tend to use an ego-centric spatial frame of reference rather than the external, allocentric one which sighted people tend to use [11].
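The left/right reasoning above can be made concrete. The sketch below expresses a landmark’s position in a frame centred on the tracing finger, with the current direction of travel pointing forwards; this is the frame that rotating the map makes directly available at the fingertip. It is an illustration under assumed conventions (map coordinates with +y as map ‘up’, headings in degrees clockwise from ‘up’), not the T4’s software, and the coordinates in the example are hypothetical rather than taken from Figs. 1 and 2.

```python
import math

def egocentric_offset(point, finger, heading_deg):
    """Express `point` relative to the finger, with the direction of travel
    as +y (forwards) and the traveller's right as +x.

    heading_deg is measured clockwise from map 'up' (assumed convention),
    so a left turn from 'up' gives a heading of 270.
    """
    t = math.radians(heading_deg)
    dx, dy = point[0] - finger[0], point[1] - finger[1]
    # A counter-clockwise rotation by t undoes a clockwise heading of t,
    # i.e. it 'turns the map' so that the heading points forwards.
    x = dx * math.cos(t) - dy * math.sin(t)
    y = dx * math.sin(t) + dy * math.cos(t)
    return x, y

def side(point, finger, heading_deg, tol=1e-9):
    """Classify `point` as left or right of the direction of travel."""
    x, _ = egocentric_offset(point, finger, heading_deg)
    if abs(x) <= tol:
        return "ahead or behind"
    return "left" if x < 0 else "right"

# Finger at a corner (0, 10) that has just turned left (heading 270);
# a hypothetical building at (-5, 7).
print(side((-5, 7), (0, 10), 270))               # left
print(egocentric_offset((-5, 7), (0, 10), 270))  # approximately (-3.0, 5.0)
```

The building comes out ahead and to the left of the finger, which is where a walker taking the same turn would find it.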

The long-term aim of this work is to develop and test a fully-functional multimodal map system, incorporating tactile interaction with speech and non-speech audio output. However, the main focus of the experiments reported herein is the specific facility of map rotation. This builds on previous work on the role of map orientation for both sighted and blind people, as explained in the following section.

3 Experimental Precursors

Levine et al. [4] have carried out experiments on simple map use by sighted people. Rossano and Warren [7] extended this work to blind people. In their experiments blind people were presented with tactile maps and then asked to perform an orientation task in the real world. For instance, they might explore the map in Fig. 3 and then be given an instruction such as, ‘Imagine that you are standing on point B of the path that I just showed you, with point A directly in front of you—indicate the direction you need to go to get to point C.’
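The correct answer to such an instruction is a relative bearing: the direction of C from B, measured from the imagined facing direction B→A. A minimal sketch, assuming hypothetical map coordinates for the three points (the shape of the path in Fig. 3 is not given numerically) with +y as map ‘up’ and angles in degrees clockwise:

```python
import math

def bearing(frm, to):
    """Map bearing from `frm` to `to`, in degrees clockwise from map 'up'."""
    dx, dy = to[0] - frm[0], to[1] - frm[1]
    return math.degrees(math.atan2(dx, dy)) % 360.0

def required_direction(stand, face, target):
    """Direction to `target`, in degrees clockwise from straight ahead, for
    someone imagining they stand at `stand` facing `face`.

    The result is wrapped into [-180, 180): negative means to the left.
    """
    rel = bearing(stand, target) - bearing(stand, face)
    return (rel + 180.0) % 360.0 - 180.0

# Hypothetical points: B at the origin, A straight ahead, C ahead and to the right.
B, A, C = (0.0, 0.0), (0.0, 10.0), (10.0, 10.0)
print(round(required_direction(B, A, C), 6))  # 45.0
```

Because the answer depends only on the points’ relative positions, it is the same whatever orientation the map is presented in; what the alignment conditions vary is how much mental rotation is needed to arrive at it.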

The maps were presented to the participants in one of three orientations:

- aligned (i.e. in the example, in which the participant is to imagine being at point B facing A, the map would be in that orientation, as it is in Fig. 3)
- contra-aligned (i.e. Fig. 3 rotated through 180°)
- mis-aligned (rotated through 45°, 90° or 135°)

Rossano and Warren [7] found that blind participants were much more accurate in the aligned condition, achieving a correctness score of 85%.

Giudice et al. [3] also carried out work based on these experiments. They found evidence to support the hypothesis that spatial images are stored in an amodal representation in both sighted and blind people. Of interest in the context of this work is that their experiments included elements of rotation; however, it is not possible to make any direct comparison between their results and ours. Pielot et al. [14] have also conducted experiments on auditory tangible maps with rotation. In their case, the audio feedback was spatialized to match the orientation of a tangible avatar. In the experiments reported here we did not spatialize the sounds, though we plan to do so in future developments.

4 Experimental Outline

Our experiment had a number of objectives:

1. Are blind participants more accurate in pointing to a landmark when they have used a rotatable audio-tactile map in comparison to a static audio-tactile map?
2. Does their performance on this task correlate with their sense of direction?
3. What is their subjective preference for the Static and Rotation conditions?

We also collected further data that might shed light on the results: for instance, whether it is possible to identify why people are particularly good or bad at the task, and what can be learned about the cognitive representations they use in it. This paper reports on the investigation of objective 1; the data addressing objectives 2 and 3 will be reported in future publications.

5 Method

The method was based on that of Rossano and Warren. Tactile maps were produced, based on the guidelines in [4]. Alphabetical labels were provided through synthetic speech: for example, if the participant pressed on point B (Fig. 3), they would hear the label ‘B’. There were two conditions in the experiment: Static, in which it was not possible to rotate the map, and Rotation, in which it was possible to rotate it. All participants experienced both conditions, but the order of presentation was counterbalanced (i.e. 50% of the participants undertook the Static condition followed by the Rotation condition, and the other 50% the reverse).
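The speech labelling amounts to hit-testing each press against the labelled points on the overlay. A minimal sketch, assuming label positions in tablet millimetres and a 10 mm activation radius, both hypothetical; the returned label would then be handed to whatever speech synthesizer is in use.

```python
import math

# Hypothetical label positions on the 380 mm x 305 mm overlay, in millimetres.
LABELS = {"A": (190.0, 250.0), "B": (190.0, 120.0), "C": (300.0, 200.0)}

def label_at(press, labels=LABELS, radius_mm=10.0):
    """Return the name of the label nearest to the press point, provided it
    lies within radius_mm; otherwise return None (no speech output)."""
    best, best_d = None, radius_mm
    for name, (x, y) in labels.items():
        d = math.hypot(press[0] - x, press[1] - y)
        if d <= best_d:
            best, best_d = name, d
    return best

print(label_at((192.0, 118.0)))  # B
print(label_at((0.0, 0.0)))      # None
```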

Note that in the Rotation condition participants were not obliged to use rotation. Whether they did so was noted, and their results were analyzed accordingly. One expectation was that in the Rotation condition most participants would rotate the map into alignment, corresponding to the aligned condition in Rossano and Warren’s experiments, so that we might expect similar results whether the map was initially aligned or mis-aligned.

5.1 Materials

Our experiments were based on a device that we have dubbed the T4 (Fig. 4). This consists of a T3 Talking Tactile Tablet mounted on a turntable. The T3 has a 38 cm × 30.5 cm surface on which a tactile overlay can be placed. It can detect a single point of pressure on the surface, which it communicates to a computer via a (wireless) USB port. The turntable detects its angle of rotation, and hence the orientation of the map.
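Because the T3 sits on the turntable, a press position reported by the tablet is already in the map’s own frame; combining it with the turntable angle gives the position relative to the seated user. A sketch under assumptions not stated in the text: the tablet is centred on the turntable, the angle is reported in degrees clockwise as seen from above, and the user’s frame has +y pointing away from them. `T4Event` and `to_user_frame` are hypothetical names.

```python
import math
from dataclasses import dataclass

@dataclass
class T4Event:
    x_mm: float           # press position on the tablet (map frame)
    y_mm: float
    turntable_deg: float  # clockwise rotation from the start position (assumed)

def to_user_frame(event, centre=(190.0, 152.5)):
    """Press position relative to the turntable centre in the seated user's
    frame (+y away from the user, +x to the user's right).

    `centre` assumes the 380 x 305 mm tablet is centred on the turntable.
    """
    t = math.radians(event.turntable_deg)
    dx, dy = event.x_mm - centre[0], event.y_mm - centre[1]
    # Clockwise rotation by t: the map has been turned clockwise by t.
    return (dx * math.cos(t) + dy * math.sin(t),
            -dx * math.sin(t) + dy * math.cos(t))

# A point 100 mm 'up' the map, after a quarter turn clockwise, lies to the user's right.
print(to_user_frame(T4Event(190.0, 252.5, 90.0)))  # approximately (100.0, 0.0)
```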

5.2 Procedure

Each participant was briefed and gave consent for the experiment. They then completed a pre-test questionnaire verbally. The questionnaire had separate sections to obtain information on their mobility experience, experience in using tactile maps, experience in using audio-tactile maps, and demographics.

They were then introduced to the T4. They sat by the device and explored a practice map. They were then given the experimental map in one of its five orientations, followed by the task (e.g. ‘Imagine that you are standing on point B of the path, with point A directly in front of you. What is the direction to point C?’). In the Rotation condition they were then allowed to rotate the map as they wished.

When they felt they were ready they stood up and, guided by a handrail, walked to the centre of the room. They were given a beanbag in their hand, asked to point in the required direction and to drop the beanbag to mark the direction. The position of the beanbag was recorded by an overhead camera.
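From the overhead camera image, the pointing error follows from the direction of the beanbag as seen from the participant’s standing position. The sketch assumes room coordinates in which the participant faces +y, and a target direction given in degrees clockwise from straight ahead (the relative bearing asked for in the task). It reports a signed error wrapped to [-180°, 180°); the function names and coordinate conventions are assumptions, not the authors’ procedure.

```python
import math

def wrap180(angle_deg):
    """Wrap an angle in degrees into the interval [-180, 180)."""
    return (angle_deg + 180.0) % 360.0 - 180.0

def pointing_error(beanbag, stand, target_deg):
    """Signed error (degrees, clockwise positive) between the direction
    marked by the beanbag and the correct direction target_deg.

    Both directions are measured clockwise from the participant's
    straight-ahead, which is +y in the assumed room coordinates.
    """
    dx, dy = beanbag[0] - stand[0], beanbag[1] - stand[1]
    response_deg = math.degrees(math.atan2(dx, dy))
    return wrap180(response_deg - target_deg)

# Beanbag dropped at 45 degrees to the right; correct answer was 30 degrees.
print(round(pointing_error((1.0, 1.0), (0.0, 0.0), 30.0), 6))  # 15.0
```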

Each participant completed the test under the current condition (Static or Rotation) for the same map presented in each of five orientations. They then completed the tests under the other condition.
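The resulting trial sequence can be sketched as below. The five alignment values (0°, 45°, 90°, 135°, 180°) are assumed from the aligned, mis-aligned and contra-aligned levels described in Section 3; the order of alignments within a condition is not stated, so the sketch simply lists them in a fixed order. Even-numbered participants start with Static and odd-numbered ones with Rotation, which gives the 50/50 counterbalancing.

```python
CONDITIONS = ("Static", "Rotation")
ALIGNMENTS_DEG = (0, 45, 90, 135, 180)  # assumed alignment levels

def schedule(participant_index, alignments=ALIGNMENTS_DEG):
    """Trial list for one participant: every alignment under one condition,
    then every alignment under the other (counterbalanced by index parity)."""
    first, second = CONDITIONS if participant_index % 2 == 0 else CONDITIONS[::-1]
    return [(condition, a) for condition in (first, second) for a in alignments]

print(schedule(1)[:2])  # [('Rotation', 0), ('Rotation', 45)]
```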

On completion of the tests, the participant was given a post-test interview to ascertain their preferences. Twelve blind or visually impaired people undertook the experiment. They were volunteers, but each received a £25 Amazon voucher in compensation for their time.

6 Results

The distribution of the pointing accuracy measures was inspected for all conditions. The distribution was approximately normal, with a mean accuracy error of 12.5° and a standard deviation of 40.6°. However, there were three outliers in which the pointing error was close to 180°, which suggested that the participants were making reflection errors, confusing a point in front of them with a point behind. The three instances came from three different participants, two in the Static condition and one in the Rotation condition, so there was no particular pattern to these errors. Including these measures in the analyses would have skewed the results substantially, so they were removed from the dataset. So that the rest of the data from these three participants could still be used, each removed measure was replaced by the mean pointing accuracy for the condition (Static or Rotation) in which the error occurred. This resulted in an overall distribution of pointing accuracy measures with a mean of 9.2° and a standard deviation of 32.2°.
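The outlier treatment described above can be sketched as follows. The 150° cut-off for a reflection error is a hypothetical choice (the text says only that the errors were close to 180°), and the replacement value is taken to be the mean of the remaining trials in the same condition.

```python
from statistics import mean

def replace_reflection_errors(trials, threshold_deg=150.0):
    """trials: list of dicts with 'condition' ('Static' or 'Rotation') and a
    signed 'error' in degrees.

    Errors whose magnitude exceeds threshold_deg (a hypothetical cut-off) are
    treated as reflection errors and replaced by the mean of the remaining
    errors in the same condition. Returns a new list; input is not modified.
    """
    kept = {}
    for t in trials:
        if abs(t["error"]) <= threshold_deg:
            kept.setdefault(t["condition"], []).append(t["error"])
    condition_mean = {c: mean(errors) for c, errors in kept.items()}

    cleaned = []
    for t in trials:
        if abs(t["error"]) > threshold_deg:
            t = {**t, "error": condition_mean[t["condition"]]}
        cleaned.append(t)
    return cleaned
```

Replacing rather than dropping the trials keeps every participant’s design cells complete, which the repeated measures analysis requires.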

In the Rotation condition participants were not required to rotate the map. In practice, three participants did not use rotation when they could have, in a total of 11 instances. In those instances their data were treated as Static data.

To investigate whether the use of a static or rotatable map affected pointing accuracy, a two-way repeated measures analysis of variance was conducted, with Static vs Rotation as one independent variable and Initial Map Alignment as the other. There was a trend towards a significant effect of Static vs Rotation (F = 3.32, df = 1,10, p = 0.09) and a significant effect of Initial Map Alignment (F = 3.35, df = 4,40, p < 0.05). There was no significant interaction between the two variables. Figure 5 shows a substantially larger pointing error in the Static condition for all alignments except zero alignment. Error also increased in both the Static and Rotation conditions as the alignment increased to 90°, and then decreased as the alignment increased further to 180°.
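For readers who want to reproduce this kind of analysis, the sketch below computes the F ratios of a two-way fully within-subjects ANOVA from first principles, with each effect tested against its own subject interaction term. It is a generic textbook computation, not the authors’ analysis script; it omits sphericity corrections and p-values (which need an F-distribution survival function, for example `scipy.stats.f.sf`). With 2 levels of the first factor, 5 of the second and n subjects, the degrees of freedom are (1, n-1) and (4, 4(n-1)).

```python
from itertools import product

def rm_anova_2way(y):
    """Two-way repeated measures ANOVA for a fully within-subjects design.

    y[s][i][j] is the score of subject s at level i of factor A
    (e.g. Static/Rotation) and level j of factor B (e.g. initial alignment).
    Returns {effect: (F, df_effect, df_error)} for A, B and AxB.
    """
    n, a, b = len(y), len(y[0]), len(y[0][0])
    S, A, B = range(n), range(a), range(b)
    G = sum(y[s][i][j] for s, i, j in product(S, A, B)) / (n * a * b)
    mA = [sum(y[s][i][j] for s in S for j in B) / (n * b) for i in A]
    mB = [sum(y[s][i][j] for s in S for i in A) / (n * a) for j in B]
    mS = [sum(y[s][i][j] for i in A for j in B) / (a * b) for s in S]
    mAB = [[sum(y[s][i][j] for s in S) / n for j in B] for i in A]
    mAS = [[sum(y[s][i][j] for j in B) / b for s in S] for i in A]
    mBS = [[sum(y[s][i][j] for i in A) / a for s in S] for j in B]

    ss_A = n * b * sum((mA[i] - G) ** 2 for i in A)
    ss_B = n * a * sum((mB[j] - G) ** 2 for j in B)
    ss_AB = n * sum((mAB[i][j] - mA[i] - mB[j] + G) ** 2 for i, j in product(A, B))
    # Error terms: each effect's interaction with subjects.
    ss_AS = b * sum((mAS[i][s] - mA[i] - mS[s] + G) ** 2 for i, s in product(A, S))
    ss_BS = a * sum((mBS[j][s] - mB[j] - mS[s] + G) ** 2 for j, s in product(B, S))
    ss_ABS = sum(
        (y[s][i][j] - mAB[i][j] - mAS[i][s] - mBS[j][s] + mA[i] + mB[j] + mS[s] - G) ** 2
        for s, i, j in product(S, A, B))

    def f_ratio(ss_eff, df_eff, ss_err, df_err):
        return (ss_eff / df_eff) / (ss_err / df_err), df_eff, df_err

    return {
        "A": f_ratio(ss_A, a - 1, ss_AS, (a - 1) * (n - 1)),
        "B": f_ratio(ss_B, b - 1, ss_BS, (b - 1) * (n - 1)),
        "AxB": f_ratio(ss_AB, (a - 1) * (b - 1), ss_ABS, (a - 1) * (b - 1) * (n - 1)),
    }
```

With two levels of factor A, its F ratio equals the square of a paired t statistic on the per-subject condition means, which makes a convenient sanity check.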

7 Discussion

Participants in the Rotation condition were more accurate in the pointing task than in the Static condition. However, the difference did not reach significance at the 0.05 level. We plan to continue the experiments with a larger number of participants to see whether a significant result will be achieved.

This experiment was based on those reported in [7]. Rossano and Warren did not allow rotation as such, but we assumed that when participants in our experiments rotated the map into alignment, their results would be similar to those in Rossano and Warren’s aligned condition. However, a direct comparison is difficult because of the way Rossano and Warren treated their data: they classified responses as either correct or incorrect, depending on whether the response was within ±30° of the target. A superficial examination does appear to show differences, with participants in our experiment scoring more highly in all conditions. This warrants further investigation.
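One way to make the comparison more direct would be to rescore continuous pointing errors in Rossano and Warren’s binary form. A minimal sketch; treating an error of exactly ±30° as correct is an assumption, since the boundary case is not specified here.

```python
def proportion_correct(errors_deg, tolerance_deg=30.0):
    """Score each response as correct if its angular error, wrapped into
    [-180, 180), lies within +/- tolerance_deg (boundary counted as correct,
    an assumption), and return the proportion of correct responses."""
    if not errors_deg:
        raise ValueError("no responses to score")
    hits = sum(1 for e in errors_deg
               if abs((e + 180.0) % 360.0 - 180.0) <= tolerance_deg)
    return hits / len(errors_deg)

print(proportion_correct([0, 25, -30, 45, 179]))  # 0.6
```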

8 Further Work

We also collected further data in this experiment, relating to participants’ sense of direction, their ability to recreate in tactile form the maps they had used, and their subjective preferences. These have yet to be analyzed. Specifically, where participants made errors, it may be possible to use their map reconstructions to identify the nature of the error, and thereby to see whether it is possible to promote less error-prone cognitive maps. More reliable results will also be obtained if we can run more participants.

As mentioned above, these experiments are only a small part of the development of the T4. In the long run we plan to develop it into a multimodal tool, and to experiment with the use of auditory environmental cues to help users in navigation tasks. Given that the results of these simple experiments on rotation are encouraging, we intend to experiment with spatialized sounds that are linked to the map orientation.

It is envisaged that a map of this form would be used in preparation for visiting a new, unfamiliar location. Experiments to test whether learning from such a map transfers to the real world will clearly be needed.

9 Conclusions

We have experimented with a multimodal, non-visual map which has the novel facility that it can be rotated to match orientation in the real world. Our results (while not achieving statistical significance) suggest that errors in an orientation/pointing task are smaller when map rotation is used than in a static condition. This encourages further development of the system.


References

1. Brock, A., et al.: Usage of multimodal maps for blind people: why and how. In: ACM International Conference on Interactive Tabletops and Surfaces (2010)

2. Hamid, N.N.A., Edwards, A.D.N.: Facilitating route learning using interactive audio-tactile maps for blind and visually impaired people. In: Extended Abstracts CHI 2013, pp. 37–42. ACM Press (2013)

3. Giudice, N., Betty, M.R., Loomis, J.M.: Functional equivalence of spatial images from touch and vision: evidence from spatial updating in blind and sighted individuals. J. Exp. Psychol. Learn. Mem. Cogn. 37(3), 621–634 (2011)

4. Levine, M., Jankovic, I.N., et al.: Principles of spatial problem solving. J. Exp. Psychol. Gen. 111, 157–175 (1982)

5. MacEachren, A.M.: Learning spatial information from maps: can orientation-specificity be overcome? Prof. Geogr. 44(4), 431–443 (1992)

6. Paladugu, D.A., Wang, Z., Li, B.: On presenting audio-tactile maps to visually impaired users for getting directions. In: Extended Abstracts CHI 2010, pp. 3955–3960. ACM (2010)

7. Rossano, M.J., Warren, D.H.: The importance of alignment in blind subjects’ use of tactual maps. Perception 18(6), 805–816 (1989)

8. Rossano, M.J., Warren, D.H.: Misaligned maps lead to predictable errors. Perception 18, 215–229 (1989)

9. Shepard, R.N., Hurwitz, S.: Upward direction, mental rotation, and discrimination of left and right turns in maps. Cognition 18, 161–193 (1984)

10. Wang, W., Li, B., Hedgpeth, T., Haven, T.: Instant tactile-audio map: enabling access to digital maps for people with visual impairment. In: ACM SIG ASSETS (2009)

11. Millar, S.: Understanding and Representing Space: Theory and Evidence from Studies with Blind and Sighted Children. Oxford University Press, New York (1994)

12. Halko, M.A., Connors, E.C., Sánchez, J., Merabet, L.B.: Real world navigation independence in the early blind correlates with differential brain activity associated with virtual navigation. Hum. Brain Mapp. 35, 2768–2778 (2014). doi:10.1002/hbm.2236

13. Eriksson, Y., Strucel, M.: A Guide to the Production of Tactile Graphics on Swellpaper. The Swedish Library of Talking Books and Braille, Stockholm (1995)

14. Pielot, M., Henze, N., Heuten, W., Boll, S.: Tangible user interface for the exploration of auditory city maps. In: O, I., Brewster, S. (eds.) HAID 2007. LNCS, vol. 4813, pp. 86–97. Springer, Heidelberg (2007)


© 2015 IFIP International Federation for Information Processing


Edwards, A.D.N., Hamid, N.N.A., Petrie, H. (2015). Exploring Map Orientation with Interactive Audio-Tactile Maps. In: Abascal, J., Barbosa, S., Fetter, M., Gross, T., Palanque, P., Winckler, M. (eds) Human-Computer Interaction – INTERACT 2015. INTERACT 2015. Lecture Notes in Computer Science(), vol 9296. Springer, Cham. https://doi.org/10.1007/978-3-319-22701-6_6


DOI: https://doi.org/10.1007/978-3-319-22701-6_6


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-22700-9

Online ISBN: 978-3-319-22701-6

eBook Packages: Computer Science (R0)