Abstract

Reactive synthesis techniques based on constructing the winning region of the system have been shown to work well in many cases but suffer from state explosion in others. A different approach, proposed recently, applies SAT solvers in a counterexample guided framework to solve the synthesis problem. However, this method is limited to synthesising systems that execute for a bounded number of steps and is incomplete for synthesis with unbounded safety and reachability objectives. We present an extension of this technique to unbounded synthesis. Our method applies Craig interpolation to abstract game trees produced by counterexample guided search in order to construct a monotonic sequence of may-losing regions. Experimental results based on SYNTCOMP 2015 competition benchmarks show this to be a promising alternative that solves some previously intractable instances.

A. Legg—NICTA is funded by the Australian Government as represented by the Department of Broadband, Communications and the Digital Economy and the Australian Research Council through the ICT Centre of Excellence program.

N. Narodytska and L. Ryzhyk—Work completed at Carnegie Mellon University.

You have full access to this open access chapter, Download conference paper PDF

Similar content being viewed by others

Keywords

These keywords were added by machine and not by the authors. This process is experimental and the keywords may be updated as the learning algorithm improves.

1 Introduction

Reactive systems are ubiquitous in real-world problems such as circuit design, industrial automation, or device drivers. Automatic synthesis can provide a correct by construction controller for a reactive system from a specification. Reactive synthesis is formalised as a game between the controller and its environment. In this work we focus on safety games, in which the controller must prevent the environment from forcing the game into an error state.

The reactive synthesis problem is EXPTIME-complete (when starting from a symbolic representation of the game graph) so naïve algorithms are infeasible on anything but simple systems. There are several techniques that aim to mitigate this complexity by representing states and transitions of the system symbolically [4, 17, 19]. These techniques incrementally construct a symbolic representation of the set of discovered winning states of the game. The downside of this approach is that keeping track of all discovered winning states can lead to a space explosion even when using efficient symbolic representation such as BDDs or CNFs.

An alternative approach, proposed by Narodytska et al. [18], is to eschew states and focus on runs of the game. The method works by exploring a subset of the concrete runs of the game and proving that these runs can be generalised into a winning strategy on behalf of one of the players. In contrast to other existing synthesis methods, it does not store, in either symbolic or explicit form, the set of winning states. Instead, it uses counterexample guided backtracking search to identify a small subset of runs that are sufficient to solve the game.

This method has been shown to outperform BDDs on certain classes of games; however it suffers from an important limitation: it is only able to solve games with a bounded number of rounds. In case of safety games, this means proving that the controller can keep the game within the safe region for a bounded number of steps. This is insufficient in most practical situations that require unbounded realisability.

In this paper, we extend the method by Narodytska et al. [18] to unbounded safety games. To this end we enhance the method with computational learning: every time the search algorithm discovers a new counterexample, the learning procedure analyzes the counterexample, extracting a subset of states winning for one of the players from it. The learning procedure ensures that reaching a fixed point in these sets is sufficient to establish unbounded realisability. Our method can be seen as a hybrid between the counterexample guided algorithm by Narodytska et al. and methods based on losing set computation: we use the former to guide the search, while we rely on the latter to ensure convergence.

We evaluate our method on the benchmarks of the 2015 synthesis competition (SYNTCOMP’15). While our solver solves fewer total instances than competitors, it solves the largest number of unique instances, i.e., instances that could not be solved by any other sequential solver. These results confirm that there exist classes of problems that are hard for traditional synthesis techniques, but can be efficiently solved by our method. While further performance improvements are clearly needed, in its current state the method may be a worthwhile addition to a portfolio solver.

Section 2 outlines the original bounded synthesis algorithm. In Sect. 3 we describe and prove the correctness of our extension of the algorithm to unbounded games. In the following sections we evaluate our methodology, and compare our approach to other synthesis techniques.

2 Background

A safety game, \(G = \langle X, U, C, \delta , I, E \rangle \), is defined over boolean state variables X, uncontrollable action variables U, and controllable action variables C. We use \(\mathcal {X}\), \(\mathcal {U}\), and \(\mathcal {C}\) to denote sets of valuations of variables X, U, and C respectively. I is the initial state of the game given as a valuation of state variables. E(X) is the set of error states represented by its characteristic formula. The transition relation \(\delta (X, U, C, X')\) of the game is a boolean formula that relates current state and action to the set of possible next states of the game. We assume deterministic games, where \(\delta (x,u,c,x'_1) \wedge \delta (x,u,c,x'_2) \implies (x'_1=x'_2)\).

At every round of the game, the environment picks an uncontrollable action, the controller responds by choosing a controllable action and the game transitions into a new state according to \(\delta \). A run of a game \((x_0, u_0, c_0), (x_1, u_1, c_1) \dots (x_n, u_n, c_n)\) is a chain of state and action pairs s.t. \(\delta (x_k, u_k, c_k, x_{k+1})\). A run is winning for the controller if \(x_0 = I \wedge \forall i \in \{1..n\} (\lnot E(x_i))\). In a bounded game with maximum bound \(\kappa \) all runs are restricted to length \(\kappa \), whereas unbounded games consider runs of infinite length. Since we consider only deterministic games, a run is uniquely described by a list of assignments to U and C.

A controller strategy \(\pi ^c : \mathcal {X} \times \mathcal {U} \rightarrow \mathcal {C}\) is a mapping of states and uncontrollable inputs to controllable actions. A controller strategy is winning in a bounded game of maximum bound \(\kappa \) if all runs \((x_0, u_0, \pi ^c(x_0, u_0)), (x_1, u_1, \pi ^c(x_1, u_1)) \dots \) \((x_n, u_n, \pi ^c(x_n, u_n))\) are winning. Bounded realisability is the problem of determining the existence of such a strategy for a bounded game.

An environment strategy \(\pi ^e : \mathcal {X} \rightarrow \mathcal {U}\) is a mapping of states to uncontrollable actions. A bounded run is winning for the environment if \(x_0 = I \wedge \exists i \in \{1..n\} (E(x_i))\) and an environment strategy is winning for a bounded game if all runs \((x_0, \pi ^e(x_0), c_0), (x_1, \pi ^e(x_1), c_1) \dots (x_n, \pi ^e(x_n), c_n)\) are winning for the environment. Safety games are zero sum, therefore the existence of a winning controller strategy implies the nonexistence of a winning environment strategy and vice versa.

2.1 Counterexample Guided Bounded Synthesis

We review the bounded synthesis algorithm by Narodytska et al. [18], which is the main building block for our unbounded algorithm.

Example

We introduce a running example to assist the explanation. We consider a simple arbiter system in which the environment makes a request for a number of resources (1 or 2), and the controller may grant access to up to two resources. The total number of requests grows each round by the number of environment requests and shrinks by the number of resources granted by the controller in the previous round. The controller must ensure that the number of unhandled requests does not accumulate to more than 2. Figure 1 shows the variables (Fig. 1a), the initial state of the system (Fig. 1b), and the formulas for computing next-state variable assignments (Fig. 1c) for this example. We use primed identifiers to denote next-state variables and curly braces to define the domain of a variable.

This example is the \(n=2\) instance of the more general problem of an arbiter of n resources. For large values of n, the set of winning states has no compact representation, which makes the problem hard for BDD solvers. In Sect. 3 we will outline how the unbounded game can be solved without enumerating all winning states.

Our bounded synthesis algorithm constructs abstractions of the game that restrict actions available to one of the players. Specifically, we consider abstractions represented as trees of actions, referred to as abstract game trees (AGTs). Figure 2b shows an example abstract game tree restricting the environment (abstract game trees restricting the controller are similar). In the abstract game, the controller can freely choose actions whilst the environment is required to pick actions from the tree. After reaching a leaf, the environment continues playing unrestricted. The tree in Fig. 2b restricts the first environment action to request=1. At the leaf of the tree the game continues unrestricted.

The root of the tree is annotated by the initial state s of the abstract game and the bound k on the number of rounds. We denote \(\textsc {nodes}(T)\) the set of all nodes of a tree T, \(\textsc {leaves}(T)\) the subset of leaf nodes. For edge e, \(\textsc {action}(e)\) is the action that labels the edge, and for node n, \(\textsc {height}(k, n)\) is the distance from n to the last round of a game bounded to k rounds. \(\textsc {height}(k, T)\) is the height of the root node of the tree. For node n of the tree, \(\textsc {succ}(n)\) is the set of pairs \(\langle e, n' \rangle \) where \(n'\) is a child node of n and e is the edge connecting n and \(n'\).

Given an environment (controller) abstract game tree T a partial strategy \(Strat: \textsc {nodes}(T) \rightarrow \mathcal {C}\) (\(Strat: \textsc {nodes}(T) \rightarrow \mathcal {U}\)) labels each node of the tree with the controller’s (environment’s) action to be played in that node. Given a partial strategy Strat, we can map each leaf l of the abstract game tree to \(\langle s',i'\rangle =\textsc {outcome}(\langle s, i\rangle , Strat, l)\) obtained by playing all controllable and uncontrollable actions on the path from the root to the leaf. An environment (controller) partial strategy is winning against T if all its outcomes are states that are winning for the environment (controller) in the concrete game.

Example:

Intuition behind the algorithm. We present the intuition behind our bounded synthesis method by applying its simplified version to the running example. We begin by finding a trace of length k (here we consider \(k=3\)) that is winning for the controller, i.e., that starts from the initial state and avoids the error set for three game rounds (see Fig. 2a). We use a SAT solver to find such a trace, precisely as one would do in bounded model checking. Given this trace we make an initial conjecture that any trace starting with action gr0=1 gr1=0 is winning for the controller. This conjecture is captured in the abstract game tree shown in Fig. 2b. We validate this conjecture by searching for a counterexample trace that reaches an error state with the first controller action fixed to gr0=1 gr1=0. Such a trace, that refutes the conjecture, is shown in Fig. 2c. In this trace, the environment wins by playing req=2 in the first round. This move represents the environment’s partial strategy against the abstract game tree in Fig. 2b. This partial strategy is shown in Fig. 2d.

Next we strengthen the abstract game tree taking this partial strategy into account. To this end we again use a SAT solver to find a trace where the controller wins while the environment plays according to the partial strategy. In the resulting trace (Fig. 3a), the controller plays gr0=1 gr1=1 in the second round. We refine the abstract game tree using this move as shown in Fig. 3b. The environment’s partial strategy was to make two requests in the first round, to which the controller responds by now granting an additional two resources in the second round.

When the controller cannot refine the tree by extending existing branches, it backtracks and creates new branches. Eventually, we obtain the abstract game tree shown in Fig. 3c for which there does not exist a winning partial strategy on behalf of the environment. We conclude that the bounded game is winning for the controller.

The full bounded synthesis algorithm is more complicated: upon finding a candidate partial strategy on behalf of player p against abstract game tree T, it first checks whether the strategy is winning against T. By only considering such strong candidates, we reduce the number of refinements needed to solve the game. To this end, the algorithm checks whether each outcome of the candidate strategy is a winning state for \(\textsc {opponent}(p)\) by recursively invoking the synthesis algorithm on behalf of the opponent. Thus, our bounded synthesis algorithm can be seen as running two competing solvers, for the controller and for the environment.

The full procedure is illustrated in Algorithm 1. The algorithm takes a concrete game G with maximum bound \(\kappa \) as an implicit argument. In addition, it takes a player p (controller or environment), state s, bound k and an abstract game tree T and returns a winning partial strategy for p, if one exists. The initial invocation of the algorithm takes the initial state I, bound \(\kappa \) and an empty abstract game tree \(\emptyset \). Initially the solver is playing on behalf of the environment since that player takes the first move in every game round. The empty game tree does not constrain opponent moves, hence solving such an abstraction is equivalent to solving the original concrete game.

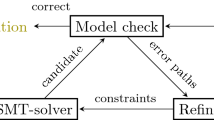

The algorithm is organised as a counterexample-guided abstraction refinement (CEGAR) loop. The first step of the algorithm uses the findCandidate function, described below, to come up with a candidate partial strategy that is winning when the opponent is restricted to T. If it fails to find a strategy, this means that no winning partial strategy exists against the opponent playing according to T. If, on the other hand, a candidate partial strategy is found, we need to verify if it is indeed winning for the abstract game T.

The verify procedure searches for a spoiling counterexample strategy in each leaf of the candidate partial strategy by calling solveAbstract for the opponent. The dual solver solves games on behalf of the opponent player.

If the dual solver can find no spoiling strategy at any of the leaves, then the candidate partial strategy is a winning one. Otherwise, verify returns the move used by the opponent to defeat a leaf of the partial strategy, which is appended to the corresponding node in T in order to refine it in line (9).

We solve the refined game by recursively invoking solveAbstract on it. If no partial winning strategy is found for the refined game then there is also no partial winning strategy for the original abstract game, and the algorithm returns a failure. Otherwise, the partial strategy for the refined game is projected on the original abstract game by removing the leaves introduced by refinements. The resulting partial strategy becomes a candidate strategy to be verified at the next iteration of the loop. In the worst case the loop terminates after all actions in the game are refined into the abstract game.

The CEGAR loop depends on the ability to guess candidate partial strategies in findCandidate. For this purpose we use the heuristic that a partial strategy may be winning if each outcome of the strategy can be extended to a run of the game that is winning for the current player. Clearly, if such a partial strategy does not exist then no winning partial strategy can exist for the abstract game tree. We can formulate this heuristic as a SAT query, which is constructed recursively by \(\textsc {treeFormula}\) (for the controller) or  (for the environment) in Algorithm 2.

(for the environment) in Algorithm 2.

The tree is first extended to the maximum bound with edges that are labeled with arbitrary opponent actions (Algorithm 1, line 14). For each node in the tree, new SAT variables are introduced corresponding to the state (\(X_T\)) and action (\(U_T\) or \(C_T\)) variables of that node. Additional variables for the opponent actions in the edges of T are introduced (\(U_e\) or \(C_e\)) and set to \(\textsc {action}(e)\). The state and action variables of node n are connected to successor nodes \(\textsc {succ}(n)\) by an encoding of the transition relation and constrained to the winning condition of the player.

3 Unbounded Synthesis

Bounded synthesis can be used to prove the existence of a winning strategy for the environment on the unbounded game by providing a witness. For the controller, the strongest claim that can be made is that the strategy is winning as long as the game does not extend beyond the maximum bound.

It is possible to set a maximum bound such that all runs in the unbounded game will be considered. The naïve approach is to use size of the state space as the bound (\({|\mathcal {X}|}\)) so that all states may be explored by the algorithm. A more nuanced approach is to use the diameter of the game [3], which is the smallest number d such that for any state x there is a path of length \({\le }d\) to all other reachable states. However, the diameter is difficult to estimate and can still lead to infeasibly long games.

We instead present an approach that iteratively solves games of increasing bound while learning bad states from abstract games using interpolation. We show that reaching a fixed point in learned states is sufficient for completeness.

3.1 Learning States with Interpolants

We extend the bounded synthesis algorithm to learn states losing for one of the players from failed attempts to find candidate strategies. The learning procedure kicks in whenever findCandidate cannot find a candidate strategy for an abstract game tree. We can learn additional losing states from the tree via interpolation. This is achieved in lines 18–20 in Algorithm 1, enabled in the unbounded version of the algorithm, which invoke learn or  to learn controller or environment losing states respectively (Algorithm 3).

to learn controller or environment losing states respectively (Algorithm 3).

Example:

Why we use interpolants. Consider node n in Fig. 4a. At this node there are two controller actions that prevent the environment from forcing the game into an error state in one game round. We want to use this tree to learn the states from which the controller can win playing one of these actions.

One option is using a BDD solver, working backwards from the error set, to find all losing states. One iteration of this operation on our example would give the set: \(\texttt {nrequests} = 3 \vee (\texttt {nrequests} = 2 \wedge ( \texttt {resource0} = 0 \vee \texttt {resource1} = 0)) \vee (\texttt {nrequests} = 1 \wedge (\texttt {resource0} = 0 \wedge \texttt {resource1} = 0))\). In the general case there is no compact representation of the losing set, so we try to avoid computing it by employing interpolation instead. The benefit of interpolation is that it allows approximating the losing states efficiently by obtaining an interpolant from a SAT solver.

Given two formulas \(F_1\) and \(F_2\) such that \(F_1 \wedge F_2\) is unsatisfiable, it is possible to construct a Craig interpolant [9] \(\mathcal {I}\) such that \(F_1 \rightarrow \mathcal {I}\), \(F_2 \wedge \mathcal {I}\) is unsatisfiable, and \(\mathcal {I}\) refers only to the intersection of variables in \(F_1\) and \(F_2\). An interpolant can be constructed efficiently from a resolution proof of the unsatisfiability of \(F_1 \wedge F_2\) [20].

We choose a non-leaf node n of T with maximal depth, i.e., a node whose children are leafs (Algorithm 3, line 3). We then split the tree at n such that both slices \(T_1\) and \(T_2\) contain a copy of n (line 4). Figure 4b shows \(T_1\), which contains all of T except n’s children, and \(T_2\) (Fig. 4c), which contains only n and its children. There is no candidate strategy for T so  is unsatisfiable. By construction,

is unsatisfiable. By construction,  and hence

and hence  is also unsatisfiable.

is also unsatisfiable.

We construct an interpolant with \(F_1 = s(X_T) \wedge \textsc {treeFormula}(k, T_1)\) and \(F_2 = \textsc {treeFormula}(k, T_2)\) (line 5). The only variables shared between \(F_1\) and \(F_2\) are the state variable copies belonging to node n. By the properties of the interpolant, \(F_2 \wedge \mathcal {I}\) is unsatisfiable, therefore all states in \(\mathcal {I}\) are losing against abstract game tree \(T_2\) in Fig. 4c. We also know that \(F_1 \rightarrow \mathcal {I}\), thus \(\mathcal {I}\) contains all states reachable at n by following \(T_1\) and avoiding error states.

Example

At node n, the interpolant \({\texttt {nrequests}} = 1 \wedge {\texttt {resource1}} = 1\) captures the information we need. Any action by the environment followed by one of the controller actions at n will be winning for the controller.

We have discovered a set \(\mathcal {I}\) of states losing for the environment. Environment-losing states are only losing for a particular bound: given that there does not exist an environment strategy that forces the game into an error state in k rounds or less; there may still exist a longer environment-winning strategy. We therefore record learned environment-losing states along with associated bounds. To this end, we maintain a conceptually infinite array of sets \(B^m[k]\) that are may-losing for the controller, indexed by bound k. \(B^m[k]\) are initialised to E for all k. Whenever an environment-losing set \(\mathcal {I}\) is discovered for a node n with bound \(\textsc {height}(k, n)\) in line 13 of Algorithm 3, this set is subtracted from \(B^m[i]\), for all i less than or equal to the bound (lines 14–16).

The  function is modified for the unbounded solver (Algorithm 4) to constrain the environment to the appropriate \(B^m\). This enables further interpolants to be constructed by the learning procedure recursively splitting more nodes from \(T_1\) (Algorithm 3, line 7) since the states that are losing to \(T_2\) are no longer contained in \(B^m\).

function is modified for the unbounded solver (Algorithm 4) to constrain the environment to the appropriate \(B^m\). This enables further interpolants to be constructed by the learning procedure recursively splitting more nodes from \(T_1\) (Algorithm 3, line 7) since the states that are losing to \(T_2\) are no longer contained in \(B^m\).

Learning of states losing from the controller is similar (learn in Algorithm 3). The main difference is that environment-losing states are losing for all bounds. Therefore we record these states in a single set \(B^M\) of must-losing states (Algorithm 3, line 6). This set is initialised to the error set E and grows as new losing states are discovered. The modified  function (Algorithm 4) blocks must-losing states, which also allows for recursive learning over the entire tree.

function (Algorithm 4) blocks must-losing states, which also allows for recursive learning over the entire tree.

3.2 Main Synthesis Loop

Figure 5 shows the main loop of the unbounded synthesis algorithm. The algorithm invokes the modified bounded synthesis procedure with increasing bound k until the initial state is in \(B^M\) (environment wins) or \(B^m\) reaches a fixed point (controller wins). We prove correctness in the next section.

3.3 Correctness

We define two global invariants of the algorithm. The may-invariant states that sets \(B^m[i]\) grow monotonically with i and that each \(B^m[i+1]\) overapproximates the states from which the environment can force the game into \(B^m[i]\). We call this operation Upre, the uncontrollable predecessor. So the may-invariant is:

The must-invariant guarantees that the must-losing set \(B^M\) is an underapproximation of the actual losing set B:

Correctness of \(\textsc {solveUnbounded}\) follows from these invariants. The must-invariant guarantees that the environment can force the game into an error state from \(B^M\), therefore checking whether the initial state is in \(B^M\) (as in line 5) is sufficient to return unrealisable. The may-invariant tells us that if \(B^m[i] \equiv B^m[i+1]\) (line 6) then \(Upre(B^m[i]) \subseteq B^m[i]\), i.e. \(B^m[i]\) overapproximates the winning states for the environment. We know that \(I \not \in B^m[k]\) due to the post-condition of checkBound, and since the may-invariant tells us that \(B^m\) is monotonic then I must not be in \(B^m[i]\). If \(I \not \in B^m[i]\) then I is not in the winning states for the environment and the controller can always win from I.

Both invariants trivially hold after \(B^m\) and \(B^M\) have been initialised in the beginning of the algorithm. The sets \(B^m\) and \(B^M\) are only modified by the functions learn and  . Below we prove that

. Below we prove that  maintains the invariants. The proof of learn is similar.

maintains the invariants. The proof of learn is similar.

3.4 Proof of \(\overline{\text{ learn }}\)

We prove that postconditions of  are satisfied assuming that its preconditions hold.

are satisfied assuming that its preconditions hold.

Lines (11 and 12) splits the tree T into \(T_1\) and \(T_2\), such that \(T_2\) has depth 1. Consider formulas  and

and  . These formulas only share variables \(X_n\). Their conjunction \(F_1 \wedge F_2\) is unsatisfiable, as by construction any solution of \(F_1 \wedge F_2\) also satisfies

. These formulas only share variables \(X_n\). Their conjunction \(F_1 \wedge F_2\) is unsatisfiable, as by construction any solution of \(F_1 \wedge F_2\) also satisfies  , which is unsatisfiable (precondition (b)). Hence the interpolation operation is defined for \(F_1\) and \(F_2\).

, which is unsatisfiable (precondition (b)). Hence the interpolation operation is defined for \(F_1\) and \(F_2\).

Intuitively, the interpolant computed in line (13) overapproximates the set of states reachable from s by following the tree from the root node to n, and underapproximates the set of states from which the environment loses against tree \(T_2\).

Formally, \(\mathcal {I}\) has the property \(\mathcal {I}\wedge F_2 \equiv \bot \). Since \(T_2\) is of depth 1, this means that the environment cannot force the game into \(B^m[\textsc {height}(k, n)-1]\) playing against the counterexample moves in \(T_2\). Hence, \(\mathcal {I}\cap Upre(B^m[\textsc {height}(k, n)-1]) = \emptyset \). Furthermore, since the may-invariant holds, \(\mathcal {I}\cap Upre(B^m[i]) = \emptyset \), for all \(i < \textsc {height}(k, n)\). Hence, removing \(\mathcal {I}\) from all \(B^m[i], i\le \textsc {height}(k, n)\) in line (15) preserves the may-invariant, thus satisfying the first post-condition.

Furthermore, the interpolant satisfies \(F_1 \rightarrow \mathcal {I}\), i.e., any assignment to \(X_n\) that satisfies  also satisfies \(\mathcal {I}\). Hence, removing \(\mathcal {I}\) from \(B^m[\textsc {height}(k, n)]\) makes

also satisfies \(\mathcal {I}\). Hence, removing \(\mathcal {I}\) from \(B^m[\textsc {height}(k, n)]\) makes  unsatisfiable, and hence all preconditions of the recursive invocation of

unsatisfiable, and hence all preconditions of the recursive invocation of  in line (17) are satisfied.

in line (17) are satisfied.

At the second last recursive call to  , tree \(T_1\) is empty, n is the root node,

, tree \(T_1\) is empty, n is the root node,  ; hence \(s(X_T) \wedge \)

; hence \(s(X_T) \wedge \)  \((k, T_1) \equiv s(X_T) \wedge B^m[\textsc {height}(k, T_1)](X^T) \equiv \bot \). Thus the second postcondition of

\((k, T_1) \equiv s(X_T) \wedge B^m[\textsc {height}(k, T_1)](X^T) \equiv \bot \). Thus the second postcondition of  holds.

holds.

The proof of learn is similar to the above proof of learn. An interpolant constructed from \(F_1=s(X_T) \wedge \textsc {treeFormula}(k, T_1)\) and \(F_2 = \textsc {treeFormula}(k, T_2)\) has the property \(\mathcal {I}\wedge F_2 \equiv \bot \) and the precondition ensures that the controller is unable to force the game into \(B^M\) playing against the counterexample moves in \(T_2\). Thus adding \(\mathcal {I}\) to \(B^M\) maintains the must-invariant satisfying the first postcondition.

Likewise, in the second last recursive call of learn with the empty tree \(T_1\) and root node n: \(\textsc {treeFormula}(k, T_1) \equiv \lnot B^M(X_T)\). Hence \(s(X_T) \wedge \textsc {treeFormula}(k, T_1) \equiv s(X_T) \wedge \lnot B^M(X_T) \equiv \bot \). Therefore \(s(X_T) \wedge B^M(X_T) \not \equiv \bot \), the second postcondition, is true.

3.5 Proof of Termination

We must prove that \(\textsc {checkBound}\) terminates and that upon termination its postcondition holds, i.e., state I is removed from \(B^m[\kappa ]\) if there is a winning controller strategy on the bounded safety game of maximum bound \(\kappa \) or it is added to \(B^M\) otherwise. Termination follows from completeness of counterexample guided search, which terminates after enumerating all possible opponent moves in the worst case.

Assume that there is a winning strategy for the controller at bound \(\kappa \). This means that at some point the algorithm discovers a counterexample tree of bound \(\kappa \) for which the environment cannot force into E. The algorithm then invokes the  method, which removes I from \(B^m[\kappa ]\). Alternatively, if there is a winning strategy for the environment at bound \(\kappa \) then a counterexample losing for the controller will be found. Subsequently learn will be called and I added to \(B^M\).

method, which removes I from \(B^m[\kappa ]\). Alternatively, if there is a winning strategy for the environment at bound \(\kappa \) then a counterexample losing for the controller will be found. Subsequently learn will be called and I added to \(B^M\).

3.6 Optimisation: Generalising the Initial State

This optimisation allows us to learn may and must losing states faster. Starting with a larger set of initial states we increase the reachable set and hence increase the number of states learned by interpolation. This optimisation requires a modification to \(\textsc {solveAbstract}\) to handle sets of states, which is not shown.

The optimisation is relatively simple and is inspired by a common greedy heuristic for minimising \({\texttt {unsat}}\) cores. Initial state I assigns a value to each variable in X. If the environment loses \(\langle I, k \rangle \) then we attempt to solve for a generalised version of I by removing one variable assignment at a time. If the environment loses from the larger set of states then we continue generalising. In this way we learn more states by increasing the reachable set. In our benchmarks we have observed that this optimisation is beneficial on the first few iterations of checkBound.

4 Evaluation

We evaluate our approach on the benchmarks of the 2015 synthesis competition (SYNTCOMP’15). Each benchmark comprises of controllable and uncontrollable inputs to a circuit that assigns values to latches. One latch is configured as the error bit that determines the winner of the safety game. The benchmark suite is a collection of both real-world and toy specifications including generalised buffers, AMBA bus controllers, device drivers, and converted LTL formulas. Descriptions of many of the benchmark families used can be found in the 2014 competition report [14].

The implementation of our algorithm uses Glucose [2] for SAT solving and Periplo [21] for interpolant generation. We intend to open source the tool for SYNTCOMP’16. The benchmarks were run on a cluster of Intel Quad Core Xeon E5405 2 GHz CPUs with 16 GB of memory. The solvers were allowed exclusive access to a node for one hour to solve an instance.

The results of our benchmarking are shown, along with the synthesis competition results [1], in Table 1. The competition was run on Intel Quad Core 3.2 GHz CPUs with 32 GB of memory, also on isolated nodes for one hour per instance. The competition results differ significantly from our own benchmarks due to this more powerful hardware. For our benchmarks we report only the results for solvers we were able to run on our cluster. The unique column lists the number of instances that only that tool could solve in the competition (excluding our solver). In brackets is the number of instances that only that tool could solve, including our solver.

Our implementation was able to solve 103 out of the 250 specification in the allotted time, including 12 instances that were not solved by any other solver in the sequential track of the competition. The unique instances we solved are listed in Table 2.

Five of the instances unique to our solver are device driver instances and another five are from the stay family. This supports the hypothesis that different game solving methodologies perform better on certain classes of specifications.

We also present a cactus plot of the number of instances solved over time (Fig. 5). We have plotted the best configuration of each solver we benchmarked. The solvers shown are Demiurge [4], the only SAT-based tool in the competition, the winner of the sequential realisability track Simple BDD Solver 2 [22], and AbsSynthe (seq3) [6].

Whilst our solver is unable to solve as many instances as other tools, it was able to solve more unique instances than any solver in the competition. This confirms that our methodology is able to fill gaps in a state of the art synthesis toolbox by more efficiently solving instances that are hard for other techniques. For this reason our solver would be a worthwhile addition to a portfolio solver. In the parallel track of the competition, Demiurge uses a suite of 3 separate but communicating solvers. The solvers relay unsafe states to one another, which is compatible with the set \(B^M\) in our solver. This technique can already solve each of the unique instances solved by our solver but there may still be value in the addition of this work to the portfolio. It remains future work to explore this possibility.

5 Related Work

Synthesis of safety games is a thoroughly explored area of research with most efforts directed toward solving games with BDDs [7] and abstract interpretation [6, 22]. Satisfiability solving has been used previously for synthesis in a suite of methods proposed by Bloem et al. [4]. The authors propose employing competing SAT solvers to learn clauses representing bad states, which is similar to our approach but does not unroll the game. They also suggest QBF solver, template-based, and Effectively Propositional Logic (EPR) approaches.

SAT-based bounded model checking approaches that unroll the transition relation have been extended to unbounded by using conflicts in the solver [15], or by interpolation [16]. However, there are no corresponding adaptations to synthesis.

Incremental induction [5] is another technique for unbounded model checking that inspired several approaches to synthesis including the work presented here. Morgenstern et al. [17] proposed an technique that computes sets of states that overapproximate the losing states (similar to our \(B^m\)) and another set of winning states (similar to the negation of \(B^M\)). Their algorithm maintains a similar invariant over the sets of losing states as our approach and has the same termination condition. It differs in how the sets are computed, which it does by inductively proving the number of game rounds required by the environment to win from a state. Chiang and Jiang [8] recently proposed a similar approach that focusses on computing the winning region for the controller forwards from the initial state in order to take advantage of reachability information and bad transition learning without needing to discard learnt clauses.

There are different approaches to bounded synthesis than the one described here. The authors of [13] suggest a methodology directly inspired by bounded model checking and it has been adapted to symbolic synthesis [11]. Lazy synthesis [12] is a counterexample guided approach to bounded synthesis that refines an implementation for the game instead of an abstraction of it.

The original bounded synthesis algorithm of Narodytska et al. [18] solves realisability without constructing a strategy. In [10] the realisability algorithm is extended with strategy extraction. The technique relies on interpolation over abstract game trees to compute the winning strategy. In the present work we use interpolation in a different way in order to learn losing states of the game. In addition, this method could be easily adapted to the unbounded realisability algorithm presented here to generate unbounded strategies.

6 Conclusion

We presented an extension to an existing bounded synthesis technique that promotes it to unbounded safety games. The approach taken as whole differs from other synthesis techniques by combining counterexample guided game solving with winning set computation. Intuitively, the abstraction refinement framework of the bounded synthesis algorithm restricts the search to consider only moves that may lead to winning strategies. By constructing sets of bad states during this search we aim to consider only states that are relevant to a winning strategy while solving the unbounded game.

The results show that our approach is able to solve more unique instances than other solvers by performing well on certain classes of games that are hard for other methodologies.

Future work includes incorporating the solver into a parallel suite of communicating solvers [4]. There is evidence that different solvers perform well on different classes of games. Thus we hypothesise that the way forward for synthesis tools is to combine the efforts of many different techniques.

References

Syntcomp 2015 results. http://syntcomp.cs.unisaarland.de/syntcomp2015/experiments/. Accessed 29 Jan 2016

Audemard, G., Simon, L.: Lazy clause exchange policy for parallel SAT solvers. In: Sinz, C., Egly, U. (eds.) SAT 2014. LNCS, vol. 8561, pp. 197–205. Springer, Heidelberg (2014)

Biere, A., Cimatti, A., Clarke, E., Zhu, Y.: Symbolic model checking without BDDs. In: Cleaveland, W.R. (ed.) TACAS 1999. LNCS, vol. 1579, pp. 193–207. Springer, Heidelberg (1999)

Bloem, R., Könighofer, R., Seidl, M.: SAT-based synthesis methods for safety specs. In: McMillan, K.L., Rival, X. (eds.) VMCAI 2014. LNCS, vol. 8318, pp. 1–20. Springer, Heidelberg (2014)

Bradley, A.R.: SAT-based model checking without unrolling. In: Jhala, R., Schmidt, D. (eds.) VMCAI 2011. LNCS, vol. 6538, pp. 70–87. Springer, Heidelberg (2011)

Brenguier, R., Pérez, G.A., Raskin, J., Sankur, O.: AbsSynthe: abstract synthesis from succinct safety specifications. In: Workshop on Synthesis, SYNT, pp. 100–116 (2014)

Burch, J.R., Clarke, E.M., McMillan, K.L., Dill, D.L., Hwang, L.J.: Symbolic model checking: \(10^{20}\) states and beyond. In: Symposium on Logic in Computer Science, pp. 428–439 (1990)

Chiang, T.W., Jiang, J.H.R.: Property-directed synthesis of reactive systems from safety specifications. In: Proceedings of the IEEE/ACM International Conference on Computer-Aided Design, ICCAD 2015, pp. 794–801 (2015)

Craig, W.: Linear reasoning. A new form of the Herbrand-Gentzen theorem. J. Symb. Log. 22(3), 250–268 (1957)

Eèn, N., Legg, A., Narodytska, N., Ryzhyk, L.: SAT-based strategy extraction in reachability games. In: AAAI Conference on Artificial Intelligences, AAAI, pp. 3738–3745 (2015)

Ehlers, R.: Symbolic bounded synthesis. In: Touili, T., Cook, B., Jackson, P. (eds.) CAV 2010. LNCS, vol. 6174, pp. 365–379. Springer, Heidelberg (2010)

Finkbeiner, B., Jacobs, S.: Lazy synthesis. In: Kuncak, V., Rybalchenko, A. (eds.) VMCAI 2012. LNCS, vol. 7148, pp. 219–234. Springer, Heidelberg (2012)

Finkbeiner, B., Schewe, S.: Bounded synthesis. Softw. Tools Technol. Transf. STTT 15(5–6), 519–539 (2013)

Jacobs, S., Bloem, R., Brenguier, R., Ehlers, R., Hell, T., Könighofer, R., Pérez, G.A., Raskin, J., Ryzhyk, L., Sankur, O., Seidl, M., Tentrup, L., Walker, A.: The first reactive synthesis competition (SYNTCOMP 2014). Computing Research Repository, CoRR abs/1506.08726 (2015). http://arxiv.org/abs/1506.08726

McMillan, K.L.: Applying SAT methods in unbounded symbolic model checking. In: Brinksma, E., Larsen, K.G. (eds.) CAV 2002. LNCS, vol. 2404, pp. 250–264. Springer, Heidelberg (2002)

Dransfield, M.R., Marek, V.W., Truszczyński, M.: Satisfiability and computing van der Waerden numbers. In: Giunchiglia, E., Tacchella, A. (eds.) SAT 2003. LNCS, vol. 2919, pp. 1–13. Springer, Heidelberg (2004)

Morgenstern, A., Gesell, M., Schneider, K.: Solving games using incremental induction. In: Johnsen, E.B., Petre, L. (eds.) IFM 2013. LNCS, vol. 7940, pp. 177–191. Springer, Heidelberg (2013)

Narodytska, N., Legg, A., Bacchus, F., Ryzhyk, L., Walker, A.: Solving games without controllable predecessor. In: Biere, A., Bloem, R. (eds.) CAV 2014. LNCS, vol. 8559, pp. 533–540. Springer, Heidelberg (2014)

Piterman, N., Pnueli, A., Sa’ar, Y.: Synthesis of reactive(1) designs. In: Emerson, E.A., Namjoshi, K.S. (eds.) VMCAI 2006. LNCS, vol. 3855, pp. 364–380. Springer, Heidelberg (2006)

Pudlák, P.: Lower bounds for resolution and cutting plane proofs and monotone computations. J. Symb. Log. 62(3), 981–998 (1997)

Rollini, S.F., Alt, L., Fedyukovich, G., Hyvärinen, A.E.J., Sharygina, N.: PeRIPLO: a framework for producing effective interpolants in SAT-based software verification. In: McMillan, K., Middeldorp, A., Voronkov, A. (eds.) LPAR-19 2013. LNCS, vol. 8312, pp. 683–693. Springer, Heidelberg (2013)

Walker, A., Ryzhyk, L.: Predicate abstraction for reactive synthesis. In: Formal Methods in Computer-Aided Design FMCAD, pp. 219–226 (2014)

Author information

Authors and Affiliations

Corresponding author

Editor information

Editors and Affiliations

Rights and permissions

Copyright information

© 2016 Springer International Publishing Switzerland

About this paper

Cite this paper

Legg, A., Narodytska, N., Ryzhyk, L. (2016). A SAT-Based Counterexample Guided Method for Unbounded Synthesis. In: Chaudhuri, S., Farzan, A. (eds) Computer Aided Verification. CAV 2016. Lecture Notes in Computer Science(), vol 9780. Springer, Cham. https://doi.org/10.1007/978-3-319-41540-6_20

Download citation

DOI: https://doi.org/10.1007/978-3-319-41540-6_20

Published:

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-41539-0

Online ISBN: 978-3-319-41540-6

eBook Packages: Computer ScienceComputer Science (R0)