Abstract

Support Vector Machine (SVM) cannot handle imbalanced problems or matrix patterns. Fuzzy SVM (FSVM) was therefore proposed for imbalanced problems, and Support Matrix Machine (SMM) for matrix patterns. FSVM applies a fuzzy membership to each training pattern so that different patterns make different contributions to the learning machine; how to evaluate the fuzzy membership is thus the key issue for FSVM. SMM can process matrix patterns, but it still cannot handle imbalanced problems. This paper adopts SMM as the base learner and proposes an entropy-based support matrix machine for imbalanced data sets, ESMM. The contributions of ESMM are: (1) an entropy-based fuzzy membership evaluation approach that enhances the importance of patterns with high class certainty; (2) a guarantee of the importance of positive patterns, which yields a more flexible decision surface. Experiments on real-world imbalanced data sets and matrix patterns validate the effectiveness of ESMM.


1 Introduction

Support Vector Machine (SVM) constructs a hyperplane or set of hyperplanes in a high- or infinite-dimensional space, which can be used for classification, regression, or other tasks. Intuitively, a good separation is achieved by the hyperplane that has the largest distance to the nearest training-data point of any class (the so-called functional margin), since in general the larger the margin, the lower the generalization error of the classifier [1]. Conventional SVM can be used in many tasks, including text and hypertext categorization, image classification, protein classification in medical science, and hand-written character recognition. Although SVM has proven effective in these applications, it has two disadvantages: it cannot process matrix patterns, and it cannot handle imbalanced problems.

\(\bullet \) Matrix patterns, whose dimensions are \(m\times n\) (where m and n are both larger than 1), are the basis of matrix learning. For example, videos and images are both matrix patterns. To process matrix patterns, matrix-pattern-oriented learning machines (MatC), i.e., matrix learning machines, have been developed. Classical matrix learning machines include the matrix-pattern-oriented Ho-Kashyap learning machine with regularization learning (MatMHKS) [2], new least squares support vector classification based on matrix patterns (MatLSSVC) [3], and one-class support vector machines based on matrix patterns (OCSVM) [4]. In addition, Xie et al. have proposed the Support Matrix Machine (SMM) [5] as a replacement for SVM. SMM can leverage the structure of the data matrices and has the grouping effect property. However, none of these matrix learning machines can handle imbalanced problems.

\(\bullet \) In many real-world classification problems, such as e-mail foldering [6], fault diagnosis [7], detection of oil spills [8], and medical diagnosis [9], a data set can be divided into two classes, a positive class and a negative class. When the positive class is much smaller than the negative class, an imbalanced problem occurs. Most standard classification learning machines, including Support Vector Machine (SVM) and Neural Network (NN), assume balanced class distributions or equal misclassification costs [10]. As a result, they fail to represent the distributive characteristics of the patterns properly and perform poorly on imbalanced problems. To overcome this disadvantage, Fuzzy SVM (FSVM) [11] and Bilateral-weighted FSVM (B-FSVM) [12] have been proposed. FSVM applies a fuzzy membership to each input pattern and reformulates SVM so that different input patterns make different contributions to the learning of the decision surface. B-FSVM treats every pattern as belonging to both the positive and the negative class, but with different memberships, because a pattern cannot be said to belong to one class absolutely. For both of them, how to determine the fuzzy membership function is the key issue. Furthermore, neither of them can process matrix patterns.

In this paper, we propose a learning machine that can process both matrix patterns and imbalanced problems. First, to process matrix patterns, we adopt SMM as the base learner. Then, to handle imbalanced problems, we adopt the idea of FSVM, namely applying a fuzzy membership to each input pattern. For the fuzzy membership, we propose a new evaluation approach that assigns the fuzzy membership of each pattern based on its class certainty, where class certainty is the certainty with which a pattern belongs to its labeled class. Because entropy is an effective measure of certainty, we use it to evaluate the class certainty of each pattern. The resulting entropy-based fuzzy membership evaluation approach determines the fuzzy memberships of training patterns from their entropies. Combining this approach with SMM yields the Entropy-based Support Matrix Machine (ESMM) for imbalanced data sets. In practice, the positive class is more important than the negative class in imbalanced data sets, so the learning machine should pay more attention to positive patterns than to negative ones. Positive patterns are therefore assigned relatively large fuzzy memberships to guarantee the importance of the positive class. The fuzzy memberships of negative patterns are determined by the entropy-based evaluation approach: patterns with lower class certainty are assigned smaller fuzzy memberships, on the criterion that such patterns are more sensitive to noise and can easily mislead the decision surface, so their importance should be weakened on imbalanced data sets. After the fuzzy memberships of all training patterns have been evaluated, ESMM is used to classify imbalanced data sets.

The contributions of this paper can be highlighted as follows:

(1) A new entropy-based fuzzy membership evaluation approach is proposed. This approach uses entropy to evaluate the class certainty of a pattern and determines the corresponding fuzzy membership based on that class certainty. In doing so, the learning machine can pay more attention to the patterns with higher class certainty, which results in a more robust decision surface.

(2) To guarantee the importance of the positive class, the positive patterns are assigned relatively large fuzzy memberships, so that the decision surface pays more attention to the positive class and the generalization of the learning machine increases.

The rest of this paper is organized as follows. Section 2 introduces the proposed entropy-based fuzzy membership evaluation approach and then gives the details of ESMM. In Sect. 3, several experiments on real-world imbalanced data sets and matrix patterns, including images, are conducted to validate the effectiveness of ESMM. Conclusions are given in Sect. 4.

2 Entropy-Based Support Matrix Machine (ESMM)

2.1 Entropy-Based Fuzzy Membership

When processing imbalanced data sets, the positive class is usually more important than the negative class. Thus, in the proposed entropy-based fuzzy membership evaluation approach, we assign positive patterns a relatively high fuzzy membership, e.g., 1.0, to guarantee the importance of the positive class. For the negative class, we fuzzify negative patterns based on their class certainties.

In information theory, entropy is used to characterize the certainty of a source of information: the smaller the entropy, the more certain the information [13]. Using this property of entropy, we can evaluate the class certainty of each pattern in the training set. After obtaining the class certainty of each pattern, we assign the fuzzy membership of each training pattern based on its class certainty. In practice, patterns with higher class certainty, i.e., lower entropy, are assigned higher fuzzy memberships to enhance their contributions to the decision surface, and vice versa.

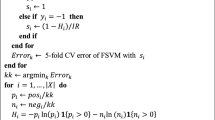

Suppose that there are N training patterns \(\{x_i,y_i\}\), where \(i=1,2,\ldots ,N\) and \(y_i\in \{+1,-1\}\) is the class label. When \(y_i=+1\), pattern \(x_i\) belongs to the positive class; otherwise, it belongs to the negative class. The probabilities of \(x_i\) belonging to the positive and negative classes are \(p_{+i}\) and \(p_{-i}\), respectively. The entropy of \(x_i\) is \(H_i=-p_{+i}\ln (p_{+i})-p_{-i}\ln (p_{-i})\), where \(\ln \) denotes the natural logarithm. Because the neighbors of a pattern determine its local information, the probability evaluation is based on its k nearest neighbors. For a pattern \(x_i\), we first select its k nearest neighbors \(\{x_{i1},x_{i2},\ldots ,x_{ik}\}\). Then we count the positive and negative patterns among these k neighbors and denote the counts by \(num_{+i}\) and \(num_{-i}\), respectively. Finally, the probabilities of \(x_i\) belonging to the positive and negative classes are calculated as \(p_{+i}=\frac{num_{+i}}{k}\) and \(p_{-i}=\frac{num_{-i}}{k}\). After evaluating the class probabilities of \(x_i\), we can calculate its entropy.
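The k-nearest-neighbor entropy evaluation described above can be sketched in a few lines of Python. This is only an illustrative sketch under stated assumptions: the function name `knn_entropy` is hypothetical, patterns are given as flat tuples (a matrix pattern would be flattened first), neighbors are ranked by Euclidean distance with the pattern itself excluded, and \(0\ln 0\) is taken as 0. None of these choices is specified in the text.

```python
import math

def knn_entropy(patterns, labels, k):
    """Return the entropy H_i of every pattern from its k nearest neighbors.

    Assumptions of this sketch: Euclidean distance, the pattern itself is
    not counted as a neighbor, and k <= len(patterns) - 1.
    """
    entropies = []
    for i, x in enumerate(patterns):
        # Squared Euclidean distance from x to every other pattern.
        dists = sorted(
            (sum((a - b) ** 2 for a, b in zip(x, other)), j)
            for j, other in enumerate(patterns) if j != i
        )
        neighbors = [j for _, j in dists[:k]]
        num_pos = sum(1 for j in neighbors if labels[j] == +1)
        p_pos = num_pos / k          # p_{+i} = num_{+i} / k
        p_neg = 1.0 - p_pos          # p_{-i} = num_{-i} / k
        # Convention assumed here: a term with p = 0 contributes 0.
        h = -sum(p * math.log(p) for p in (p_pos, p_neg) if p > 0.0)
        entropies.append(h)
    return entropies

# Hypothetical one-dimensional data: one positive pattern among negatives.
patterns = [(0,), (1,), (2,), (3,), (50,), (51,), (52,)]
labels = [-1, -1, -1, +1, -1, -1, -1]
H = knn_entropy(patterns, labels, k=2)
```

In this toy data, the negative pattern at 2 has one positive and one negative neighbor, so its entropy is \(\ln 2\), the largest possible value for two classes, while patterns surrounded only by negatives have entropy 0.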

With the above entropy evaluation approach, the entropies of the negative patterns are \(H=\{H_{-1},H_{-2},\ldots ,H_{-n_{-}}\}\), where \(n_{-}\) is the number of negative patterns. \(H_{min}\) and \(H_{max}\) are the minimum and maximum entropies in H. The entropy-based fuzzy memberships for negative patterns are evaluated as follows.

First, the negative patterns are separated into m subsets based on their entropy as described in Table 1, i.e., \(\{Sub_{k}\}\) where \(k=1,2,\ldots ,m\).

Then, the fuzzy memberships of patterns in each subset are set as:

\[FM_{k}=1.0-\alpha (k-1),\quad k=1,2,\ldots ,m\]

where \(FM_{k}\) is the fuzzy membership for patterns in subset \(Sub_k\), and the fuzzy membership parameter satisfies \(\alpha \in (0,\frac{1}{m-1})\) so that \(FM_{k}\) is positive and not larger than 1.0. Patterns in the same subset receive the same fuzzy membership, so they have the same importance to the decision surface. Finally, the fuzzy membership \(s_i\) for a training pattern \(x_i\) is assigned as follows: if \(y_i=+1\), then \(s_i=1.0\); if \(y_i=-1\) and \(x_i\in Sub_k\), then \(s_i=FM_{k}\). This completes the evaluation of the entropy-based fuzzy memberships for the training patterns.
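The membership assignment can be illustrated with a short Python sketch. Two points are assumptions of the sketch rather than statements from the text: the subsets \(Sub_k\) are taken to be m equal-width entropy intervals between \(H_{min}\) and \(H_{max}\), ordered from low to high entropy, and the membership of subset k is taken to be \(1-\alpha (k-1)\), which satisfies the stated constraint that \(FM_k\) is positive and at most 1.0 for \(\alpha \in (0,\frac{1}{m-1})\). The function name `entropy_fuzzy_memberships` is hypothetical.

```python
def entropy_fuzzy_memberships(entropies, labels, m=10, alpha=0.05):
    """Assign s_i = 1.0 to positives and an entropy-binned FM_k to negatives.

    Sketch only: requires m >= 2 and at least one negative pattern.
    """
    if not 0.0 < alpha < 1.0 / (m - 1):
        raise ValueError("alpha must lie in (0, 1/(m-1))")
    neg_h = [h for h, y in zip(entropies, labels) if y == -1]
    h_min, h_max = min(neg_h), max(neg_h)
    width = (h_max - h_min) / m
    memberships = []
    for h, y in zip(entropies, labels):
        if y == +1:
            # Positive patterns always keep full membership.
            memberships.append(1.0)
            continue
        if width == 0.0:
            k = 1  # all negatives share one entropy value
        else:
            # Assumed binning: Sub_k is the k-th equal-width entropy interval.
            k = min(int((h - h_min) / width) + 1, m)
        # Assumed membership form: FM_k = 1 - alpha * (k - 1).
        memberships.append(1.0 - alpha * (k - 1))
    return memberships

# Hypothetical entropies for three negatives and one positive pattern.
s = entropy_fuzzy_memberships([0.0, 0.7, 0.0, 0.3], [-1, -1, +1, -1])
```

Under these assumptions, \(m=10\) and \(\alpha =0.05\) give memberships between \(1-0.05\times 9=0.55\) and 1.0 for negative patterns, so the most uncertain negatives are down-weighted without being discarded.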

2.2 Entropy-Based Support Matrix Machine

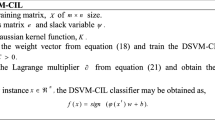

Using the entropy-based fuzzy membership evaluated above, we propose the entropy-based support matrix machine (ESMM). A detailed description of ESMM is given below.

Suppose that there is a binary-class classification problem with N matrix patterns \((A_i,y_i,s_i)\), \(i=1,2,\ldots ,N\). Here \(A_i\in R^{m\times n}\) is the matrix representation of \(x_i\) and its class label is \(y_i \in \{+1,-1\}\). If \(y_i=+1\), the pattern \(x_i\) (or \(A_i\)) belongs to class \(+1\), the positive class; if \(y_i=-1\), it belongs to class \(-1\), the negative class. \(s_i\) is the entropy-based fuzzy membership.

The corresponding criterion function of ESMM is defined below.

where \(W\in R^{m\times n}\) is the matrix of regression coefficients and \(\left| \left| W\right| \right| _{\star }\) is its nuclear norm. \(\theta \) is a coefficient, and tr denotes the trace of a matrix. \(b_i\) is a slack variable for pattern \(A_i\). C (\(C\in {R},\) \(C\ge 0\)) is the regularization parameter that adjusts the tradeoff between model complexity and training error. Here, \(b=[b_1,\ldots ,b_i,\ldots ,b_N]^T\) and each \(b_{i}\) is initialized with \(b_{i}\ge 0 \). The iteration for b is given in Eq. (3).

where the error for \(A_i\) at the k-th iteration, i.e., \(e_{i}(k)\), is \(W(k)^TA_i-1-b_i(k)\), and W(k) and \(b_i(k)\) are the values of W and \(b_i\) at the k-th iteration. We then adopt a method similar to that of SMM to obtain the optimal W and b. After that, we obtain the discriminant function of ESMM as below.

If \(g(A_i)>0\), we label \(A_i\) as a positive pattern; if \(g(A_i)<0\), we label \(A_i\) as a negative pattern.
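To make the sign-based labeling rule concrete, the following Python sketch assumes, purely for illustration, that the discriminant has the trace form used by SMM, \(g(A)=tr(W^TA)+bias\), with a scalar `bias`. That form, the function names, and the toy matrices are assumptions of this sketch and are not taken from the ESMM formulation.

```python
def trace_inner_product(W, A):
    """Compute tr(W^T A), i.e. the sum of elementwise products of W and A."""
    return sum(w * a for w_row, a_row in zip(W, A) for w, a in zip(w_row, a_row))

def classify(W, A, bias=0.0):
    """Label a matrix pattern by the sign of the assumed discriminant value."""
    g = trace_inner_product(W, A) + bias
    if g > 0:
        return +1   # positive pattern
    if g < 0:
        return -1   # negative pattern
    return 0        # g == 0 is left undecided; the text does not cover it

# Hypothetical 2 x 2 coefficient matrix and two matrix patterns.
W = [[1, 0], [0, -1]]
label_a = classify(W, [[2, 5], [7, 1]])   # tr(W^T A) = 2 - 1 = 1
label_b = classify(W, [[0, 3], [3, 4]])   # tr(W^T A) = 0 - 4 = -4
```

The trace form keeps each pattern as an \(m\times n\) matrix instead of flattening it, which is what allows a nuclear norm penalty on W to exploit the matrix structure.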

3 Experiments

In this section, we use 25 real-world imbalanced data sets and 5 image data sets, and the compared learning machines are SVM, FSVM, MatMHKS, B-FSVM, and SMM. The 25 real-world imbalanced data sets are selected from the KEEL imbalanced benchmark data sets [14, 15]. Information on these data sets is given in Tables 2 and 3.

3.1 Experimental Settings

The experimental settings for ESMM and the compared learning machines are as follows. For the SVM-based learning machines, the kernel is the Radial Basis Function (RBF) kernel \(ker(x_i,x_j)=exp(-\frac{\left| \left| x_i-x_j\right| \right| _2^2}{\sigma ^2})\), where \(\sigma \) is selected from the set \(\{10^{-3}, 10^{-2},\ldots , 10^{2}, 10^{3}\}\). For MatMHKS, the experimental settings can be found in [2]. For SMM, they can be found in [5]. The settings of ESMM are similar to those of SMM. In addition, for ESMM the number of subsets is \(m=10\) and the fuzzy membership parameter is \(\alpha =0.05\), which results in fuzzy memberships \(0.5\le s_i\le 1.0\). The reason for restricting \(s_i\in [0.5,1.0]\) is that the class label of \(x_i\) should not be neglected when determining the fuzzy membership, i.e., \(x_i\) is more likely to belong to the class indicated by its label. For negative patterns, we set \(s_i>0.5\) to indicate that a negative pattern \(x_i\) is more likely to belong to the negative class. The number of nearest neighbors k for calculating the class probability is selected from \(\{1,2,3,\ldots ,18,19,20\}\). To measure the performance of the compared learning machines on imbalanced data sets, the Area Under the ROC Curve (AUC) [25] is reported. The maximum number of iterations is \(maxIter=500\). The one-against-one classification strategy is used for multi-class problems [21,22,23,24]. Ten-fold cross validation [26] is adopted for parameter selection. The computations are performed on an Intel Core 2 processor at 2.66 GHz with 8 GB of RAM, running Microsoft Windows 7 and Matlab.
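As a small illustration of the kernel setting, the Python sketch below evaluates the RBF kernel exactly as written above, with \(\sigma ^2\) rather than \(2\sigma ^2\) in the denominator, and builds the candidate grid for \(\sigma \). The names `rbf_kernel` and `SIGMA_GRID` are hypothetical, and the grid is assumed to contain every power of ten from \(10^{-3}\) to \(10^{3}\).

```python
import math

def rbf_kernel(x_i, x_j, sigma):
    """RBF kernel exp(-||x_i - x_j||_2^2 / sigma^2) for two vector patterns."""
    sq_dist = sum((a - b) ** 2 for a, b in zip(x_i, x_j))
    return math.exp(-sq_dist / sigma ** 2)

# Assumed candidate values for sigma: 10^-3, 10^-2, ..., 10^3 (seven values).
SIGMA_GRID = [10.0 ** p for p in range(-3, 4)]

# Kernel values between two hypothetical patterns for each candidate sigma.
values = [rbf_kernel((0.0, 0.0), (1.0, 0.0), s) for s in SIGMA_GRID]
```

For a fixed pair of distinct patterns the kernel value increases with \(\sigma \): very small values make every pattern look dissimilar to all others, and very large values make all patterns look alike, which is why \(\sigma \) is selected by cross validation.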

3.2 Experiments on Real-World Imbalanced Data Sets

The AUC values of the experiments are presented in Table 4, together with the average AUC over all used data sets and the average rank of each learning machine on these data sets.
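For readers who want to reproduce this kind of summary, the sketch below shows one common way to compute AUC and average ranks in Python. It is an assumption-laden illustration: AUC is computed as the fraction of (positive, negative) pairs ranked correctly, with ties counted as one half; rank 1 goes to the highest AUC on a data set and tied machines share the mean rank. The text does not state how ranks were computed, and the numbers used here are made up.

```python
def auc(scores, labels):
    """AUC as the fraction of (positive, negative) pairs ordered correctly."""
    pos = [s for s, y in zip(scores, labels) if y == +1]
    neg = [s for s, y in zip(scores, labels) if y == -1]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0
               for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def average_ranks(auc_table):
    """auc_table maps data set -> {machine: AUC}; every machine must appear
    in every data set. Rank 1 is the best (highest) AUC."""
    totals = {}
    for results in auc_table.values():
        for name, value in results.items():
            better = sum(1 for v in results.values() if v > value)
            tied = sum(1 for v in results.values() if v == value)
            rank = better + (tied + 1) / 2.0  # tied machines share the mean rank
            totals[name] = totals.get(name, 0.0) + rank
    return {name: total / len(auc_table) for name, total in totals.items()}

# Hypothetical scores and AUC table, for illustration only.
example_auc = auc([0.9, 0.8, 0.3, 0.1], [+1, -1, +1, -1])   # 0.75
ranks = average_ranks({"d1": {"A": 0.9, "B": 0.8},
                       "d2": {"A": 0.7, "B": 0.7}})          # A: 1.25, B: 1.75
```

A lower average rank means that a learning machine tends to be among the best on each data set, which complements the average AUC because it is not dominated by a few data sets with large AUC differences.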

The experimental results show the following. (1) ESMM achieves the best classification performance on 12 of the 30 data sets, which indicates that our proposal outperforms the compared learning machines. (2) ESMM outperforms the traditional MatMHKS on 26 of the 30 data sets. (3) The average AUC of ESMM is greater than that of B-FSVM, FSVM, and SVM by about 5\(\%\), 9\(\%\), and 10\(\%\), respectively, which demonstrates that ESMM has a significant advantage over SVM, FSVM, and B-FSVM in processing imbalanced data sets. (4) On some data sets, for example COIL-20, the three matrix-pattern-oriented approaches perform worse than SVM. We believe this is a coincidence caused by the random selection of the training part. According to the average results, the proposed ESMM still outperforms the others on average. (5) Among the 25 vector data sets, ESMM performs best on 9. On the remaining ones, ESMM performs better on average and does not perform worst on any vector data set, which indicates the superiority of ESMM on vector data sets. (6) As noted above, ESMM and FSVM both apply a fuzzy membership to each input pattern. The better performance of ESMM compared with FSVM validates that the proposed entropy-based fuzzy membership evaluation approach boosts the performance of a learning machine.

3.3 Influence of Parameter k on the Performance of ESMM

In ESMM, the entropy-based fuzzy membership is evaluated from the class probability of each training pattern, so the number of nearest neighbors k may influence the class probability. To further investigate the effectiveness of ESMM, we study the influence of k on the classification performance. All data sets given in Table 2 are used, and the related experimental results are given in Fig. 1. The figure shows the AUC on the testing sets of the adopted real-world imbalanced data sets and image data sets with respect to k. We find that: (1) the number of nearest neighbors used for calculating the class probability, i.e., k, has some influence on the classification performance, since the AUC curves fluctuate with respect to k on most data sets; (2) on some data sets the classification performance is sensitive to the variation of k, since the AUC curves fluctuate greatly, while on other data sets it is not; (3) in general, ESMM achieves its best performance with k from 8 to 12. This result offers guidance on how to choose an appropriate k in practice.

3.4 Comparison Between ESMM and Entropy-Based MatMHKS (EMatMHKS)

Here, we compare ESMM with our previously proposed learning machine, entropy-based MatMHKS (EMatMHKS) [27], which is also an entropy-based matrix learning machine. Table 5 shows the comparison between ESMM and EMatMHKS. \(\uparrow \) (\(\downarrow \)) indicates that ESMM performs better (worse) than EMatMHKS, and \((\star )\) indicates that the AUC value of ESMM is \(\star \) larger or smaller than that of EMatMHKS. From this table, we find that ESMM performs better than EMatMHKS on average.

4 Conclusion

Two active topics in current research are the imbalanced problem and matrix learning. An imbalanced problem occurs when the positive class is much smaller than the negative class. Most standard classification learning machines perform poorly on imbalanced data sets because they were originally designed for balanced problems. Although SVM can process imbalanced data sets to some extent, it assigns the same importance to each training pattern, which biases the decision surface toward the negative class. To overcome this disadvantage of SVM, some researchers have proposed FSVM and B-FSVM, which apply fuzzy memberships to the training patterns to reflect their different importance. Since the key issue in FSVM and B-FSVM is how to determine the fuzzy membership, this paper presents an entropy-based fuzzy membership evaluation approach for imbalanced data sets. Moreover, matrix patterns cannot be handled well by traditional learning machines such as SVM, so MatMHKS and SMM have been developed. This paper combines the entropy-based fuzzy membership with SMM and proposes ESMM. ESMM not only guarantees the importance of the positive class but also pays more attention to the patterns with higher class certainty. Thus, ESMM can produce more flexible decision surfaces than conventional SVM, FSVM, B-FSVM, and MatMHKS on imbalanced data sets. To validate the effectiveness of ESMM, we use 25 real-world imbalanced data sets and 5 image data sets in the experiments. The experimental results demonstrate that ESMM outperforms the compared learning machines on the real-world imbalanced data sets and the images. Moreover, the number of nearest neighbors k has some influence on the classification performance of ESMM.

References

Support Vector Machine. https://en.wikipedia.org/wiki/Support_vector_machine#Applications

Chen, S.C., Wang, Z., Tian, Y.J.: Matrix-pattern-oriented Ho-Kashyap classifier with regularization learning. Pattern Recogn. 40(5), 1533–1543 (2007)

Wang, Z., Chen, S.C.: New least squares support vector machines based on matrix patterns. Neural Process. Lett. 26(1), 41–56 (2007)

Yan, Y., Wang, Q., Ni, G., Pan, Z., Kong, R.: One-class support vector machines based on matrix patterns. In: Jiang, L. (ed.) Proceedings of the 2011 International Conference on Informatics, Cybernetics, and Computer Engineering (ICCE2011). AINSC, pp. 223–231. Springer, Heidelberg (2011). doi:10.1007/978-3-642-25188-7_27

Xie, Y.B., Zhang, Z.H., Li, W.J.: Support Matrix Machines. In: The 32nd International Conference on Machine Learning (ICML 2015)

Dai, H.: Class imbalance learning via a fuzzy total margin based support vector machine. Appl. Soft Comput. 31, 172–184 (2015)

Deng, X., Tian, X.: Nonlinear process fault pattern recognition using statistics kernel PCA similarity factor. Neurocomputing. 121, 298–308 (2013)

Guo, Y., Zhang, H.Z.: Oil spill detection using synthetic aperture radar images and feature selection in shape space. Int. J. Appl. Earth Obs. Geoinf. 30, 146–157 (2014)

Ozcift, A., Gulten, A.: Classifier ensemble construction with rotation forest to improve medical diagnosis performance of machine learning algorithms. Comput. Methods Programs Biomed. 104(3), 443–451 (2011)

Brown, I., Mues, C.: An experimental comparison of classification algorithms for imbalanced credit scoring data sets. Expert Syst. Appl. 39(3), 3446–3453 (2012)

Lin, C., Wang, S.: Fuzzy support vector machines. IEEE Trans. Neural Netw. 13(2), 464–471 (2002)

Wang, Y., Wang, S., Lai, K.K.: A new fuzzy support vector machine to evaluate credit risk. IEEE Trans. Fuzzy Syst. 13(6), 820–831 (2005)

Shannon, C.E.: A mathematical theory of communication. SIGMOBILE Mob. Comput. Commun. Rev. 5(1), 3–55 (2001)

Alcala-Fdez, J., Fernandez, A., Luengo, J., Derrac, J., Garcia, S., Sanchez, L., Herrera, F.: KEEL data-mining software tool: data set repository, integration of algorithms and experimental analysis framework. J. Multiple-Valued Logic Soft Comput. 17(2–3), 255–287 (2011)

Alcala-Fdez, J., Sanchez, L., Garcia, S., Jesus, M.J., Ventura, S., Garrell, J.M., Otero, J., Romero, C., Bacardit, J., Rivas, V.M.: A software tool to assess evolutionary algorithms for data mining problems. Soft. Comput. 13(3), 307–318 (2009)

Nene, S.A., Nayar, S.K., Murase, H.: Columbia object image library (COIL-20). Technical report CUCS-005-96. Columbia University (1996)

LeCun, Y., Boser, B., Denker, J.S., Henderson, D., Howard, R.E., Hubbard, W., Jackel, L.D.: Handwritten digit recognition with a back-propagation network. In: Advances in Neural Information Processing Systems (1990)

Bennett, F., Richardson, T., Harter, A.: Teleporting-making applications mobile. In: Mobile Computing Systems and Applications, pp. 82–84 (1994)

Kumar, N., Berg, A.C., Belhumeur, P.N., Nayar, S.K.: Attribute and simile classifiers for face verification. In: International Conference on Computer Vision, pp. 365–372 (2009)

Smith, B.A., Yin, Q., Feiner, S.K., Nayar, S.K.: Gaze locking, passive eye contact detection for human-object interaction. In: ACM Symposium on User Interface Software and Technology (UIST), pp. 271–280 (2013)

Milgram, J., Cheriet, M., Sabourin, R.: “One Against One” or “One Against All”, Which One is Better for Handwriting Recognition with SVMs? In: Tenth International Workshop on Frontiers in Handwriting Recognition (2013)

Debnath, R., Takahide, N., Takahashi, H.: A decision based one-against-one method for multi-class support vector machine. Pattern Anal. Appl. 7(2), 164–175 (2004)

Hsu, C.W., Lin, C.J.: A comparison of methods for multiclass support vector machines. IEEE Trans. Neural Netw. 13(2), 415–425 (2002)

Cortes, C., Vapnik, V.: Support-vector networks. Mach. Learn. 20(3), 273–297 (1995)

Huang, J., Ling, C.X.: Using AUC and accuracy in evaluating learning algorithms. IEEE Trans. Knowl. Data Eng. 17(3), 299–310 (2005)

Braga-Neto, U.M., Dougherty, E.R.: Is cross-validation valid for small-sample microarray classification? Bioinformatics 20(3), 374–380 (2004)

Zhu, C.M., Wang, Z.: Entropy-based matrix learning machine for imbalanced data sets. Pattern Recogn. Lett. 88(1), 72–80 (2017)

Acknowledgment

This work is supported by the Natural Science Foundation of Shanghai under grant number 16ZR1414500 and the National Natural Science Foundation of China under grant numbers 61602296 and 51575336. The authors are grateful for this support.


Copyright information

© 2017 IFIP International Federation for Information Processing

About this paper

Cite this paper

Zhu, C. (2017). Entropy-Based Support Matrix Machine. In: Shi, Z., Goertzel, B., Feng, J. (eds) Intelligence Science I. ICIS 2017. IFIP Advances in Information and Communication Technology, vol 510. Springer, Cham. https://doi.org/10.1007/978-3-319-68121-4_21


DOI: https://doi.org/10.1007/978-3-319-68121-4_21


Publisher Name: Springer, Cham

Print ISBN: 978-3-319-68120-7

Online ISBN: 978-3-319-68121-4

eBook Packages: Computer Science, Computer Science (R0)