Abstract

A multi-channel satellite cloud image fusion method based on the shearlet transform is proposed. The Laplacian pyramid algorithm is used to decompose the low frequency sub-images in the shearlet domain; the top layer of the pyramid is fused by averaging, and the other layers by choosing the coefficient with the maximum absolute value. For the high frequency sub-images in the shearlet domain, a fusion rule is constructed from the information entropy, average gradient and standard deviation. A nonlinear operation is then performed to enhance the details of the fused high frequency sub-images. The proposed algorithm is compared with five similar image fusion algorithms based on the classical discrete orthogonal wavelet, curvelet, NSCT, tetrolet and shearlet transforms. The information entropy, average gradient and standard deviation are used to objectively evaluate the quality of the fused images. To verify the efficiency of the proposed algorithm, the fused cloud images are used to determine the center locations of eye and non-eye typhoons. The experimental results show that the fused images obtained by the proposed algorithm improve the precision of typhoon center location, and that the comprehensive performance of the proposed algorithm is superior to that of similar image fusion algorithms.

Keywords

- Shearlet transform

- Multi-channel satellite cloud image

- Laplacian pyramid

- Image fusion

- Typhoon center location

1 Introduction

At present, the automatic location of the typhoon center is still at an early stage. For typhoon center location based on satellite data, Dvorak proposed the Dvorak technique (DT) [1], and many improved versions of the Dvorak technique have since been developed. The main typhoon center location methods currently used include the mathematical morphology/feature extraction method [2], the intelligent learning method [3], wind field analysis [4], temperature/humidity structure inversion [5], tempo-spatial movement matching [6] and the objective tropical cyclone location system [7]. Although these methods have certain advantages, they also have problems. For example, DT is relatively subjective. Mathematical morphology is suitable only for tropical cyclones whose morphological characteristics can be easily identified. The intelligent learning method requires a large amount of experimental data and accumulated experience, and it is suited to recognizing particular structures. The wind field analysis method is applicable to tropical cyclones that are weak and do not have a clear circulation center; when a tropical cyclone is strong, the located center is inaccurate because of the limited resolution and the influence of heavy rain [7]. The temperature/humidity structure inversion method is likewise applicable only to strong tropical cyclones. The tempo-spatial movement matching method uses the movement information implied in a time series of images, combined with the cloud characteristics, to track the tropical cyclone center [7]; however, its computational complexity is very high. Other center location methods are rarely used and are still at an early stage of development.
Meanwhile, existing typhoon center location systems based on satellite data mainly use a single-channel satellite cloud image or a time sequence of cloud images, so the amount of information they exploit is limited.

Fusing multi-channel cloud images may therefore improve the accuracy of typhoon center location. Many researchers have made significant contributions to multi-channel satellite cloud image fusion. Among the tools used, wavelet analysis has been applied successfully to image fusion [8]. However, the two-dimensional wavelet transform captures only three directions (horizontal, vertical and diagonal). Multi-scale geometric analysis tools share the multi-resolution property of the wavelet transform and additionally offer good time-frequency localization and a high degree of directionality and anisotropy [9]. They have been applied successfully to satellite image fusion (curvelet [10], contourlet [11], NSCT [12], tetrolet [13]). In 2005, Labate et al. proposed the shearlet transform [14], which decomposes an image in multiple directions. It can not only detect all singular points but also follow the direction of singular curves. In addition, it overcomes the loss of information that the wavelet transform suffers during image fusion, and, compared with the contourlet transform, it removes the restriction on the number of directions in the filtering process [15]. The shearlet transform has therefore attracted increasing attention [16]. In this paper, we introduce the shearlet transform into multi-channel satellite cloud image fusion, use the fused cloud images to locate the typhoon center, and explore the influence of fusion on the located center position. To verify the performance of the proposed algorithm, it is compared with five other image fusion algorithms based on multi-scale decomposition.

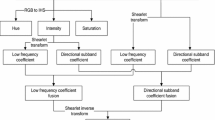

2 The Proposed Image Fusion Algorithm

2.1 Fusion Rule for the Low Frequency Component of the Shearlet Transform

After the shearlet transform is applied to an image, the low frequency component mainly contains the large-scale information of the image. It contains less detail but carries most of the image energy, so the fusion of the low frequency component is also very important. An adaptive fusion algorithm is therefore designed for the low frequency sub-images in the shearlet domain: they are decomposed by the Laplacian pyramid algorithm, which has been reported to give stable and reliable image fusion [17]. The fusion rules and specific steps for the low frequency component in the shearlet domain are as follows:

Step 1. The low frequency coefficients \( SL_{A} \) and \( SL_{B} \) of the source images \( A \) and \( B \) are each decomposed by the Laplacian pyramid algorithm into \( Q \) layers. The decomposed images are written as \( LA \) and \( LB \), and their \( q \)th (\( 1 \le q \le Q \)) layer sub-images as \( LA_{q} \) and \( LB_{q} \).

Step 2. The top layer sub-images \( LA_{Q} \) and \( LB_{Q} \) of the Laplacian pyramid are fused by averaging. The fusion result \( LF_{Q} \) is:

$$ LF_{Q} (i,j) = \frac{LA_{Q} (i,j) + LB_{Q} (i,j)}{2} \tag{1} $$

where \( 1 \le i \le CL_{Q} \), \( 1 \le j \le RL_{Q} \), and \( CL_{Q} \) and \( RL_{Q} \) are the numbers of rows and columns of the \( Q \)th layer image, respectively.

Step 3. The layers \( LA_{q} \) and \( LB_{q} \) (\( 1 \le q \le Q - 1 \)) are fused by choosing, at each pixel, the coefficient with the larger absolute value. The fusion result \( LF_{q} \) is:

$$ LF_{q} (i,j) = \begin{cases} LA_{q} (i,j), & \left| LA_{q} (i,j) \right| \ge \left| LB_{q} (i,j) \right| \\ LB_{q} (i,j), & \left| LA_{q} (i,j) \right| < \left| LB_{q} (i,j) \right| \end{cases} \tag{2} $$

Step 4. Reconstruct the Laplacian pyramid and obtain the fusion result \( TL_{F} \) of the low frequency components.
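The four steps above can be sketched in NumPy as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: the paper does not specify the pyramid's smoothing kernel, so a 5-tap binomial kernel [1, 4, 6, 4, 1]/16 with reflective borders is assumed, and all function names are hypothetical. The inputs `sl_a` and `sl_b` stand for the shearlet low frequency coefficients \( SL_{A} \) and \( SL_{B} \), which are assumed to have been computed elsewhere.

```python
import numpy as np

# Assumed smoothing kernel (5-tap binomial); the paper does not state one.
_KERNEL = np.array([1.0, 4.0, 6.0, 4.0, 1.0]) / 16.0


def _blur(img):
    """Separable 5x5 binomial blur with reflective borders; keeps img's shape."""
    h, w = img.shape
    pad = np.pad(img, 2, mode="reflect")
    rows = sum(_KERNEL[k] * pad[:, k:k + w] for k in range(5))   # horizontal pass
    return sum(_KERNEL[k] * rows[k:k + h, :] for k in range(5))  # vertical pass


def _reduce(img):
    """Blur, then keep every second row and column."""
    return _blur(img)[::2, ::2]


def _expand(img, shape):
    """Upsample to `shape` by zero insertion, then blur (gain 4 restores level)."""
    up = np.zeros(shape)
    up[::2, ::2] = img
    return 4.0 * _blur(up)


def laplacian_pyramid(img, levels):
    """Return [L_1, ..., L_Q]: Q-1 band-pass layers plus the top layer L_Q."""
    pyr, cur = [], np.asarray(img, dtype=float)
    for _ in range(levels - 1):
        nxt = _reduce(cur)
        pyr.append(cur - _expand(nxt, cur.shape))
        cur = nxt
    pyr.append(cur)  # coarsest approximation = top layer
    return pyr


def reconstruct(pyr):
    """Invert laplacian_pyramid (Step 4)."""
    cur = pyr[-1]
    for band in reversed(pyr[:-1]):
        cur = band + _expand(cur, band.shape)
    return cur


def fuse_lowpass(sl_a, sl_b, levels=3):
    """Fuse two low frequency sub-images following Steps 1-4."""
    la = laplacian_pyramid(sl_a, levels)  # Step 1
    lb = laplacian_pyramid(sl_b, levels)
    # Step 3, Eq. (2): larger magnitude wins; ties go to A.
    fused = [np.where(np.abs(a) >= np.abs(b), a, b) for a, b in zip(la[:-1], lb[:-1])]
    # Step 2, Eq. (1): average the top layers.
    fused.append((la[-1] + lb[-1]) / 2.0)
    return reconstruct(fused)  # Step 4
```

Because each band-pass layer stores exactly what `_expand` loses, `reconstruct` recovers the input up to floating-point rounding, so any difference in the fused result comes only from the fusion rules in Eqs. (1) and (2).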

2.2 Fusion Rule for the High Frequency Component of the Shearlet Transform

For satellite cloud images used to locate the typhoon center, the amount of information, spatial resolution and definition are very important, and the fused image should contain rich details and texture. Therefore, the fusion rule for the high frequency components in the shearlet domain is designed around evaluation indexes that measure these properties.

Here, we use information entropy to evaluate the amount of information in the high frequency components in the shearlet domain: the larger the information entropy, the greater the average amount of information contained in the image. The standard deviation reflects how widely the gray level values are dispersed around their mean. The greater the standard deviation, the better the contrast of the image; conversely, the smaller the standard deviation \( \sigma \), the more uniform the gray level distribution, so the contrast is weak and the details are hard to identify. Image definition can be evaluated by the average gradient, which is sensitive to how well fine details are rendered: generally, the greater the average gradient, the faster the gray values change across the image and the clearer the image. Hence, we use information entropy, average gradient and standard deviation to construct the fusion rule for the high frequency components in the shearlet domain.
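The three indexes, defined formally in Eqs. (3)–(5) of Step 1, can be computed as in the sketch below. It rests on assumptions the paper does not spell out: Eq. (3) needs discrete gray levels, but shearlet high frequency coefficients are real-valued, so the sketch bins them into a histogram with an assumed `levels=256` bins; the partial derivatives in Eq. (4) are approximated by forward differences; the function names are hypothetical.

```python
import numpy as np


def information_entropy(img, levels=256):
    """Eq. (3): Shannon entropy (bits) of the gray-level histogram."""
    hist, _ = np.histogram(img, bins=levels)  # assumed binning of real values
    p = hist[hist > 0] / hist.sum()           # drop empty bins: 0*log(0) := 0
    return float(-np.sum(p * np.log2(p)))


def average_gradient(img):
    """Eq. (4): mean of sqrt((dx^2 + dy^2) / 2) over an (M-1) x (N-1) grid."""
    img = np.asarray(img, dtype=float)
    dx = img[1:, :-1] - img[:-1, :-1]  # difference between adjacent rows (axis 0)
    dy = img[:-1, 1:] - img[:-1, :-1]  # difference between adjacent columns (axis 1)
    return float(np.mean(np.sqrt((dx ** 2 + dy ** 2) / 2.0)))


def standard_deviation(img):
    """Eq. (5): population standard deviation (divides by M x N)."""
    return float(np.std(img))
```

The same functions can also be applied to whole fused images to obtain the objective indexes used in Sect. 3.2.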

For the high frequency components of the source images \( A \) and \( B \) in the shearlet domain, we have designed the fusion rule as follows:

Step 1. The information entropy, average gradient and standard deviation are calculated separately for the sub-image of each layer in every direction. The high frequency coefficients in layer \( w \) (\( 0 < w \le W \)) and direction \( t \) (\( 0 < t \le T \)) are written as \( \left( SH_{A} \right)_{w}^{t} \) and \( \left( SH_{B} \right)_{w}^{t} \); their size is \( M \times N \), the same as the original image. The information entropy \( E \) is:

$$ E = - \sum\limits_{i = 0}^{L - 1} P_{i} \log_{2} P_{i} \tag{3} $$

where \( P_{i} \) is the probability of gray value \( i \) among the pixels of the sub-image and \( L \) is the number of gray levels. The average gradient of the high frequency sub-image is:

$$ \bar{G} = \frac{1}{(M - 1)(N - 1)} \sum\limits_{ii = 1}^{M - 1} \sum\limits_{jj = 1}^{N - 1} \sqrt{ \frac{1}{2} \left[ \left( \frac{\partial SH_{w}^{t} (x_{ii} ,y_{jj} )}{\partial x_{ii}} \right)^{2} + \left( \frac{\partial SH_{w}^{t} (x_{ii} ,y_{jj} )}{\partial y_{jj}} \right)^{2} \right] } \tag{4} $$

where \( SH_{w}^{t} (x_{ii} ,y_{jj} ) \) is the pixel at position \( (x_{ii} ,y_{jj} ) \) in the high frequency sub-image \( \left( SH_{A} \right)_{w}^{t} \) or \( \left( SH_{B} \right)_{w}^{t} \), with \( 0 < ii \le M \) and \( 0 < jj \le N \). The standard deviation of the high frequency sub-image is:

$$ \sigma = \sqrt{ \frac{1}{M \times N} \sum\limits_{ii = 1}^{M} \sum\limits_{jj = 1}^{N} \left( SH_{w}^{t} (x_{ii} ,y_{jj} ) - \bar{h} \right)^{2} } \tag{5} $$

where \( \bar{h} \) is the mean gray level of the high frequency sub-image.

Step 2. The information entropy \( E \), average gradient \( \bar{G} \) and standard deviation \( \sigma \) of the high frequency sub-images \( \left( SH_{A} \right)_{w}^{t} \) and \( \left( SH_{B} \right)_{w}^{t} \) are normalized, giving the normalized values \( E_{g} \), \( \bar{G}_{g} \) and \( \sigma_{g} \). The sub-image with the larger product of these three normalized values is chosen as the fused sub-image:

$$ \left( SH_{F} \right)_{w}^{t} = \begin{cases} \left( SH_{A} \right)_{w}^{t} , & (E_{g} )_{A} \times (\bar{G}_{g} )_{A} \times (\sigma_{g} )_{A} \ge (E_{g} )_{B} \times (\bar{G}_{g} )_{B} \times (\sigma_{g} )_{B} \\ \left( SH_{B} \right)_{w}^{t} , & (E_{g} )_{A} \times (\bar{G}_{g} )_{A} \times (\sigma_{g} )_{A} < (E_{g} )_{B} \times (\bar{G}_{g} )_{B} \times (\sigma_{g} )_{B} \end{cases} \tag{6} $$

Step 3. A nonlinear enhancement operation [18] is applied to the fused high frequency sub-images. Let \( mgray \) denote the maximum absolute value over all pixels of \( \left( SH_{F} \right)_{w}^{t} \). The enhanced high frequency sub-image \( \left( E\_SH_{F} \right)_{w}^{t} \) is then:

$$ \left( E\_SH_{F} \right)_{w}^{t} (ii,jj) = a \cdot mgray \cdot \left\{ sigm\left[ c\left( Sh_{w}^{t} (ii,jj) - b \right) \right] - sigm\left[ - c\left( Sh_{w}^{t} (ii,jj) + b \right) \right] \right\} \tag{7} $$

Here, \( b = 0.35 \) controls the threshold and \( c = 20 \) controls the enhancement rate; \( a = 1/(d_{1} - d_{2} ) \), where \( d_{1} = sigm(c(1 + b)) \), \( d_{2} = sigm( - c(1 - b)) \), \( sigm(x) = \frac{1}{1 + e^{ - x} } \) and \( Sh_{w}^{t} (ii,jj) = \left( SH_{F} \right)_{w}^{t} (ii,jj)/mgray \).
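Steps 2 and 3 can be sketched as follows. The paper states that the three indexes are normalized but not how, so this sketch assumes each index is divided by the sum of its values for \( A \) and \( B \); the scores are assumed to be precomputed (entropy, average gradient, standard deviation) triples, and all function names are hypothetical. The enhancement follows Eq. (7) with \( b = 0.35 \) and \( c = 20 \): coefficients are divided by \( mgray \), passed through the sigmoid gain, and scaled back by \( mgray \).

```python
import numpy as np


def _normalize_pair(va, vb):
    """Assumed normalization: divide both values by their sum (0 stays 0)."""
    s = va + vb
    return (va / s, vb / s) if s > 0 else (0.0, 0.0)


def select_subband(sh_a, sh_b, scores_a, scores_b):
    """Eq. (6): keep the sub-image whose normalized E * G * sigma product is larger.

    scores_a and scores_b are (entropy, average gradient, std) triples,
    e.g. computed with Eqs. (3)-(5). Ties go to A, as in Eq. (6).
    """
    prod_a, prod_b = 1.0, 1.0
    for va, vb in zip(scores_a, scores_b):
        na, nb = _normalize_pair(va, vb)
        prod_a *= na
        prod_b *= nb
    return sh_a if prod_a >= prod_b else sh_b


def _sigm(x):
    return 1.0 / (1.0 + np.exp(-x))


def enhance(sh_f, b=0.35, c=20.0):
    """Eq. (7): sigmoid-based nonlinear gain on a fused high frequency sub-image."""
    mgray = np.max(np.abs(sh_f))
    if mgray == 0:
        return np.zeros_like(sh_f, dtype=float)
    x = sh_f / mgray  # Sh in Eq. (7), within [-1, 1]
    a = 1.0 / (_sigm(c * (1 + b)) - _sigm(-c * (1 - b)))  # maps |x| = 1 to 1
    return a * mgray * (_sigm(c * (x - b)) - _sigm(-c * (x + b)))
```

Dividing both products in Eq. (6) by the same positive constants does not change which one is larger, so any per-index scaling shared by \( A \) and \( B \) gives the same selection as this assumed sum-normalization. The gain is odd-symmetric, leaves \( \pm mgray \) unchanged, suppresses coefficients well below \( b \cdot mgray \) and amplifies those above it.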

2.3 Fusion Algorithm of the Satellite Cloud Image

The registered source images are written as \( A \) and \( B \). The detailed steps of the proposed image fusion algorithm are as follows:

Step 1. The registered images \( A \) and \( B \) (of size \( M \times N \)) are decomposed by the shearlet transform with \( W \) decomposition layers and \( T \) directions (\( T = 2^{r} ,r \in Z^{*} \)), where \( Z^{*} \) denotes the set of positive integers. This yields the high frequency coefficients \( SH_{A} \) and \( SH_{B} \) and the low frequency coefficients \( SL_{A} \) and \( SL_{B} \).

Step 2. The low frequency sub-images are fused with the rule described in Sect. 2.1, giving the low frequency fusion coefficient \( TL_{F} \).

Step 3. The high frequency sub-images are fused with the rule described in Sect. 2.2, giving the high frequency fusion coefficients \( E\_SH_{F} \).

Step 4. The inverse shearlet transform is applied to the fused shearlet coefficients to obtain the final fused image \( F \).

3 Experimental Results and Discussion

Two groups of satellite cloud images captured by the Chinese meteorological satellite FY-2C are used to verify the proposed fusion algorithm, one of an eye typhoon and one of a non-eye typhoon: (1) the infrared channel 2 and water vapor channel cloud images of the No. 0513 typhoon “Talim”, obtained at 12:00 am on August 31, 2005; (2) the infrared channel 1 and water vapor channel cloud images of the No. 0713 typhoon “Wipha”, obtained at 6:00 am on September 16, 2007. To verify the efficiency of the proposed fusion algorithm, it is compared with five similar image fusion algorithms: the classical discrete orthogonal wavelet transform [19], the curvelet transform [10], NSCT [12], the tetrolet transform [13] and the shearlet transform [15]. The number of decomposition layers is two. For convenience in the figures and tables, the algorithms based on the classical discrete orthogonal wavelet, the curvelet transform, NSCT and the tetrolet transform are labeled “DWT”, “Curvelet”, “NSCT” and “Tetrolet”, respectively; the shearlet algorithm of Ref. [15] is labeled “Shearlet”; and the proposed algorithm is labeled “S_Lap”.

3.1 Experimental Results for the Multi-channel Satellite Cloud Image Fusion

Example 1 for Eye Typhoon Cloud Image Fusion

The first group of experimental images consists of the infrared channel 2 cloud image and the water vapor cloud image of typhoon “Talim”, obtained at 12 o’clock on August 31, 2005, shown in Fig. 1(a) and (b), respectively. Figure 1(c)–(h) shows the fused images obtained by DWT, Curvelet, NSCT, Tetrolet, Shearlet and S_Lap, respectively.

As Fig. 1 shows, the gray level values of the images fused by the curvelet transform (Fig. 1(d)) and by NSCT (Fig. 1(e)) are slightly higher, and the contrast between the typhoon eye and the surrounding clouds is low; these two images are close to the water vapor cloud image (Fig. 1(b)). In Fig. 1(f), some edge details of the image fused by the tetrolet transform are blurred by the blocking effect. The visual quality of the other fused images is similar.

Example 2 for Non-Eye Typhoon Cloud Image Fusion

The second group of experimental images consists of the infrared channel 1 cloud image and the water vapor channel cloud image of the No. 0713 typhoon “Wipha”, obtained at 6 o’clock on September 16, 2007, shown in Fig. 2(a) and (b), respectively. Figure 2(c)–(h) shows the fused images obtained by DWT, Curvelet, NSCT, Tetrolet, Shearlet and S_Lap, respectively.

This group of cloud images belongs to a non-eye typhoon. As Fig. 2 shows, the visual quality of all the fused images is very similar. The gray level values of the images fused by NSCT (Fig. 2(e)) and the curvelet transform (Fig. 2(d)) are slightly higher than those of the other methods, but the differences in detail among the images are small.

3.2 Evaluation Parameters for the Fusion Images

To evaluate the quality of the fused images objectively, the information entropy \( E \), average gradient \( \bar{G} \) and standard deviation \( \sigma \) are used; the larger these values, the better the quality of the fused image. The evaluation parameters of the fused images in Figs. 1 and 2 are listed in Tables 1 and 2, respectively.

Tables 1 and 2 show that the information entropy, average gradient and standard deviation of the images fused by the proposed algorithm are better than those of the other fusion algorithms. The images fused by the proposed algorithm therefore retain more information from the multi-channel cloud images and have good contrast, and the proposed algorithm highlights the details of the cloud images well. Overall, the images fused by the proposed algorithm have the best comprehensive quality.

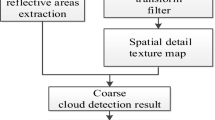

Typhoon Center Location Test based on the Fusion Cloud Images

To further verify the performance of the proposed image fusion algorithm, the images fused by the various algorithms are used to locate the center of the typhoon with the typhoon center location algorithm of [20]. To compare the accuracy of the center positions obtained from the various fused images, the typhoon center position given in the “Tropical Cyclone Yearbook”, compiled by the Shanghai Typhoon Institute of the China Meteorological Administration, is used as the reference center position. In addition, the single infrared channel satellite cloud image and the single water vapor channel satellite cloud image are also used to locate the typhoon center, because existing typhoon center location systems are based on a single infrared channel satellite cloud image or on time series images.

Example 1 for Eye Typhoon Center Location

The typhoon center location algorithm in [20] is used to locate the center position based on the fusion typhoon cloud images. The center location results based on different fusion images are shown in Fig. 3.

We can see from Fig. 3 that the center locations in Fig. 3(b)–(e) are far away from the center of the typhoon. The center location in Fig. 3(a) is close to the reference center position of the typhoon. The center locations based on the fusion images by the tetrolet transform in Fig. 3(f) and by the shearlet transform in Fig. 3(g) are very close to the reference center position. The center location based on the fusion image by the proposed algorithm in Fig. 3(h) is almost the same as the reference center position of the typhoon. The center location errors based on Fig. 3 are shown in Table 3.

Table 3 shows that the typhoon center location error based on the image fused by the proposed algorithm is 7.85 km. This error is smaller than those obtained with the five other fusion algorithms, the single infrared channel 2 cloud image and the single water vapor channel cloud image.
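The paper reports location errors in kilometres but does not state how they are computed. One common choice, assumed here rather than taken from the paper, is the great-circle (haversine) distance between the located center and the reference center from the yearbook, using a mean Earth radius of 6371 km. The function name is hypothetical and the sketch is only meant to make the error measure concrete.

```python
import math


def center_error_km(lat1, lon1, lat2, lon2, radius_km=6371.0):
    """Great-circle (haversine) distance in km between two (lat, lon) points in degrees.

    Assumption: the location error is the distance between the located
    center (lat1, lon1) and the reference center (lat2, lon2).
    """
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dphi = p2 - p1
    dlmb = math.radians(lon2 - lon1)
    h = math.sin(dphi / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dlmb / 2) ** 2
    return 2 * radius_km * math.asin(math.sqrt(h))
```

Near the latitudes where typhoons are tracked, one degree of latitude is roughly 111 km, so an error of a few kilometres corresponds to only a few hundredths of a degree.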

Example 2 for Non-Eye Typhoon Center Location

The typhoon center location algorithm of [20] is used to locate the center position based on the fused typhoon cloud images, and the center location results are shown in Fig. 4.

As Fig. 4 shows, the center locations based on the images fused by the tetrolet transform (Fig. 4(f)) and the shearlet transform (Fig. 4(g)) are far from the reference center position of the typhoon, whereas the center locations based on the other fused images are very close to it. In particular, the center locations based on the images fused by NSCT (Fig. 4(e)) and by the proposed algorithm (Fig. 4(h)) almost coincide with the reference center position. The center location errors based on Fig. 4 are listed in Table 4, which shows that the center location error based on the image fused by the proposed algorithm is 15.46 km. This error is smaller than those obtained with the five other fusion algorithms, the single infrared channel 1 cloud image and the single water vapor channel cloud image.

Computing Complexity of the Algorithm

To evaluate the computational cost of the proposed image fusion algorithm, the running times of all the image fusion algorithms above are compared. The algorithms are run in MATLAB R2009a on a Dell OptiPlex 780 desktop computer with an Intel® Core™ 2 Quad Q9400 processor (four cores, 2.66 GHz), 2 GB of memory (JinShiDun DDR3 1333 MHz) and Windows XP Professional 32-bit SP3 (DirectX 9.0c). The running times are measured on the fusion experiment for the eye typhoon group and are listed in Table 5.

Table 5 shows that DWT has the shortest running time. The running time of the proposed algorithm is shorter than those of the other multi-scale image fusion algorithms, so its computational cost is acceptable.

4 Conclusions

In this manuscript, we present an efficient shearlet-based image fusion algorithm to improve the accuracy of typhoon center location. Compared with the images fused by five similar image fusion algorithms (DWT, curvelet, NSCT, tetrolet transform and shearlet transform), the image fused by the proposed algorithm has the best overall visual quality and preserves the typhoon eye and the cloud details well. Using the image fused by the proposed algorithm improves the accuracy of center location for both eye and non-eye typhoons, outperforming center location based on single-channel satellite cloud images and on images fused by the other algorithms. Further work is needed to improve the fusion rule by incorporating meteorological knowledge and practical forecasting experience. In addition, the work of this paper can be used to retrieve the typhoon wind field and to improve the prediction accuracy of typhoon intensity.

References

[1] Dvorak, V.F.: Tropical cyclone intensity analysis and forecasting from satellite imagery. Mon. Weather Rev. 103(5), 420–430 (1975)

[2] Li, H., Huang, X.-Y., Qin, D.-Y.: Research the artificial intelligent algorithm for positioning of eyed typhoon with high resolution satellite image. In: Proceedings of the 2012 5th International Joint Conference on Computational Sciences and Optimization, pp. 889–891 (2012)

[3] Li, Y., Chen, X., Fei, S.-M., Mao, K.-F., Zhou, K.: The study of a linear optimal location the typhoon center automatic from IR satellite cloud image. In: Proceedings of SPIE - The International Society for Optical Engineering, vol. 8193 (2011)

[4] Yang, J., Wang, H.: Positioning tropical cyclone center in a single satellite image using vector field analysis. In: Sun, Z., Deng, Z. (eds.) Proceedings of 2013 Chinese Intelligent Automation Conference. Lecture Notes in Electrical Engineering, vol. 256, pp. 37–44. Springer, Heidelberg (2013). https://doi.org/10.1007/978-3-642-38466-0_5

[5] Fan, Z.Y.: Application of satellite SSM/I data in the typhoon center location and maximum wind speed estimation, pp. 1–2. National Central University in Taiwan, Taoyuan (2004)

[6] Wei, K., Jing, Z., Li, Y., Liu, S.: Spiral band model for locating tropical cyclone centers. Pattern Recogn. Lett. 32(6), 761–770 (2011)

[7] Yang, H.-Q., Yang, Y.-M.: Progress in objective position methods of tropical cyclone center using satellite remote sensing. J. Trop. Oceanogr. 31(2), 15–27 (2012)

[8] Yang, W., Wang, J., Guo, J.: A novel algorithm for satellite images fusion based on compressed sensing and PCA. In: Mathematical Problems in Engineering, pp. 1–10 (2013)

[9] Miao, Q.G., Shi, C., Xu, P.F., Yang, M., Shi, Y.B.: Multi-focus image fusion algorithm based on shearlets. Chin. Opt. Lett. 9(4), 1–5 (2011)

[10] Li, S., Yang, B.: Multifocus image fusion by combining curvelet and wavelet transform. Pattern Recogn. Lett. 29(9), 1295–1301 (2008)

[11] Miao, Q.G., Wang, B.S.: A novel image fusion method using contourlet transform. In: 2006 International Conference on Communications, Circuits and Systems Proceedings, vol. 1, pp. 548–552 (2006)

[12] Lu, J., Zhang, C.-J., Hu, M., Chen, H.: NonSubsampled contourlet transform combined with energy entropy for remote sensing image fusion. In: 2009 International Conference on Artificial Intelligence and Computation Intelligence, vol. 3, pp. 530–534 (2009)

[13] Yan, X., Han, H.-L., Liu, S.-Q., Yang, T.-W., Yang, Z.-J., Xue, L.-Z.: Image fusion based on tetrolet transform. J. Optoelectron. Laser 24(8), 1629–1633 (2013)

[14] Easley, G., Labate, D., Lim, W.-Q.: Sparse directional image representations using the discrete shearlet transform. Appl. Comput. Harmonic Anal. 25(1), 25–46 (2008)

[15] Miao, Q., Shi, C., Xu, P., Yang, M., Shi, Y.: A novel algorithm of image fusion using shearlets. Opt. Commun. 284(6), 1540–1547 (2011)

[16] Liu, X., Zhou, Y., Wang, J.: Image fusion based on shearlet transform and regional features. Int. J. Electron. Commun. 68(6), 471–477 (2014)

[17] Smith, M.I., Heather, J.P.: Review of image fusion technology in 2005. In: Thermosense XXVII, vol. 5782, pp. 29–45 (2005)

[18] Zhang, C.J., Wang, X.D., Duanmu, C.J.: Adaptive typhoon cloud image enhancement using genetic algorithm and non-linear gain operation in undecimated wavelet domain. Eng. Appl. Artif. Intell. 23(1), 61–73 (2010)

[19] Lewis, J.J., O’Callaghan, R.J., Nikolov, S.G., Bull, D.R., Canagarajah, N.: Pixel-and region-based image fusion with complex wavelets. Inf. Fusion 8(2), 119–130 (2007)

[20] Zhang, C.J., Chen, Y., Lu, J.: Typhoon center location algorithm based on fractal feature and gradient of infrared satellite cloud image. In: International Conference on Optoelectronic Technology and Application, pp. 92990F-1–92990F-6 (2014)

Acknowledgments

The part of work in this paper is supported by Natural Science Foundation of China (Nos. 41575046, 41475059), Project of Commonweal Technique and Application Research of Zhejiang Province of China (No. 2016C33010).

Copyright information

© 2017 Springer International Publishing AG

About this paper

Cite this paper

Zhang, C., Chen, Y., Ma, L. (2017). Multi-channel Satellite Cloud Image Fusion in the Shearlet Transform Domain and Its Influence on Typhoon Center Location. In: Zhao, Y., Kong, X., Taubman, D. (eds) Image and Graphics. ICIG 2017. Lecture Notes in Computer Science(), vol 10667. Springer, Cham. https://doi.org/10.1007/978-3-319-71589-6_38

DOI: https://doi.org/10.1007/978-3-319-71589-6_38

Publisher Name: Springer, Cham

Print ISBN: 978-3-319-71588-9

Online ISBN: 978-3-319-71589-6

eBook Packages: Computer Science, Computer Science (R0)